storm集成kafka的应用,从kafka读取,写入kafka

storm集成kafka的应用,从kafka读取,写入kafka

by 小闪电

0前言

storm的主要作用是进行流式的实时计算,对于一直产生的数据流处理是非常迅速的,然而大部分数据并不是均匀的数据流,而是时而多时而少。对于这种情况下进行批处理是不合适的,因此引入了kafka作为消息队列,与storm完美配合,这样可以实现稳定的流式计算。下面是一个简单的示例实现从kafka读取数据,并写入到kafka,以此来掌握storm与kafka之间的交互。

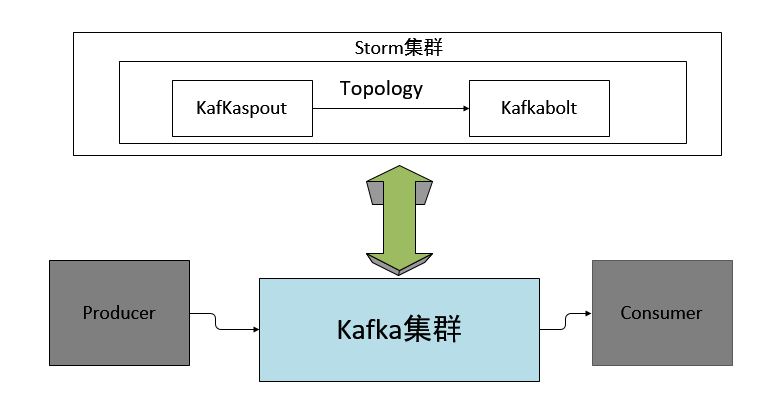

1程序框图

实质上就是storm的kafkaspout作为一个consumer,kafkabolt作为一个producer。

框图如下:

2 pom.xml

建立一个maven项目,将storm,kafka,zookeeper的外部依赖叠加起来。

- <?xml version="1.0" encoding="UTF-8"?>

- <project xmlns="http://maven.apache.org/POM/4.0.0"

- xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

- xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

- <modelVersion>4.0.0</modelVersion>

- <groupId>org.tony</groupId>

- <artifactId>storm-example</artifactId>

- <version>1.0-SNAPSHOT</version>

- <dependencies>

- <dependency>

- <groupId>org.apache.storm</groupId>

- <artifactId>storm-core</artifactId>

- <version>0.9.3</version>

- <!--<scope>provided</scope>-->

- </dependency>

- <dependency>

- <groupId>org.apache.storm</groupId>

- <artifactId>storm-kafka</artifactId>

- <version>0.9.3</version>

- <!--<scope>provided</scope>-->

- </dependency>

- <dependency>

- <groupId>com.google.protobuf</groupId>

- <artifactId>protobuf-java</artifactId>

- <version>2.5.0</version>

- </dependency>

- <!-- storm-kafka模块需要的依赖 -->

- <dependency>

- <groupId>org.apache.curator</groupId>

- <artifactId>curator-framework</artifactId>

- <version>2.5.0</version>

- <exclusions>

- <exclusion>

- <groupId>log4j</groupId>

- <artifactId>log4j</artifactId>

- </exclusion>

- <exclusion>

- <groupId>org.slf4j</groupId>

- <artifactId>slf4j-log4j12</artifactId>

- </exclusion>

- </exclusions>

- </dependency>

- <!-- kafka -->

- <dependency>

- <groupId>org.apache.kafka</groupId>

- <artifactId>kafka_2.10</artifactId>

- <version>0.8.1.1</version>

- <exclusions>

- <exclusion>

- <groupId>org.apache.zookeeper</groupId>

- <artifactId>zookeeper</artifactId>

- </exclusion>

- <exclusion>

- <groupId>log4j</groupId>

- <artifactId>log4j</artifactId>

- </exclusion>

- </exclusions>

- </dependency>

- </dependencies>

- <repositories>

- <repository>

- <id>central</id>

- <url>http://repo1.maven.org/maven2/</url>

- <snapshots>

- <enabled>false</enabled>

- </snapshots>

- <releases>

- <enabled>true</enabled>

- </releases>

- </repository>

- <repository>

- <id>clojars</id>

- <url>https://clojars.org/repo/</url>

- <snapshots>

- <enabled>true</enabled>

- </snapshots>

- <releases>

- <enabled>true</enabled>

- </releases>

- </repository>

- <repository>

- <id>scala-tools</id>

- <url>http://scala-tools.org/repo-releases</url>

- <snapshots>

- <enabled>true</enabled>

- </snapshots>

- <releases>

- <enabled>true</enabled>

- </releases>

- </repository>

- <repository>

- <id>conjars</id>

- <url>http://conjars.org/repo/</url>

- <snapshots>

- <enabled>true</enabled>

- </snapshots>

- <releases>

- <enabled>true</enabled>

- </releases>

- </repository>

- </repositories>

- <build>

- <plugins>

- <plugin>

- <groupId>org.apache.maven.plugins</groupId>

- <artifactId>maven-compiler-plugin</artifactId>

- <version>3.1</version>

- <configuration>

- <source>1.6</source>

- <target>1.6</target>

- <encoding>UTF-8</encoding>

- <showDeprecation>true</showDeprecation>

- <showWarnings>true</showWarnings>

- </configuration>

- </plugin>

- <plugin>

- <artifactId>maven-assembly-plugin</artifactId>

- <configuration>

- <descriptorRefs>

- <descriptorRef>jar-with-dependencies</descriptorRef>

- </descriptorRefs>

- <archive>

- <manifest>

- <mainClass></mainClass>

- </manifest>

- </archive>

- </configuration>

- <executions>

- <execution>

- <id>make-assembly</id>

- <phase>package</phase>

- <goals>

- <goal>single</goal>

- </goals>

- </execution>

- </executions>

- </plugin>

- </plugins>

- </build>

- </project>

3 kafkaspout的消费逻辑,修改MessageScheme类,其中定义了俩个字段,key和message,方便分发到kafkabolt。代码如下

- package com.tony.storm_kafka.util;

- import java.io.UnsupportedEncodingException;

- import java.util.List;

- import backtype.storm.spout.Scheme;

- import backtype.storm.tuple.Fields;

- import backtype.storm.tuple.Values;

- /*

- *author: hi

- *public class MessageScheme{ }

- **/

- public class MessageScheme implements Scheme {

- @Override

- public List<Object> deserialize(byte[] arg0) {

- try{

- String msg = new String(arg0, "UTF-8");

- String msg_0 = "hello";

- return new Values(msg_0,msg);

- }

- catch (UnsupportedEncodingException e) {

- // TODO: handle exception

- e.printStackTrace();

- }

- return null;

- }

- @Override

- public Fields getOutputFields() {

- return new Fields("key","message");

- }

- }

4.编写topology主类,配置kafka,提交topology到storm的代码,其中kafkaspout的zkhost有动态和静态俩种配置,尽量使用动态自寻的方式。

- package org.tony.storm_kafka.common;

- import backtype.storm.Config;

- import backtype.storm.LocalCluster;

- import backtype.storm.StormSubmitter;

- import backtype.storm.generated.AlreadyAliveException;

- import backtype.storm.generated.InvalidTopologyException;

- import backtype.storm.generated.StormTopology;

- import backtype.storm.spout.SchemeAsMultiScheme;

- import backtype.storm.topology.BasicOutputCollector;

- import backtype.storm.topology.OutputFieldsDeclarer;

- import backtype.storm.topology.TopologyBuilder;

- import backtype.storm.topology.base.BaseBasicBolt;

- import backtype.storm.tuple.Tuple;

- import storm.kafka.BrokerHosts;

- import storm.kafka.KafkaSpout;

- import storm.kafka.SpoutConfig;

- import storm.kafka.ZkHosts;

- import storm.kafka.trident.TridentKafkaState;

- import java.util.Arrays;

- import java.util.Properties;

- import org.tony.storm_kafka.bolt.ToKafkaBolt;

- import com.tony.storm_kafka.util.MessageScheme;

- public class KafkaBoltTestTopology {

- //配置kafka spout参数

- public static String kafka_zk_port = null;

- public static String topic = null;

- public static String kafka_zk_rootpath = null;

- public static BrokerHosts brokerHosts;

- public static String spout_name = "spout";

- public static String kafka_consume_from_start = null;

- public static class PrinterBolt extends BaseBasicBolt {

- /**

- *

- */

- private static final long serialVersionUID = 9114512339402566580L;

- // @Override

- public void declareOutputFields(OutputFieldsDeclarer declarer) {

- }

- // @Override

- public void execute(Tuple tuple, BasicOutputCollector collector) {

- System.out.println("-----"+(tuple.getValue(1)).toString());

- }

- }

- public StormTopology buildTopology(){

- //kafkaspout 配置文件

- kafka_consume_from_start = "true";

- kafka_zk_rootpath = "/kafka08";

- String spout_id = spout_name;

- brokerHosts = new ZkHosts("192.168.201.190:2191,192.168.201.191:2191,192.168.201.192:2191", kafka_zk_rootpath+"/brokers");

- kafka_zk_port = "2191";

- SpoutConfig spoutConf = new SpoutConfig(brokerHosts, "testfromkafka", kafka_zk_rootpath, spout_id);

- spoutConf.scheme = new SchemeAsMultiScheme(new MessageScheme());

- spoutConf.zkPort = Integer.parseInt(kafka_zk_port);

- spoutConf.zkRoot = kafka_zk_rootpath;

- spoutConf.zkServers = Arrays.asList(new String[] {"10.9.201.190", "10.9.201.191", "10.9.201.192"});

- //是否從kafka第一條數據開始讀取

- if (kafka_consume_from_start == null) {

- kafka_consume_from_start = "false";

- }

- boolean kafka_consume_frome_start_b = Boolean.valueOf(kafka_consume_from_start);

- if (kafka_consume_frome_start_b != true && kafka_consume_frome_start_b != false) {

- System.out.println("kafka_comsume_from_start must be true or false!");

- }

- System.out.println("kafka_consume_from_start: " + kafka_consume_frome_start_b);

- spoutConf.forceFromStart=kafka_consume_frome_start_b;

- TopologyBuilder builder = new TopologyBuilder();

- builder.setSpout("spout", new KafkaSpout(spoutConf));

- builder.setBolt("forwardToKafka", new ToKafkaBolt<String, String>()).shuffleGrouping("spout");

- return builder.createTopology();

- }

- public static void main(String[] args) {

- KafkaBoltTestTopology kafkaBoltTestTopology = new KafkaBoltTestTopology();

- StormTopology stormTopology = kafkaBoltTestTopology.buildTopology();

- Config conf = new Config();

- //设置kafka producer的配置

- Properties props = new Properties();

- props.put("metadata.broker.list", "192.10.43.150:9092");

- props.put("producer.type","async");

- props.put("request.required.acks", "0"); // 0 ,-1 ,1

- props.put("serializer.class", "kafka.serializer.StringEncoder");

- conf.put(TridentKafkaState.KAFKA_BROKER_PROPERTIES, props);

- conf.put("topic","testTokafka");

- if(args.length > 0){

- // cluster submit.

- try {

- StormSubmitter.submitTopology("kafkaboltTest", conf, stormTopology);

- } catch (AlreadyAliveException e) {

- e.printStackTrace();

- } catch (InvalidTopologyException e) {

- e.printStackTrace();

- }

- }else{

- new LocalCluster().submitTopology("kafkaboltTest", conf, stormTopology);

- }

- }

- }

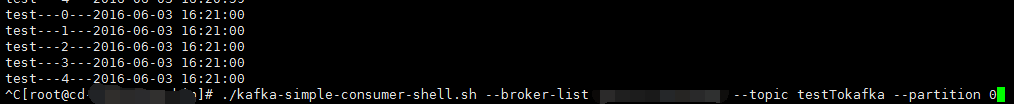

5 示例结果,testfromkafka topic里面的数据可以通过另外写个类来进行持续的生产。

topic testfromkafka的数据

topic testTokafka的数据

6 补充ToKfakaBolt,集成基础的Bolt类,主要改写Excute,同时加上Ack机制。

- import java.util.Map;

- import java.util.Properties;

- import kafka.javaapi.producer.Producer;

- import kafka.producer.KeyedMessage;

- import kafka.producer.ProducerConfig;

- import org.slf4j.Logger;

- import org.slf4j.LoggerFactory;

- import storm.kafka.bolt.mapper.FieldNameBasedTupleToKafkaMapper;

- import storm.kafka.bolt.mapper.TupleToKafkaMapper;

- import storm.kafka.bolt.selector.KafkaTopicSelector;

- import storm.kafka.bolt.selector.DefaultTopicSelector;

- import backtype.storm.task.OutputCollector;

- import backtype.storm.task.TopologyContext;

- import backtype.storm.topology.OutputFieldsDeclarer;

- import backtype.storm.topology.base.BaseRichBolt;

- import backtype.storm.tuple.Tuple;

- /*

- *author: yue

- *public class ToKafkaBolt{ }

- **/

- public class ToKafkaBolt<K,V> extends BaseRichBolt{

- private static final Logger Log = LoggerFactory.getLogger(ToKafkaBolt.class);

- public static final String TOPIC = "topic";

- public static final String KAFKA_BROKER_PROPERTIES = "kafka.broker.properties";

- private Producer<K, V> producer;

- private OutputCollector collector;

- private TupleToKafkaMapper<K, V> Mapper;

- private KafkaTopicSelector topicselector;

- public ToKafkaBolt<K,V> withTupleToKafkaMapper(TupleToKafkaMapper<K, V> mapper){

- this.Mapper = mapper;

- return this;

- }

- public ToKafkaBolt<K, V> withTopicSelector(KafkaTopicSelector topicSelector){

- this.topicselector = topicSelector;

- return this;

- }

- @Override

- public void prepare(Map stormConf, TopologyContext context,

- OutputCollector collector) {

- if (Mapper == null) {

- this.Mapper = new FieldNameBasedTupleToKafkaMapper<K, V>();

- }

- if (topicselector == null) {

- this.topicselector = new DefaultTopicSelector((String)stormConf.get(TOPIC));

- }

- Map configMap = (Map) stormConf.get(KAFKA_BROKER_PROPERTIES);

- Properties properties = new Properties();

- properties.putAll(configMap);

- ProducerConfig config = new ProducerConfig(properties);

- producer = new Producer<K, V>(config);

- this.collector = collector;

- }

- @Override

- public void execute(Tuple input) {

- // String iString = input.getString(0);

- K key = null;

- V message = null;

- String topic = null;

- try {

- key = Mapper.getKeyFromTuple(input);

- message = Mapper.getMessageFromTuple(input);

- topic = topicselector.getTopic(input);

- if (topic != null) {

- producer.send(new KeyedMessage<K, V>(topic,message));

- }else {

- Log.warn("skipping key = "+key+ ",topic selector returned null.");

- }

- } catch ( Exception e) {

- // TODO: handle exception

- Log.error("Could not send message with key = " + key

- + " and value = " + message + " to topic = " + topic, e);

- }finally{

- collector.ack(input);

- }

- }

- @Override

- public void declareOutputFields(OutputFieldsDeclarer declarer) {

- }

- }

作 者:小闪电

出处:http://www.cnblogs.com/yueyanyu/

本文版权归作者和博客园共有,欢迎转载、交流,但未经作者同意必须保留此段声明,且在文章页面明显位置给出原文链接。如果觉得本文对您有益,欢迎点赞、欢迎探讨。本博客来源于互联网的资源,若侵犯到您的权利,请联系博主予以删除。

storm集成kafka的应用,从kafka读取,写入kafka的更多相关文章

- Storm集成Kafka应用的开发

我们知道storm的作用主要是进行流式计算,对于源源不断的均匀数据流流入处理是非常有效的,而现实生活中大部分场景并不是均匀的数据流,而是时而多时而少的数据流入,这种情况下显然用批量处理是不合适的,如果 ...

- Storm集成Kafka编程模型

原创文章,转载请注明: 转载自http://www.cnblogs.com/tovin/p/3974417.html 本文主要介绍如何在Storm编程实现与Kafka的集成 一.实现模型 数据流程: ...

- 5、Storm集成Kafka

1.pom文件依赖 <!--storm相关jar --> <dependency> <groupId>org.apache.storm</groupId> ...

- Flume 读取RabbitMq消息队列消息,并将消息写入kafka

首先是关于flume的基础介绍 组件名称 功能介绍 Agent代理 使用JVM 运行Flume.每台机器运行一个agent,但是可以在一个agent中包含多个sources和sinks. Client ...

- Spark(二十一)【SparkSQL读取Kudu,写入Kafka】

目录 SparkSQL读取Kudu,写出到Kafka 1. pom.xml 依赖 2.将KafkaProducer利用lazy val的方式进行包装, 创建KafkaSink 3.利用广播变量,将Ka ...

- Springboot集成mybatis(mysql),mail,mongodb,cassandra,scheduler,redis,kafka,shiro,websocket

https://blog.csdn.net/a123demi/article/details/78234023 : Springboot集成mybatis(mysql),mail,mongodb,c ...

- Kafka设计解析(十八)Kafka与Flink集成

转载自 huxihx,原文链接 Kafka与Flink集成 Apache Flink是新一代的分布式流式数据处理框架,它统一的处理引擎既可以处理批数据(batch data)也可以处理流式数据(str ...

- spark读取 kafka nginx网站日志消息 并写入HDFS中(转)

原文链接:spark读取 kafka nginx网站日志消息 并写入HDFS中 spark 版本为1.0 kafka 版本为0.8 首先来看看kafka的架构图 详细了解请参考官方 我这边有三台机器用 ...

- Mysql增量写入Hdfs(一) --将Mysql数据写入Kafka Topic

一. 概述 在大数据的静态数据处理中,目前普遍采用的是用Spark+Hdfs(Hive/Hbase)的技术架构来对数据进行处理. 但有时候有其他的需求,需要从其他不同数据源不间断得采集数据,然后存储到 ...

随机推荐

- Matlab forward Euler demo

% forward Euler demo % take two steps in the solution of % dy/dt = y, y(0) = 1 % exact solution is y ...

- Web应用架构入门之11个基本要素

译者: 读完这篇博客,你就可以回答一个经典的面试题:当你访问Google时,到底发生了什么? 原文:Web Architecture 101 译者:Fundebug 为了保证可读性,本文采用意译而非直 ...

- jQuery中是事件绑定方式--on、bind、live、delegate

概述:jQuery是我们最常用的js库,对于事件的绑定也是有很多种,on.one.live.bind.delegate等等,接下来我们逐一来进行讲解. 本片文章中事件所带的为版本号,例:v1.7+为1 ...

- 微信小程序地图报错——ret is not defined

刚刚在使用微信的map做地图时候 发现如下报错: 后来找了一会发现错误为经纬度写反了导致经纬度超出了范围 正确取值范围: latitude 纬度 浮点数,范围 -90 ~ 90 longitud ...

- mysql 之库, 表的简易操作

一. 库的操作 1.创建数据库 创建数据库: create database 库名 charset utf8; charset uft8 可选项 1.2 数据库命名规范: 可以由字母.数字.下划 ...

- SWFUpload多文件上传使用指南

SWFUpload是一个flash和js相结合而成的文件上传插件,其功能非常强大.以前在项目中用过几次,但它的配置参数太多了,用过后就忘记怎么用了,到以后要用时又得到官网上看它的文档,真是太烦了.所以 ...

- EasyUI tabs指定要显示的tab

<div id="DivBox" class="easyui-tabs" style="width: 100%; height: 100%;& ...

- jQ 移动端返回顶部代码整理

//返回顶部 $('#btn-scroll').on('touchend',function(){ var T = $(window).scrollTop(); var t = setInterval ...

- Django admin 的模仿流程

- 使用hint优化Oracle的运行计划 以及 SQL Tune Advisor的使用

背景: 某表忽然出现查询很缓慢的情况.cost 100+ 秒以上:严重影响生产. 原SQL: explain plan for select * from ( select ID id,RET_NO ...