Review: backpropagation

The goal of backpropagation is to compute the partial derivatives ∂C/∂w and ∂C/∂b of the cost function C with respect to any weight w or bias b in the network.

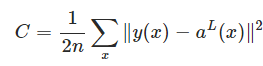

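We use the quadratic cost function C = (1/2n) Σ_x ‖y(x) − a^L(x)‖², where n is the total number of training examples, the sum runs over the individual training inputs x, y(x) is the corresponding desired output, L denotes the number of layers in the network, and a^L(x) is the vector of activations output from the network when x is input.

Backpropagation needs two assumptions about this cost function: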

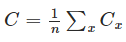

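1: The first assumption we need is that the cost function can be written as an average C = (1/n) Σ_x C_x over cost functions C_x for individual training examples x.

This is the case for the quadratic cost function, where the cost for a single training example is C_x = ½‖y − a^L‖².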
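The first assumption can be made concrete in a few lines of Python. This is a minimal sketch, assuming each training example has already been run through the network and is given as a pair (a, y) of output activations a = a^L(x) and desired output y, both plain lists of floats; the helper names `example_cost` and `total_cost` are hypothetical. The overall cost is simply the mean of the per-example costs C_x, and each C_x depends only on the output activations (y is fixed).

```python
def example_cost(a, y):
    """Per-example quadratic cost C_x = 0.5 * ||y - a||^2."""
    return 0.5 * sum((yj - aj) ** 2 for aj, yj in zip(a, y))


def total_cost(examples):
    """Overall cost C = (1/n) * sum_x C_x, the average of the per-example costs."""
    return sum(example_cost(a, y) for a, y in examples) / len(examples)


# Two hypothetical examples: (output activations a^L(x), desired output y(x)).
examples = [([0.8, 0.2], [1.0, 0.0]), ([0.4, 0.6], [0.0, 1.0])]
print(total_cost(examples))
```

Here the first example contributes C_x = 0.04 and the second 0.16, so C is their mean, 0.1 (up to floating-point rounding).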

The reason we need this assumption is that what backpropagation actually lets us do is compute the partial derivatives ∂C_x/∂w and ∂C_x/∂b for a single training example. We then recover ∂C/∂w and ∂C/∂b by averaging over training examples. In fact, with this assumption in mind, we'll suppose the training example x has been fixed, and drop the x subscript, writing the cost C_x as C. We'll eventually put the x back in, but for now it's a notational nuisance that is better left implicit.
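Recovering ∂C/∂w and ∂C/∂b by averaging per-example derivatives can be checked numerically. This minimal Python sketch deliberately uses a simple, hypothetical model instead of a full network so the per-example derivatives can be written by hand: a single linear neuron a = w·x + b with scalar input and per-example cost C_x = ½(y − a)², so ∂C_x/∂w = (a − y)x and ∂C_x/∂b = a − y. The averaged per-example gradients are compared against central finite differences of the full cost C.

```python
def per_example_grads(w, b, x, y):
    """(dC_x/dw, dC_x/db) for C_x = 0.5*(y - a)^2 with a linear neuron a = w*x + b."""
    a = w * x + b
    return (a - y) * x, (a - y)


def averaged_grads(w, b, data):
    """Recover dC/dw and dC/db by averaging the per-example gradients."""
    grads = [per_example_grads(w, b, x, y) for x, y in data]
    n = len(grads)
    return sum(g[0] for g in grads) / n, sum(g[1] for g in grads) / n


def cost(w, b, data):
    """Full cost C = (1/n) * sum_x C_x."""
    return sum(0.5 * (y - (w * x + b)) ** 2 for x, y in data) / len(data)


data = [(1.0, 2.0), (2.0, 3.0), (-1.0, 0.5)]  # hypothetical (x, y) pairs
w, b, eps = 0.3, -0.1, 1e-6
dw, db = averaged_grads(w, b, data)
# Central finite differences on the full cost C, for comparison.
dw_fd = (cost(w + eps, b, data) - cost(w - eps, b, data)) / (2 * eps)
db_fd = (cost(w, b + eps, data) - cost(w, b - eps, data)) / (2 * eps)
print(dw, dw_fd)
print(db, db_fd)
```

The two columns agree to within finite-difference error, which is exactly what the averaging assumption promises.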

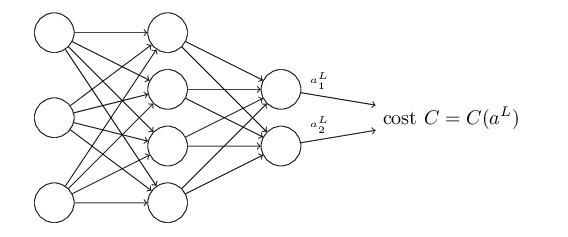

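2: The second assumption is that the cost can be written as a function of the outputs from the neural network, C = C(a^L). The quadratic cost satisfies this, since for a single training example C = ½‖y − a^L‖² = ½ Σ_j (y_j − a^L_j)², and the desired output y is a fixed parameter rather than something the network's weights and biases can change.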

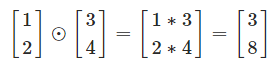

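The Hadamard product: if s and t are two vectors of the same dimension, s ⊙ t denotes their elementwise product, whose components are

$$(s \odot t)_j = s_j t_j$$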
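In code, the Hadamard product is just an elementwise multiply. A minimal Python sketch, assuming vectors are plain lists (with NumPy arrays, `s * t` already computes this); `hadamard` is a hypothetical helper name.

```python
def hadamard(s, t):
    """Elementwise (Hadamard) product: (s ⊙ t)_j = s_j * t_j."""
    if len(s) != len(t):
        raise ValueError("s and t must have the same dimension")
    return [sj * tj for sj, tj in zip(s, t)]


print(hadamard([1, 2], [3, 4]))  # [3, 8]
```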

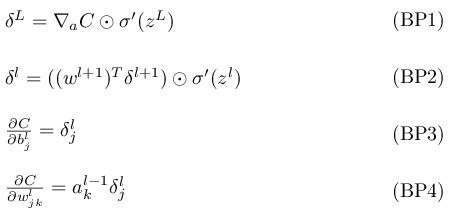

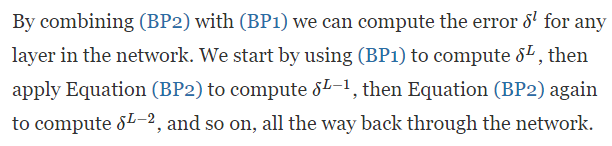

The four fundamental equations behind backpropagation

BP1

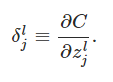

δ^l_j ≡ ∂C/∂z^l_j: the error in the jth neuron in the lth layer, where z^l_j is that neuron's weighted input.

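You might wonder why the demon is changing the weighted input z^l_j. (In this thought experiment, a demon sits at the jth neuron in layer l and adds a small change Δz^l_j to the neuron's weighted input, so that the neuron outputs σ(z^l_j + Δz^l_j) instead of σ(z^l_j) and the overall cost changes by about (∂C/∂z^l_j)Δz^l_j.) Surely it'd be more natural to imagine the demon changing the output activation a^l_j, with the result that we'd be using ∂C/∂a^l_j as our measure of error. In fact, if you do this, things work out quite similarly to the discussion below, but it turns out to make the presentation of backpropagation a little more algebraically complicated. So we'll stick with δ^l_j = ∂C/∂z^l_j as our measure of error.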

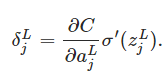

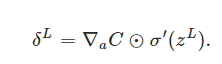

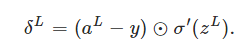

An equation for the error in the output layer, δ^L: the components of δ^L are given by

$$\delta^L_j = \frac{\partial C}{\partial a^L_j}\,\sigma'(z^L_j)$$

It's easy to rewrite the equation in a matrix-based form, as

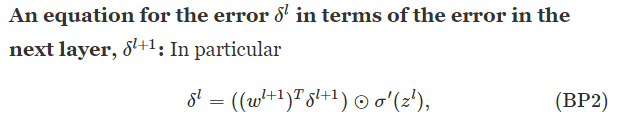
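In matrix form BP1 reads δ^L = ∇_a C ⊙ σ'(z^L), and for the quadratic cost ∂C/∂a^L_j = a^L_j − y_j, so ∇_a C = (a^L − y). A minimal Python sketch, assuming sigmoid activations σ(z) = 1/(1 + e^(−z)) (so σ'(z) = σ(z)(1 − σ(z))) and vectors as plain lists; `output_error` is a hypothetical helper name.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    """Derivative of the sigmoid: sigma'(z) = sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)


def output_error(z_L, y):
    """BP1 for the quadratic cost: delta^L = (a^L - y) ⊙ sigma'(z^L), with a^L = sigma(z^L)."""
    return [(sigmoid(zj) - yj) * sigmoid_prime(zj) for zj, yj in zip(z_L, y)]


print(output_error([0.0, 0.0], [1.0, 0.0]))  # [-0.125, 0.125]
```

At z = 0 the sigmoid outputs 0.5 with slope 0.25, so an output that should be 1 gets error (0.5 − 1)·0.25 = −0.125.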

BP2

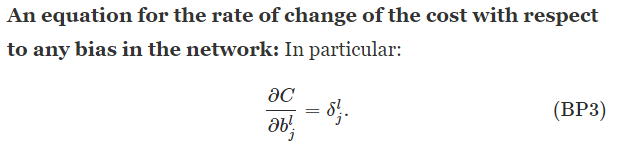

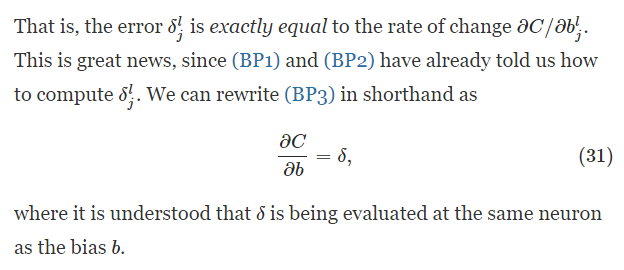
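BP2 gives the error in layer l in terms of the error in the next layer: δ^l = ((w^(l+1))^T δ^(l+1)) ⊙ σ'(z^l). Applying the transposed weight matrix moves the error backward through the weights, and the Hadamard product with σ'(z^l) moves it back through the activation function. A minimal Python sketch, assuming sigmoid activations, plain lists, and a weight layout where w_next[j][k] connects neuron k in layer l to neuron j in layer l+1; `backprop_error` is a hypothetical helper name.

```python
import math


def sigmoid_prime(z):
    """sigma'(z) = sigma(z) * (1 - sigma(z)) for the sigmoid."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def backprop_error(w_next, delta_next, z):
    """BP2: delta^l = ((w^{l+1})^T delta^{l+1}) ⊙ sigma'(z^l).

    w_next[j][k] is the weight from neuron k in layer l to neuron j in layer l+1.
    """
    # (w^T delta)_k = sum_j w_jk * delta_j: pass the error back through the weights.
    wt_delta = [sum(w_next[j][k] * delta_next[j] for j in range(len(delta_next)))
                for k in range(len(z))]
    # Hadamard product with sigma'(z^l): pass it back through the activation function.
    return [s * sigmoid_prime(zk) for s, zk in zip(wt_delta, z)]


print(backprop_error([[1.0, 2.0]], [0.5], [0.0, 0.0]))  # [0.125, 0.25]
```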

BP3

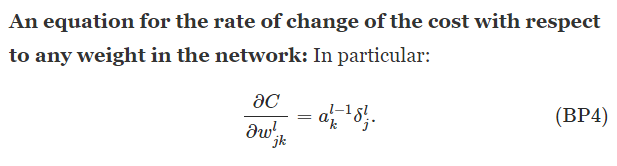

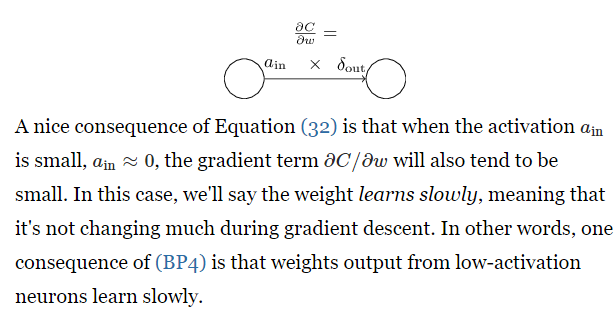
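BP3 says ∂C/∂b^l_j = δ^l_j. The reason is that z^l_j = Σ_k w^l_jk a^(l−1)_k + b^l_j, so nudging the bias b^l_j by ε nudges the weighted input z^l_j by exactly ε. The minimal Python sketch below checks this numerically for one hypothetical sigmoid output neuron with quadratic cost: δ computed from BP1 is compared with a central finite difference of the cost with respect to the bias. The weights, bias, inputs, and target are made-up values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cost(w, b, a_prev, y):
    """C = 0.5 * (y - sigma(z))^2 for one output neuron with z = w·a_prev + b."""
    z = sum(wk * ak for wk, ak in zip(w, a_prev)) + b
    return 0.5 * (y - sigmoid(z)) ** 2


w, b, a_prev, y = [0.4, -0.7], 0.1, [1.0, 0.5], 1.0  # hypothetical values
z = sum(wk * ak for wk, ak in zip(w, a_prev)) + b
delta = (sigmoid(z) - y) * sigmoid(z) * (1.0 - sigmoid(z))  # BP1 for this neuron

eps = 1e-6
dC_db = (cost(w, b + eps, a_prev, y) - cost(w, b - eps, a_prev, y)) / (2 * eps)
print(delta, dC_db)  # BP3: the two agree up to finite-difference error
```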

BP4

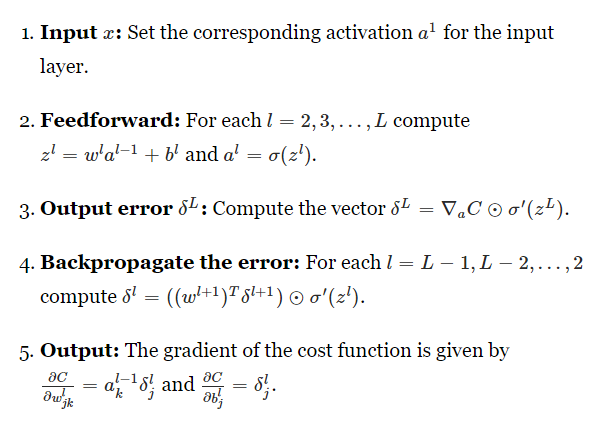

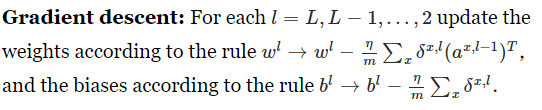

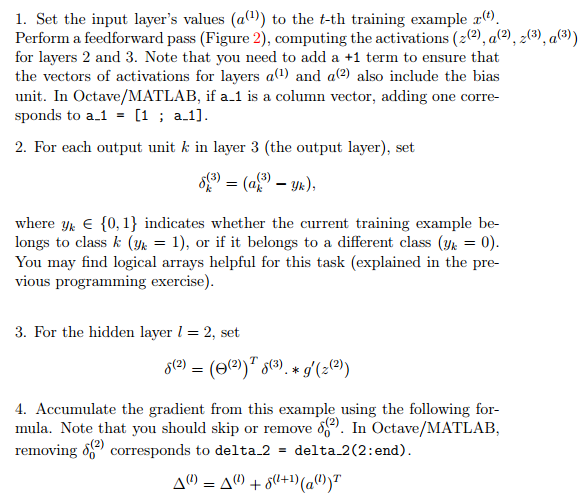
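BP4 gives the rate of change of the cost with respect to any weight: ∂C/∂w^l_jk = a^(l−1)_k δ^l_j. Collected over all j and k, this is the outer product δ^l (a^(l−1))^T, a matrix with the same shape as w^l (rows indexed by neurons j in layer l, columns by neurons k in layer l−1). A minimal Python sketch with plain lists; `weight_gradient` is a hypothetical helper name.

```python
def weight_gradient(delta, a_prev):
    """BP4: dC/dw^l_jk = a^{l-1}_k * delta^l_j, i.e. the outer product delta (a^{l-1})^T."""
    return [[dj * ak for ak in a_prev] for dj in delta]


print(weight_gradient([0.5, -1.0], [2.0, 4.0]))  # [[1.0, 2.0], [-2.0, -4.0]]
```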

The backpropagation algorithm

Of course, to implement stochastic gradient descent in practice you also need an outer loop generating mini-batches of training examples, and an outer loop stepping through multiple epochs of training. I've omitted those for simplicity.
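The pieces of the algorithm (feedforward, output error via BP1, moving the error backward via BP2, reading off gradients via BP3 and BP4) fit into a short Python sketch. This is a minimal, unoptimized illustration that assumes sigmoid activations, the quadratic cost, plain nested lists instead of a matrix library, and a hypothetical 2-3-1 network with random weights; all function names are hypothetical. It checks one backprop derivative against a central finite difference and then takes a single mini-batch step w → w − (η/m) Σ_x ∂C_x/∂w (and likewise for b). As in the text, the outer loops over mini-batches and epochs are left out.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)


def feedforward(weights, biases, x):
    """Return the lists of weighted inputs z^l and activations a^l (a^1 = x)."""
    activations, zs = [x], []
    for w, b in zip(weights, biases):
        z = [sum(wjk * ak for wjk, ak in zip(row, activations[-1])) + bj
             for row, bj in zip(w, b)]
        zs.append(z)
        activations.append([sigmoid(zj) for zj in z])
    return zs, activations


def backprop(weights, biases, x, y):
    """Gradients (nabla_b, nabla_w) of C_x = 0.5*||y - a^L||^2 for one example.

    weights[i][j][k] connects neuron k of one layer to neuron j of the next.
    """
    zs, activations = feedforward(weights, biases, x)
    delta = [(aj - yj) * sigmoid_prime(zj)  # output error, BP1
             for aj, yj, zj in zip(activations[-1], y, zs[-1])]
    nabla_b, nabla_w = [None] * len(biases), [None] * len(weights)
    for i in range(len(weights) - 1, -1, -1):  # walk backward through the layers
        nabla_b[i] = delta[:]  # BP3
        nabla_w[i] = [[dj * ak for ak in activations[i]] for dj in delta]  # BP4
        if i > 0:  # BP2: error in the previous layer
            w = weights[i]
            delta = [sum(w[j][k] * delta[j] for j in range(len(delta)))
                     * sigmoid_prime(zs[i - 1][k]) for k in range(len(zs[i - 1]))]
    return nabla_b, nabla_w


def batch_cost(weights, biases, batch):
    """C = (1/n) * sum_x 0.5*||y - a^L(x)||^2 over the given examples."""
    total = 0.0
    for x, y in batch:
        a = feedforward(weights, biases, x)[1][-1]
        total += 0.5 * sum((yj - aj) ** 2 for aj, yj in zip(a, y))
    return total / len(batch)


def update_mini_batch(weights, biases, batch, eta):
    """One gradient-descent step using per-example gradients averaged over the batch."""
    grads = [backprop(weights, biases, x, y) for x, y in batch]
    m = len(batch)
    new_w = [[[w - eta / m * sum(g[1][i][j][k] for g in grads)
               for k, w in enumerate(row)] for j, row in enumerate(layer)]
             for i, layer in enumerate(weights)]
    new_b = [[b - eta / m * sum(g[0][i][j] for g in grads)
              for j, b in enumerate(layer)] for i, layer in enumerate(biases)]
    return new_w, new_b


random.seed(0)
sizes = [2, 3, 1]  # hypothetical 2-3-1 network
weights = [[[random.gauss(0, 1) for _ in range(m)] for _ in range(n)]
           for m, n in zip(sizes[:-1], sizes[1:])]
biases = [[random.gauss(0, 1) for _ in range(n)] for n in sizes[1:]]
batch = [([0.5, -0.2], [1.0]), ([-0.3, 0.8], [0.0])]  # hypothetical data

# Gradient check: one backprop derivative vs. a central finite difference.
x, y = batch[0]
nabla_b, nabla_w = backprop(weights, biases, x, y)
eps, w_orig = 1e-6, weights[0][1][0]
weights[0][1][0] = w_orig + eps
c_plus = batch_cost(weights, biases, [(x, y)])
weights[0][1][0] = w_orig - eps
c_minus = batch_cost(weights, biases, [(x, y)])
weights[0][1][0] = w_orig
fd = (c_plus - c_minus) / (2 * eps)
print(nabla_w[0][1][0], fd)

new_weights, new_biases = update_mini_batch(weights, biases, batch, eta=0.01)
print(batch_cost(weights, biases, batch), batch_cost(new_weights, new_biases, batch))
```

Storing every z^l and a^l during the forward pass is what lets the backward pass reuse them, so all the gradients for one example cost roughly one forward and one backward sweep.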

reference: http://neuralnetworksanddeeplearning.com/chap2.html

------------------------------------------------------------------------------------------------

reference: Machine Learning by Andrew Ng
