sklearn Study Notes 2

Text classification with Naïve Bayes

In this section we will try to classify newsgroup messages using a dataset that can be retrieved from within scikit-learn. This dataset consists of around 19,000 newsgroup messages from 20 different topics ranging from politics and religion to sports and science.

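As usual, we first start by setting up our environment. The %pylab inline magic is discouraged in current IPython, so we import NumPy explicitly (it provides the np name used later):

import numpy as np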

Our dataset can be obtained by importing the fetch_20newsgroups function from the sklearn.datasets module. Its subset parameter specifies whether we want the training part, the test part, or all of the instances (we will import all of them).

from sklearn.datasets import fetch_20newsgroups

news = fetch_20newsgroups(subset='all')

If we look at the properties of the dataset, we will find that we have the usual ones: DESCR, data, target, and target_names. The difference now is that data holds a list of text contents instead of a NumPy matrix:

print(type(news.data), type(news.target), type(news.target_names))
print(news.target_names)
print(len(news.data))
print(len(news.target))

<class 'list'> <class 'numpy.ndarray'> <class 'list'>
['alt.atheism', 'comp.graphics', 'comp.os.ms-windows.misc', 'comp.sys.ibm.pc.hardware', 'comp.sys.mac.hardware', 'comp.windows.x', 'misc.forsale', 'rec.autos', 'rec.motorcycles', 'rec.sport.baseball', 'rec.sport.hockey', 'sci.crypt', 'sci.electronics', 'sci.med', 'sci.space', 'soc.religion.christian', 'talk.politics.guns', 'talk.politics.mideast', 'talk.politics.misc', 'talk.religion.misc']
18846
18846

If you look at, say, the first instance, you will see the content of a newsgroup message, and you can get its corresponding category:

print(news.data[0])
print(news.target[0], news.target_names[news.target[0]])

From: Mamatha Devineni Ratnam <mr47+@andrew.cmu.edu>
Subject: Pens fans reactions
Organization: Post Office, Carnegie Mellon, Pittsburgh, PA
Lines: 12
NNTP-Posting-Host: po4.andrew.cmu.edu

I am sure some bashers of Pens fans are pretty confused about the lack
of any kind of posts about the recent Pens massacre of the Devils. Actually,
I am bit puzzled too and a bit relieved. However, I am going to put an end
to non-PIttsburghers' relief with a bit of praise for the Pens. Man, they
are killing those Devils worse than I thought. Jagr just showed you why
he is much better than his regular season stats. He is also a lot
fo fun to watch in the playoffs. Bowman should let JAgr have a lot of
fun in the next couple of games since the Pens are going to beat the pulp out of Jersey anyway. I was very disappointed not to see the Islanders lose the final
regular season game. PENS RULE!!!

10 rec.sport.hockey

Preprocessing the data

Our machine learning algorithms can work only on numeric data, so our next step will be to convert our text-based dataset to a numeric dataset. Currently we only have one feature, the text content of the message; we need some function that transforms a text into a meaningful set of numeric features.

Intuitively, one could look at which words (or, more precisely, tokens, including numbers and punctuation signs) are used in each text category, and try to characterize each category by the frequency distribution of those words. The sklearn.feature_extraction.text module has some useful utilities to build numeric feature vectors from text documents.

Before starting the transformation, we have to partition our data into training and testing sets. The loaded data is already in random order (fetch_20newsgroups shuffles it by default), so we only have to split it, for example, into 75 percent for training and the remaining 25 percent for testing.
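A minimal sketch of that split, assuming we simply cut the already-shuffled lists at the 75 percent mark. SPLIT_PERC and split_train_test are hypothetical names; X_train, X_test, y_train and y_test are the names the later steps use:

```python
SPLIT_PERC = 0.75  # hypothetical name: fraction of instances used for training


def split_train_test(data, target, perc=SPLIT_PERC):
    """Cut two parallel sequences at the same index (no shuffling: data is assumed pre-shuffled)."""
    split_size = int(len(data) * perc)
    X_train, X_test = data[:split_size], data[split_size:]
    y_train, y_test = target[:split_size], target[split_size:]
    return X_train, X_test, y_train, y_test


# With the newsgroups data this would be:
# X_train, X_test, y_train, y_test = split_train_test(news.data, news.target)
# Toy usage with plain lists:
X_train, X_test, y_train, y_test = split_train_test(list("abcdefgh"), list(range(8)))
print(len(X_train), len(X_test))  # 6 2
```

Slicing works the same way on the list in news.data and on the NumPy array in news.target; with 18846 messages this yields 14134 training and 4712 testing instances.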

If you look inside the sklearn.feature_extraction.text module, you will find three different classes that can transform text into numeric features: CountVectorizer, HashingVectorizer, and TfidfVectorizer.

The difference between them resides in the calculations they perform to obtain the numeric features.

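- CountVectorizer basically creates a dictionary of words from the text corpus. Then each instance is converted into a vector of numeric features, where each element is the number of times a particular word appears in the document.

- HashingVectorizer, instead of building and maintaining a dictionary in memory, implements a hashing function that maps tokens to feature indexes, and then computes the counts as in CountVectorizer.

- TfidfVectorizer works like CountVectorizer, but with a more advanced calculation called Term Frequency Inverse Document Frequency (TF-IDF). This is a statistic for measuring the importance of a word in a document or corpus. Intuitively, it looks for words that are more frequent in the current document compared with their frequency in the whole collection of documents. You can see this as a way to normalize the results and to downweight words that are so frequent that they are not useful for characterizing the instances.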
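To make the three bullet points concrete, here is a pure-Python sketch of each computation on a toy corpus. It mirrors scikit-learn's documented defaults (lowercasing, the token pattern (?u)\b\w\w+\b, smoothed IDF computed as ln((1 + n) / (1 + df)) + 1, and L2-normalized TF-IDF rows), but it is not the library's code. In particular, the hash function here is zlib.crc32 (HashingVectorizer uses MurmurHash3), and n_features=16 is a toy value instead of the default 2**20:

```python
import math
import re
import zlib

TOKEN_RE = re.compile(r"(?u)\b\w\w+\b")  # scikit-learn's default token_pattern


def tokenize(doc):
    return TOKEN_RE.findall(doc.lower())


def count_vectors(docs):
    """CountVectorizer idea: build a vocabulary, then count each word per document."""
    vocab = sorted({tok for doc in docs for tok in tokenize(doc)})
    index = {word: i for i, word in enumerate(vocab)}
    rows = []
    for doc in docs:
        row = [0] * len(vocab)
        for tok in tokenize(doc):
            row[index[tok]] += 1
        rows.append(row)
    return vocab, rows


def hashing_vectors(docs, n_features=16):
    """HashingVectorizer idea: no vocabulary; hash each token straight to a column."""
    rows = []
    for doc in docs:
        row = [0] * n_features
        for tok in tokenize(doc):
            # different tokens may collide in the same column
            row[zlib.crc32(tok.encode("utf-8")) % n_features] += 1
        rows.append(row)
    return rows


def tfidf_vectors(docs):
    """TfidfVectorizer idea: counts reweighted by smoothed IDF, then L2-normalized."""
    vocab, counts = count_vectors(docs)
    n = len(docs)
    df = [sum(1 for row in counts if row[j] > 0) for j in range(len(vocab))]
    idf = [math.log((1 + n) / (1 + d)) + 1 for d in df]
    rows = []
    for row in counts:
        weighted = [c * w for c, w in zip(row, idf)]
        norm = math.sqrt(sum(v * v for v in weighted)) or 1.0
        rows.append([v / norm for v in weighted])
    return vocab, rows


docs = ["the Pens beat the Devils", "the Devils lost", "orbit of the space shuttle"]
vocab, counts = count_vectors(docs)
print(vocab)      # ['beat', 'devils', 'lost', 'of', 'orbit', 'pens', 'shuttle', 'space', 'the']
print(counts[0])  # [1, 1, 0, 0, 0, 1, 0, 0, 2]
```

In the TF-IDF rows, "the" (present in every document) gets the smallest IDF, so in the second document "lost" outweighs "devils", which in turn outweighs "the".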

Training a Naïve Bayes classifier

We will create a Naïve Bayes classifier that is composed of a feature vectorizer and the actual Bayes classifier. We will use the MultinomialNB class from the sklearn.naive_bayes module. scikit-learn has a very useful class called Pipeline (available in the sklearn.pipeline module) that eases the construction of a compound classifier made of several vectorizers and classifiers.
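Conceptually, a Pipeline calls fit_transform on every step except the last, then fit on the final estimator; at prediction time it calls transform on each step before calling predict. A minimal pure-Python sketch of that control flow, assuming duck-typed steps; MiniPipeline, WordCountStep and ThresholdClassifier are hypothetical stand-ins, not scikit-learn classes:

```python
class MiniPipeline:
    """Chain (name, step) pairs: every step but the last must transform."""

    def __init__(self, steps):
        self.steps = steps
        self.named_steps = dict(steps)

    def fit(self, X, y):
        for _, step in self.steps[:-1]:
            X = step.fit_transform(X, y)  # each transformer feeds the next step
        self.steps[-1][1].fit(X, y)
        return self

    def predict(self, X):
        for _, step in self.steps[:-1]:
            X = step.transform(X)  # reuse what was learned during fit
        return self.steps[-1][1].predict(X)


class WordCountStep:
    """Toy transformer: turns each document into its number of words."""

    def fit_transform(self, X, y=None):
        return self.transform(X)

    def transform(self, X):
        return [len(doc.split()) for doc in X]


class ThresholdClassifier:
    """Toy binary classifier: threshold halfway between the two class means."""

    def fit(self, X, y):
        means = []
        for label in (0, 1):
            values = [x for x, t in zip(X, y) if t == label]
            means.append(sum(values) / len(values))
        self.threshold_ = sum(means) / 2
        return self

    def predict(self, X):
        return [int(x > self.threshold_) for x in X]


pipe = MiniPipeline([("vect", WordCountStep()), ("clf", ThresholdClassifier())])
pipe.fit(["short post", "tiny", "a much longer post about hockey",
          "another long post on the playoffs"], [0, 0, 1, 1])
print(pipe.predict(["hi", "a long message about the Pens"]))  # [0, 1]
```

The named_steps dictionary plays the same role as in scikit-learn, where clf.named_steps['vect'] gives access to the fitted vectorizer.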

We will create three different classifiers by combining MultinomialNB with the three text vectorizers just mentioned, and compare which one performs better using the default parameters:

from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline
from sklearn.feature_extraction.text import TfidfVectorizer, HashingVectorizer, CountVectorizer

clf_1 = Pipeline([
    ('vect', CountVectorizer()),
    ('clf', MultinomialNB()),
])

# MultinomialNB rejects negative features; alternate_sign=False keeps the hashed
# values non-negative (it replaces the non_negative=True option of older releases)
clf_2 = Pipeline([
    ('vect', HashingVectorizer(alternate_sign=False)),
    ('clf', MultinomialNB()),
])

clf_3 = Pipeline([
    ('vect', TfidfVectorizer()),
    ('clf', MultinomialNB()),
])

We will define a function that takes a classifier and performs K-fold cross-validation over the specified X and y values:

import numpy as np
from scipy.stats import sem
# sklearn.cross_validation was removed; these now live in sklearn.model_selection
from sklearn.model_selection import cross_val_score, KFold

def evaluate_cross_validation(clf, X, y, K):
    # create a k-fold cross-validation iterator with K folds
    cv = KFold(n_splits=K, shuffle=True, random_state=0)
    # by default the score used is the one returned by the score method of the estimator (accuracy)
    scores = cross_val_score(clf, X, y, cv=cv)
    print(scores)
    print("Mean score: {0:.3f} (+/-{1:.3f})".format(np.mean(scores), sem(scores)))
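What KFold(n_splits=K, shuffle=True, random_state=0) hands to cross_val_score is a sequence of (train, test) index pairs. A stdlib sketch of that idea, assuming random.Random for the shuffle (so the folds will not match scikit-learn's exact ones); like scikit-learn, it gives the first n % K folds one extra element. kfold_indices is a hypothetical name:

```python
import random


def kfold_indices(n_samples, n_splits, seed=0):
    """Yield (train_idx, test_idx) pairs; each index lands in exactly one test fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    fold_sizes = [n_samples // n_splits] * n_splits
    for i in range(n_samples % n_splits):
        fold_sizes[i] += 1  # spread the remainder over the first folds
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


for train, test in kfold_indices(10, 3):
    print(len(train), len(test))  # 6 4, then 7 3, then 7 3
```

Each instance is used for testing exactly once, so the K scores are computed on disjoint test folds.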

Then we will perform a five-fold cross-validation with each of the classifiers.

clfs = [clf_1, clf_2, clf_3]
for clf in clfs:
    evaluate_cross_validation(clf, news.data, news.target, 5)

[ 0.85782493 0.85725657 0.84664367 0.85911382 0.8458477 ]

Mean score: 0.853 (+/-0.003)

[ 0.75543767 0.77659857 0.77049615 0.78508888 0.76200584]

Mean score: 0.770 (+/-0.005)

[ 0.84482759 0.85990979 0.84558238 0.85990979 0.84213319]

Mean score: 0.850 (+/-0.004)
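The "(+/-...)" figure is the standard error of the mean computed by scipy.stats.sem, which by default divides the sample standard deviation (ddof=1) by the square root of the number of folds. A stdlib check that reproduces the three summary lines from the fold scores printed above (summarize is a hypothetical helper):

```python
import math
import statistics


def summarize(scores):
    """Mean and standard error of the mean (sample std with n - 1, over sqrt(n))."""
    mean = statistics.mean(scores)
    sem = statistics.stdev(scores) / math.sqrt(len(scores))
    return "Mean score: {0:.3f} (+/-{1:.3f})".format(mean, sem)


count_scores = [0.85782493, 0.85725657, 0.84664367, 0.85911382, 0.8458477]
hashing_scores = [0.75543767, 0.77659857, 0.77049615, 0.78508888, 0.76200584]
tfidf_scores = [0.84482759, 0.85990979, 0.84558238, 0.85990979, 0.84213319]
for scores in (count_scores, hashing_scores, tfidf_scores):
    print(summarize(scores))
```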

As you can see, CountVectorizer and TfidfVectorizer performed similarly, and both did much better than HashingVectorizer.

Let's continue with TfidfVectorizer; we could try to improve the results by trying to parse the text documents into tokens with a different regular expression.

clf_4 = Pipeline([
    ('vect', TfidfVectorizer(
        token_pattern=r"\b[a-z0-9_\-\.]+[a-z][a-z0-9_\-\.]+\b",
    )),
    ('clf', MultinomialNB()),
])

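(Under Python 3 this raises SyntaxError: invalid syntax, because the combined ur"..." string prefix only exists in Python 2; in Python 3 the pattern must be written as a raw string, r"...".)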

The default regular expression, r"(?u)\b\w\w+\b", selects tokens of two or more alphanumeric characters (the underscore counts as one). Perhaps also considering the hyphen and the dot could improve the tokenization, so that tokens such as Wi-Fi and site.com are kept whole. The new regular expression could be: r"\b[a-z0-9_\-\.]+[a-z][a-z0-9_\-\.]+\b" (it also requires at least one letter and at least three characters, so pure numbers and two-letter words are dropped). If you have questions about how to define regular expressions, please refer to the Python re module documentation. Let's try our new classifier:

evaluate_cross_validation(clf_4, news.data, news.target, 5)

We have a slight improvement from 0.84 to 0.85.
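To see what the new token_pattern changes, the two patterns can be compared with the re module on a made-up sentence. TfidfVectorizer lowercases text before tokenizing by default, so the sample is already lowercase:

```python
import re

DEFAULT_PATTERN = r"(?u)\b\w\w+\b"  # scikit-learn's default token_pattern
NEW_PATTERN = r"\b[a-z0-9_\-\.]+[a-z][a-z0-9_\-\.]+\b"  # the pattern used in clf_4

text = "wi-fi at site.com costs 10 dollars"
print(re.findall(DEFAULT_PATTERN, text))
# ['wi', 'fi', 'at', 'site', 'com', 'costs', '10', 'dollars']
print(re.findall(NEW_PATTERN, text))
# ['wi-fi', 'site.com', 'costs', 'dollars']
```

The new pattern keeps hyphenated and dotted tokens whole, and it silently discards "at" and "10", which is part of why the vocabulary changes.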

Another parameter that we can use is stop_words: this argument allows us to pass a list of words we do not want to take into account, such as very frequent words, or words we do not a priori expect to provide information about the particular topic.
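Conceptually, stop-word removal just discards the listed tokens after tokenization. A small sketch using the clf_4 token pattern and a hypothetical five-word stop list (the notes load the real list from stopwords_en.txt, whose contents are not shown); tokens_without_stop_words is a hypothetical helper:

```python
import re

TOKEN_PATTERN = r"\b[a-z0-9_\-\.]+[a-z][a-z0-9_\-\.]+\b"  # pattern from clf_4
STOP_WORDS = ["and", "are", "the", "this", "was"]  # hypothetical stop list


def tokens_without_stop_words(text, stop_words):
    """Lowercase, tokenize, then drop every token found in the stop list."""
    stop = set(stop_words)  # set membership tests are fast
    return [tok for tok in re.findall(TOKEN_PATTERN, text.lower()) if tok not in stop]


text = "The Pens are killing the Devils and this was the final game"
print(tokens_without_stop_words(text, STOP_WORDS))
# ['pens', 'killing', 'devils', 'final', 'game']
```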

We will define a function to load the stop words from a text file as follows:

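def get_stop_words():
    # one stop word per line; the with block closes the file afterwards
    with open('stopwords_en.txt', 'r') as f:
        result = {line.strip() for line in f if line.strip()}
    # recent scikit-learn versions validate stop_words and accept a list, not a set
    return sorted(result)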

Then we create a new classifier with this parameter:

clf_5 = Pipeline([
    ('vect', TfidfVectorizer(
        stop_words=get_stop_words(),
        token_pattern=r"\b[a-z0-9_\-\.]+[a-z][a-z0-9_\-\.]+\b",
    )),
    ('clf', MultinomialNB()),
])

evaluate_cross_validation(clf_5, news.data, news.target, 5)

The preceding code shows another improvement from 0.85 to 0.87.

Let's keep this vectorizer and start looking at the MultinomialNB parameters. This classifier has few parameters to tweak; the most important is alpha, an additive (Laplace/Lidstone) smoothing parameter. Let's set it to a lower value: instead of the default 1.0, we will use 0.01:

clf_6 = Pipeline([
    ('vect', TfidfVectorizer(
        stop_words=get_stop_words(),
        token_pattern=r"\b[a-z0-9_\-\.]+[a-z][a-z0-9_\-\.]+\b",
    )),
    ('clf', MultinomialNB(alpha=0.01)),
])

evaluate_cross_validation(clf_6, X_train, y_train, 5)

The results had an important boost from 0.89 to 0.92, pretty good. At this point, we could continue trying different values of alpha or further modifications of the vectorizer.
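scikit-learn's documentation gives the smoothed MultinomialNB estimate for feature i in class c as theta_ci = (N_ci + alpha) / (N_c + alpha * n), where N_ci is the sum of feature i over the training instances of class c (TF-IDF weights here, rather than raw counts), N_c the sum over all features, and n the number of features. A pure-Python sketch of that formula on toy counts (not the library's code; smoothed_word_probs is a hypothetical name), showing how a lower alpha leaves less probability for words never seen in a class:

```python
def smoothed_word_probs(word_counts, alpha=1.0):
    """Additive (Laplace/Lidstone) smoothing of one class's feature totals."""
    total = sum(word_counts)
    n_features = len(word_counts)
    return [(c + alpha) / (total + alpha * n_features) for c in word_counts]


counts = [3, 1, 0]  # toy totals of three words in one class; the third never occurs
for alpha in (1.0, 0.01):
    probs = smoothed_word_probs(counts, alpha)
    print(alpha, [round(p, 4) for p in probs])
```

With alpha=1.0 the unseen word still receives 1/7 of the mass; with alpha=0.01 the estimates follow the observed totals much more closely.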

Evaluating the performance

If we decide that we have made enough improvements in our model, we are ready to evaluate its performance on the testing set.

We will define a helper function, train_and_evaluate (its definition is in http://www.cnblogs.com/iamxyq/p/5912048.html), that trains the model on the entire training set and evaluates its accuracy on both the training and the testing sets.
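The linked post holds the actual definition. A minimal sketch of such a helper, assuming only that the classifier follows scikit-learn's fit/score convention; the return value and the MajorityClassifier stand-in are hypothetical additions for illustration:

```python
from collections import Counter


def train_and_evaluate(clf, X_train, X_test, y_train, y_test):
    """Fit on the whole training set, then report accuracy on training and testing sets."""
    clf.fit(X_train, y_train)
    train_acc = clf.score(X_train, y_train)
    test_acc = clf.score(X_test, y_test)
    print("Accuracy on training set: {0:.3f}".format(train_acc))
    print("Accuracy on testing set: {0:.3f}".format(test_acc))
    return train_acc, test_acc


class MajorityClassifier:
    """Hypothetical stand-in estimator: predicts the most common training label."""

    def fit(self, X, y):
        self.label_ = Counter(y).most_common(1)[0][0]
        return self

    def predict(self, X):
        return [self.label_ for _ in X]

    def score(self, X, y):
        predictions = self.predict(X)
        return sum(p == t for p, t in zip(predictions, y)) / len(y)


train_acc, test_acc = train_and_evaluate(
    MajorityClassifier(), ["a", "b", "c", "d"], ["e", "f"], [1, 1, 1, 0], [1, 0])
```

A scikit-learn Pipeline such as clf_6 can be passed in the same way; sklearn.metrics.classification_report and sklearn.metrics.confusion_matrix could be added to the helper for per-category detail.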

We will evaluate our best classifier, clf_6.

train_and_evaluate(clf_6, X_train, X_test, y_train, y_test)

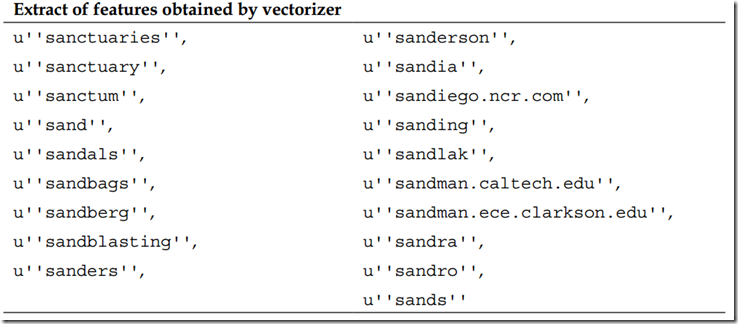

If we look inside the vectorizer, we can see which tokens have been used to create our dictionary:

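# get_feature_names() was removed in scikit-learn 1.2; get_feature_names_out() replaces it
print(len(clf_6.named_steps['vect'].get_feature_names_out()))

Let's print the feature names.

clf_6.named_steps['vect'].get_feature_names_out()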

The following table presents an extract of the results:

You can see that some words are semantically very similar, for example, sand and sands, sanctuaries and sanctuary. Perhaps if the plurals and the singulars are counted to the same bucket, we would better represent the documents. This is a very common task, which could be solved using stemming, a technique that relates two words having the same lexical root.
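A real stemmer (for example the Porter algorithm, available as PorterStemmer in NLTK) applies many suffix rules. As a deliberately tiny, hypothetical illustration, the sketch below only folds a few English plurals onto their singular, which is enough to merge the pairs mentioned above. naive_stem and stem_tokens are hypothetical names; scikit-learn's vectorizers accept a custom tokenizer callable, which is where a function like stem_tokens could be plugged in:

```python
import re

TOKEN_PATTERN = r"\b[a-z0-9_\-\.]+[a-z][a-z0-9_\-\.]+\b"  # pattern from clf_4


def naive_stem(word):
    """Toy plural folding, NOT a real stemmer: 'sanctuaries' -> 'sanctuary', 'sands' -> 'sand'."""
    if word.endswith("ies") and len(word) > 4:
        return word[:-3] + "y"
    if word.endswith("s") and not word.endswith("ss") and len(word) > 3:
        return word[:-1]
    return word


def stem_tokens(text):
    """Tokenize like clf_4, then fold each token."""
    return [naive_stem(tok) for tok in re.findall(TOKEN_PATTERN, text.lower())]


print(stem_tokens("Sand and sands, sanctuary and sanctuaries"))
# ['sand', 'and', 'sand', 'sanctuary', 'and', 'sanctuary']
```

Rules this crude also damage words like "news" (folded to "new"), which is why dedicated stemming algorithms are preferred in practice.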
