DeepLearning Intro - sigmoid and shallow NN

This is one of a series of Machine Learning summary notes. I will combine the Deep Learning book with the deeplearning.ai open course. Any feedback is welcome!

First, let's go through some basic NN concepts, using a Bernoulli (binary) classification problem as an example.

Activation Function

1.Bernoulli Output

1. Definition

When dealing with a binary classification problem, what activation function should we use in the output layer?

In other words, given \(x \in R^n\), how do we get \(P(y=1|x)\)?

2. Loss function

Let \(\hat{y} = P(y=1|x)\). We would expect the following output:

\[P(y|x) = \begin{cases}
\hat{y} & \quad \text{when } y= 1\\
1-\hat{y} & \quad \text{when } y= 0
\end{cases}
\]

Above can be simplified as

\[P(y|x)= \hat{y}^{y}(1-\hat{y})^{1-y}\]

Therefore, the maximum likelihood estimate over m training samples will be

\[\theta_{ML} = argmax_{\theta}\prod^{m}_{i=1}P(y^i|x^i)\]

As usual, we take the log of the function above, which turns the product into a sum without changing the maximizer. Actually for gradient descent log has other advantages, which we will discuss later.

\[\theta_{ML} = argmax_{\theta}\sum^{m}_{i=1} y^ilog(\hat{y}^i)+(1-y^i)log(1-\hat{y}^i) \]
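One practical reason to prefer the log form (possibly not the one the author has in mind for later) is numerical: a product of many probabilities underflows, while a sum of logs stays finite. The sketch below uses made-up data and hypothetical helper names (`bernoulli_prob`, `likelihood`, `log_likelihood`) that are not from the original post.

```python
import math

def bernoulli_prob(y, y_hat):
    """P(y|x) = y_hat^y * (1 - y_hat)^(1 - y) for y in {0, 1}."""
    return (y_hat ** y) * ((1.0 - y_hat) ** (1 - y))

def likelihood(ys, y_hats):
    """Product of the per-sample probabilities."""
    result = 1.0
    for y, y_hat in zip(ys, y_hats):
        result *= bernoulli_prob(y, y_hat)
    return result

def log_likelihood(ys, y_hats):
    """Sum of the per-sample log-probabilities."""
    return sum(
        y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat)
        for y, y_hat in zip(ys, y_hats)
    )

# Made-up data: 2000 positive samples, each predicted with probability 0.4.
ys = [1] * 2000
y_hats = [0.4] * 2000

print(likelihood(ys, y_hats))      # the product underflows in double precision
print(log_likelihood(ys, y_hats))  # the sum of logs is a finite negative number
```

Both functions are maximized by the same parameters, so nothing is lost by optimizing the sum.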

And the cost function for optimization is the following

\[J(w,b) = \sum ^{m}_{i=1}L(y^i,\hat{y}^i)= -\sum^{m}_{i=1}\left[y^ilog(\hat{y}^i)+(1-y^i)log(1-\hat{y}^i)\right]\]

The cost function is the sum of the losses over the m training samples, and it measures how well the classification algorithm fits the training data.

And yes, here it is exactly the negative log likelihood. The cost function can differ from the negative log likelihood when we apply regularization, but here let's start with the simple version.
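As a rough illustration of how this cost behaves, the sketch below computes it for two sets of made-up predictions. The function names `loss` and `cost` are placeholders chosen here, not names from the post.

```python
import math

def loss(y, y_hat):
    """Per-sample loss: negative log-probability assigned to the true label."""
    return -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))

def cost(ys, y_hats):
    """J: sum of the per-sample losses over the m training samples."""
    return sum(loss(y, y_hat) for y, y_hat in zip(ys, y_hats))

ys = [1, 0, 1]
confident_right = [0.9, 0.1, 0.8]  # predictions close to the labels
confident_wrong = [0.1, 0.9, 0.2]  # predictions far from the labels

print(cost(ys, confident_right))
print(cost(ys, confident_wrong))
```

The second cost is much larger than the first, because the log term heavily penalizes confident wrong predictions.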

So here comes our next problem: how can we get a 1-dimensional \(log(\hat{y})\), given an input \(x\) that is an n-dimensional vector?

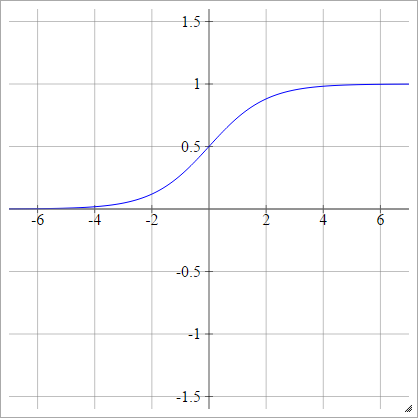

3. Activation function - Sigmoid

Let \(h\) denote the output from the previous hidden layer that goes into the final output layer. A linear transformation is applied to \(h\) before the activation function.

Let \(z = w^Th +b\)

The assumption here is that the unnormalized log-probability is linear in \(y\) and \(z\). Writing \(\tilde{P}(y|x)\) for the unnormalized probability:

\[log(\tilde{P}(y|x)) = \begin{cases}
z & \quad \text{when } y= 1\\
0 & \quad \text{when } y= 0
\end{cases}
\]

Above can be simplified as

\[log(\tilde{P}(y|x)) = yz\quad \to \quad \tilde{P}(y|x) = exp(yz)\]

This is an unnormalized distribution over \(y\). Because \(\hat{y}\) denotes a probability, we need to normalize so that the two cases sum to 1, which puts \(\hat{y}\) in \([0,1]\). Taking \(y=1\) in the numerator:

\[\hat{y} = \frac{exp(1 \cdot z)} {\sum^1_{y'=0}exp(y'z)} \\
=\frac{exp(z)}{1+exp(z)}\\
= \frac{1}{1+exp(-z)} = \sigma(z)
\]

Bingo! Here we go - Sigmoid Function: \(\sigma(z) = \frac{1}{1+exp(-z)}\)
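The normalization step can be checked numerically. This is a minimal sketch with hypothetical function names: `normalized_prob` follows the exp(yz) construction directly, and `sigmoid` is the closed form, written in a branch-by-sign style (an implementation choice made here, not something from the post) so that `exp` never overflows.

```python
import math

def normalized_prob(y, z):
    """exp(y*z) divided by the sum of exp(y'*z) over y' in {0, 1}."""
    return math.exp(y * z) / (math.exp(0 * z) + math.exp(1 * z))

def sigmoid(z):
    """sigma(z) = 1 / (1 + exp(-z)), arranged to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

for z in (-3.0, 0.0, 2.5):
    # The two columns should agree up to floating-point rounding.
    print(z, normalized_prob(1, z), sigmoid(z))
```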

\[p(y|x) = \begin{cases}
\sigma(z) & \quad \text{when } y= 1\\
1-\sigma(z) & \quad \text{when } y= 0
\end{cases}
\]

The sigmoid function has some pretty cool properties, such as the following:

\[ 1- \sigma(x) = \sigma(-x) \\
\frac{d}{dx} \sigma(x) = \sigma(x)(1-\sigma(x)) \\
\quad \quad = \sigma(x)\sigma(-x)
\]

Using the first property above, we can further simplify the Bernoulli output into the following:

\[p(y|x) = \sigma((2y-1)z)\]
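These identities are easy to sanity-check numerically. The sketch below compares the derivative formula against a central finite difference; the helper names and the tolerance values are assumptions made for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def numerical_derivative(f, x, eps=1e-6):
    """Central finite-difference approximation of f'(x)."""
    return (f(x + eps) - f(x - eps)) / (2.0 * eps)

for x in (-2.0, 0.0, 1.5):
    s = sigmoid(x)
    # Property 1: 1 - sigma(x) equals sigma(-x).
    assert abs((1.0 - s) - sigmoid(-x)) < 1e-12
    # Property 2: sigma'(x) equals sigma(x) * (1 - sigma(x)).
    assert abs(numerical_derivative(sigmoid, x) - s * (1.0 - s)) < 1e-8

def bernoulli_prob(y, z):
    """p(y|x) = sigma((2y - 1) * z) for y in {0, 1}."""
    return sigmoid((2 * y - 1) * z)

print(bernoulli_prob(1, 0.7), bernoulli_prob(0, 0.7))
```

For \(y=1\) the factor \(2y-1\) is \(+1\) and for \(y=0\) it is \(-1\), which is how the single expression covers both cases.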

4. Gradient descent and back propagation

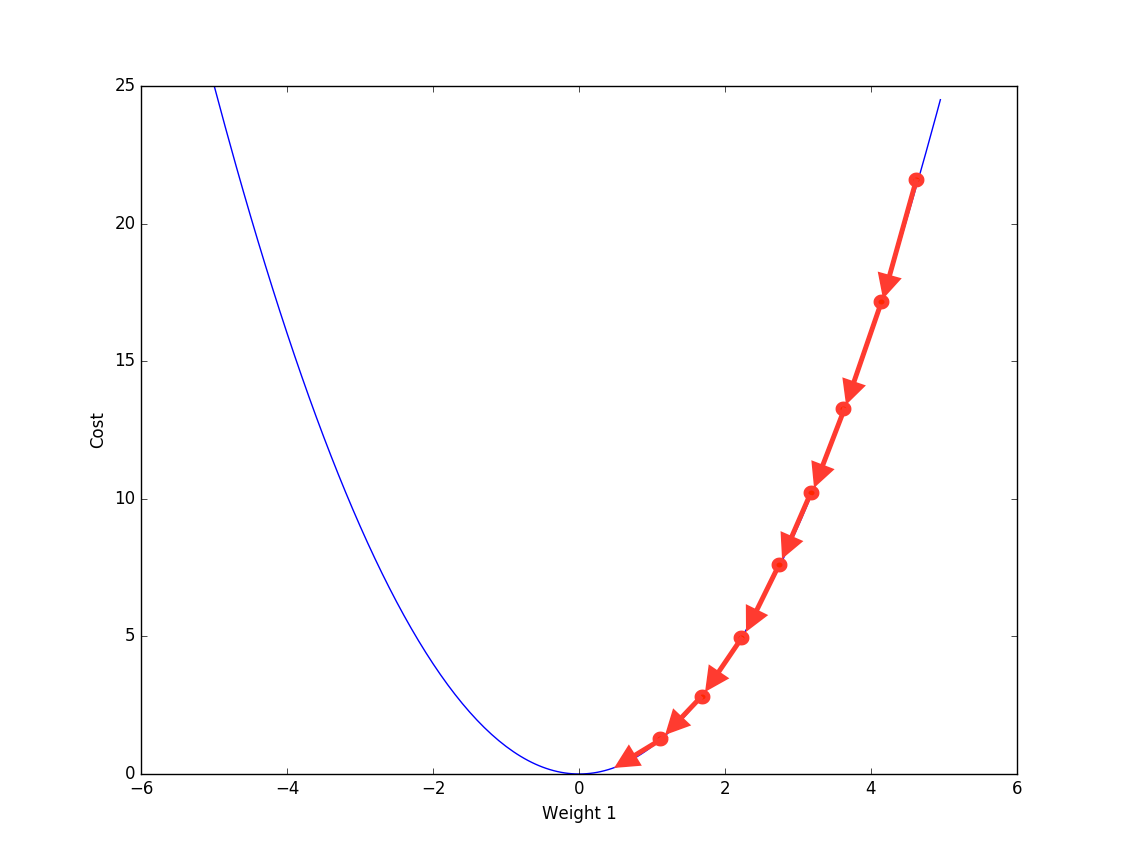

Now we have a target cost function to optimize. How does the NN learn from training data? The answer is -- Back Propagation.

Actually, back propagation is not some fancy method designed only for neural networks. When the training sample is big, we can use the same gradient-based updates to train linear regression too.

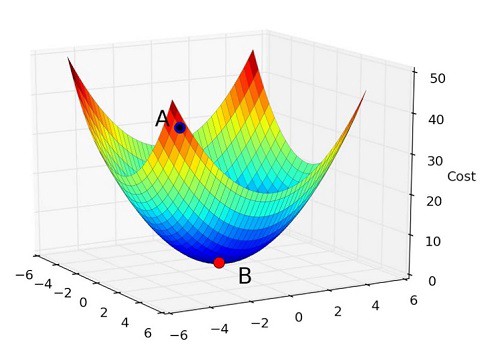

Back propagation iteratively uses the partial derivatives of the cost function to update the parameters, in order to reach a local optimum.

\[ \text{Looping over } m \text{ samples:} \\
w= w - \frac{\partial J(w,b)}{\partial w} \\
b= b - \frac{\partial J(w,b)}{\partial b}
\]

Basically, for each training sample \((x,y)\), we compare \(y\) with \(\hat{y}\) from the output layer, get the difference, and compute which part of the difference comes from which parameter (via the partial derivative). Then we update the parameters accordingly.

And the derivative of the loss with respect to \(w\) can be calculated using the chain rule:

For each training sample, let \(\hat{y}=a = \sigma(z)\)

\[ \frac{\partial L(a,y)}{\partial w} =
\frac{\partial L(a,y)}{\partial a} \cdot
\frac{\partial a}{\partial z} \cdot
\frac{\partial z}{\partial w}\]

Where

1.\(\frac{\partial L(a,y)}{\partial a} = -\frac{y}{a} + \frac{1-y}{1-a}\)

Given loss function is

\(L(a,y) = -(ylog(a) + (1-y)log(1-a))\)

2.\(\frac{\partial a}{\partial z} = \sigma(z)(1-\sigma(z)) = a(1-a)\).

See above for sigmoid features.

3.\(\frac{\partial z}{\partial w} = x\), since in a 1-layer network the input to the output layer is \(h = x\), so \(z = w^Tx + b\).

Putting them together, we get:

\[ \frac{\partial L(a,y)}{\partial w} = (a-y)x\]
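For readers who want the intermediate step, the three factors multiply out as follows (assuming \(0 < a < 1\) so that the fractions are defined):

\[ \frac{\partial L(a,y)}{\partial w}
= \left(-\frac{y}{a} + \frac{1-y}{1-a}\right) \cdot a(1-a) \cdot x
= \left(-y(1-a) + (1-y)a\right) x
= (a-y)x \]

The same steps with \(\frac{\partial z}{\partial b} = 1\) give \(\frac{\partial L(a,y)}{\partial b} = a-y\).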

This is exactly the update we will have from each training sample \((x,y)\) to the parameter \(w\).
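A common way to gain confidence in a hand-derived gradient is to compare it with finite differences. The sketch below does this for one made-up sample; all names and numbers are illustrative assumptions rather than anything from the post.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """L(a, y) with a = sigmoid(w . x + b)."""
    a = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(a) + (1 - y) * math.log(1.0 - a))

def analytic_grad_w(w, b, x, y):
    """dL/dw = (a - y) * x, the closed form from the chain rule."""
    a = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(a - y) * xi for xi in x]

def numeric_grad_w(w, b, x, y, eps=1e-6):
    """Central finite differences, one coordinate of w at a time."""
    grad = []
    for j in range(len(w)):
        w_plus = list(w)
        w_plus[j] += eps
        w_minus = list(w)
        w_minus[j] -= eps
        grad.append((loss(w_plus, b, x, y) - loss(w_minus, b, x, y)) / (2.0 * eps))
    return grad

# Made-up parameters and a single made-up training sample.
w, b = [0.5, -1.2, 0.3], 0.1
x, y = [1.0, 2.0, -0.5], 1

print(analytic_grad_w(w, b, x, y))
print(numeric_grad_w(w, b, x, y))
```

The two printed vectors should agree to several decimal places.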

5. Entire workflow

Summarizing everything, a 1-layer binary classification neural network is trained as follows:

- Forward propagation: From \(x\), we calculate \(\hat{y}= \sigma(z)\)

- Calculate the cost function \(J(w,b)\)

- Back propagation: update parameter \((w,b)\) using gradient descent.

- Keep doing the above until the cost function stops improving (improvement < a certain threshold)
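The steps above can be sketched in plain Python as follows. This is a minimal illustration, not the course's implementation: the toy data is made up, and the `learning_rate`, `threshold`, and `max_iters` parameters are assumptions added here (the update formulas in this post do not show a step size).

```python
import math

def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def forward(w, b, x):
    """Forward propagation: y_hat = sigmoid(w . x + b)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def cost(w, b, xs, ys, tiny=1e-12):
    """J(w, b): summed cross-entropy loss over all samples."""
    total = 0.0
    for x, y in zip(xs, ys):
        a = min(max(forward(w, b, x), tiny), 1.0 - tiny)  # keep log() finite
        total -= y * math.log(a) + (1 - y) * math.log(1.0 - a)
    return total

def train(xs, ys, learning_rate=0.1, threshold=1e-6, max_iters=10000):
    w, b = [0.0] * len(xs[0]), 0.0
    previous = cost(w, b, xs, ys)
    for _ in range(max_iters):
        # Back propagation: dJ/dw = sum of (a - y) * x, dJ/db = sum of (a - y).
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in zip(xs, ys):
            error = forward(w, b, x) - y
            grad_w = [g + error * xi for g, xi in zip(grad_w, x)]
            grad_b += error
        # Gradient descent update, scaled by the assumed learning rate.
        w = [wi - learning_rate * g for wi, g in zip(w, grad_w)]
        b -= learning_rate * grad_b
        # Stop once the cost improves by less than the threshold.
        current = cost(w, b, xs, ys)
        if previous - current < threshold:
            break
        previous = current
    return w, b

# Toy, made-up data: the label is 1 when x1 + x2 is large.
xs = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [2.0, 2.0], [-1.0, 0.0]]
ys = [0, 0, 0, 1, 1, 0]

w, b = train(xs, ys)
predictions = [1 if forward(w, b, x) > 0.5 else 0 for x in xs]
print(predictions)
```

This version accumulates the gradient over all samples before each update (batch gradient descent); updating after every single sample is an equally valid reading of the per-sample description.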

6. What's next?

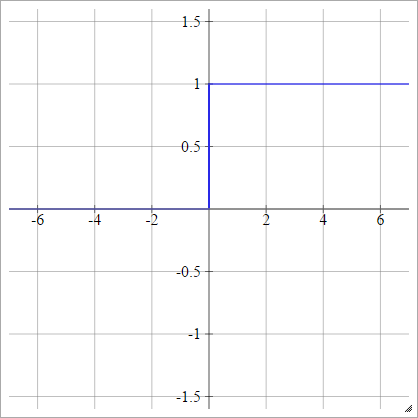

When the NN has more than 1 layer, there will be hidden layers in between. To get a non-linear transformation of x, we also need activation functions for the hidden layers.

However, sigmoid is rarely used as a hidden layer activation function, for the following reasons:

- vanishing gradient

The reason we can't use the clipped linear function (left column below) as the activation function is that its gradient is 0 when \(z>1\) or \(z<0\).

Sigmoid only solves this problem partially, because its gradient still approaches 0 as \(|z|\) grows large.

| \(p(y=1\|x)= max\{0,min\{1,z\}\}\) | \(p(y=1\|x)= \sigma(z)\) |
|---|---|

- not zero-centered
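Both points can be seen with a few numbers. The sketch below (function names are placeholders chosen here) prints the gradient of the clipped linear function and of sigmoid at several values of \(z\), and checks that sigmoid outputs are always positive, which is why they are not centered around zero.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def clipped_linear_grad(z):
    """Gradient of max(0, min(1, z)): 1 inside (0, 1), 0 outside."""
    return 1.0 if 0.0 < z < 1.0 else 0.0

def sigmoid_grad(z):
    """Gradient of sigmoid: sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)

zs = (-10.0, -2.0, 0.5, 2.0, 10.0)
for z in zs:
    # Clipped linear: exactly zero outside (0, 1).
    # Sigmoid: never exactly zero here, but tiny once |z| is large.
    print(z, clipped_linear_grad(z), sigmoid_grad(z))

# Sigmoid outputs lie strictly between 0 and 1, so their mean cannot be zero.
print(all(0.0 < sigmoid(z) < 1.0 for z in zs))
```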

To be continued

Reference

- Ian Goodfellow, Yoshua Bengio, Aaron Courville, "Deep Learning"

- Deeplearning.ai https://www.deeplearning.ai/
