kubeadm Production Cluster Deployment (Part 1): Setting Up an External etcd Cluster

| IP | Hostname | Role |
| --- | --- | --- |
| 172.16.100.251 | nginx01 | apiserver proxy |
| 172.16.100.252 | nginx02 | apiserver proxy |
| 172.16.100.254 | apiserver01.xxx.com | VIP, used to make nginx highly available so the proxy is not interrupted |
| 172.16.100.51 | k8s-etcd-01 | etcd cluster node; unless stated otherwise, all etcd operations are run on this node |
| 172.16.100.52 | k8s-etcd-02 | etcd cluster node |
| 172.16.100.53 | k8s-etcd-03 | etcd cluster node |
| 172.16.100.31 | k8s-master-01 | Master node; unless stated otherwise, all k8s operations are run on this node |
| 172.16.100.32 | k8s-master-02 | Master node |
| 172.16.100.33 | k8s-master-03 | Master node |
| 172.16.100.34 | k8s-master-04 | Master node |
| 172.16.100.35 | k8s-master-05 | Master node |
| 172.16.100.36 | k8s-node-01 | Worker node |
| 172.16.100.37 | k8s-node-02 | Worker node |
| 172.16.100.38 | k8s-node-03 | Worker node |

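Introduction: kubeadm bundles everything needed to deploy Kubernetes. Note that kubeadm only installs and deploys the Kubernetes components themselves. It plays no part in deploying other services. For example, some people assume kubeadm can install nginx. Running workloads like that is the job of Kubernetes itself and has nothing to do with kubeadm. In a real production environment, it is hard to get anything done if we do not understand how each component works, especially troubleshooting and system upgrades.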

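First, etcd can communicate over either HTTP or HTTPS (HTTPS is what kubeadm sets up by default). Because etcd is part of the core infrastructure, production deployments normally use HTTPS for security and encrypt traffic with certificates. This deployment does the same.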

Let's start with certificates:

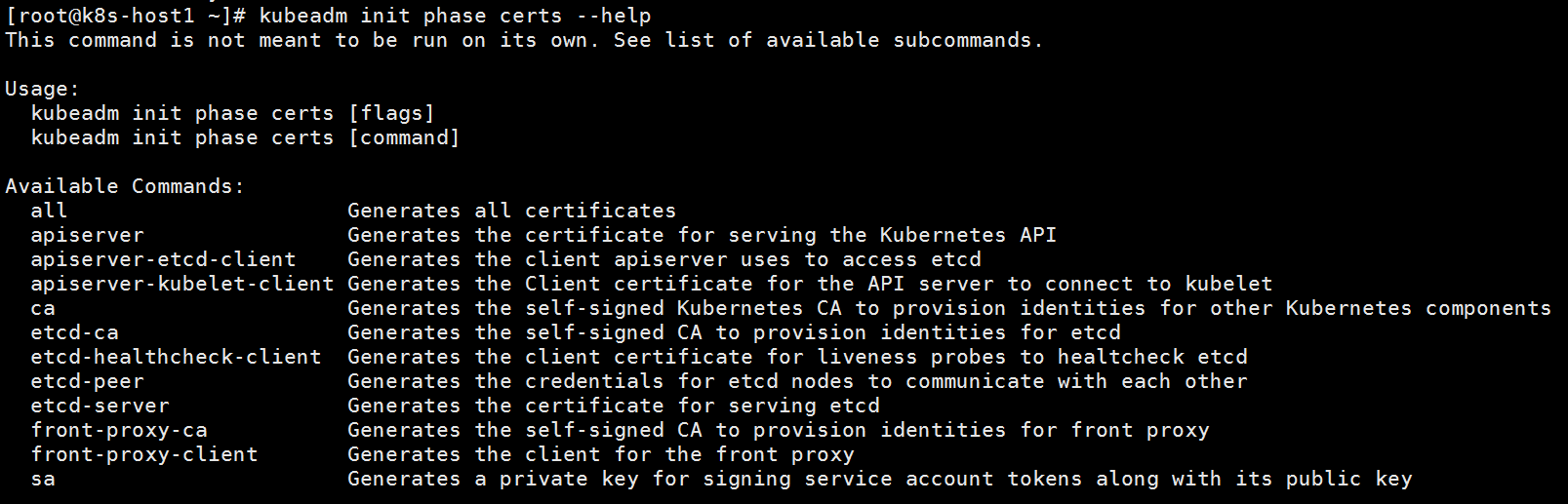

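kubeadm can generate all the certificates that etcd and Kubernetes need. If you want certificates with a longer validity period, the usual approach is to modify the kubeadm source code and recompile it into a binary that you keep for your own use. Here is an article by someone else that explains how: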

Changing the certificate validity period: https://blog.51cto.com/lvsir666/2344986?source=dra
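Whichever kubeadm binary you use, it is worth checking how long the generated certificates actually last. The standard `openssl x509 -noout -enddate -in FILE` command prints a line such as `notAfter=Mar  5 12:00:00 2030 GMT`. The sketch below turns that line into a number of days. `days_until_notafter` is a hypothetical helper, and it assumes GNU `date`, which CentOS 7 provides.

```bash
#!/usr/bin/env bash
# Hypothetical helper: given the output of `openssl x509 -noout -enddate`
# (e.g. "notAfter=Mar  5 12:00:00 2030 GMT"), print the whole days left
# until that date. Relies on GNU date's -d option.
days_until_notafter() {
    local end=${1#notAfter=}
    local end_s now_s
    end_s=$(date -d "$end" +%s) || return 1
    now_s=$(date +%s)
    echo $(( (end_s - now_s) / 86400 ))
}

# Example: check every etcd certificate under kubeadm's default path.
#   for crt in /etc/kubernetes/pki/etcd/*.crt; do
#       echo "$crt: $(days_until_notafter "$(openssl x509 -noout -enddate -in "$crt")") days left"
#   done
```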

The kubeadm command for creating certificates:

kubeadm init phase certs --help
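For etcd, the relevant phases are `etcd-ca`, `etcd-server`, `etcd-peer`, `etcd-healthcheck-client` and `apiserver-etcd-client`, which are the same phases `start.sh` runs later. Before copying `/etc/kubernetes/pki` to other nodes, it helps to confirm that every expected file exists. The sketch below assumes kubeadm's default file layout under `/etc/kubernetes/pki`. `check_etcd_pki` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report any missing or empty etcd-related certificate
# or key under a kubeadm-style PKI directory. Returns 1 if anything is missing.
check_etcd_pki() {
    local pki_dir=${1:-/etc/kubernetes/pki}
    local missing=0 f
    for f in \
        etcd/ca.crt etcd/ca.key \
        etcd/server.crt etcd/server.key \
        etcd/peer.crt etcd/peer.key \
        etcd/healthcheck-client.crt etcd/healthcheck-client.key \
        apiserver-etcd-client.crt apiserver-etcd-client.key
    do
        # -s: the file exists and is not empty
        if [ ! -s "$pki_dir/$f" ]; then
            echo "missing: $pki_dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}
```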

vim system_initializer.sh

```bash
#!/usr/bin/env bash

# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Turn off swap now and after reboot
swapoff -a
sed -i 's/.*swap.*/#&/' /etc/fstab

# Disable SELinux
setenforce 0
sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux
sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux
sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config

# Kernel parameters (same values as in base_env_etcd_cluster_init.sh)
cat <<EOF > /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
fs.may_detach_mounts = 1
vm.overcommit_memory=1
vm.panic_on_oom=0
vm.swappiness = 0
fs.inotify.max_user_watches=89100
fs.file-max=52706963
fs.nr_open=52706963
net.netfilter.nf_conntrack_max=2310720
EOF
# The net.bridge.* keys only exist once br_netfilter is loaded
modprobe br_netfilter
sysctl --system

yum install ipvsadm ipset sysstat conntrack libseccomp wget -y

# Load the IPVS modules at boot (only those available on this kernel)
:> /etc/modules-load.d/ipvs.conf
module=(
    ip_vs
    ip_vs_lc
    ip_vs_wlc
    ip_vs_rr
    ip_vs_wrr
    ip_vs_lblc
    ip_vs_lblcr
    ip_vs_dh
    ip_vs_sh
    ip_vs_fo
    ip_vs_nq
    ip_vs_sed
    ip_vs_ftp
    nf_conntrack_ipv4
)
for kernel_module in "${module[@]}"; do
    /sbin/modinfo -F filename "$kernel_module" |& grep -qv ERROR && echo "$kernel_module" >> /etc/modules-load.d/ipvs.conf || :
done
systemctl enable --now systemd-modules-load.service

# Switch to the Aliyun mirrors
mkdir -p /etc/yum.repos.d/bak
mv /etc/yum.repos.d/CentOS* /etc/yum.repos.d/bak
wget -P /etc/yum.repos.d/ http://mirrors.aliyun.com/repo/Centos-7.repo
wget -P /etc/yum.repos.d/ http://mirrors.aliyun.com/repo/epel-7.repo
wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum clean all

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Raise file, process and memlock limits
echo "* soft nofile 65536" >> /etc/security/limits.conf
echo "* hard nofile 65536" >> /etc/security/limits.conf
echo "* soft nproc 65536" >> /etc/security/limits.conf
echo "* hard nproc 65536" >> /etc/security/limits.conf
echo "* soft memlock unlimited" >> /etc/security/limits.conf
echo "* hard memlock unlimited" >> /etc/security/limits.conf

# Install the k8s components (clean up any previous installation first;
# -f skips reset's confirmation prompt, which would otherwise read from stdin)
kubeadm reset -f
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
ipvsadm --clear
yum remove -y 'kubelet*'
yum remove -y 'kubectl*'
yum remove -y 'docker-ce*'
mkdir -p /data/kubelet
ln -s /data/kubelet /var/lib/kubelet
yum update -y && yum install -y kubeadm-1.13.* kubelet-1.13.* kubectl-1.13.* kubernetes-cni-0.6* --disableexcludes=kubernetes # replace kubeadm

# Install tools
yum install chrony vim net-tools -y

## Allow the cluster to mount NFS
yum -y install nfs-utils && yum -y install rpcbind

# Install ntp for time synchronization
yum install -y ntp
# NOTE: a crontab line must start with five time fields (minute hour day
# month weekday); prepend the schedule you want to this entry.
echo "/usr/sbin/ntpdate cn.ntp.org.cn edu.ntp.org.cn > /dev/null 2>&1" >> /var/spool/cron/root

# Install docker, using a version recommended by the official k8s docs
# https://kubernetes.io/docs/setup/cri/
yum install yum-utils -y
yum install device-mapper-persistent-data lvm2 -y
yum-config-manager \
    --add-repo \
    http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum install docker-ce-18.06.2.ce -y

mkdir -p /etc/docker
cat >/etc/docker/daemon.json<<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://fz5yth0r.mirror.aliyuncs.com"],
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "1000m",
    "max-file": "50"
  }
}
EOF

# docker bash completion
yum install -y epel-release && cp /usr/share/bash-completion/completions/docker /etc/bash_completion.d/
yum install -y bash-completion

systemctl enable --now docker.service
systemctl enable --now kubelet.service
systemctl start kubelet
systemctl start docker
systemctl enable chronyd.service
systemctl start chronyd.service

# kubectl bash completion
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc

# kubectl taint node k8s-host1 node-role.kubernetes.io/master=:NoSchedule
```
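A value missing from a heredoc like the `k8s.conf` one above is easy to overlook. The sketch below checks the file after the script has run. `check_sysctl_conf` is a hypothetical helper. It checks only a subset of keys: the expected values are taken from the `k8s.conf` written in these scripts, limited to forwarding, bridge netfilter and swappiness.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that a sysctl.d file sets each required key to
# the expected value. Spaces around "=" are ignored; if a key appears more
# than once, the last occurrence is used.
check_sysctl_conf() {
    local conf=${1:-/etc/sysctl.d/k8s.conf}
    local rc=0 pair key want got
    for pair in \
        net.ipv4.ip_forward=1 \
        net.bridge.bridge-nf-call-iptables=1 \
        net.bridge.bridge-nf-call-ip6tables=1 \
        vm.swappiness=0
    do
        key=${pair%%=*}
        want=${pair#*=}
        # Strip blanks, split on "=", remember the last value seen for $key.
        got=$(awk -F= -v k="$key" '{ gsub(/[ \t]/, "") } $1 == k { v = $2 } END { print v }' "$conf" 2>/dev/null)
        if [ "$got" != "$want" ]; then
            echo "$key: expected '$want', found '${got:-<unset>}'" >&2
            rc=1
        fi
    done
    return "$rc"
}
```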

vim base_env_etcd_cluster_init.sh

```bash
#!/usr/bin/env bash

export HOST0=172.16.100.51
export HOST1=172.16.100.52
export HOST2=172.16.100.53

ETCDHOSTS=(${HOST0} ${HOST1} ${HOST2})
NAMES=("k8s-etcd-01" "k8s-etcd-02" "k8s-etcd-03")

# Add the etcd hosts to /etc/hosts
sed -i '$a\'$HOST0' k8s-etcd-01' /etc/hosts
sed -i '$a\'$HOST1' k8s-etcd-02' /etc/hosts
sed -i '$a\'$HOST2' k8s-etcd-03' /etc/hosts

# Let kubelet run only the etcd static pod on this node
mkdir -p /etc/systemd/system/kubelet.service.d/
cat << EOF > /etc/systemd/system/kubelet.service.d/20-etcd-service-manager.conf
[Service]
ExecStart=
ExecStart=/usr/bin/kubelet --address=127.0.0.1 --pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true --cgroup-driver=systemd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.1
Restart=always
EOF

# hostnamectl set-hostname

# Disable the firewall, swap and SELinux
systemctl stop firewalld
systemctl disable firewalld
swapoff -a
sed -i 's/.*swap.*/#&/' /etc/fstab
setenforce 0
sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux
sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux
sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config

# Kernel parameters
cat <<EOF > /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
fs.may_detach_mounts = 1
vm.overcommit_memory=1
vm.panic_on_oom=0
vm.swappiness = 0
fs.inotify.max_user_watches=89100
fs.file-max=52706963
fs.nr_open=52706963
net.netfilter.nf_conntrack_max=2310720
EOF
# The net.bridge.* keys only exist once br_netfilter is loaded
modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf

# Package repositories
wget -P /etc/yum.repos.d/ http://mirrors.aliyun.com/repo/epel-7.repo
wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Raise file, process and memlock limits
echo "* soft nofile 65536" >> /etc/security/limits.conf
echo "* hard nofile 65536" >> /etc/security/limits.conf
echo "* soft nproc 65536" >> /etc/security/limits.conf
echo "* hard nproc 65536" >> /etc/security/limits.conf
echo "* soft memlock unlimited" >> /etc/security/limits.conf
echo "* hard memlock unlimited" >> /etc/security/limits.conf

yum install ipvsadm ipset sysstat conntrack libseccomp wget -y
yum update -y
yum install -y kubeadm-1.13.5* kubelet-1.13.5* kubectl-1.13.5* kubernetes-cni-0.6* --disableexcludes=kubernetes

# Install docker
yum-config-manager \
    --add-repo \
    http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum install docker-ce-18.06.2.ce -y

mkdir -p /etc/docker
cat >/etc/docker/daemon.json<<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "1000m",
    "max-file": "50"
  }
}
EOF

# Keep docker data under /data/docker (--graph is the older name of --data-root)
mkdir -p /data/docker
sed -i 's/ExecStart=\/usr\/bin\/dockerd/ExecStart=\/usr\/bin\/dockerd --graph=\/data\/docker/g' /usr/lib/systemd/system/docker.service
# Reload unit files so the docker.service edit and the kubelet drop-in take effect
systemctl daemon-reload
systemctl start docker

# docker bash completion
yum install -y epel-release && cp /usr/share/bash-completion/completions/docker /etc/bash_completion.d/
yum install -y bash-completion
source /usr/share/bash-completion/bash_completion

docker pull registry.aliyuncs.com/google_containers/etcd:3.2.24

systemctl enable --now docker
systemctl enable --now kubelet
```
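The `sed -i '$a\...'` lines above append to `/etc/hosts` every time the script runs, so running it again leaves duplicate entries. The sketch below is an idempotent alternative. `add_host_entry` is a hypothetical helper. It takes the target file as a parameter so you can try it on a copy first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless that
# hostname is already mapped on a non-comment line.
add_host_entry() {
    local ip=$1 name=$2 file=${3:-/etc/hosts}
    # awk exits 0 if the hostname appears as a field after the address column
    # (comment lines are skipped), and 1 otherwise.
    if awk -v n="$name" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example, equivalent to the three sed lines above:
#   add_host_entry "$HOST0" k8s-etcd-01
#   add_host_entry "$HOST1" k8s-etcd-02
#   add_host_entry "$HOST2" k8s-etcd-03
```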

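With that, it should be clear at a glance which certificates etcd needs. Next we create them. Before creating the certificates, we need to generate the etcd initialization (kubeadm config) files, which we get by running base_env_etcd_cluster_init.sh.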
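The `base_env_etcd_cluster_init.sh` listed above defines `ETCDHOSTS` and `NAMES`. However, it contains no step that writes the `/tmp/${HOST}/kubeadmcfg.yaml` files that `start.sh` passes to `--config`. The copy fetched from gitee in `start.sh` may differ. The official "Set up a High Availability etcd cluster with kubeadm" guide (first reference below) generates these files with a per-host loop. The sketch below is adapted from that guide and assumes kubeadm 1.13, whose config API is `kubeadm.k8s.io/v1beta1`. The function name `write_etcd_kubeadmcfg` and its output-directory argument are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper adapted from the official kubeadm HA etcd guide.
# Usage: write_etcd_kubeadmcfg OUT_DIR NAME1 IP1 [NAME2 IP2 ...]
# Writes OUT_DIR/<IP>/kubeadmcfg.yaml for every member.
write_etcd_kubeadmcfg() {
    local out_dir=$1 cluster="" name="" arg
    shift
    if [ $# -eq 0 ] || [ $(($# % 2)) -ne 0 ]; then
        echo "usage: write_etcd_kubeadmcfg OUT_DIR NAME IP [NAME IP ...]" >&2
        return 1
    fi
    # Pass 1: build "name1=https://ip1:2380,name2=https://ip2:2380,..."
    for arg in "$@"; do
        if [ -z "$name" ]; then
            name=$arg
        else
            cluster="${cluster:+$cluster,}${name}=https://${arg}:2380"
            name=""
        fi
    done
    # Pass 2: write one config per member ($arg holds the member's IP here).
    for arg in "$@"; do
        if [ -z "$name" ]; then
            name=$arg
            continue
        fi
        mkdir -p "$out_dir/$arg" || return 1
        cat > "$out_dir/$arg/kubeadmcfg.yaml" <<EOF
apiVersion: "kubeadm.k8s.io/v1beta1"
kind: ClusterConfiguration
etcd:
  local:
    serverCertSANs:
    - "${arg}"
    peerCertSANs:
    - "${arg}"
    extraArgs:
      initial-cluster: ${cluster}
      initial-cluster-state: new
      name: ${name}
      listen-peer-urls: https://${arg}:2380
      listen-client-urls: https://${arg}:2379
      advertise-client-urls: https://${arg}:2379
      initial-advertise-peer-urls: https://${arg}:2380
EOF
        name=""
    done
}

# Produces the per-host files start.sh expects:
#   write_etcd_kubeadmcfg /tmp k8s-etcd-01 "$HOST0" k8s-etcd-02 "$HOST1" k8s-etcd-03 "$HOST2"
```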

vim start.sh (change the IPs to match your hosts)

```bash
#!/usr/bin/env bash

# ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.0.104

## References
# HA etcd with kubeadm
# https://kubernetes.io/docs/setup/independent/setup-ha-etcd-with-kubeadm/
# Masters using an external etcd cluster
# https://kubernetes.io/docs/setup/independent/high-availability/

export HOST0=172.16.100.51
export HOST1=172.16.100.52
export HOST2=172.16.100.53

yum install -y wget

# Initialize the kubeadm config
mkdir -p /data/etcd
curl -s https://gitee.com/hewei8520/File/raw/master/1.13.5/initializer_etcd_cluster/system_initializer.sh | bash
curl -s https://gitee.com/hewei8520/File/raw/master/1.13.5/initializer_etcd_cluster/base_env_etcd_cluster_init.sh | bash

# Download the kubeadm binary used below to generate the certificates
wget https://github.com/qq676596084/QuickDeploy/raw/master/1.13.5/bin/kubeadm && chmod +x kubeadm

# Generate the etcd CA and certificates on HOST0
./kubeadm init phase certs etcd-ca
./kubeadm init phase certs etcd-server --config=/tmp/${HOST0}/kubeadmcfg.yaml
./kubeadm init phase certs etcd-peer --config=/tmp/${HOST0}/kubeadmcfg.yaml
./kubeadm init phase certs etcd-healthcheck-client --config=/tmp/${HOST0}/kubeadmcfg.yaml
./kubeadm init phase certs apiserver-etcd-client --config=/tmp/${HOST0}/kubeadmcfg.yaml

# Start the etcd static pod on HOST0
systemctl restart kubelet
sleep 3
kubeadm init phase etcd local --config=/tmp/${HOST0}/kubeadmcfg.yaml

USER=root
for HOST in ${HOST1} ${HOST2}
do
    scp -r /tmp/${HOST}/* ${USER}@${HOST}:
    ssh ${USER}@${HOST} 'yum install -y wget'
    ssh ${USER}@${HOST} 'mkdir -p /etc/kubernetes/'
    # NOTE: this also copies the etcd-server/etcd-peer certificates issued for
    # HOST0's address; the official guide (first link above) generates those
    # certificates separately for each host instead.
    scp -r /etc/kubernetes/pki ${USER}@${HOST}:/etc/kubernetes/
    # Initialize the system, install dependencies and docker
    ssh ${USER}@${HOST} 'curl -s https://gitee.com/hewei8520/File/raw/master/1.13.5/initializer_etcd_cluster/system_initializer.sh | bash'
    ssh ${USER}@${HOST} 'systemctl restart kubelet'
    sleep 3
    ssh ${USER}@${HOST} 'kubeadm init phase etcd local --config=/root/kubeadmcfg.yaml'
done

sleep 5

# Check cluster health with the etcdctl bundled in the etcd image (v2 API flags)
docker run --rm -it \
    --net host \
    -v /etc/kubernetes:/etc/kubernetes registry.aliyuncs.com/google_containers/etcd:3.2.24 etcdctl \
    --cert-file /etc/kubernetes/pki/etcd/peer.crt \
    --key-file /etc/kubernetes/pki/etcd/peer.key \
    --ca-file /etc/kubernetes/pki/etcd/ca.crt \
    --endpoints https://${HOST0}:2379 cluster-health
```

Then wait patiently for all members to come up.
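Instead of guessing how long to wait, you can retry the health check until it passes. The sketch below adds a generic retry loop. `retry` is a hypothetical helper, and the attempt count and delay in the commented example are arbitrary.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command up to ATTEMPTS times, sleeping DELAY
# seconds between failures. Returns 0 on the first success, 1 otherwise.
# Usage: retry ATTEMPTS DELAY COMMAND [ARGS...]
retry() {
    local attempts=$1 delay=$2 i=1
    shift 2
    while [ "$i" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        echo "attempt $i/$attempts failed: $*" >&2
        if [ "$i" -lt "$attempts" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    return 1
}

# Example: repeat the cluster-health check from start.sh (without -it, so it
# also works when no terminal is attached):
#   retry 10 6 docker run --rm --net host \
#       -v /etc/kubernetes:/etc/kubernetes \
#       registry.aliyuncs.com/google_containers/etcd:3.2.24 etcdctl \
#       --cert-file /etc/kubernetes/pki/etcd/peer.crt \
#       --key-file /etc/kubernetes/pki/etcd/peer.key \
#       --ca-file /etc/kubernetes/pki/etcd/ca.crt \
#       --endpoints "https://${HOST0}:2379" cluster-health
```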

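Note:

If you run `kubeadm reset` while initializing k8s, you should also reset the etcd cluster manually. `kubeadm reset` does not delete any data in an external etcd cluster, so the old cluster state would otherwise survive. Delete the etcd data directory on each member and restart the kubelet service by hand. Once etcd is available again, you are done.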
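The sketch below performs that reset on one member. It assumes the data directory is kubeadm's default `/var/lib/etcd`; adjust the path if your `kubeadmcfg.yaml` sets a different `dataDir`. `wipe_etcd_data` is a hypothetical helper. It only empties the directory and leaves the directory itself in place; restarting kubelet is up to you.

```bash
#!/usr/bin/env bash
# Hypothetical helper: delete everything inside an etcd data directory
# (normally the "member" subdirectory) while keeping the directory itself.
wipe_etcd_data() {
    local dir=${1:-/var/lib/etcd}
    # Refuse obviously dangerous targets.
    case "$dir" in
        /|/etc|/var|/usr|/root|/data)
            echo "refusing to wipe '$dir'" >&2
            return 1 ;;
    esac
    if [ ! -d "$dir" ]; then
        echo "not a directory: $dir" >&2
        return 1
    fi
    # Remove every top-level entry, including hidden ones.
    find "$dir" -mindepth 1 -maxdepth 1 -exec rm -rf -- {} +
}

# Example, on each etcd node:
#   wipe_etcd_data /var/lib/etcd && systemctl restart kubelet
```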

References:

https://kubernetes.io/docs/setup/independent/setup-ha-etcd-with-kubeadm/

https://kubernetes.io/docs/setup/independent/high-availability/
