Hadoop Ecosystem - Hive Quick Start: Basic HQL Syntax


Author: 尹正杰 (Yin Zhengjie)

Copyright notice: original work. Reproduction is not permitted; violations will be pursued legally.

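This post covers Hive's common data types, DDL (data definition), DML (data manipulation) and everyday query operations. If you do not have a Hive environment yet, see my earlier notes on installing Hive: https://www.cnblogs.com/yinzhengjie/p/9154324.html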

I. Common Hive property settings

1>.Configuring the Hive data warehouse location

>.By default, the data warehouse is located under "hdfs:/user/hive/warehouse/".

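>.No separate folder is created for the built-in default database under the warehouse directory. A table that belongs to the default database gets its folder directly under the warehouse directory itself (that is, at the warehouse root), while tables in other databases go under a <database>.db subfolder.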

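>.To change the default warehouse location, copy the following property from the default configuration file "hive-default.xml.template" into hive-site.xml and set its value to the new path: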

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

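2>.Showing the current database and query column headers

Add the following properties to hive-site.xml to show the current database in the prompt and print column headers in query output. Restart the Hive client afterwards for them to take effect.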

<property>

<name>hive.cli.print.header</name>

<value>true</value>

<description>Whether to print the names of the columns in query output.</description>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

<description>Whether to include the current database in the Hive prompt.</description>

</property>

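After restarting the Hive client with these settings, two things change: query results include column headers, and the prompt shows the database you are currently in (for example hive (yinzhengjie)>).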

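3>.Configuring Hive runtime logs

>.By default Hive writes its log to "/tmp/<current user>/hive.log" (for example "/tmp/atguigu/hive.log" for the user atguigu).

>.To store the log in "/home/yinzhengjie/hive/logs" instead, edit hive-log4j2.properties as follows: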

[yinzhengjie@s101 ~]$ cd /soft/hive/conf/

[yinzhengjie@s101 conf]$

[yinzhengjie@s101 conf]$ cp hive-log4j2.properties.template hive-log4j2.properties #copy the template to create the configuration file

[yinzhengjie@s101 conf]$ grep property.hive.log.dir hive-log4j2.properties | grep -v ^#

property.hive.log.dir = /home/yinzhengjie/hive/logs #log directory (annotation only; in the real file keep no trailing comment on this line)

[yinzhengjie@s101 conf]$

[yinzhengjie@s101 conf]$ ll /home/yinzhengjie/hive/logs/hive.log

-rw-rw-r-- yinzhengjie yinzhengjie Aug : /home/yinzhengjie/hive/logs/hive.log #restart Hive, then the log file appears here

[yinzhengjie@s101 conf]$
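Instead of opening hive-log4j2.properties in an editor, the property.hive.log.dir line can be rewritten non-interactively. A minimal sketch, assuming GNU sed (for `-i`) and that the key sits at the start of its own line in the `key = value` form shown by the grep above; set_log_dir is a hypothetical helper name, not part of Hive.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point property.hive.log.dir in a log4j2 properties file at a new directory.
# Assumes GNU sed and a line of the form "property.hive.log.dir = <value>".
set_log_dir() {
  local file=$1 dir=$2
  # '#' is the sed delimiter because the directory contains '/'.
  sed -i "s#^property\.hive\.log\.dir[[:space:]]*=.*#property.hive.log.dir = ${dir}#" "$file"
}

# Usage, from the Hive conf directory:
#   cp hive-log4j2.properties.template hive-log4j2.properties
#   set_log_dir hive-log4j2.properties /home/yinzhengjie/hive/logs
```

A directory name containing `#` or `&` would need escaping before being passed in.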

4>.Viewing and setting parameters

>.To list all current settings: hive (yinzhengjie)> set;

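Configuration files:

Default configuration file: hive-default.xml

User configuration file: hive-site.xml

Note: user settings override the defaults. Hive also reads the Hadoop configuration, because Hive runs as a Hadoop client, and Hive's settings override Hadoop's. Settings in the configuration files apply to every Hive process started on this machine.

>.The three ways to set parameters, and their precedence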

Declaring a parameter when launching the CLI:

[yinzhengjie@s101 ~]$ hive -hiveconf mapred.reduce.tasks=

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. Logging initialized using configuration in file:/soft/apache-hive-2.1.-bin/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive .X releases.

hive (default)> set mapred.reduce.tasks;

mapred.reduce.tasks=

hive (default)> quit;

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. Logging initialized using configuration in file:/soft/apache-hive-2.1.-bin/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive .X releases.

hive (default)> set mapred.reduce.tasks;

mapred.reduce.tasks=-

hive (default)> exit;

[yinzhengjie@s101 ~]$

Declaring a parameter after the CLI has started:

[yinzhengjie@s101 ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. Logging initialized using configuration in file:/soft/apache-hive-2.1.-bin/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive .X releases.

hive (default)> set mapred.reduce.tasks;

mapred.reduce.tasks=-

hive (default)> set mapred.reduce.tasks=;

hive (default)> set mapred.reduce.tasks;

mapred.reduce.tasks=

hive (default)> quit;

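[yinzhengjie@s101 ~]$

A note on precedence:

The three methods take effect in increasing order of priority: "configuration file" < "on the command line at launch" < "inside the running session". Some system-level parameters, such as the log4j settings, must be set with one of the first two methods, because they are read before the session is established.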
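The precedence rule boils down to "the latest layer that sets a value wins". The sketch below is only a model of that rule, not Hive code: resolve_param is a hypothetical name, each argument stands for the value one layer supplies (an empty string meaning "not set there"), and the numbers are illustrative.

```shell
#!/usr/bin/env bash
# Model of the precedence: config file < --hiveconf at launch < SET inside the session.
# An empty argument means that layer did not set the parameter.
resolve_param() {
  local site_file=$1 launch_flag=$2 session_set=$3
  local value=$site_file
  [[ -n $launch_flag ]] && value=$launch_flag   # --hiveconf overrides the config files
  [[ -n $session_set ]] && value=$session_set   # SET in the CLI overrides both
  echo "$value"
}

resolve_param 3 "" ""    # prints 3  (only the config files set it)
resolve_param 3 10 ""    # prints 10 (launched with --hiveconf)
resolve_param 3 10 5     # prints 5  (then SET inside the session)
```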

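II. Hive data types

1>.Primitive data types

Hive's STRING type corresponds to VARCHAR in a relational database: it is a variable-length string, but you cannot declare a maximum number of characters. In theory it can hold up to 2 GB of characters.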

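| Hive data type | Java data type | Size / description | Example |
|---|---|---|---|
| TINYINT | byte | 1-byte signed integer | 20 |
| SMALLINT | short | 2-byte signed integer | 20 |
| INT | int | 4-byte signed integer | 20 |
| BIGINT | long | 8-byte signed integer | 20 |
| BOOLEAN | boolean | Boolean, true or false | TRUE FALSE |
| FLOAT | float | Single-precision floating point | 3.14159 |
| DOUBLE | double | Double-precision floating point | 3.14159 |
| STRING | String | Character sequence; a character set can be specified; single or double quotes may be used | 'now is the time' "for all good men" |
| TIMESTAMP | | Time type | |
| BINARY | | Byte array | |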
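The byte widths in the table fix each integer type's range: an n-byte signed integer holds -2^(8n-1) through 2^(8n-1)-1. This matters for the conversion rules below, since narrowing an INT into a TINYINT can overflow. A small sketch; int_range and fits_in are hypothetical helper names, and because bash arithmetic is itself 64-bit signed, the check only works for values bash can represent.

```shell
#!/usr/bin/env bash
# Print "min max" for Hive's signed integer types (1, 2, 4 and 8 bytes).
int_range() {
  case "${1^^}" in
    TINYINT)  echo "-128 127" ;;
    SMALLINT) echo "-32768 32767" ;;
    INT)      echo "-2147483648 2147483647" ;;
    # Written as an expression: 9223372036854775808 alone overflows 64-bit arithmetic.
    BIGINT)   echo "$(( -9223372036854775807 - 1 )) 9223372036854775807" ;;
    *)        return 1 ;;
  esac
}

# Exit status 0 if VALUE fits in TYPE without overflow.
fits_in() {
  local type=$1 value=$2 range min max
  range=$(int_range "$type") || return 1   # unknown type: fail
  read -r min max <<< "$range"
  (( value >= min && value <= max ))
}

fits_in TINYINT 127 && echo "127 fits in TINYINT"
fits_in TINYINT 200 || echo "200 does not fit in TINYINT"
```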

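2>.Collection data types

Hive has three complex data types: ARRAY, MAP and STRUCT. ARRAY and MAP are similar to Java's arrays and Maps, while STRUCT is similar to a C struct: it wraps a set of named fields. Complex types can be nested to any depth.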

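| Data type | Description | Syntax example |
|---|---|---|
| STRUCT | Like a C struct; elements are accessed with "dot" notation. For example, if a column's type is STRUCT<first:STRING, last:STRING>, its first element is referenced as column.first. | struct() |
| MAP | A collection of key-value pairs, accessed with array notation. For example, if a column is a MAP whose key->value pairs are 'first'->'John' and 'last'->'Doe', the last element is retrieved with column['last']. | map() |
| ARRAY | An ordered collection of elements of the same type. Elements are numbered from zero; for example, for the array value ['John', 'Doe'], the second element is referenced as column[1]. | array() |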

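3>.Type conversion

Hive's primitive types support implicit conversion, much like Java's widening conversions. For example, if an expression expects an INT, a TINYINT is automatically converted to INT. Hive does not convert in the other direction: if an expression expects a TINYINT, an INT is not automatically narrowed to TINYINT, and an error is returned unless you use CAST. The implicit conversion rules are:

First: any integer type can be implicitly converted to a wider integer type, e.g. TINYINT to INT, or INT to BIGINT.

Second: all integer types, FLOAT and STRING can be implicitly converted to DOUBLE.

Third: TINYINT, SMALLINT and INT can all be converted to FLOAT.

Fourth: BOOLEAN cannot be converted to any other type.

Tip: use CAST to convert types explicitly. For example, CAST('1' AS INT) converts the string '1' to the integer 1; if the cast fails, as in CAST('X' AS INT), the expression returns NULL.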
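The four rules can be written down as a small decision function. This is a sketch of only the rules listed above; Hive's complete conversion matrix (DECIMAL, TIMESTAMP, VARCHAR and others) is not modelled, and can_implicitly_convert is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch of ONLY the four implicit-conversion rules listed above.

# Rank integer types by width; 0 means "not an integer type".
int_rank() {
  case "$1" in
    TINYINT)  echo 1 ;;
    SMALLINT) echo 2 ;;
    INT)      echo 3 ;;
    BIGINT)   echo 4 ;;
    *)        echo 0 ;;
  esac
}

# Exit status 0 if SRC converts implicitly to DST under the rules, 1 otherwise.
can_implicitly_convert() {
  local src=${1^^} dst=${2^^}
  local src_rank dst_rank
  src_rank=$(int_rank "$src")
  dst_rank=$(int_rank "$dst")

  [[ $src == "$dst" ]] && return 0        # same type: nothing to convert
  [[ $src == BOOLEAN ]] && return 1       # rule 4: BOOLEAN converts to nothing
  if (( src_rank > 0 && dst_rank > 0 )); then
    (( src_rank < dst_rank ))             # rule 1: integers only widen
    return
  fi
  if [[ $dst == DOUBLE ]]; then           # rule 2: integers, FLOAT, STRING -> DOUBLE
    [[ $src_rank -gt 0 || $src == FLOAT || $src == STRING ]]
    return
  fi
  if [[ $dst == FLOAT ]]; then            # rule 3: TINYINT, SMALLINT, INT -> FLOAT
    (( src_rank >= 1 && src_rank <= 3 ))
    return
  fi
  return 1
}

for pair in "TINYINT INT" "INT TINYINT" "STRING DOUBLE" "BOOLEAN STRING"; do
  read -r a b <<< "$pair"
  if can_implicitly_convert "$a" "$b"; then
    echo "$a -> $b: implicit"
  else
    echo "$a -> $b: needs an explicit CAST"
  fi
done
```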

4>.Hands-on example

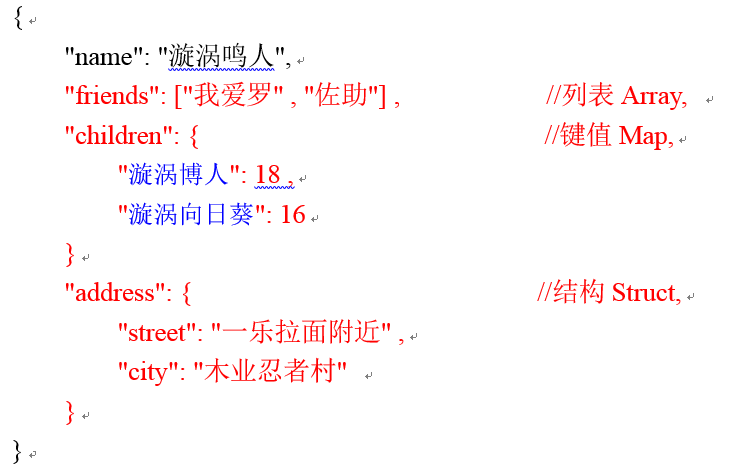

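Suppose a table has a row like the one above, with its structure represented as JSON. In Hive, its fields are accessed as follows:

Based on this structure, we create a matching table in Hive and load data into it. Create a local test file test.txt with the following content. (Note: elements inside MAP, STRUCT and ARRAY can all be separated by the same character; here "_" is used.)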

[yinzhengjie@s101 download]$ cat /home/yinzhengjie/download/test.txt

漩涡鸣人,我爱罗_佐助,漩涡博人:18_漩涡向日葵:,一乐拉面附近_木业忍者村

宇智波富岳,宇智波美琴_志村团藏,宇智波鼬:28_宇智波佐助:,木叶警务部_木业忍者村

[yinzhengjie@s101 download]$

Hive上创建测试表test,如下:

create table test(

name string,

friends array<string>,

children map<string, int>,

address struct<street:string, city:string>

)

row format delimited fields terminated by ','

collection items terminated by '_'

map keys terminated by ':'

lines terminated by '\n';
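To see how the three delimiters in this ROW FORMAT clause carve up one line, the sketch below splits a line the same way. It is an illustration, not Hive's SerDe: it ignores escaping, the "\N" NULL marker and deeper nesting, all of which Hive's default LazySimpleSerDe handles, and the sample row is hypothetical ASCII data rather than a line of test.txt. (Without a ROW FORMAT clause, Hive's defaults are \001 between fields, \002 between collection items and \003 between map keys and values.)

```shell
#!/usr/bin/env bash
# Sketch: split one line as "fields ','  items '_'  map keys ':'" describes.
parse_row() {
  local name friends children address
  # fields terminated by ',' : the four top-level columns
  IFS=',' read -r name friends children address <<< "$1"

  local -a friend_list child_list addr_parts
  # collection items terminated by '_' : items of the ARRAY, MAP and STRUCT columns
  IFS='_' read -r -a friend_list <<< "$friends"
  IFS='_' read -r -a child_list <<< "$children"
  IFS='_' read -r -a addr_parts <<< "$address"

  echo "name=$name"
  local i entry
  for i in "${!friend_list[@]}"; do
    echo "friends[$i]=${friend_list[$i]}"
  done
  for entry in "${child_list[@]}"; do
    # map keys terminated by ':' : split each MAP item into key and value
    echo "children['${entry%%:*}']=${entry#*:}"
  done
  # STRUCT fields are positional: street first, then city
  echo "address.street=${addr_parts[0]}"
  echo "address.city=${addr_parts[1]}"
}

parse_row 'alice,bob_carol,dave:18_erin:16,main st_springfield'
```

The printed names (friends[1], children['erin'], address.city) mirror the HQL access syntax used in the queries that follow.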

Load the text file into the test table:

hive (yinzhengjie)> load data local inpath '/home/yinzhengjie/download/test.txt' into table test;

Loading data to table yinzhengjie.test

OK

Time taken: 0.335 seconds

hive (yinzhengjie)> select * from test;

OK

test.name test.friends test.children test.address

漩涡鸣人 ["我爱罗","佐助"] {"漩涡博人":,"漩涡向日葵":} {"street":"一乐拉面附近","city":"木业忍者村"}

宇智波富岳 ["宇智波美琴","志村团藏"] {"宇智波鼬":,"宇智波佐助":} {"street":"木叶警务部","city":"木业忍者村"}

Time taken: 0.099 seconds, Fetched: row(s)

hive (yinzhengjie)>

Access the data in the three collection columns; the queries below show ARRAY, MAP and STRUCT access respectively:

hive (yinzhengjie)> select * from test;

OK

test.name test.friends test.children test.address

漩涡鸣人 ["我爱罗","佐助"] {"漩涡博人":,"漩涡向日葵":} {"street":"一乐拉面附近","city":"木业忍者村"}

宇智波富岳 ["宇智波美琴","志村团藏"] {"宇智波鼬":,"宇智波佐助":} {"street":"木叶警务部","city":"木业忍者村"}

Time taken: 0.085 seconds, Fetched: row(s)

hive (yinzhengjie)> select friends[0],children['漩涡博人'],address.city from test where name="漩涡鸣人";

OK

_c0 _c1 city

我爱罗 木业忍者村

Time taken: 0.096 seconds, Fetched: row(s)

hive (yinzhengjie)> select friends[1],children['漩涡向日葵'],address.city from test where name="漩涡鸣人";

OK

_c0 _c1 city

佐助 木业忍者村

Time taken: 0.1 seconds, Fetched: row(s)

hive (yinzhengjie)>

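III. Common Hive commands (HQL) in practice

Tip: both the interactive Hive commands and HQL statements start Hive, and Hive depends on Hadoop (HDFS for storage, MapReduce for computation), so start the Hadoop cluster before starting Hive.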

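[yinzhengjie@s101 ~]$ more `which xcall.sh`

#!/bin/bash
#@author :yinzhengjie
#blog:http://www.cnblogs.com/yinzhengjie
#EMAIL:y1053419035@qq.com

# Check that the user passed a command
if [ $# -lt 1 ];then
    echo "请输入参数"        # "Please provide a command"
    exit 1
fi

# Capture the command the user typed
cmd=$@

for (( i=101;i<=105;i++ ))
do
    # Switch the terminal text to green
    tput setaf 2
    echo ============= s$i $cmd ============
    # Restore the terminal colour (white/grey)
    tput setaf 7
    # Run the command on the remote host
    ssh s$i $cmd
    # Report whether the command succeeded
    if [ $? == 0 ];then
        echo "命令执行成功"    # "Command succeeded"
    fi
done

[yinzhengjie@s101 ~]$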

Script that runs a command on every node of the cluster ([yinzhengjie@s101 ~]$ more `which xcall.sh`)

[yinzhengjie@s101 ~]$ more `which start-dfs.sh` | grep -v ^# | grep -v ^$

usage="Usage: start-dfs.sh [-upgrade|-rollback] [other options such as -clusterId]"

bin=`dirname "${BASH_SOURCE-$0}"`

bin=`cd "$bin"; pwd`

DEFAULT_LIBEXEC_DIR="$bin"/../libexec

HADOOP_LIBEXEC_DIR=${HADOOP_LIBEXEC_DIR:-$DEFAULT_LIBEXEC_DIR}

. $HADOOP_LIBEXEC_DIR/hdfs-config.sh

if [[ $# -ge 1 ]]; then

startOpt="$1"

shift

case "$startOpt" in

-upgrade)

nameStartOpt="$startOpt"

;;

-rollback)

dataStartOpt="$startOpt"

;;

*)

echo $usage

exit 1

;;

esac

fi

nameStartOpt="$nameStartOpt $@"

NAMENODES=$($HADOOP_PREFIX/bin/hdfs getconf -namenodes)

echo "Starting namenodes on [$NAMENODES]"

"$HADOOP_PREFIX/sbin/hadoop-daemons.sh" \

--config "$HADOOP_CONF_DIR" \

--hostnames "$NAMENODES" \

--script "$bin/hdfs" start namenode $nameStartOpt

if [ -n "$HADOOP_SECURE_DN_USER" ]; then

echo \

"Attempting to start secure cluster, skipping datanodes. " \

"Run start-secure-dns.sh as root to complete startup."

else

"$HADOOP_PREFIX/sbin/hadoop-daemons.sh" \

--config "$HADOOP_CONF_DIR" \

--script "$bin/hdfs" start datanode $dataStartOpt

fi

SECONDARY_NAMENODES=$($HADOOP_PREFIX/bin/hdfs getconf -secondarynamenodes 2>/dev/null)

if [ -n "$SECONDARY_NAMENODES" ]; then

echo "Starting secondary namenodes [$SECONDARY_NAMENODES]"

"$HADOOP_PREFIX/sbin/hadoop-daemons.sh" \

--config "$HADOOP_CONF_DIR" \

--hostnames "$SECONDARY_NAMENODES" \

--script "$bin/hdfs" start secondarynamenode

fi

SHARED_EDITS_DIR=$($HADOOP_PREFIX/bin/hdfs getconf -confKey dfs.namenode.shared.edits.dir 2>&-)

case "$SHARED_EDITS_DIR" in

qjournal://*)

JOURNAL_NODES=$(echo "$SHARED_EDITS_DIR" | sed 's,qjournal://\([^/]*\)/.*,\1,g; s/;/ /g; s/:[0-9]*//g')

echo "Starting journal nodes [$JOURNAL_NODES]"

"$HADOOP_PREFIX/sbin/hadoop-daemons.sh" \

--config "$HADOOP_CONF_DIR" \

--hostnames "$JOURNAL_NODES" \

--script "$bin/hdfs" start journalnode ;;

esac

AUTOHA_ENABLED=$($HADOOP_PREFIX/bin/hdfs getconf -confKey dfs.ha.automatic-failover.enabled)

if [ "$(echo "$AUTOHA_ENABLED" | tr A-Z a-z)" = "true" ]; then

echo "Starting ZK Failover Controllers on NN hosts [$NAMENODES]"

"$HADOOP_PREFIX/sbin/hadoop-daemons.sh" \

--config "$HADOOP_CONF_DIR" \

--hostnames "$NAMENODES" \

--script "$bin/hdfs" start zkfc

fi

[yinzhengjie@s101 ~]$

HDFS startup script ([yinzhengjie@s101 ~]$ more `which start-dfs.sh` | grep -v ^# | grep -v ^$)

[yinzhengjie@s101 ~]$ cat /soft/hadoop/sbin/start-yarn.sh | grep -v ^# | grep -v ^$

echo "starting yarn daemons"

bin=`dirname "${BASH_SOURCE-$0}"`

bin=`cd "$bin"; pwd`

DEFAULT_LIBEXEC_DIR="$bin"/../libexec

HADOOP_LIBEXEC_DIR=${HADOOP_LIBEXEC_DIR:-$DEFAULT_LIBEXEC_DIR}

. $HADOOP_LIBEXEC_DIR/yarn-config.sh

"$bin"/yarn-daemon.sh --config $YARN_CONF_DIR start resourcemanager

"$bin"/yarn-daemons.sh --config $YARN_CONF_DIR start nodemanager

[yinzhengjie@s101 ~]$

YARN startup script ([yinzhengjie@s101 ~]$ cat /soft/hadoop/sbin/start-yarn.sh | grep -v ^# | grep -v ^$)

[yinzhengjie@s101 ~]$ more `which xzk.sh`

#!/bin/bash
#@author :yinzhengjie
#blog:http://www.cnblogs.com/yinzhengjie
#EMAIL:y1053419035@qq.com

# Check that exactly one argument was passed
if [ $# -ne 1 ];then
    echo "无效参数,用法为: $0 {start|stop|restart|status}"    # "Invalid argument, usage: ..."
    exit 1
fi

# Capture the sub-command the user typed
cmd=$1

# Dispatch on the sub-command
function zookeeperManger(){
    case $cmd in
    start)
        echo "启动服务"    # "Starting the service"
        remoteExecution start
        ;;
    stop)
        echo "停止服务"    # "Stopping the service"
        remoteExecution stop
        ;;
    restart)
        echo "重启服务"    # "Restarting the service"
        remoteExecution restart
        ;;
    status)
        echo "查看状态"    # "Checking status"
        remoteExecution status
        ;;
    *)
        echo "无效参数,用法为: $0 {start|stop|restart|status}"
        ;;
    esac
}

# Run zkServer.sh with the given sub-command on every ZooKeeper node
function remoteExecution(){
    for (( i=102 ; i<=104 ; i++ )) ; do
        tput setaf 2
        echo ========== s$i zkServer.sh $1 ================
        tput setaf 7
        ssh s$i "source /etc/profile ; zkServer.sh $1"
    done
}

# Call the function
zookeeperManger

[yinzhengjie@s101 ~]$

ZooKeeper management script ([yinzhengjie@s101 ~]$ more `which xzk.sh`)

[yinzhengjie@s101 ~]$ xzk.sh start

启动服务

========== s102 zkServer.sh start ================

ZooKeeper JMX enabled by default

Using config: /soft/zk/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

========== s103 zkServer.sh start ================

ZooKeeper JMX enabled by default

Starting zookeeper ... Using config: /soft/zk/bin/../conf/zoo.cfg

STARTED

========== s104 zkServer.sh start ================

ZooKeeper JMX enabled by default

Using config: /soft/zk/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ xcall.sh jps

============= s101 jps ============

Jps

命令执行成功

============= s102 jps ============

QuorumPeerMain

Jps

命令执行成功

============= s103 jps ============

QuorumPeerMain

Jps

命令执行成功

============= s104 jps ============

Jps

QuorumPeerMain

命令执行成功

============= s105 jps ============

Jps

命令执行成功

[yinzhengjie@s101 ~]$

Starting ZooKeeper ([yinzhengjie@s101 ~]$ xzk.sh start)

[yinzhengjie@s101 ~]$ start-dfs.sh

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Starting namenodes on [s101 s105]

s101: starting namenode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-namenode-s101.out

s105: starting namenode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-namenode-s105.out

s103: starting datanode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-datanode-s103.out

s102: starting datanode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-datanode-s102.out

s104: starting datanode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-datanode-s104.out

Starting journal nodes [s102 s103 s104]

s102: starting journalnode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-journalnode-s102.out

s103: starting journalnode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-journalnode-s103.out

s104: starting journalnode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-journalnode-s104.out

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Starting ZK Failover Controllers on NN hosts [s101 s105]

s101: starting zkfc, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-zkfc-s101.out

s105: starting zkfc, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-zkfc-s105.out

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ xcall.sh jps

============= s101 jps ============

Jps

NameNode

DFSZKFailoverController

命令执行成功

============= s102 jps ============

JournalNode

QuorumPeerMain

DataNode

Jps

命令执行成功

============= s103 jps ============

Jps

DataNode

JournalNode

QuorumPeerMain

命令执行成功

============= s104 jps ============

Jps

DataNode

QuorumPeerMain

JournalNode

命令执行成功

============= s105 jps ============

DFSZKFailoverController

NameNode

Jps

命令执行成功

[yinzhengjie@s101 ~]$

Starting the HDFS distributed file system ([yinzhengjie@s101 ~]$ start-dfs.sh )

[yinzhengjie@s101 ~]$ start-yarn.sh

starting yarn daemons

s101: starting resourcemanager, logging to /soft/hadoop-2.7./logs/yarn-yinzhengjie-resourcemanager-s101.out

s105: starting resourcemanager, logging to /soft/hadoop-2.7./logs/yarn-yinzhengjie-resourcemanager-s105.out

s103: starting nodemanager, logging to /soft/hadoop-2.7./logs/yarn-yinzhengjie-nodemanager-s103.out

s102: starting nodemanager, logging to /soft/hadoop-2.7./logs/yarn-yinzhengjie-nodemanager-s102.out

s104: starting nodemanager, logging to /soft/hadoop-2.7./logs/yarn-yinzhengjie-nodemanager-s104.out

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ xcall.sh jps

============= s101 jps ============

ResourceManager

Jps

NameNode

DFSZKFailoverController

命令执行成功

============= s102 jps ============

JournalNode

QuorumPeerMain

NodeManager

Jps

DataNode

命令执行成功

============= s103 jps ============

DataNode

JournalNode

NodeManager

Jps

QuorumPeerMain

命令执行成功

============= s104 jps ============

NodeManager

Jps

DataNode

QuorumPeerMain

JournalNode

命令执行成功

============= s105 jps ============

DFSZKFailoverController

NameNode

Jps

命令执行成功

[yinzhengjie@s101 ~]$

Starting YARN resource scheduling ([yinzhengjie@s101 ~]$ start-yarn.sh )

1>.Hive interactive commands

[yinzhengjie@s101 download]$ cat teachers.txt

Dennis MacAlistair Ritchie

Linus Benedict Torvalds

Bjarne Stroustrup

Guido van Rossum

James Gosling

Martin Odersky

Rob Pike

Rasmus Lerdorf

Brendan Eich

[yinzhengjie@s101 download]$

[yinzhengjie@s101 download]$ cat teachers.txt

[yinzhengjie@s101 ~]$ hive -help

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

usage: hive

-d,--define <key=value> Variable subsitution to apply to hive

commands. e.g. -d A=B or --define A=B

--database <databasename> Specify the database to use

-e <quoted-query-string> SQL from command line

-f <filename> SQL from files

-H,--help Print help information

--hiveconf <property=value> Use value for given property

--hivevar <key=value> Variable subsitution to apply to hive

commands. e.g. --hivevar A=B

-i <filename> Initialization SQL file

-S,--silent Silent mode in interactive shell

-v,--verbose Verbose mode (echo executed SQL to the

console)

[yinzhengjie@s101 ~]$

Viewing the help text ([yinzhengjie@s101 ~]$ hive -help)
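The -S, -f and --hivevar options in that help text are what make Hive scriptable from the shell. A minimal sketch, assuming the hive binary is on PATH; run_hql is a hypothetical wrapper, and setting DRY_RUN=1 makes it print the command line instead of running it. Inside the HQL file, a --hivevar value is referenced as ${hivevar:name}, and Hive substitutes it before running the statement.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run an HQL file in silent mode, passing the database
# name as a --hivevar. With DRY_RUN=1 it only prints the command line.
run_hql() {
  local db=$1 file=$2
  local -a cmd=(hive -S --hivevar "db=${db}" -f "$file")
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Demo: the query uses ${hivevar:db} (single quotes keep bash from expanding it).
hql_file=$(mktemp)
echo 'select * from ${hivevar:db}.teacher;' > "$hql_file"
DRY_RUN=1 run_hql yinzhengjie "$hql_file"
rm -f "$hql_file"
```

For a one-off statement, `hive -e "..."` (shown later in this post) does the same job without a file.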

[yinzhengjie@s101 ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

default

yinzhengjie

Hive-on-MR is deprecated in Hive and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive .X releases.

hive>

Opening the Hive interactive shell ([yinzhengjie@s101 ~]$ hive)

[yinzhengjie@s101 ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

default

yinzhengjie

Hive-on-MR is deprecated in Hive and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive .X releases.

hive> show databases;

OK

default

yinzhengjie

Time taken: 0.01 seconds, Fetched: row(s)

hive>

Listing existing databases (hive> show databases;)

[yinzhengjie@s101 ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

default

yinzhengjie

Hive-on-MR is deprecated in Hive and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive .X releases.

hive> show databases;

OK

default

yinzhengjie

Time taken: 0.008 seconds, Fetched: row(s)

hive> use yinzhengjie;

OK

Time taken: 0.018 seconds

hive>

Switching to an existing database (hive> use yinzhengjie;)

hive> show databases;

OK

default

yinzhengjie

Time taken: 0.008 seconds, Fetched: row(s)

hive> use yinzhengjie;

OK

Time taken: 0.018 seconds

hive> show tables;

OK

az_top3

az_wc

test1

test2

test3

test4

yzj

Time taken: 0.025 seconds, Fetched: row(s)

hive>

Listing the tables in the current database (hive> show tables;)

hive> show databases;

OK

default

yinzhengjie

Time taken: 0.008 seconds, Fetched: row(s)

hive> use yinzhengjie;

OK

Time taken: 0.018 seconds

hive> show tables;

OK

az_top3

az_wc

test1

test2

test3

test4

yzj

Time taken: 0.025 seconds, Fetched: row(s)

hive> create table Teacher(id int,name string)row format delimited fields terminated by '\t';

OK

Time taken: 0.626 seconds

hive> show tables;

OK

az_top3

az_wc

teacher

test1

test2

test3

test4

yzj

Time taken: 0.028 seconds, Fetched: row(s)

hive>

Creating a teacher table; Hive stores table names in lowercase, which is why Teacher is listed as teacher (hive> create table Teacher(id int,name string)row format delimited fields terminated by '\t';)
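Because Teacher is declared with fields terminated by '\t', each line of its data file should be an integer id, a tab, then the name. Hive applies the schema only when reading, so a field that is missing or does not parse as the declared type comes back as NULL rather than being rejected at load time. If you start from a plain list of names, a sketch like this one (number_names is a hypothetical helper) produces matching rows:

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn "one name per line" input into "id<TAB>name" rows
# for Teacher(id int, name string) ... fields terminated by '\t'.
number_names() {
  # NF skips blank lines; ++id numbers the remaining lines from 1; OFS puts a tab between columns.
  awk -v OFS='\t' 'NF { print ++id, $0 }' "$@"
}

printf 'Rob Pike\nBrendan Eich\n' | number_names
```

Its output could be saved to a file and loaded with the same load data local inpath statement used below.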

hive> show tables;

OK

teacher

yzj

Time taken: 0.022 seconds, Fetched: row(s)

hive> select * from teacher;

OK

Time taken: 0.105 seconds

hive> load data local inpath '/home/yinzhengjie/download/teachers.txt' into table yinzhengjie.teacher;

Loading data to table yinzhengjie.teacher

OK

Time taken: 0.256 seconds

hive> select * from teacher;

OK

Dennis MacAlistair Ritchie

Linus Benedict Torvalds

Bjarne Stroustrup

Guido van Rossum

James Gosling

Martin Odersky

Rob Pike

Rasmus Lerdorf

Brendan Eich

Time taken: 0.104 seconds, Fetched: row(s)

hive>

Loading data from the local file system into an existing Hive table (hive> load data local inpath '/home/yinzhengjie/download/teachers.txt' into table yinzhengjie.teacher;)

hive (yinzhengjie)> load data inpath '/home/yinzhengjie/data/logs/umeng/raw-log/201808/06/2346' into table raw_logs partition(ym=201808 , day=06 ,hm=2346);

Loading data to table yinzhengjie.raw_logs partition (ym=201808, day=6, hm=2346)

OK

Time taken: 1.846 seconds

hive (yinzhengjie)>

Loading data from HDFS into a partition of an existing Hive table (hive (yinzhengjie)> load data inpath '/home/yinzhengjie/data/logs/umeng/raw-log/201808/06/2346' into table raw_logs partition(ym=201808 , day=06 ,hm=2346);)
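The partition values in that statement mirror the last three directories of the raw-log path (yyyyMM/dd/HHmm). A minimal sketch that derives them, assuming that directory layout; load_stmt_for is a hypothetical name, and it only prints the HQL, which could then be passed to hive -e.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the LOAD DATA statement for a raw-log directory
# laid out as .../<yyyyMM>/<dd>/<HHmm>.
load_stmt_for() {
  local dir=${1%/}                      # drop one trailing slash, if any
  local rest=$dir
  local hm=${rest##*/};  rest=${rest%/*}
  local day=${rest##*/}; rest=${rest%/*}
  local ym=${rest##*/}
  printf "load data inpath '%s' into table raw_logs partition(ym=%s, day=%s, hm=%s);\n" \
    "$dir" "$ym" "$day" "$hm"
}

load_stmt_for /home/yinzhengjie/data/logs/umeng/raw-log/201808/06/2346
```

Note that Hive echoed the partition as day=6 rather than day=06 in the transcript above.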

[yinzhengjie@s101 download]$ cat /home/yinzhengjie/download/umeng_create_logs_ddl.sql

use yinzhengjie ; --startuplogs

create table if not exists startuplogs

(

appChannel string ,

appId string ,

appPlatform string ,

appVersion string ,

brand string ,

carrier string ,

country string ,

createdAtMs bigint ,

deviceId string ,

deviceStyle string ,

ipAddress string ,

network string ,

osType string ,

province string ,

screenSize string ,

tenantId string

)

partitioned by (ym int ,day int , hm int)

stored as parquet ; --eventlogs

create table if not exists eventlogs

(

appChannel string ,

appId string ,

appPlatform string ,

appVersion string ,

createdAtMs bigint ,

deviceId string ,

deviceStyle string ,

eventDurationSecs bigint ,

eventId string ,

osType string ,

tenantId string

)

partitioned by (ym int ,day int , hm int)

stored as parquet ; --errorlogs

create table if not exists errorlogs

(

appChannel string ,

appId string ,

appPlatform string ,

appVersion string ,

createdAtMs bigint ,

deviceId string ,

deviceStyle string ,

errorBrief string ,

errorDetail string ,

osType string ,

tenantId string

)

partitioned by (ym int ,day int , hm int)

stored as parquet ; --usagelogs

create table if not exists usagelogs

(

appChannel string ,

appId string ,

appPlatform string ,

appVersion string ,

createdAtMs bigint ,

deviceId string ,

deviceStyle string ,

osType string ,

singleDownloadTraffic bigint ,

singleUploadTraffic bigint ,

singleUseDurationSecs bigint ,

tenantId string

)

partitioned by (ym int ,day int , hm int)

stored as parquet ; --pagelogs

create table if not exists pagelogs

(

appChannel string ,

appId string ,

appPlatform string ,

appVersion string ,

createdAtMs bigint ,

deviceId string ,

deviceStyle string ,

nextPage string ,

osType string ,

pageId string ,

pageViewCntInSession int ,

stayDurationSecs bigint ,

tenantId string ,

visitIndex int

)

partitioned by (ym int ,day int , hm int)

stored as parquet ;

[yinzhengjie@s101 download]$

HQL test script ([yinzhengjie@s101 download]$ cat /home/yinzhengjie/download/umeng_create_logs_ddl.sql)

hive (yinzhengjie)> show tables;

OK

tab_name

myusers

raw_logs

student

teacher

teacherbak

teachercopy

Time taken: 0.044 seconds, Fetched: 6 row(s)

hive (yinzhengjie)>

hive (yinzhengjie)> source /home/yinzhengjie/download/umeng_create_logs_ddl.sql;

OK

Time taken: 0.008 seconds

OK

Time taken: 0.257 seconds

OK

Time taken: 0.058 seconds

OK

Time taken: 0.073 seconds

OK

Time taken: 0.065 seconds

OK

Time taken: 0.053 seconds

hive (yinzhengjie)> show tables;

OK

tab_name

errorlogs

eventlogs

myusers

pagelogs

raw_logs

startuplogs

student

teacher

teacherbak

teachercopy

usagelogs

Time taken: 0.014 seconds, Fetched: 11 row(s)

hive (yinzhengjie)>

Running an HQL script file from inside Hive (hive (yinzhengjie)> source /home/yinzhengjie/download/umeng_create_logs_ddl.sql;)

[yinzhengjie@s101 ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive .X releases.

hive> dfs -cat /user/hive/warehouse/yinzhengjie.db/teacher/teachers.txt;

Dennis MacAlistair Ritchie

Linus Benedict Torvalds

Bjarne Stroustrup

Guido van Rossum

James Gosling

Martin Odersky

Rob Pike

Rasmus Lerdorf

Brendan Eich

hive>

Viewing the contents of an HDFS file from the Hive CLI (hive> dfs -cat /user/hive/warehouse/yinzhengjie.db/teacher/teachers.txt;)

[yinzhengjie@s101 ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive .X releases.

hive> ! ls /home/yinzhengjie/download;

derby.log

hivef.sql

metastore_db

MySpark.jar

spark-2.1.-bin-hadoop2..tgz

teachers.txt

temp

hive>

Running a Linux shell command (listing a local directory) from the Hive CLI (hive> ! ls /home/yinzhengjie/download;)

[yinzhengjie@s101 download]$ cat ~/.hivehistory

show databases;

quit;

show databases;

quit

;

create table(id int,name string) row format delimited

fields terminated by '\t'

lines terminated by '\n'

stored as textfile;

create table users(id int , name string) row format delimited

fields terminated by '\t'

lines terminated by '\n'

stored as textfile;

load data local inpath 'user.txt' into table users;

!pwd

;

!cd /home/yinzhengjie

;

!pwd

;

quit;

load data local inpath 'user.txt' into table users;

load data inpath 'user.txt' into table users;

hdfs dfs -put 'user.txt';

hdfs dfs put 'user.txt';

dfs put 'user.txt';

dfs -put 'user.txt';

dfs -put 'user.txt' /;

dfs -put user.txt ;

dfs -put user.txt /;

load data inpath 'user.txt' into table users;

load data inpath '/user.txt' into table users;

;;

;

;;

ipconfig

;

quit

quit;

exit

exit;

show databases;

use yinzhengjie

;

show tables;

SET hive.support.concurrency = true;

show tables;

use yinzhengjie;

show tables;

select * from yzj;

SET hive.support.concurrency = true;

SET hive.enforce.bucketing = true;

SET hive.exec.dynamic.partition.mode = nonstrict;

SET hive.txn.manager = org.apache.hadoop.hive.ql.lockmgr.DbTxnManager;

SET hive.compactor.initiator.on = true;

SET hive.compactor.worker.threads = ;

select * from yzj;

use yinzhengjie;

SET hive.support.concurrency = true;

SET hive.enforce.bucketing = true;

SET hive.exec.dynamic.partition.mode = nonstrict;

SET hive.txn.manager = org.apache.hadoop.hive.ql.lockmgr.DbTxnManager;

SET hive.compactor.initiator.on = true;

SET hive.compactor.worker.threads = ;

show tables;

select * from yzj;

show databases;

use yinzhengjie;

show tables;

hive

show databases;

use yinzhengjie;

show tables;

select * from az_top3;

quit;

show databases;

use yinzhengjie

;

show tables;

use yinzhengjie;

show databases;

use yinzhengjie;

show tables;

create table Teacher(id int,name string)row format delimited fields terminated by '\t';

show tables;

load data local inpath '/home/yinzhengjie/download/teachers.txt'

;

show tables;

drop table taacher;

show databases;

use yinzhengjie;

show tables;

drop table teacher;

show tables;

;

show tables;

create table Teacher(id int,name string)row format delimited fields terminated by '\t';

show tables;

drop table test1,test2,test3;

drop table test1;

drop table test2;

drop table test3;

drop table test4;

show tables;

drop table az_top3;

drop table az_wc;

show tbales;

show databasers;

show databases;

drop database yinzhengjie;

;

use yinzhengjie;

show tables;

drop table teacher;

show tables;

create table Teacher(id int,name string)row format delimited fields terminated by '\t';

show tables;

load data local inpath '/home/yinzhengjie/download/teachers.txt';

load data local inpath `/home/yinzhengjie/download/teachers.txt`;

use yinzhengjie

;

show tables;

load data local inpath '/home/yinzhengjie/download/teachers.txt' into table yinzhengjie.teacher;

select * from teacher;

drop table teacher;

;

create table Teacher(id int,name string)row format delimited fields terminated by '\t';

show tables;

select * from teacher;

load data local inpath '/home/yinzhengjie/download/teachers.txt' into table yinzhengjie.teacher;

select * from teacher;

quit;

exit;

exit

;

dfs -cat /user/hive/warehouse/yinzhengjie.db/teacher/teachers.txt;

dfs -lsr /;

;

! ls /home/yinzhengjie;

! ls /home/yinzhengjie/download;

[yinzhengjie@s101 download]$

Viewing every command previously entered in the Hive CLI ([yinzhengjie@s101 download]$ cat ~/.hivehistory )

[yinzhengjie@s101 download]$ hive -e "select * from yinzhengjie.teacher;"

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

default

yinzhengjie

OK

Dennis MacAlistair Ritchie

Linus Benedict Torvalds

Bjarne Stroustrup

Guido van Rossum

James Gosling

Martin Odersky

Rob Pike

Rasmus Lerdorf

Brendan Eich

Time taken: 3.414 seconds, Fetched: row(s)

[yinzhengjie@s101 download]$

Running an HQL statement directly from the shell command line ([yinzhengjie@s101 download]$ hive -e "select * from yinzhengjie.teacher;")

[yinzhengjie@s101 download]$ hive -f /home/yinzhengjie/download/hivef.sql

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

default

yinzhengjie

OK

Time taken: 0.023 seconds

OK

teacher

yzj

Time taken: 0.085 seconds, Fetched: row(s)

OK

Dennis MacAlistair Ritchie

Linus Benedict Torvalds

Bjarne Stroustrup

Guido van Rossum

James Gosling

Martin Odersky

Rob Pike

Rasmus Lerdorf

Brendan Eich

Time taken: 2.044 seconds, Fetched: row(s)

[yinzhengjie@s101 download]$

Running a script file of HQL statements ([yinzhengjie@s101 download]$ hive -f /home/yinzhengjie/download/hivef.sql )
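If you drive `hive -e` and `hive -f` from a script, it can help to build the argument list explicitly instead of pasting HQL into a shell string. The sketch below is a hypothetical Python helper (`build_hive_cmd` and `run_hive` are our own names, not part of Hive). It assumes the `hive` CLI is on PATH and uses only the `-e`, `-f` and `-S` (silent mode) options.

```python
import shlex
import shutil
import subprocess
from typing import List, Optional


def build_hive_cmd(query: Optional[str] = None,
                   script: Optional[str] = None,
                   silent: bool = False) -> List[str]:
    """Build an argv list for the Hive CLI (hypothetical helper).

    Exactly one of `query` (sent with -e) or `script` (sent with -f) is required.
    `silent=True` adds -S, which quiets informational messages such as "OK".
    """
    if (query is None) == (script is None):
        raise ValueError("pass exactly one of query= or script=")
    cmd = ["hive"]
    if silent:
        cmd.append("-S")
    if query is not None:
        cmd += ["-e", query]
    else:
        cmd += ["-f", script]
    return cmd


def run_hive(cmd: List[str]) -> str:
    """Run the command and return its stdout; needs the hive CLI on PATH."""
    if shutil.which(cmd[0]) is None:
        raise FileNotFoundError("hive CLI not found on PATH")
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    cmd = build_hive_cmd(query="select * from yinzhengjie.teacher;", silent=True)
    # shlex.join prints the equivalent shell command line (Python 3.8+)
    print(shlex.join(cmd))
```

Passing a list to `subprocess.run` sidesteps shell quoting problems with the `*` and `;` inside the query.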

[yinzhengjie@s101 ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> quit;

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> exit;

[yinzhengjie@s101 ~]$

Exiting the Hive shell (hive> exit; or hive> quit;)

2>.DDL (data definition)

hive (yinzhengjie)> show databases;

OK

database_name

default

yinzhengjie

Time taken: 0.007 seconds, Fetched: row(s)

hive (yinzhengjie)> create database if not exists db_hive;

OK

Time taken: 0.034 seconds

hive (yinzhengjie)> show databases;

OK

database_name

db_hive

default

yinzhengjie

Time taken: 0.009 seconds, Fetched: row(s)

hive (yinzhengjie)>

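The standard way to create a database (hive (yinzhengjie)> create database if not exists db_hive;). By default the new database is stored on HDFS under "/user/hive/warehouse", in a directory named after the database with a .db suffix (here db_hive.db).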

hive (yinzhengjie)> show databases;

OK

database_name

db_hive

default

yinzhengjie

Time taken: 0.008 seconds, Fetched: row(s)

hive (yinzhengjie)> create database if not exists db_hive2 location "/db_hive2";

OK

Time taken: 0.04 seconds

hive (yinzhengjie)> show databases;

OK

database_name

db_hive

db_hive2

default

yinzhengjie

Time taken: 0.006 seconds, Fetched: row(s)

hive (yinzhengjie)>

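Creating a database and using the LOCATION keyword to specify where it is stored on HDFS (hive (yinzhengjie)> create database if not exists db_hive2 location "/db_hive2";). I don't recommend this approach: the database no longer gets its own .db directory under the warehouse, so its layout looks very much like the way the default database is stored.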
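The directory rules above can be summarized in a small model. This is a sketch under assumptions: the warehouse root is the `/user/hive/warehouse` value of hive.metastore.warehouse.dir, paths are shown without the `hdfs://mycluster` prefix, and names are lower-cased the way Hive stores them. `database_location` and `table_location` are hypothetical helpers, not Hive APIs.

```python
import posixpath
from typing import Optional

# Assumed warehouse root (hive.metastore.warehouse.dir in this post).
WAREHOUSE_DIR = "/user/hive/warehouse"


def database_location(name: str,
                      location: Optional[str] = None,
                      warehouse: str = WAREHOUSE_DIR) -> str:
    """Approximate where a Hive database lives on HDFS.

    - an explicit LOCATION is used as-is
    - the default database is the warehouse root itself (no subfolder)
    - any other database gets <warehouse>/<name>.db
    """
    if location is not None:
        return location
    name = name.lower()
    if name == "default":
        return warehouse
    return posixpath.join(warehouse, name + ".db")


def table_location(db: str, table: str, **kwargs) -> str:
    """A managed table without LOCATION gets a folder inside its database."""
    return posixpath.join(database_location(db, **kwargs), table.lower())
```

For example, `table_location("yinzhengjie", "Teacher")` yields `/user/hive/warehouse/yinzhengjie.db/teacher`, the same directory the teacher data is read from with `dfs -cat` in the history above.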

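You can use the ALTER DATABASE command to set key-value pairs in a database's DBPROPERTIES to describe the database.

The rest of a database's metadata, including its name and the directory where it is stored, cannot be changed (at least in the Hive 2.1 release used in this post).

hive (yinzhengjie)> show databases;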

OK

database_name

db_hive

db_hive2

default

yinzhengjie

Time taken: 0.007 seconds, Fetched: row(s)

hive (yinzhengjie)> ALTER DATABASE db_hive set dbproperties('Owner'='yinzhengjie'); #add an extra property to the database. Note that this does not change the database's own metadata, such as its name or location!

OK

Time taken: 0.03 seconds

hive (yinzhengjie)> desc database db_hive; #this command does not show the properties we defined!

OK

db_name comment location owner_name owner_type parameters

db_hive hdfs://mycluster/user/hive/warehouse/db_hive.db yinzhengjie USER

Time taken: 0.017 seconds, Fetched: row(s)

hive (yinzhengjie)> desc database extended db_hive; #add the EXTENDED keyword before the database name to see the properties we defined.

OK

db_name comment location owner_name owner_type parameters

db_hive hdfs://mycluster/user/hive/warehouse/db_hive.db yinzhengjie USER {Owner=yinzhengjie}

Time taken: 0.011 seconds, Fetched: row(s)

hive (yinzhengjie)>

Modifying database properties ( hive (yinzhengjie)> ALTER DATABASE db_hive set dbproperties('Owner'='yinzhengjie'); )

hive (yinzhengjie)> show databases; #show all databases

OK

database_name

db_hive

db_hive2

default

yinzhengjie

Time taken: 0.008 seconds, Fetched: row(s)

hive (yinzhengjie)>

hive (yinzhengjie)> show databases like 'yin*'; #show only the databases whose names match the pattern

OK

database_name

yinzhengjie

Time taken: 0.009 seconds, Fetched: row(s)

hive (yinzhengjie)>

hive (yinzhengjie)> desc database db_hive; #show database information

OK

db_name comment location owner_name owner_type parameters

db_hive hdfs://mycluster/user/hive/warehouse/db_hive.db yinzhengjie USER

Time taken: 0.012 seconds, Fetched: row(s)

hive (yinzhengjie)> desc database extended db_hive; #show detailed database information using the EXTENDED keyword

OK

db_name comment location owner_name owner_type parameters

db_hive hdfs://mycluster/user/hive/warehouse/db_hive.db yinzhengjie USER {Owner=yinzhengjie}

Time taken: 0.013 seconds, Fetched: row(s)

hive (yinzhengjie)>

hive (yinzhengjie)> show databases;

OK

database_name

db_hive

db_hive2

default

yinzhengjie

Time taken: 0.006 seconds, Fetched: row(s)

hive (yinzhengjie)> use default; #switch to a database

OK

Time taken: 0.012 seconds

hive (default)>

Common ways to inspect databases (hive (yinzhengjie)> show databases like 'yin*';)
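The pattern in `show databases like 'yin*'` is not a SQL `LIKE` pattern. According to the Hive DDL documentation, only `*` (any characters) and `|` (a choice between alternatives) act as wildcards, and matches are listed in alphabetical order. The Python sketch below approximates that matching so a pattern can be tried offline. It is our own approximation (hypothetical helper names), not Hive's implementation, and it assumes names are already lower-case as Hive stores them.

```python
import re
from typing import Iterable, List


def hive_like_to_regex(pattern: str) -> "re.Pattern":
    """Translate a SHOW DATABASES/TABLES LIKE pattern into a Python regex.

    '|' separates alternatives and '*' matches any characters;
    everything else is matched literally against the whole name.
    """
    alternatives = []
    for alt in pattern.split("|"):
        # Escape the text literally, then turn the escaped '*' back into '.*'.
        alternatives.append(re.escape(alt).replace(r"\*", ".*"))
    return re.compile("^(?:" + "|".join(alternatives) + ")$")


def show_databases_like(databases: Iterable[str], pattern: str) -> List[str]:
    """Return the matching names in alphabetical order."""
    regex = hive_like_to_regex(pattern)
    return sorted(db for db in databases if regex.match(db))
```

With the four databases listed above, `'yin*'` keeps only `yinzhengjie`, while `'db*|default'` keeps `db_hive`, `db_hive2` and `default`.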

hive (yinzhengjie)> show databases;

OK

database_name

db_hive

db_hive2

default

yinzhengjie

Time taken: 0.006 seconds, Fetched: row(s)

hive (yinzhengjie)> use db_hive2; #switch to the db_hive2 database

OK

Time taken: 0.014 seconds

hive (db_hive2)> show tables; #the db_hive2 database has no tables

OK

tab_name

Time taken: 0.015 seconds

hive (db_hive2)> drop database if exists db_hive2; #drop the empty database db_hive2

OK

Time taken: 0.05 seconds

hive (db_hive2)> show databases;

OK

database_name

db_hive

default

yinzhengjie

Time taken: 0.006 seconds, Fetched: row(s)

hive (db_hive2)> use db_hive; #switch to the db_hive database

OK

Time taken: 0.012 seconds

hive (db_hive)> show tables; #the db_hive database does contain tables

OK

tab_name

classlist

student

teacher

Time taken: 0.016 seconds, Fetched: row(s)

hive (db_hive)> drop database db_hive cascade; #use the CASCADE keyword to force-drop db_hive, which still contains tables

OK

Time taken: 0.304 seconds

hive (db_hive)> use yinzhengjie;

OK

Time taken: 0.016 seconds

hive (yinzhengjie)> show databases;

OK

database_name

default

yinzhengjie

Time taken: 0.007 seconds, Fetched: row(s)

hive (yinzhengjie)>

Common ways to drop a database (hive (db_hive)> drop database db_hive cascade;)

1. CREATE TABLE syntax and clause explanations

>.The CREATE TABLE statement:
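The difference between the two drops comes from Hive's default `RESTRICT` behaviour: `DROP DATABASE` refuses a database that still contains tables unless `CASCADE` is given, and `IF EXISTS` turns a missing database into a no-op. Below is a toy Python model of those rules, with an in-memory dict standing in for the metastore; all names are hypothetical.

```python
from typing import Dict, List


class DatabaseNotEmpty(Exception):
    """Raised when RESTRICT (the default) meets a database that has tables."""


class NoSuchDatabase(Exception):
    """Raised when the database is missing and IF EXISTS was not given."""


def drop_database(catalog: Dict[str, List[str]], name: str,
                  if_exists: bool = False, cascade: bool = False) -> None:
    """Toy model of DROP DATABASE [IF EXISTS] name [RESTRICT|CASCADE].

    `catalog` maps database names to the names of their tables.
    """
    if name not in catalog:
        if if_exists:
            return  # IF EXISTS: silently do nothing
        raise NoSuchDatabase(name)
    if catalog[name] and not cascade:
        raise DatabaseNotEmpty(f"{name} still contains tables: {catalog[name]}")
    # CASCADE (or an empty database): the tables go together with the database.
    del catalog[name]
```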

CREATE [EXTERNAL] TABLE [IF NOT EXISTS] table_name

[(col_name data_type [COMMENT col_comment], ...)]

[COMMENT table_comment]

[PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)]

[CLUSTERED BY (col_name, col_name, ...)

[SORTED BY (col_name [ASC|DESC], ...)] INTO num_buckets BUCKETS]

[ROW FORMAT row_format]

[STORED AS file_format]

[LOCATION hdfs_path]

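>.Clause explanations:

a>.CREATE TABLE creates a table with the given name. If a table with the same name already exists, an exception is thrown; use the IF NOT EXISTS option to ignore it.

b>.The EXTERNAL keyword creates an external table and lets you point it at the actual data with a path (LOCATION) at creation time. When Hive creates a managed (internal) table, it moves the data into the warehouse directory; for an external table it only records where the data is and does not move it. When a table is dropped, both the metadata and the data of a managed table are deleted, whereas for an external table only the metadata is deleted and the data is kept.

c>.COMMENT: adds comments to the table and its columns.

d>.PARTITIONED BY creates a partitioned table.

e>.CLUSTERED BY creates a bucketed table.

f>.SORTED BY (sorts the rows within each bucket) is rarely used.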

g>.ROW FORMAT

DELIMITED [FIELDS TERMINATED BY char] [COLLECTION ITEMS TERMINATED BY char]

[MAP KEYS TERMINATED BY char] [LINES TERMINATED BY char]

| SERDE serde_name [WITH SERDEPROPERTIES (property_name=property_value, property_name=property_value, ...)]

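When creating a table you can specify a custom SerDe or use a built-in one. If ROW FORMAT is not specified, or ROW FORMAT DELIMITED is specified, the built-in SerDe is used. Along with the table's columns, you can also specify a custom SerDe at creation time; Hive uses the SerDe to work out the data for each of the table's columns.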

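h>.STORED AS specifies the storage file format.

Common storage formats: SEQUENCEFILE (binary sequence file), TEXTFILE (plain text) and RCFILE (columnar storage format).

If the data is plain text, use STORED AS TEXTFILE. If the data needs to be compressed, use STORED AS SEQUENCEFILE.

i>.LOCATION: specifies where the table is stored on HDFS.

j>.LIKE copies the structure of an existing table without copying its data.

2. Managed tables (internal tables): theory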

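Tables are created as so-called managed tables by default, sometimes also called internal tables, because Hive controls (more or less) the lifecycle of their data. By default Hive stores the data of these tables in subdirectories of the directory defined by hive.metastore.warehouse.dir (for example /user/hive/warehouse). When we drop a managed table, Hive also deletes its data, so managed tables are not well suited to sharing data with other tools.

3. External tables

>.Theory

Because the table is external, Hive does not assume it fully owns the data. Dropping the table does not delete the data, although the metadata describing the table is deleted.

>.When to use managed tables and when to use external tables:

Website logs collected every day are periodically loaded into HDFS as text files. Heavy statistical analysis is done on top of an external table (the raw log table), while the intermediate and result tables are stored as managed tables, with data written into them via SELECT+INSERT.

4. Partitioned tables

A partitioned table corresponds to separate folders on HDFS, and each folder holds all the data files of one partition. In Hive a partition is simply a subdirectory: a large dataset is split into smaller ones according to business needs. At query time the expressions in the WHERE clause select only the partitions that are needed, which makes queries much more efficient.

Overview of the CREATE TABLE syntax and the theory behind managed (internal) tables, external tables and partitions. If you are new to Hive, I strongly recommend reading this part three times!!!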
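To make ROW FORMAT DELIMITED more concrete, here is a simplified Python model of how one line of a tab-delimited text file is mapped onto typed columns. It assumes the default null marker `\N`, treats missing trailing fields and unparsable numbers (such as an empty string in a numeric column) as NULL (`None`), and ignores extra fields. Hive's real LazySimpleSerDe has more rules (collection and map delimiters, escaping, number-parsing details), so treat this as an illustration only; the function names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Schema = Sequence[Tuple[str, str]]  # (column name, Hive type)

INT_TYPES = ("tinyint", "smallint", "int", "bigint")
FLOAT_TYPES = ("float", "double")


def convert(raw: str, hive_type: str, null_format: str = "\\N") -> Optional[object]:
    """Convert one text field to a Python value (simplified)."""
    if raw == null_format:
        return None
    try:
        if hive_type in INT_TYPES:
            return int(raw)
        if hive_type in FLOAT_TYPES:
            return float(raw)
    except ValueError:
        return None  # unparsable number, e.g. an empty field
    return raw  # string columns keep the text as-is


def parse_row(line: str, schema: Schema, field_delim: str = "\t") -> List[Optional[object]]:
    """Split one line on the field delimiter and convert each column."""
    fields = line.split(field_delim)
    row = []
    for i, (_, hive_type) in enumerate(schema):
        # Missing trailing fields become NULL; extra fields are ignored.
        row.append(convert(fields[i], hive_type) if i < len(fields) else None)
    return row
```

This is the same mechanism behind the NULL values that appear in query results when a numeric field in the data file is empty or missing.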

hive (yinzhengjie)> show tables;

OK

tab_name

student

teacher

Time taken: 0.015 seconds, Fetched: row(s)

hive (yinzhengjie)> create table if not exists teacherbak as select id, name from teacher; #create a table from a query result: the query results are written into the newly created table, which automatically launches a job

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = yinzhengjie_20180806000505_71d796a2----b5d39abd58c9

Total jobs =

Launching Job out of

Number of reduce tasks is set to since there's no reduce operator

Starting Job = job_1533518652134_0001, Tracking URL = http://s101:8088/proxy/application_1533518652134_0001/

Kill Command = /soft/hadoop-2.7./bin/hadoop job -kill job_1533518652134_0001

Hadoop job information for Stage-: number of mappers: ; number of reducers:

-- ::, Stage- map = %, reduce = %

-- ::, Stage- map = %, reduce = %, Cumulative CPU 2.02 sec

MapReduce Total cumulative CPU time: seconds msec

Ended Job = job_1533518652134_0001

Stage- is selected by condition resolver.

Stage- is filtered out by condition resolver.

Stage- is filtered out by condition resolver.

Moving data to directory hdfs://mycluster/user/hive/warehouse/yinzhengjie.db/.hive-staging_hive_2018-08-06_00-05-05_947_8165112419833752968-1/-ext-10002

Moving data to directory hdfs://mycluster/user/hive/warehouse/yinzhengjie.db/teacherbak

MapReduce Jobs Launched:

Stage-Stage-: Map: Cumulative CPU: 2.02 sec HDFS Read: HDFS Write: SUCCESS

Total MapReduce CPU Time Spent: seconds msec

OK

id name

Time taken: 33.117 seconds

hive (yinzhengjie)> show tables;

OK

tab_name

student

teacher

teacherbak

Time taken: 0.014 seconds, Fetched: row(s)

hive (yinzhengjie)> select id, name from teacher; #查看teacher表中的数据

OK

id name

Dennis MacAlistair Ritchie

Linus Benedict Torvalds

Bjarne Stroustrup

Guido van Rossum

James Gosling

Martin Odersky

Rob Pike

Rasmus Lerdorf

Brendan Eich

Time taken: 0.093 seconds, Fetched: row(s)

hive (yinzhengjie)>

hive (yinzhengjie)> select id, name from teacherbak; #view the data in teacherbak; its contents are identical to teacher

OK

id name

Dennis MacAlistair Ritchie

Linus Benedict Torvalds

Bjarne Stroustrup

Guido van Rossum

James Gosling

Martin Odersky

Rob Pike

Rasmus Lerdorf

Brendan Eich

Time taken: 0.083 seconds, Fetched: row(s)

hive (yinzhengjie)>

Managed (internal) tables: creating a table from a query result, i.e. the query results are written into the newly created table (hive (yinzhengjie)> create table if not exists teacherbak as select id, name from teacher;)

hive (yinzhengjie)> show tables;

OK

tab_name

student

teacher

teacherbak

Time taken: 0.013 seconds, Fetched: row(s)

hive (yinzhengjie)> desc teacher;

OK

col_name data_type comment

id int

name string

Time taken: 0.029 seconds, Fetched: row(s)

hive (yinzhengjie)> select * from teacher;

OK

teacher.id teacher.name

Dennis MacAlistair Ritchie

Linus Benedict Torvalds

Bjarne Stroustrup

Guido van Rossum

James Gosling

Martin Odersky

Rob Pike

Rasmus Lerdorf

Brendan Eich

Time taken: 0.1 seconds, Fetched: row(s)

hive (yinzhengjie)> create table if not exists teacherCopy like teacher; #create a table from an existing table's structure: only the schema is copied, not the data

OK

Time taken: 0.181 seconds

hive (yinzhengjie)> show tables;

OK

tab_name

student

teacher

teacherbak

teachercopy

Time taken: 0.014 seconds, Fetched: row(s)

hive (yinzhengjie)> select * from teachercopy;

OK

teachercopy.id teachercopy.name

Time taken: 0.103 seconds

hive (yinzhengjie)> desc teachercopy;

OK

col_name data_type comment

id int

name string

Time taken: 0.03 seconds, Fetched: row(s)

hive (yinzhengjie)>

Managed (internal) tables: creating a table from an existing table's structure, which copies only the schema and not the data (hive (yinzhengjie)> create table if not exists teacherCopy like teacher;)
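The two statements differ only in what they copy: CTAS takes its schema from the SELECT list and copies the selected rows, while LIKE copies only the schema. Here is a minimal in-memory Python model of that difference (the `Table`, `ctas` and `create_like` names are hypothetical, not a Hive API). It also lower-cases the new table name, matching how `teacherCopy` shows up as `teachercopy` in `show tables`.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Table:
    columns: List[Tuple[str, str]]                   # (column name, Hive type)
    rows: List[tuple] = field(default_factory=list)


Catalog = Dict[str, Table]


def ctas(catalog: Catalog, new_name: str, source: str, cols: List[str]) -> None:
    """CREATE TABLE IF NOT EXISTS new AS SELECT cols FROM source (toy model)."""
    new_name = new_name.lower()
    if new_name in catalog:
        return  # IF NOT EXISTS: leave the existing table alone
    src = catalog[source.lower()]
    names = [name for name, _ in src.columns]
    idx = [names.index(c) for c in cols]
    catalog[new_name] = Table(
        columns=[src.columns[i] for i in idx],
        rows=[tuple(row[i] for i in idx) for row in src.rows],  # data is copied
    )


def create_like(catalog: Catalog, new_name: str, source: str) -> None:
    """CREATE TABLE IF NOT EXISTS new LIKE source (toy model): schema only."""
    new_name = new_name.lower()
    if new_name in catalog:
        return
    src = catalog[source.lower()]
    catalog[new_name] = Table(columns=list(src.columns), rows=[])
```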

hive (yinzhengjie)> show tables;

OK

tab_name

student

teacher

teacherbak

teachercopy

Time taken: 0.012 seconds, Fetched: row(s)

hive (yinzhengjie)> desc formatted teacher; #check the table type

OK

col_name data_type comment

# col_name data_type comment

id int

name string

# Detailed Table Information

Database: yinzhengjie

Owner: yinzhengjie

CreateTime: Sun Aug :: PDT

LastAccessTime: UNKNOWN

Retention:

Location: hdfs://mycluster/user/hive/warehouse/yinzhengjie.db/teacher

Table Type: MANAGED_TABLE #Readers, look here! This line shows the table type. This one is clearly a managed table, also called an internal table.

Table Parameters:

numFiles

numRows

rawDataSize

totalSize

transient_lastDdlTime

# Storage Information

SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

InputFormat: org.apache.hadoop.mapred.TextInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Compressed: No

Num Buckets: -

Bucket Columns: []

Sort Columns: []

Storage Desc Params:

field.delim \t

serialization.format \t

Time taken: 0.036 seconds, Fetched: row(s)

hive (yinzhengjie)>

Managed (internal) tables: checking the table type (hive (yinzhengjie)> desc formatted teacher;)

1. View the raw data
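To check the table type from a script, one option is to capture the output of something like `hive -S -e "desc formatted yinzhengjie.teacher;"` and pick out the `Table Type:` line. The parser below is a hypothetical helper. It assumes the line looks like the one shown above (`Table Type:` followed by whitespace and the type name).

```python
import re
from typing import Optional

# "Table Type:" at the start of a line, then spaces/tabs, then the type name.
_TABLE_TYPE = re.compile(r"^\s*Table Type:\s*(\w+)", re.MULTILINE)


def table_type(desc_formatted_output: str) -> Optional[str]:
    """Return e.g. 'MANAGED_TABLE' or 'EXTERNAL_TABLE', or None if absent."""
    match = _TABLE_TYPE.search(desc_formatted_output)
    return match.group(1) if match else None


def is_external(desc_formatted_output: str) -> bool:
    """True when DESC FORMATTED reports an external table."""
    return table_type(desc_formatted_output) == "EXTERNAL_TABLE"
```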

[yinzhengjie@s101 download]$ pwd

/home/yinzhengjie/download

[yinzhengjie@s101 download]$

[yinzhengjie@s101 download]$ cat dept.dat

ACCOUNTING

RESEARCH

SALES

OPERATIONS

[yinzhengjie@s101 download]$

[yinzhengjie@s101 download]$ more emp.dat

SMITH CLERK -- 800.00

ALLEN SALESMAN -- 1600.00 300.00

WARD SALESMAN -- 1250.00 500.00

JONES MANAGER -- 2975.00

MARTIN SALESMAN -- 1250.00 1400.00

BLAKE MANAGER -- 2850.00

CLARK MANAGER -- 2450.00

SCOTT ANALYST -- 3000.00

KING PRESIDENT -- 5000.00

TURNER SALESMAN -- 1500.00 0.00

ADAMS CLERK -- 1100.00

JAMES CLERK -- 950.00

FORD ANALYST -- 3000.00

MILLER CLERK -- 1300.00

[yinzhengjie@s101 download]$

2. Create external tables with the EXTERNAL keyword

>.Create the department table

hive (yinzhengjie)> create external table if not exists yinzhengjie.dept(

> deptno int,

> dname string,

> loc int

> )

> row format delimited fields terminated by '\t';

OK

Time taken: 0.096 seconds

hive (yinzhengjie)>

>.Create the employee table

hive (yinzhengjie)> create external table if not exists yinzhengjie.emp(

> empno int,

> ename string,

> job string,

> mgr int,

> hiredate string,

> sal double,

> comm double,

> deptno int

> )

> row format delimited fields terminated by '\t';

OK

Time taken: 0.064 seconds

hive (yinzhengjie)>

>.Load data into the external tables

hive (yinzhengjie)> load data local inpath '/home/yinzhengjie/download/dept.dat' into table yinzhengjie.dept;

Loading data to table yinzhengjie.dept

OK

Time taken: 0.222 seconds

hive (yinzhengjie)>

hive (yinzhengjie)> select * from dept; #after the load succeeds, check that the dept table contains data

OK

dept.deptno dept.dname dept.loc

ACCOUNTING

RESEARCH

SALES

OPERATIONS

Time taken: 0.088 seconds, Fetched: row(s)

hive (yinzhengjie)>

hive (yinzhengjie)> load data local inpath '/home/yinzhengjie/download/emp.dat' into table yinzhengjie.emp;

Loading data to table yinzhengjie.emp

OK

Time taken: 0.21 seconds

hive (yinzhengjie)>

hive (yinzhengjie)> select * from emp; #after the load succeeds, check that the emp table contains data

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

SMITH CLERK -- 800.0 NULL

ALLEN SALESMAN -- 1600.0 300.0

WARD SALESMAN -- 1250.0 500.0

JONES MANAGER -- 2975.0 NULL

MARTIN SALESMAN -- 1250.0 1400.0

BLAKE MANAGER -- 2850.0 NULL

CLARK MANAGER -- 2450.0 NULL

SCOTT ANALYST -- 3000.0 NULL

KING PRESIDENT NULL -- 5000.0 NULL

TURNER SALESMAN -- 1500.0 0.0

ADAMS CLERK -- 1100.0 NULL

JAMES CLERK -- 950.0 NULL

FORD ANALYST -- 3000.0 NULL

MILLER CLERK -- 1300.0 NULL

Time taken: 0.079 seconds, Fetched: row(s)

hive (yinzhengjie)>

>.Check the table types

hive (yinzhengjie)> desc formatted dept; #show the formatted description of the dept table

OK

col_name data_type comment

# col_name data_type comment

deptno int

dname string

loc int

# Detailed Table Information

Database: yinzhengjie

Owner: yinzhengjie

CreateTime: Mon Aug :: PDT

LastAccessTime: UNKNOWN

Retention:

Location: hdfs://mycluster/user/hive/warehouse/yinzhengjie.db/dept

Table Type: EXTERNAL_TABLE #Duang~ Readers, look here: the dept table's type is external!

Table Parameters:

EXTERNAL TRUE

numFiles

numRows

rawDataSize

totalSize

transient_lastDdlTime

# Storage Information

SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

InputFormat: org.apache.hadoop.mapred.TextInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Compressed: No

Num Buckets: -

Bucket Columns: []

Sort Columns: []

Storage Desc Params:

field.delim \t

serialization.format \t

Time taken: 0.036 seconds, Fetched: row(s)

hive (yinzhengjie)> desc formatted emp; #show the formatted description of the emp table

OK

col_name data_type comment

# col_name data_type comment

empno int

ename string

job string

mgr int

hiredate string

sal double

comm double

deptno int

# Detailed Table Information

Database: yinzhengjie

Owner: yinzhengjie

CreateTime: Mon Aug :: PDT

LastAccessTime: UNKNOWN

Retention:

Location: hdfs://mycluster/user/hive/warehouse/yinzhengjie.db/emp

Table Type: EXTERNAL_TABLE #Duang~ Readers, look here: the emp table's type is external!

Table Parameters:

EXTERNAL TRUE

numFiles

numRows

rawDataSize

totalSize

transient_lastDdlTime

# Storage Information

SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

InputFormat: org.apache.hadoop.mapred.TextInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Compressed: No

Num Buckets: -

Bucket Columns: []

Sort Columns: []

Storage Desc Params:

field.delim \t

serialization.format \t

Time taken: 0.036 seconds, Fetched: row(s)

hive (yinzhengjie)>

>.Dropping an external table in Hive does not delete the actual data on HDFS

hive (yinzhengjie)> show tables;

OK

tab_name

dept

emp

student

teacher

teacherbak

teachercopy

Time taken: 0.014 seconds, Fetched: row(s)

hive (yinzhengjie)> drop table dept;

OK

Time taken: 0.122 seconds

hive (yinzhengjie)> drop table emp;

OK

Time taken: 0.079 seconds

hive (yinzhengjie)> show tables; #the table metadata is gone, but the actual data is not; we can check it from inside Hive with the dfs command

OK

tab_name

student

teacher

teacherbak

teachercopy

Time taken: 0.013 seconds, Fetched: row(s)

hive (yinzhengjie)>

hive (yinzhengjie)> dfs -cat /user/hive/warehouse/yinzhengjie.db/dept/dept.dat; #See? The file on HDFS is still there. Hive only deleted the metadata.

ACCOUNTING

RESEARCH

SALES

OPERATIONS

hive (yinzhengjie)>

> dfs -cat /user/hive/warehouse/yinzhengjie.db/emp/emp.dat; #See? The file on HDFS is still there. Hive only deleted the metadata.

SMITH CLERK -- 800.00

ALLEN SALESMAN -- 1600.00 300.00

WARD SALESMAN -- 1250.00 500.00

JONES MANAGER -- 2975.00

MARTIN SALESMAN -- 1250.00 1400.00

BLAKE MANAGER -- 2850.00

CLARK MANAGER -- 2450.00

SCOTT ANALYST -- 3000.00

KING PRESIDENT -- 5000.00

TURNER SALESMAN -- 1500.00 0.00

ADAMS CLERK -- 1100.00

JAMES CLERK -- 950.00

FORD ANALYST -- 3000.00

MILLER CLERK -- 1300.00

hive (yinzhengjie)>

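External tables hands-on: create the department and employee external tables and load data into them.

Characteristics of partitioned tables:

>.A partitioned table corresponds to separate folders on HDFS; each folder holds all the data files of that partition.

>.A partition in Hive is simply a subdirectory on HDFS; it splits a large dataset into smaller ones according to business needs.

>.At query time, the expression in the WHERE clause selects only the partitions that are needed, which makes queries much more efficient.

[yinzhengjie@s101 download]$ cat users.txt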
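The behaviour in this exercise can be summarized as a toy model: DROP TABLE always removes the metadata, but removes the files under the table's location only for managed tables (in real Hive the files may be moved to the HDFS trash instead, depending on configuration). The dicts standing in for the metastore and HDFS, and the `TableMeta`/`drop_table` names, are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class TableMeta:
    location: str   # table directory on HDFS
    external: bool  # True for CREATE EXTERNAL TABLE


def drop_table(metastore: Dict[str, TableMeta], hdfs: Dict[str, str], name: str) -> None:
    """Toy DROP TABLE: `hdfs` maps file paths to file contents."""
    meta = metastore.pop(name)        # the metadata is always removed
    if meta.external:
        return                        # external table: files stay where they are
    prefix = meta.location.rstrip("/") + "/"
    for path in [p for p in hdfs if p.startswith(prefix)]:
        del hdfs[path]                # managed table: the data goes as well
```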

yinzhengjie

Guido van Rossum

Martin Odersky

Rasmus Lerdorf

[yinzhengjie@s101 download]$

[yinzhengjie@s101 download]$ cat dept.txt

开发部门

运维部门

测试部门

产品部门

销售部门

财务部门

人事部门

[yinzhengjie@s101 download]$

Summary of partitioned-table characteristics, and the contents of the test data files "dept.txt" and "users.txt"
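Since each partition is just a subdirectory, its path can be derived from the partition spec: every partition column adds one `key=value` directory level, in PARTITIONED BY order. A minimal Python sketch (hypothetical helper; it skips the escaping Hive applies to special characters in partition values):

```python
import posixpath
from typing import Dict, List


def partition_path(table_dir: str, spec: Dict[str, str], keys: List[str]) -> str:
    """Build the directory of one partition.

    `keys` lists the partition columns in PARTITIONED BY order; each one
    becomes a key=value level below the table directory.
    """
    missing = [k for k in keys if k not in spec]
    if missing:
        raise ValueError(f"missing partition values for: {missing}")
    levels = [f"{k}={spec[k]}" for k in keys]
    return posixpath.join(table_dir, *levels)
```

For a single-level table such as `dept_partition` this yields `.../dept_partition/month=201803`; for a two-level table partitioned by `(province string, city string)` it yields `.../province=hebei/city=shijiazhuang`.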

hive (yinzhengjie)> show tables;

OK

tab_name

Time taken: 0.038 seconds

hive (yinzhengjie)> create table dept_partition(

> deptno int,

> dname string,

> loc string

> )

> partitioned by (month string)

> row format delimited fields terminated by '\t';

OK

Time taken: 0.262 seconds

hive (yinzhengjie)>

hive (yinzhengjie)> show tables;

OK

tab_name

dept_partition

Time taken: 0.035 seconds, Fetched: row(s)

hive (yinzhengjie)>

Partitioned tables: syntax for creating a partitioned table (hive (yinzhengjie)> create table dept_partition(deptno int,dname string,loc string) partitioned by (month string) row format delimited fields terminated by '\t';)

hive (yinzhengjie)> show tables;

OK

tab_name

dept_partition

raw_logs

student

teacher

teacherbak

teachercopy

Time taken: 0.016 seconds, Fetched: 6 row(s)

hive (yinzhengjie)> load data local inpath '/home/yinzhengjie/download/dept.txt' into table yinzhengjie.dept_partition partition(month='201803'); #load data into the specified partition

Loading data to table yinzhengjie.dept_partition partition (month=201803)

OK

Time taken: 0.609 seconds

hive (yinzhengjie)> load data local inpath '/home/yinzhengjie/download/dept.txt' into table yinzhengjie.dept_partition partition(month='201804');

Loading data to table yinzhengjie.dept_partition partition (month=201804)

OK

Time taken: 0.868 seconds

hive (yinzhengjie)> load data local inpath '/home/yinzhengjie/download/dept.txt' into table yinzhengjie.dept_partition partition(month='201805');

Loading data to table yinzhengjie.dept_partition partition (month=201805)

OK

Time taken: 0.462 seconds

hive (yinzhengjie)> select * from dept_partition;

OK

dept_partition.deptno dept_partition.dname dept_partition.loc dept_partition.month

10 开发部门 20000 201803

20 运维部门 13000 201803

30 测试部门 8000 201803

40 产品部门 6000 201803

50 销售部门 15000 201803

60 财务部门 17000 201803

70 人事部门 16000 201803

10 开发部门 20000 201804

20 运维部门 13000 201804

30 测试部门 8000 201804

40 产品部门 6000 201804

50 销售部门 15000 201804

60 财务部门 17000 201804

70 人事部门 16000 201804

10 开发部门 20000 201805

20 运维部门 13000 201805

30 测试部门 8000 201805

40 产品部门 6000 201805

50 销售部门 15000 201805

60 财务部门 17000 201805

70 人事部门 16000 201805

Time taken: 0.129 seconds, Fetched: 21 row(s)

hive (yinzhengjie)> select * from dept_partition where month='201805';

OK

dept_partition.deptno dept_partition.dname dept_partition.loc dept_partition.month

10 开发部门 20000 201805

20 运维部门 13000 201805

30 测试部门 8000 201805

40 产品部门 6000 201805

50 销售部门 15000 201805

60 财务部门 17000 201805

70 人事部门 16000 201805

Time taken: 1.017 seconds, Fetched: 7 row(s)

hive (yinzhengjie)>

Partitioned tables: loading data into a specified partition (hive (yinzhengjie)> load data local inpath '/home/yinzhengjie/download/dept.txt' into table yinzhengjie.dept_partition partition(month='201805');)

hive (yinzhengjie)> show partitions dept_partition;

OK

partition

month=201803

month=201804

month=201805

Time taken: 0.563 seconds, Fetched: 3 row(s)

hive (yinzhengjie)>

Partitioned tables: listing the existing partitions of a partitioned table (hive (yinzhengjie)> show partitions dept_partition;)

hive (yinzhengjie)> select * from dept_partition where month='201805'; #single-partition query
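SHOW PARTITIONS prints one `key=value` path per partition, with levels separated by `/` for multi-level partitions (for example `province=hebei/city=shijiazhuang`). Below is a hypothetical Python parser for that output. It assumes the output was captured with `hive -S`, so the `OK`/`Time taken` lines are absent; the `partition` header printed when `hive.cli.print.header=true` is skipped.

```python
from typing import Dict, List


def parse_partition_name(name: str) -> Dict[str, str]:
    """Turn 'province=hebei/city=shijiazhuang' into {'province': ..., 'city': ...}."""
    spec = {}
    for level in name.split("/"):
        key, sep, value = level.partition("=")
        if not sep:
            raise ValueError(f"not a key=value partition level: {level!r}")
        spec[key] = value
    return spec


def parse_show_partitions(output: str) -> List[Dict[str, str]]:
    """Parse SHOW PARTITIONS output, skipping blank lines and the header."""
    rows = []
    for line in output.splitlines():
        line = line.strip()
        if not line or line == "partition":
            continue
        rows.append(parse_partition_name(line))
    return rows
```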

OK

dept_partition.deptno dept_partition.dname dept_partition.loc dept_partition.month

10 开发部门 20000 201805

20 运维部门 13000 201805

30 测试部门 8000 201805

40 产品部门 6000 201805

50 销售部门 15000 201805

60 财务部门 17000 201805

70 人事部门 16000 201805

Time taken: 1.017 seconds, Fetched: 7 row(s)

hive (yinzhengjie)>

hive (yinzhengjie)> select * from dept_partition where month='201803'

> union

> select * from dept_partition where month='201804'

> union

> select * from dept_partition where month='201805'; #multi-partition UNION query; you will find it is even slower than select * from dept_partition;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = yinzhengjie_20180808214447_1a70bd61-3355-4f99-ba74-de7503593798

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1533789743141_0001, Tracking URL = http://s101:8088/proxy/application_1533789743141_0001/

Kill Command = /soft/hadoop-2.7.3/bin/hadoop job -kill job_1533789743141_0001

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 1

2018-08-08 21:45:46,855 Stage-1 map = 0%, reduce = 0%

2018-08-08 21:46:32,103 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 6.11 sec

2018-08-08 21:47:09,769 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 8.95 sec

MapReduce Total cumulative CPU time: 8 seconds 950 msec

Ended Job = job_1533789743141_0001

Launching Job 2 out of 2

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1533789743141_0002, Tracking URL = http://s101:8088/proxy/application_1533789743141_0002/

Kill Command = /soft/hadoop-2.7.3/bin/hadoop job -kill job_1533789743141_0002

Hadoop job information for Stage-2: number of mappers: 2; number of reducers: 1

2018-08-08 21:47:41,300 Stage-2 map = 0%, reduce = 0%

2018-08-08 21:48:41,349 Stage-2 map = 0%, reduce = 0%, Cumulative CPU 5.88 sec

2018-08-08 21:48:42,776 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 7.33 sec

2018-08-08 21:49:23,133 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 10.41 sec

MapReduce Total cumulative CPU time: 10 seconds 410 msec

Ended Job = job_1533789743141_0002

MapReduce Jobs Launched:

Stage-Stage-1: Map: 2 Reduce: 1 Cumulative CPU: 8.95 sec HDFS Read: 17348 HDFS Write: 708 SUCCESS

Stage-Stage-2: Map: 2 Reduce: 1 Cumulative CPU: 10.41 sec HDFS Read: 17496 HDFS Write: 1194 SUCCESS

Total MapReduce CPU Time Spent: 19 seconds 360 msec

OK

u3.deptno u3.dname u3.loc u3.month

10 开发部门 20000 201803

10 开发部门 20000 201804

10 开发部门 20000 201805

20 运维部门 13000 201803

20 运维部门 13000 201804

20 运维部门 13000 201805

30 测试部门 8000 201803

30 测试部门 8000 201804

30 测试部门 8000 201805

40 产品部门 6000 201803

40 产品部门 6000 201804

40 产品部门 6000 201805

50 销售部门 15000 201803

50 销售部门 15000 201804

50 销售部门 15000 201805

60 财务部门 17000 201803

60 财务部门 17000 201804

60 财务部门 17000 201805

70 人事部门 16000 201803

70 人事部门 16000 201804

70 人事部门 16000 201805

Time taken: 278.849 seconds, Fetched: 21 row(s)

hive (yinzhengjie)>

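Partitioned tables: single-partition query and multi-partition UNION query (hive (yinzhengjie)> select * from dept_partition where month='201803' union select * from dept_partition where month='201804' union select * from dept_partition where month='201805'; ). The UNION version took 278.849 seconds, versus 0.129 seconds for select * from dept_partition, because it launched two MapReduce jobs.

hive (yinzhengjie)> show partitions dept_partition; #list the partitions that already exist in the table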
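Why the UNION is so slow: since Hive 1.2.0 a bare `UNION` means `UNION DISTINCT`, so the rows must be deduplicated, which is why the log shows MapReduce jobs with reducers. A single query such as `select * from dept_partition where month in ('201803','201804','201805');` reads the same three partitions without that deduplication step. Whether it then runs as a simple fetch without MapReduce depends on settings such as hive.fetch.task.conversion. The Python sketch below only illustrates the pruning idea (a WHERE clause on partition columns decides which directories are read at all); the helper names are hypothetical.

```python
from typing import Callable, Dict, List

Spec = Dict[str, str]  # partition column -> value, e.g. {"month": "201803"}


def prune_partitions(partitions: List[Spec],
                     predicate: Callable[[Spec], bool]) -> List[Spec]:
    """Keep only the partitions whose values satisfy the predicate.

    Files in partitions that are filtered out here are never opened.
    """
    return [spec for spec in partitions if predicate(spec)]


def month_in(*months: str) -> Callable[[Spec], bool]:
    """Predicate equivalent to WHERE month IN (...)."""
    wanted = set(months)
    return lambda spec: spec.get("month") in wanted
```

Because `month` is a `string` column holding `yyyyMM` values, range predicates such as `month >= '201805'` also compare correctly as strings.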

OK

partition

month=201803

month=201804

month=201805

Time taken: 0.563 seconds, Fetched: 3 row(s)

hive (yinzhengjie)> ALTER TABLE dept_partition ADD PARTITION(month='201806'); #add a single partition

OK

Time taken: 0.562 seconds

hive (yinzhengjie)> show partitions dept_partition;

OK

partition

month=201803

month=201804

month=201805

month=201806

Time taken: 0.096 seconds, Fetched: 4 row(s)

hive (yinzhengjie)> ALTER TABLE dept_partition ADD PARTITION(month='201807') PARTITION(month='201808') PARTITION(month='201809'); #add several partitions at once (partition specs separated by spaces)

OK

Time taken: 0.22 seconds

hive (yinzhengjie)> show partitions dept_partition;

OK

partition

month=201803

month=201804

month=201805

month=201806

month=201807

month=201808

month=201809

Time taken: 0.097 seconds, Fetched: 7 row(s)

hive (yinzhengjie)>

Partitioned tables: adding one partition and adding several partitions at once (hive (yinzhengjie)> ALTER TABLE dept_partition ADD PARTITION(month='201807') PARTITION(month='201808') PARTITION(month='201809');)
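When partitions follow the calendar, it can be convenient to generate the ADD PARTITION statement instead of typing it. Below is a hypothetical Python helper that lists consecutive `yyyyMM` values (including across a year boundary) and builds one `ALTER TABLE ... ADD` statement with space-separated partition specs. Values are not escaped, so use it only with trusted input.

```python
from typing import List


def month_range(start: str, end: str) -> List[str]:
    """Inclusive list of yyyyMM strings from start to end."""
    year, month = int(start[:4]), int(start[4:])
    end_year, end_month = int(end[:4]), int(end[4:])
    months = []
    while (year, month) <= (end_year, end_month):
        months.append(f"{year:04d}{month:02d}")
        month += 1
        if month == 13:  # roll over into the next year
            year, month = year + 1, 1
    return months


def add_partitions_sql(table: str, column: str, values: List[str]) -> str:
    """One ALTER TABLE ... ADD statement; specs are separated by spaces."""
    specs = " ".join(f"PARTITION({column}='{v}')" for v in values)
    return f"ALTER TABLE {table} ADD {specs};"
```

For example, `add_partitions_sql("dept_partition", "month", month_range("201807", "201809"))` reproduces the statement used above.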

hive (yinzhengjie)>

hive (yinzhengjie)> show partitions dept_partition; #list the current partitions

OK

partition

month=201803

month=201804

month=201805

month=201806

month=201807

month=201808

month=201809

Time taken: 0.114 seconds, Fetched: 7 row(s)

hive (yinzhengjie)> ALTER TABLE dept_partition DROP PARTITION(month='201807'); #drop a single partition

Dropped the partition month=201807

OK

Time taken: 0.893 seconds

hive (yinzhengjie)> show partitions dept_partition;

OK

partition

month=201803

month=201804

month=201805

month=201806

month=201808

month=201809

Time taken: 0.083 seconds, Fetched: 6 row(s)

hive (yinzhengjie)> ALTER TABLE dept_partition DROP PARTITION(month='201808'),PARTITION(month='201809'); #drop several partitions at once (partition specs separated by commas)

Dropped the partition month=201808

Dropped the partition month=201809

OK

Time taken: 0.364 seconds

hive (yinzhengjie)> show partitions dept_partition;

OK

partition

month=201803

month=201804

month=201805

month=201806

Time taken: 0.104 seconds, Fetched: 4 row(s)

hive (yinzhengjie)>

Partitioned tables: dropping one partition and dropping several partitions at once (hive (yinzhengjie)> ALTER TABLE dept_partition DROP PARTITION(month='201808'),PARTITION(month='201809');)
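Note the separator difference: ADD lists partition specs separated by spaces, while DROP separates them with commas. Below is a hypothetical Python helper that builds the DROP form. It can optionally add `IF EXISTS`, so that dropping a partition that does not exist is not an error. Values are not escaped, so use it only with trusted input.

```python
from typing import List


def drop_partitions_sql(table: str, column: str, values: List[str],
                        if_exists: bool = False) -> str:
    """One ALTER TABLE ... DROP statement; specs are separated by commas."""
    specs = ", ".join(f"PARTITION({column}='{v}')" for v in values)
    clause = "DROP IF EXISTS" if if_exists else "DROP"
    return f"ALTER TABLE {table} {clause} {specs};"
```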

hive (yinzhengjie)> DESC FORMATTED dept_partition;

OK

col_name data_type comment

# col_name data_type comment

deptno int

dname string

loc string

# Partition Information #the partition details are shown here

# col_name data_type comment

month string

# Detailed Table Information

Database: yinzhengjie

Owner: yinzhengjie

CreateTime: Wed Aug 08 21:08:14 PDT 2018

LastAccessTime: UNKNOWN

Retention: 0

Location: hdfs://mycluster/user/hive/warehouse/yinzhengjie.db/dept_partition

Table Type: MANAGED_TABLE

Table Parameters:

transient_lastDdlTime 1533787694

# Storage Information

SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

InputFormat: org.apache.hadoop.mapred.TextInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Compressed: No

Num Buckets: -1

Bucket Columns: []

Sort Columns: []

Storage Desc Params:

field.delim \t

serialization.format \t

Time taken: 1.813 seconds, Fetched: 33 row(s)

hive (yinzhengjie)>

Partitioned table - viewing the structure of a partitioned table (hive (yinzhengjie)> DESC FORMATTED dept_partition;)
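In the DESC FORMATTED output, the partition column (month) appears in its own `# Partition Information` section, separate from the ordinary columns. The `Location` line gives the table's HDFS directory. If a script needs these values, a minimal Python sketch like the one below can pull them out of the captured text. The function names are hypothetical. The sketch assumes the whitespace-separated layout shown above, and other Hive versions may format this output differently.

```python
SAMPLE = """\
# col_name data_type comment
deptno int
dname string
loc string

# Partition Information
# col_name data_type comment
month string

# Detailed Table Information
Database: yinzhengjie
Location: hdfs://mycluster/user/hive/warehouse/yinzhengjie.db/dept_partition
"""


def partition_columns(desc_output):
    """Return [(name, type), ...] listed under '# Partition Information'."""
    columns, in_section = [], False
    for raw in desc_output.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("# Partition Information"):
            in_section = True
            continue
        if in_section:
            if line.startswith("# col_name"):  # repeated column header inside the section
                continue
            if line.startswith("#"):  # the next section header ends the partition block
                break
            name, data_type = line.split()[:2]
            columns.append((name, data_type))
    return columns


def table_location(desc_output):
    """Return the value of the 'Location:' line, or None if it is absent."""
    for raw in desc_output.splitlines():
        line = raw.strip()
        if line.startswith("Location:"):
            parts = line.split(None, 1)
            return parts[1] if len(parts) > 1 else ""
    return None


if __name__ == "__main__":
    print(partition_columns(SAMPLE))
    print(table_location(SAMPLE))
```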

hive (yinzhengjie)> create table users (

> id int,

> name string,

> age int

> )

> partitioned by (province string, city string)

> row format delimited fields terminated by '\t';

OK

Time taken: 1.046 seconds

hive (yinzhengjie)> show tables;

OK

tab_name

dept_partition

raw_logs

student

teacher

teacherbak

teachercopy

users

Time taken: 0.26 seconds, Fetched: 7 row(s)

hive (yinzhengjie)>

Partitioned table - syntax for creating a two-level partitioned table (hive (yinzhengjie)> create table users (id int,name string, age int) partitioned by (province string, city string) row format delimited fields terminated by '\t';)
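With more than one partition column, each level becomes a nested `key=value` subdirectory under the table's directory. The nesting follows the order given in PARTITIONED BY, so `province` is the outer directory and `city` the inner one. The minimal Python sketch below shows that path layout. The function partition_path is hypothetical. It assumes partition values contain only plain letters and digits, so it ignores any escaping Hive may apply to special characters.

```python
def partition_path(table_location, partition_cols, values):
    """Return the directory of one partition, e.g. <table>/province=hebei/city=handan.

    partition_cols must follow the PARTITIONED BY order; values maps column -> value.
    """
    missing = [c for c in partition_cols if c not in values]
    if missing:
        raise ValueError(f"missing values for partition columns: {missing}")
    levels = [f"{c}={values[c]}" for c in partition_cols]
    return "/".join([table_location.rstrip("/")] + levels)


if __name__ == "__main__":
    print(partition_path("/user/hive/warehouse/yinzhengjie.db/users",
                         ["province", "city"],
                         {"province": "hebei", "city": "shijiazhuang"}))
```

The same layout explains the `dfs -mkdir -p .../users/province=hebei/city=handan` path used later to create a partition directory by hand.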

hive (yinzhengjie)> create table users (id int,name string, age int) partitioned by (province string, city string) row format delimited fields terminated by '\t'; #create a two-level partitioned table

OK

Time taken: 0.071 seconds

hive (yinzhengjie)> load data local inpath '/home/yinzhengjie/download/users.txt' into table users partition(province='hebei',city='shijiazhuang'); #load data into the newly created two-level partition

Loading data to table yinzhengjie.users partition (province=hebei, city=shijiazhuang)

OK

Time taken: 0.482 seconds

hive (yinzhengjie)> load data local inpath '/home/yinzhengjie/download/users.txt' into table users partition(province='shanxi',city='xian');

Loading data to table yinzhengjie.users partition (province=shanxi, city=xian)

OK

Time taken: 0.414 seconds

hive (yinzhengjie)> select * from users;

OK

users.id users.name users.age users.province users.city

1 yinzhengjie 26 hebei shijiazhuang

2 Guido van Rossum 62 hebei shijiazhuang

3 Martin Odersky 60 hebei shijiazhuang

4 Rasmus Lerdorf 50 hebei shijiazhuang

1 yinzhengjie 26 shanxi xian

2 Guido van Rossum 62 shanxi xian

3 Martin Odersky 60 shanxi xian

4 Rasmus Lerdorf 50 shanxi xian

Time taken: 0.101 seconds, Fetched: 8 row(s)

hive (yinzhengjie)> select * from users where province='hebei'; #query only the data in partition province='hebei'

OK

users.id users.name users.age users.province users.city

1 yinzhengjie 26 hebei shijiazhuang

2 Guido van Rossum 62 hebei shijiazhuang

3 Martin Odersky 60 hebei shijiazhuang

4 Rasmus Lerdorf 50 hebei shijiazhuang

Time taken: 1.775 seconds, Fetched: 4 row(s)

hive (yinzhengjie)>

Partitioned table - loading data into a two-level partitioned table (hive (yinzhengjie)> load data local inpath '/home/yinzhengjie/download/users.txt' into table users partition(province='hebei',city='shijiazhuang');)
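Each partition is a separate directory. A WHERE clause on partition columns such as `province='hebei'` therefore lets Hive skip the directories of the other partitions (partition pruning) instead of scanning the whole table. The toy Python model below shows which of the two loaded partitions such a filter keeps. The functions prune and as_dir are hypothetical, and the model handles only equality filters. Hive's real query planner is far more general.

```python
def prune(partitions, equals):
    """Keep the partitions whose values match every column=value pair in `equals`."""
    return [p for p in partitions if all(p.get(col) == val for col, val in equals.items())]


def as_dir(spec, partition_cols):
    """Render a partition spec as its relative directory, e.g. province=hebei/city=xian."""
    return "/".join(f"{c}={spec[c]}" for c in partition_cols)


if __name__ == "__main__":
    cols = ["province", "city"]
    loaded = [
        {"province": "hebei", "city": "shijiazhuang"},
        {"province": "shanxi", "city": "xian"},
    ]
    for spec in prune(loaded, {"province": "hebei"}):
        print(as_dir(spec, cols))  # in this model, only the hebei directory is read
```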

hive (yinzhengjie)> dfs -mkdir -p /user/hive/warehouse/yinzhengjie.db/users/province=hebei/city=handan; #create the directory on HDFS

hive (yinzhengjie)>

hive (yinzhengjie)> dfs -put /home/yinzhengjie/download/users.txt /user/hive/warehouse/yinzhengjie.db/users/province=hebei/city=handan; #upload the local file to HDFS

hive (yinzhengjie)>

hive (yinzhengjie)> select * from users where province='hebei' and city='handan'; #as expected, the query returns no data

OK

users.id users.name users.age users.province users.city

Time taken: 0.304 seconds

hive (yinzhengjie)>

hive (yinzhengjie)> msck repair table users; #run the repair command manually

OK

Partitions not in metastore: users:province=hebei/city=handan

Repair: Added partition to metastore users:province=hebei/city=handan

Time taken: 0.487 seconds, Fetched: 2 row(s)

hive (yinzhengjie)> select * from users where province='hebei' and city='handan'; #query again; the data is now returned

OK

users.id users.name users.age users.province users.city

1 yinzhengjie 26 hebei handan

2 Guido van Rossum 62 hebei handan

3 Martin Odersky 60 hebei handan

4 Rasmus Lerdorf 50 hebei handan

Time taken: 0.156 seconds, Fetched: 4 row(s)

hive (yinzhengjie)>

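Partitioned table - uploading data directly into a partition directory and associating it with the partitioned table, method 1: repair after uploading (hive (yinzhengjie)> msck repair table users;)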
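MSCK REPAIR TABLE fixes the mismatch in two steps. It compares the partition directories that exist under the table's location with the partitions registered in the metastore, then adds the missing ones. The `Partitions not in metastore` line above reports the result of that comparison. The Python sketch below models only the comparison step and is not Hive's implementation. The function names are hypothetical, and in-memory lists stand in for HDFS and the metastore.

```python
def is_partition_dir(rel_path, partition_cols):
    """True if rel_path has the form col1=v1/col2=v2 with columns in PARTITIONED BY order."""
    levels = rel_path.strip("/").split("/")
    if len(levels) != len(partition_cols):
        return False
    for level, col in zip(levels, partition_cols):
        key, sep, value = level.partition("=")
        if not sep or key != col or not value:
            return False
    return True


def partitions_not_in_metastore(table, partition_cols, fs_dirs, metastore_dirs):
    """List table:dir entries for partition directories the metastore does not know about."""
    known = set(metastore_dirs)
    return [
        f"{table}:{d}"
        for d in sorted(fs_dirs)
        if is_partition_dir(d, partition_cols) and d not in known
    ]


if __name__ == "__main__":
    on_hdfs = [
        "province=hebei/city=shijiazhuang",
        "province=shanxi/city=xian",
        "province=hebei/city=handan",  # created with dfs -mkdir / dfs -put
    ]
    registered = ["province=hebei/city=shijiazhuang", "province=shanxi/city=xian"]
    print(partitions_not_in_metastore("users", ["province", "city"], on_hdfs, registered))
```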

hive (yinzhengjie)> dfs -mkdir -p /user/hive/warehouse/yinzhengjie.db/users/province=shanxi/city=ankang;

hive (yinzhengjie)> dfs -put /home/yinzhengjie/download/users.txt /user/hive/warehouse/yinzhengjie.db/users/province=shanxi/city=ankang;

hive (yinzhengjie)> select * from users where province='shanxi' and city='ankang'; #query the data; nothing is returned at this point

OK

users.id users.name users.age users.province users.city

Time taken: 0.112 seconds

hive (yinzhengjie)>

hive (yinzhengjie)> ALTER TABLE users add partition(province='shanxi',city='ankang'); #add the partition after uploading the data

OK

Time taken: 0.14 seconds