[k8s] nginx-ingress configuration: layer 4 and layer 7 tests

Basic principles

The default-backend provides two things:

1. A 404 error page
2. A healthz page

# Any image is permissible as long as:
# 1. It serves a 404 page at /
# 2. It serves 200 on a /healthz endpoint

Create a Service that maps port 80, as seen by clients, to port 8080 in the container.

Deployment + Service:

kubectl create -f default-backend.yaml

Setting up nginx-ingress

Reference: https://github.com/kubernetes/ingress-nginx/blob/master/deploy/README.md

curl https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/namespace.yaml \
| kubectl apply -f -

curl https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/default-backend.yaml \
| kubectl apply -f -

curl https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/configmap.yaml \
| kubectl apply -f -

curl https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/tcp-services-configmap.yaml \
| kubectl apply -f -

curl https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/udp-services-configmap.yaml \
| kubectl apply -f -

curl https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/without-rbac.yaml \
| kubectl apply -f -
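The six commands above differ only in the manifest name, so they can be collapsed into a loop. The following is a minimal sketch and not part of the original setup: the function names `manifest_urls` and `apply_all` and the `DRY_RUN` switch are made up for illustration, and the URLs reflect the repository layout used in this post, which may have changed on master since.

```shell
#!/bin/sh
# Base URL taken from the curl commands in this post (0.9.0-era layout).
BASE=https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy

# Print the manifest URLs in the order they should be applied.
manifest_urls() {
  for name in namespace default-backend configmap \
              tcp-services-configmap udp-services-configmap without-rbac; do
    printf '%s/%s.yaml\n' "$BASE" "$name"
  done
}

# Apply every manifest; with DRY_RUN=1 only print the commands.
apply_all() {
  manifest_urls | while read -r url; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "kubectl apply -f $url"
    else
      kubectl apply -f "$url"
    fi
  done
}
```

Running `DRY_RUN=1 apply_all` first shows what would be applied; a plain `apply_all` then performs the installation.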

Default images

quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.9.0
gcr.io/google_containers/defaultbackend:1.4

If gcr.io cannot be reached, pull a mirrored copy of the default backend and retag it:

docker pull lanny/gcr.io_google_containers_defaultbackend_1.4:v1.4
docker tag lanny/gcr.io_google_containers_defaultbackend_1.4:v1.4 gcr.io/google_containers/defaultbackend:1.4

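The ingress YAML files

Main changes:

- The status page is served on port 18080 (determined by the ingress controller's nginx.conf):

http://192.168.x.x:18080/nginx_status

- The ingress controller needs hostNetwork: true, so that port 80 and any other ports opened by the controller's nginx.conf are exposed directly on the node.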

namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx

default-backend.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: default-http-backend
  labels:
    app: default-http-backend
  namespace: ingress-nginx
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: default-http-backend
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: default-http-backend
        # Any image is permissible as long as:
        # 1. It serves a 404 page at /
        # 2. It serves 200 on a /healthz endpoint
        image: gcr.io/google_containers/defaultbackend:1.4
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi
---
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
  namespace: ingress-nginx
  labels:
    app: default-http-backend
spec:
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app: default-http-backend

without-rbac.yaml

The upstream version of this YAML has since been updated and now also runs

sysctl -w net.core.somaxconn=32768; sysctl -w net.ipv4.ip_local_port_range="1024 65535"

The copy below predates that change.

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ingress-nginx
  template:
    metadata:
      labels:
        app: ingress-nginx
      annotations:
        prometheus.io/port: '10254'
        prometheus.io/scrape: 'true'
    spec:
      hostNetwork: true
      containers:
      - name: nginx-ingress-controller
        image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.9.0
        args:
        - /nginx-ingress-controller
        - --default-backend-service=$(POD_NAMESPACE)/default-http-backend
        - --configmap=$(POD_NAMESPACE)/nginx-configuration
        - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
        - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
        - --annotations-prefix=nginx.ingress.kubernetes.io
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        ports:
        - name: http
          containerPort: 80
        - name: https
          containerPort: 443
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1

In essence, the ingress controller is the /nginx-ingress-controller binary plus its startup arguments:

args:
- /nginx-ingress-controller
- --default-backend-service=$(POD_NAMESPACE)/default-http-backend
- --configmap=$(POD_NAMESPACE)/nginx-configuration
- --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
- --udp-services-configmap=$(POD_NAMESPACE)/udp-services
- --annotations-prefix=nginx.ingress.kubernetes.io

tcp-services-configmap.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx

udp-services-configmap.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx

Starting nginx to test the ingress's HTTP (layer 7) load balancing

kubectl run --image=nginx nginx --replicas=2
kubectl expose deployment nginx --port=80 ## this is the Service port; by default it is the same as the container port

nginx-ingress.conf

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: app-nginx-ingress
  namespace: default
spec:
  rules:
  - host: mynginx.maotai.com
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx
          servicePort: 80

Note: the Ingress references a Service, so the request path looks like client -> nginx -> svc -> pod. In fact the ingress controller watches the Service and writes the Pod IPs behind it into nginx.conf, so the real path is client -> nginx -> pod.
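One way to check this is to compare the Service's endpoints with the server lines the controller wrote into nginx.conf. The helper below is only a sketch: `upstream_servers` is a hypothetical name, and it assumes upstream blocks laid out the way the nginx.conf dumps in this post show them (one directive per line).

```shell
#!/bin/sh
# Read an nginx.conf on stdin and print the "ip:port" of every
# server inside the upstream block whose name is given as $1.
upstream_servers() {
  awk -v name="$1" '
    $1 == "upstream" && $2 == name { inside = 1; next }  # block starts
    inside && $1 == "}"            { inside = 0 }        # block ends
    inside && $1 == "server"       { sub(/;$/, "", $2); print $2 }
  '
}
```

For example, `kubectl -n ingress-nginx exec <controller-pod> -- cat /etc/nginx/nginx.conf | upstream_servers <upstream-name>` should list the same addresses as `kubectl get endpoints nginx`. The pod name and upstream name are placeholders to look up in your own cluster.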

Testing layer 4 load balancing

Reference: https://github.com/kubernetes/ingress-nginx/blob/master/docs/user-guide/exposing-tcp-udp-services.md

udp-services-configmap.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
data:
  53: "kube-system/kube-dns:53"

After editing, just apply it; nginx-ingress picks up the change without a restart.

kubectl apply -f udp-services-configmap.yaml

$ host -t A nginx.default.svc.cluster.local 192.168.14.132
Using domain server:
Name: 192.168.x.x
Address: 192.168.x.x#53
Aliases:

nginx.default.svc.cluster.local has address 10.254.160.155

Another example, this time for TCP (TCP entries belong in the tcp-services ConfigMap)

kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
data:
  2200: "default/gitlab:22"
  3306: "kube-public/mysql:3306"
  2202: "kube-public/centos:22"
  2203: "kube-public/mongodb:27017"

Below is the Dockerfile of the nginx-ingress image; its results can be seen by entering the container.

Dockerfile

FROM quay.io/kubernetes-ingress-controller/nginx-amd64:0.30

RUN clean-install \
  diffutils \
  dumb-init

# Create symlinks to redirect nginx logs to stdout and stderr docker log collector
# This only works if nginx is started with CMD or ENTRYPOINT
RUN mkdir -p /var/log/nginx \
  && ln -sf /dev/stdout /var/log/nginx/access.log \
  && ln -sf /dev/stderr /var/log/nginx/error.log

COPY . /

ENTRYPOINT ["/usr/bin/dumb-init"]

CMD ["/nginx-ingress-controller"]

The nginx.conf that nginx-ingress generates after a default startup

root@n1:/etc/nginx# cat nginx.conf

daemon off;

worker_processes 4;

pid /run/nginx.pid;

worker_rlimit_nofile 15360;

worker_shutdown_timeout 10s ;

events {

multi_accept on;

worker_connections 16384;

use epoll;

}

http {

real_ip_header X-Forwarded-For;

real_ip_recursive on;

set_real_ip_from 0.0.0.0/0;

geoip_country /etc/nginx/GeoIP.dat;

geoip_city /etc/nginx/GeoLiteCity.dat;

geoip_proxy_recursive on;

vhost_traffic_status_zone shared:vhost_traffic_status:10m;

vhost_traffic_status_filter_by_set_key $geoip_country_code country::*;

sendfile on;

aio threads;

aio_write on;

tcp_nopush on;

tcp_nodelay on;

log_subrequest on;

reset_timedout_connection on;

keepalive_timeout 75s;

keepalive_requests 100;

client_header_buffer_size 1k;

client_header_timeout 60s;

large_client_header_buffers 4 8k;

client_body_buffer_size 8k;

client_body_timeout 60s;

http2_max_field_size 4k;

http2_max_header_size 16k;

types_hash_max_size 2048;

server_names_hash_max_size 1024;

server_names_hash_bucket_size 32;

map_hash_bucket_size 64;

proxy_headers_hash_max_size 512;

proxy_headers_hash_bucket_size 64;

variables_hash_bucket_size 128;

variables_hash_max_size 2048;

underscores_in_headers off;

ignore_invalid_headers on;

include /etc/nginx/mime.types;

default_type text/html;

brotli on;

brotli_comp_level 4;

brotli_types application/xml+rss application/atom+xml application/javascript application/x-javascript application/json application/rss+xml application/vnd.ms-fontobject application/x-font-ttf application/x-web-app-manifest+json application/xhtml+xml application/xml font/opentype image/svg+xml image/x-icon text/css text/plain text/x-component;

gzip on;

gzip_comp_level 5;

gzip_http_version 1.1;

gzip_min_length 256;

gzip_types application/atom+xml application/javascript application/x-javascript application/json application/rss+xml application/vnd.ms-fontobject application/x-font-ttf application/x-web-app-manifest+json application/xhtml+xml application/xml font/opentype image/svg+xml image/x-icon text/css text/plain text/x-component;

gzip_proxied any;

gzip_vary on;

# Custom headers for response

server_tokens on;

# disable warnings

uninitialized_variable_warn off;

# Additional available variables:

# $namespace

# $ingress_name

# $service_name

log_format upstreaminfo '$the_real_ip - [$the_real_ip] - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent" $request_length $request_time [$proxy_upstream_name] $upstream_addr $upstream_response_length $upstream_response_time $upstream_status';

map $request_uri $loggable {

default 1;

}

access_log /var/log/nginx/access.log upstreaminfo if=$loggable;

error_log /var/log/nginx/error.log notice;

resolver 192.168.14.2 valid=30s;

# Retain the default nginx handling of requests without a "Connection" header

map $http_upgrade $connection_upgrade {

default upgrade;

'' close;

}

map $http_x_forwarded_for $the_real_ip {

default $remote_addr;

}

# trust http_x_forwarded_proto headers correctly indicate ssl offloading

map $http_x_forwarded_proto $pass_access_scheme {

default $http_x_forwarded_proto;

'' $scheme;

}

map $http_x_forwarded_port $pass_server_port {

default $http_x_forwarded_port;

'' $server_port;

}

map $http_x_forwarded_host $best_http_host {

default $http_x_forwarded_host;

'' $this_host;

}

map $pass_server_port $pass_port {

443 443;

default $pass_server_port;

}

# Obtain best http host

map $http_host $this_host {

default $http_host;

'' $host;

}

server_name_in_redirect off;

port_in_redirect off;

ssl_protocols TLSv1.2;

# turn on session caching to drastically improve performance

ssl_session_cache builtin:1000 shared:SSL:10m;

ssl_session_timeout 10m;

# allow configuring ssl session tickets

ssl_session_tickets on;

# slightly reduce the time-to-first-byte

ssl_buffer_size 4k;

# allow configuring custom ssl ciphers

ssl_ciphers 'ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256';

ssl_prefer_server_ciphers on;

ssl_ecdh_curve auto;

proxy_ssl_session_reuse on;

upstream upstream-default-backend {

# Load balance algorithm; empty for round robin, which is the default

least_conn;

keepalive 32;

server 10.2.98.3:8080 max_fails=0 fail_timeout=0;

}

## start server _

server {

server_name _ ;

listen 80 default_server reuseport backlog=511;

listen [::]:80 default_server reuseport backlog=511;

set $proxy_upstream_name "-";

listen 443 default_server reuseport backlog=511 ssl http2;

listen [::]:443 default_server reuseport backlog=511 ssl http2;

# PEM sha: 479f4653ff7d901e313895dbaafbbe64b0805346

ssl_certificate /ingress-controller/ssl/default-fake-certificate.pem;

ssl_certificate_key /ingress-controller/ssl/default-fake-certificate.pem;

more_set_headers "Strict-Transport-Security: max-age=15724800; includeSubDomains;";

location / {

set $proxy_upstream_name "upstream-default-backend";

set $namespace "";

set $ingress_name "";

set $service_name "";

port_in_redirect off;

client_max_body_size "1m";

proxy_set_header Host $best_http_host;

# Pass the extracted client certificate to the backend

proxy_set_header ssl-client-cert "";

proxy_set_header ssl-client-verify "";

proxy_set_header ssl-client-dn "";

# Allow websocket connections

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection $connection_upgrade;

proxy_set_header X-Real-IP $the_real_ip;

proxy_set_header X-Forwarded-For $the_real_ip;

proxy_set_header X-Forwarded-Host $best_http_host;

proxy_set_header X-Forwarded-Port $pass_port;

proxy_set_header X-Forwarded-Proto $pass_access_scheme;

proxy_set_header X-Original-URI $request_uri;

proxy_set_header X-Scheme $pass_access_scheme;

# Pass the original X-Forwarded-For

proxy_set_header X-Original-Forwarded-For $http_x_forwarded_for;

# mitigate HTTPoxy Vulnerability

# https://www.nginx.com/blog/mitigating-the-httpoxy-vulnerability-with-nginx/

proxy_set_header Proxy "";

# Custom headers to proxied server

proxy_connect_timeout 5s;

proxy_send_timeout 60s;

proxy_read_timeout 60s;

proxy_redirect off;

proxy_buffering off;

proxy_buffer_size "4k";

proxy_buffers 4 "4k";

proxy_request_buffering "on";

proxy_http_version 1.1;

proxy_cookie_domain off;

proxy_cookie_path off;

# In case of errors try the next upstream server before returning an error

proxy_next_upstream error timeout invalid_header http_502 http_503 http_504;

proxy_pass http://upstream-default-backend;

}

# health checks in cloud providers require the use of port 80

location /healthz {

access_log off;

return 200;

}

# this is required to avoid error if nginx is being monitored

# with an external software (like sysdig)

location /nginx_status {

allow 127.0.0.1;

allow ::1;

deny all;

access_log off;

stub_status on;

}

}

## end server _

# default server, used for NGINX healthcheck and access to nginx stats

server {

# Use the port 18080 (random value just to avoid known ports) as default port for nginx.

# Changing this value requires a change in:

# https://github.com/kubernetes/ingress-nginx/blob/master/controllers/nginx/pkg/cmd/controller/nginx.go

listen 18080 default_server reuseport backlog=511;

listen [::]:18080 default_server reuseport backlog=511;

set $proxy_upstream_name "-";

location /healthz {

access_log off;

return 200;

}

location /nginx_status {

set $proxy_upstream_name "internal";

vhost_traffic_status_display;

vhost_traffic_status_display_format html;

}

location / {

set $proxy_upstream_name "upstream-default-backend";

proxy_pass http://upstream-default-backend;

}

}

}

stream {

log_format log_stream [$time_local] $protocol $status $bytes_sent $bytes_received $session_time;

access_log /var/log/nginx/access.log log_stream;

error_log /var/log/nginx/error.log;

# TCP services

# UDP services

}

Multi-path ingress

Reference: https://github.com/kubernetes-helm/monocular/blob/master/deployment/monocular/templates/ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: stultified-snail-monocular
  annotations:
    ingress.kubernetes.io/rewrite-target: "/"
    kubernetes.io/ingress.class: "nginx"
  labels:
    app: stultified-snail-monocular
    chart: "monocular-0.5.0"
    release: "stultified-snail"
    heritage: "Tiller"
spec:
  rules:
  - http:
      paths:
      - backend:
          serviceName: stultified-snail-monocular-ui
          servicePort: 80
        path: /
      - backend:
          serviceName: stultified-snail-monocular-api
          servicePort: 80
        path: /api/
    host:

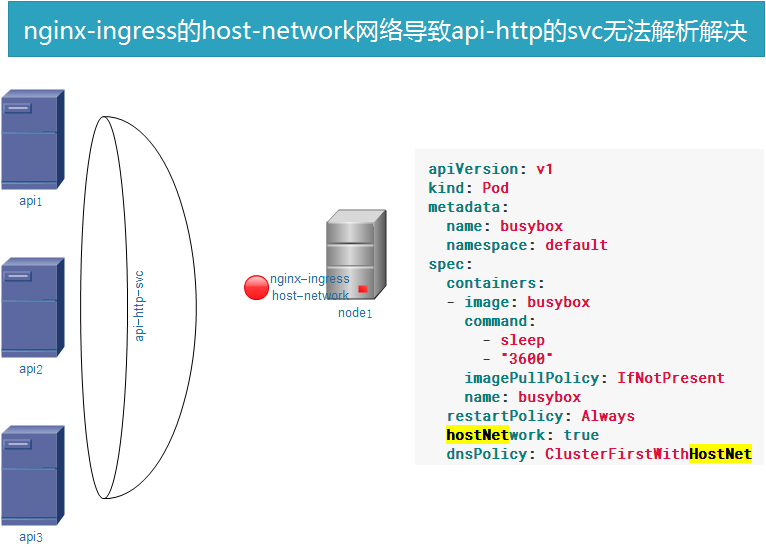

Network problems with hostNetwork caused by the DNS policy

Reference: https://kubernetes.io/docs/concepts/services-networking/dns-pod-service/

A pitfall I ran into: nginx-ingress runs in hostNetwork mode, and a Pod in this mode inherits the DNS servers in /etc/resolv.conf from the host. This is equivalent to docker run --net=host: the Pod shares the host's network stack and therefore its DNS settings as well. As a result, the controller could not resolve the name of the in-cluster API Service.

From the Kubernetes documentation:

By default, DNS policy for a pod is 'ClusterFirst'. So pods running with hostNetwork cannot resolve DNS names. To have DNS options set along with hostNetwork, you should specify DNS policy explicitly to 'ClusterFirstWithHostNet'. Update the busybox.yaml as following:

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - image: busybox
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
    name: busybox
  restartPolicy: Always
  hostNetwork: true
  dnsPolicy: ClusterFirstWithHostNet

How I run nginx-ingress in production (I have not set up certificates, so I have to point the ingress controller at the HTTP API endpoint manually):

args:
- /nginx-ingress-controller
- --apiserver-host=http://kube-api-http.kube-public
- --default-backend-service=$(POD_NAMESPACE)/default-http-backend
- --tcp-services-configmap=$(POD_NAMESPACE)/nginx-tcp-ingress-configmap
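To confirm that a hostNetwork Pod really picked up the cluster DNS after setting dnsPolicy, one can inspect its /etc/resolv.conf. A small sketch: `has_nameserver` is a hypothetical helper, and the address 10.254.0.2 in the usage note is only an assumed cluster DNS IP; substitute the ClusterIP of your own kube-dns Service.

```shell
#!/bin/sh
# Read resolv.conf text on stdin; succeed (exit 0) only if it
# contains a "nameserver" line with the address given as $1.
has_nameserver() {
  awk -v ip="$1" '
    $1 == "nameserver" && $2 == ip { found = 1 }
    END { exit !found }
  '
}
```

Usage, under the assumptions above: `kubectl exec busybox -- cat /etc/resolv.conf | has_nameserver 10.254.0.2 && echo "cluster DNS in use"`. With the default ClusterFirst policy on a hostNetwork Pod, the file would list the host's resolvers instead and the check would fail.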

Testing nginx layer 4 load balancing with MySQL

Create the MySQL deployment and the layer 4 port mapping:

[root@m1 yaml]# cat mysql/mysql-deploy.yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: mysql
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"

[root@m1 yaml]# cat ingress/tcp-services-configmap.yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
data:
  3306: "default/mysql:3306"

Notes:

1. In my case the ingress nginx.conf did not change after the apply. I had restarted things beforehand; after recreating the ingress controller it worked normally.
2. Ports created through the ingress only listen on the node where the ingress controller runs.

nginx configuration reference


Other nginx features for reference

- Error logging
- JSON log format
- stub_status with authentication enabled
- Custom 404 error page with redirect
- Denying access to files with certain suffixes (default.conf)
- Using include to simplify the configuration

nginx JSON log format

log-format-upstream: '{ "time": "$time_iso8601", "remote_addr": "$proxy_protocol_addr",
  "x-forward-for": "$proxy_add_x_forwarded_for", "request_id": "$request_id", "remote_user":
  "$remote_user", "bytes_sent": $bytes_sent, "request_time": $request_time, "status":
  $status, "vhost": "$host", "request_proto": "$server_protocol", "path": "$uri",
  "request_query": "$args", "request_length": $request_length, "duration": $request_time,
  "method": "$request_method", "http_referrer": "$http_referer", "http_user_agent":
  "$http_user_agent" }'

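| Field | Description |
|---|---|
| $remote_addr, $http_x_forwarded_for | Client IP address |
| $remote_user | Client user name |
| $request | Request URL and HTTP protocol |
| $status | Response status |
| $body_bytes_sent | Bytes sent to the client, not counting the response headers; compatible with the "%B" parameter of Apache's mod_log_config. |
| $bytes_sent | Total bytes sent to the client. |
| $connection | Connection serial number. |
| $connection_requests | Number of requests made through the current connection so far. |
| $msec | Time the log entry was written, in seconds with millisecond resolution. |
| $pipe | "p" if the request was sent through HTTP pipelining, "." otherwise. |
| $http_referer | The page the request was linked from |
| $http_user_agent | Client browser information |
| $request_length | Request length (request line, headers, and body). |
| $request_time | Request processing time in seconds with millisecond resolution; measured from the first byte read from the client until the log entry is written after the last byte has been sent to the client. |
| $time_iso8601 | Local time in ISO 8601 format. |
| $time_local | Local time in Common Log Format. |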

Quick nginx test

mkdir -p /data/nginx-html
echo "maotai" > /data/nginx-html/index.html

docker run -d \
  --net=host \
  --restart=always \
  -v /etc/nginx/nginx.conf:/etc/nginx/nginx.conf:ro \
  -v /etc/localtime:/etc/localtime:ro \
  -v /data/nginx-html:/usr/share/nginx/html \
  --name nginx \
  nginx

nginx layer 4 and layer 7 load balancing examples

Single port, load balanced: one-to-many

cat > nginx.conf <<EOF

error_log stderr notice;

worker_processes auto;

events {

multi_accept on;

use epoll;

worker_connections 1024;

}

stream {

upstream kube_apiserver {

least_conn;

server 192.168.8.161:6443;

server 192.168.8.162:6443;

server 192.168.8.163:6443;

}

server {

listen 127.0.0.1:6443;

proxy_pass kube_apiserver;

proxy_timeout 10m;

proxy_connect_timeout 1s;

}

}

EOF

Single port mapping: one-to-one

cat > nginx.conf <<'EOF'

error_log stderr notice;

worker_processes auto;

events {

multi_accept on;

use epoll;

worker_connections 1024;

}

stream {

log_format log_stream [$time_local] $protocol $status $bytes_sent $bytes_received $session_time;

access_log /var/log/nginx/access.log log_stream;

error_log /var/log/nginx/error.log;

# TCP services

# UDP services

upstream udp-53-kube-system-kube-dns-53 {

server 10.2.54.9:53;

}

server {

listen 53 udp;

listen [::]:53 udp;

proxy_responses 1;

proxy_timeout 600s;

proxy_pass udp-53-kube-system-kube-dns-53;

}

}

EOF

One TCP and one UDP port mapping

stream {

log_format log_stream [$time_local] $protocol $status $bytes_sent $bytes_received $session_time;

access_log /var/log/nginx/access.log log_stream;

error_log /var/log/nginx/error.log;

# TCP services

upstream tcp-3306-default-mysql-3306 {

server 10.2.30.2:3306;

}

server {

listen 3306;

listen [::]:3306;

proxy_timeout 600s;

proxy_pass tcp-3306-default-mysql-3306;

}

# UDP services

upstream udp-53-kube-system-kube-dns-53 {

server 10.2.54.9:53;

}

server {

listen 53 udp;

listen [::]:53 udp;

proxy_responses 1;

proxy_timeout 600s;

proxy_pass udp-53-kube-system-kube-dns-53;

}

}
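Judging from the two blocks above, the controller names each stream upstream `<protocol>-<external port>-<namespace>-<service>-<service port>`, built from the matching ConfigMap entry. The sketch below reproduces that naming so a ConfigMap entry can be matched to its upstream when searching nginx.conf. `stream_upstream_name` is a hypothetical helper, and the pattern is inferred from the output shown in this post for version 0.9.0, not taken from the controller's documentation.

```shell
#!/bin/sh
# stream_upstream_name PROTO PORT NAMESPACE/SERVICE:SERVICE_PORT
# Example entry from tcp-services:  3306: "default/mysql:3306"
stream_upstream_name() {
  proto=$1 port=$2 target=$3
  ns=${target%%/*}        # text before the first "/"
  rest=${target#*/}       # "service:port"
  svc=${rest%%:*}         # text before the ":"
  svc_port=${rest##*:}    # text after the ":"
  printf '%s-%s-%s-%s-%s\n' "$proto" "$port" "$ns" "$svc" "$svc_port"
}
```

For instance, `grep -n "$(stream_upstream_name tcp 3306 default/mysql:3306)" /etc/nginx/nginx.conf` inside the controller container would show whether the mapping has been rendered yet.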

nginx proxy_pass layer 7 load balancing configuration

user nginx nginx;

worker_processes auto;

worker_rlimit_nofile 65535;

error_log /usr/local/nginxlogs/error.log;

# pid logs/nginx.pid;

events {

use epoll;

worker_connections 51200;

}

http {

include mime.types;

default_type application/octet-stream;

log_format main '$remote_addr $remote_user [$time_local] "$request" $http_host '

'$status $upstream_status $body_bytes_sent "$http_referer" '

'"$http_user_agent" $ssl_protocol $ssl_cipher $upstream_addr '

'$request_time $upstream_response_time';

server_info off;

server_tag off;

server_name_in_redirect off;

access_log /usr/local/tengine-2.1.2/logs/access.log main;

client_max_body_size 80m;

client_header_buffer_size 16k;

large_client_header_buffers 4 16k;

sendfile on;

tcp_nopush on;

keepalive_timeout 65;

server_tokens on;

gzip on;

gzip_min_length 1k;

gzip_buffers 4 16k;

gzip_proxied any;

gzip_http_version 1.1;

gzip_comp_level 3;

gzip_types text/plain application/x-javascript text/css application/xml;

gzip_vary on;

upstream kube-api-vip {

least_conn;

server 192.168.x.20:8080;

server 192.168.x.21:8080;

server 192.168.x.22:8080;

}

server {

listen 80;

server_name kube-api-vip.maotai.net;

proxy_connect_timeout 1s;

# proxy_read_timeout 600;

# proxy_send_timeout 600;

proxy_buffer_size 128k;

proxy_buffers 4 256k;

proxy_busy_buffers_size 256k;

location / {

proxy_next_upstream error timeout invalid_header http_500 http_503 http_404 http_502 http_504;

proxy_pass http://kube-api-vip;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

}

Mixed: port mappings and layer 7 mappings together (this is an ingress example)

root@n2:/# cat /etc/nginx/nginx.conf

daemon off;

worker_processes 4;

pid /run/nginx.pid;

worker_rlimit_nofile 15360;

worker_shutdown_timeout 10s ;

events {

multi_accept on;

worker_connections 16384;

use epoll;

}

http {

real_ip_header X-Forwarded-For;

real_ip_recursive on;

set_real_ip_from 0.0.0.0/0;

geoip_country /etc/nginx/GeoIP.dat;

geoip_city /etc/nginx/GeoLiteCity.dat;

geoip_proxy_recursive on;

vhost_traffic_status_zone shared:vhost_traffic_status:10m;

vhost_traffic_status_filter_by_set_key $geoip_country_code country::*;

sendfile on;

aio threads;

aio_write on;

tcp_nopush on;

tcp_nodelay on;

log_subrequest on;

reset_timedout_connection on;

keepalive_timeout 75s;

keepalive_requests 100;

client_header_buffer_size 1k;

client_header_timeout 60s;

large_client_header_buffers 4 8k;

client_body_buffer_size 8k;

client_body_timeout 60s;

http2_max_field_size 4k;

http2_max_header_size 16k;

types_hash_max_size 2048;

server_names_hash_max_size 1024;

server_names_hash_bucket_size 64;

map_hash_bucket_size 64;

proxy_headers_hash_max_size 512;

proxy_headers_hash_bucket_size 64;

variables_hash_bucket_size 128;

variables_hash_max_size 2048;

underscores_in_headers off;

ignore_invalid_headers on;

include /etc/nginx/mime.types;

default_type text/html;

brotli on;

brotli_comp_level 4;

brotli_types application/xml+rss application/atom+xml application/javascript application/x-javascript application/json application/rss+xml application/vnd.ms-fontobject application/x-font-ttf application/x-web-app-manifest+json application/xhtml+xml application/xml font/opentype image/svg+xml image/x-icon text/css text/plain text/x-component;

gzip on;

gzip_comp_level 5;

gzip_http_version 1.1;

gzip_min_length 256;

gzip_types application/atom+xml application/javascript application/x-javascript application/json application/rss+xml application/vnd.ms-fontobject application/x-font-ttf application/x-web-app-manifest+json application/xhtml+xml application/xml font/opentype image/svg+xml image/x-icon text/css text/plain text/x-component;

gzip_proxied any;

gzip_vary on;

# Custom headers for response

server_tokens on;

# disable warnings

uninitialized_variable_warn off;

# Additional available variables:

# $namespace

# $ingress_name

# $service_name

log_format upstreaminfo '$the_real_ip - [$the_real_ip] - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent" $request_length $request_time [$proxy_upstream_name] $upstream_addr $upstream_response_length $upstream_response_time $upstream_status';

map $request_uri $loggable {

default 1;

}

access_log /var/log/nginx/access.log upstreaminfo if=$loggable;

error_log /var/log/nginx/error.log notice;

resolver 192.168.14.2 valid=30s;

# Retain the default nginx handling of requests without a "Connection" header

map $http_upgrade $connection_upgrade {

default upgrade;

'' close;

}

map $http_x_forwarded_for $the_real_ip {

default $remote_addr;

}

# trust http_x_forwarded_proto headers correctly indicate ssl offloading

map $http_x_forwarded_proto $pass_access_scheme {

default $http_x_forwarded_proto;

'' $scheme;

}

map $http_x_forwarded_port $pass_server_port {

default $http_x_forwarded_port;

'' $server_port;

}

map $http_x_forwarded_host $best_http_host {

default $http_x_forwarded_host;

'' $this_host;

}

map $pass_server_port $pass_port {

443 443;

default $pass_server_port;

}

# Obtain best http host

map $http_host $this_host {

default $http_host;

'' $host;

}

server_name_in_redirect off;

port_in_redirect off;

ssl_protocols TLSv1.2;

# turn on session caching to drastically improve performance

ssl_session_cache builtin:1000 shared:SSL:10m;

ssl_session_timeout 10m;

# allow configuring ssl session tickets

ssl_session_tickets on;

# slightly reduce the time-to-first-byte

ssl_buffer_size 4k;

# allow configuring custom ssl ciphers

ssl_ciphers 'ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256';

ssl_prefer_server_ciphers on;

ssl_ecdh_curve auto;

proxy_ssl_session_reuse on;

upstream kube-public-spring-web {

# Load balance algorithm; empty for round robin, which is the default

least_conn;

keepalive 32;

server 10.2.54.4:8080 max_fails=0 fail_timeout=0;

}

upstream upstream-default-backend {

# Load balance algorithm; empty for round robin, which is the default

least_conn;

keepalive 32;

server 10.2.30.3:8080 max_fails=0 fail_timeout=0;

}

## start server _

server {

server_name _ ;

listen 80 default_server reuseport backlog=511;

listen [::]:80 default_server reuseport backlog=511;

set $proxy_upstream_name "-";

listen 443 default_server reuseport backlog=511 ssl http2;

listen [::]:443 default_server reuseport backlog=511 ssl http2;

# PEM sha: 20103470e60aa51135afee9244c7c831559f04b8

ssl_certificate /ingress-controller/ssl/default-fake-certificate.pem;

ssl_certificate_key /ingress-controller/ssl/default-fake-certificate.pem;

more_set_headers "Strict-Transport-Security: max-age=15724800; includeSubDomains;";

location / {

set $proxy_upstream_name "upstream-default-backend";

set $namespace "";

set $ingress_name "";

set $service_name "";

port_in_redirect off;

client_max_body_size "1m";

proxy_set_header Host $best_http_host;

# Pass the extracted client certificate to the backend

proxy_set_header ssl-client-cert "";

proxy_set_header ssl-client-verify "";

proxy_set_header ssl-client-dn "";

# Allow websocket connections

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection $connection_upgrade;

proxy_set_header X-Real-IP $the_real_ip;

proxy_set_header X-Forwarded-For $the_real_ip;

proxy_set_header X-Forwarded-Host $best_http_host;

proxy_set_header X-Forwarded-Port $pass_port;

proxy_set_header X-Forwarded-Proto $pass_access_scheme;

proxy_set_header X-Original-URI $request_uri;

proxy_set_header X-Scheme $pass_access_scheme;

# Pass the original X-Forwarded-For

proxy_set_header X-Original-Forwarded-For $http_x_forwarded_for;

# mitigate HTTPoxy Vulnerability

# https://www.nginx.com/blog/mitigating-the-httpoxy-vulnerability-with-nginx/

proxy_set_header Proxy "";

# Custom headers to proxied server

proxy_connect_timeout 5s;

proxy_send_timeout 60s;

proxy_read_timeout 60s;

proxy_redirect off;

proxy_buffering off;

proxy_buffer_size "4k";

proxy_buffers 4 "4k";

proxy_request_buffering "on";

proxy_http_version 1.1;

proxy_cookie_domain off;

proxy_cookie_path off;

# In case of errors try the next upstream server before returning an error

proxy_next_upstream error timeout invalid_header http_502 http_503 http_504;

proxy_pass http://upstream-default-backend;

}

# health checks in cloud providers require the use of port 80

location /healthz {

access_log off;

return 200;

}

# this is required to avoid error if nginx is being monitored

# with an external software (like sysdig)

location /nginx_status {

allow 127.0.0.1;

allow ::1;

deny all;

access_log off;

stub_status on;

}

}

## end server _

## start server spring.maotai.com

server {

server_name spring.maotai.com ;

listen 80;

listen [::]:80;

set $proxy_upstream_name "-";

location / {

set $proxy_upstream_name "kube-public-spring-web";

set $namespace "kube-public";

set $ingress_name "spring";

set $service_name "spring";

port_in_redirect off;

client_max_body_size "1m";

proxy_set_header Host $best_http_host;

# Pass the extracted client certificate to the backend

proxy_set_header ssl-client-cert "";

proxy_set_header ssl-client-verify "";

proxy_set_header ssl-client-dn "";

# Allow websocket connections

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection $connection_upgrade;

proxy_set_header X-Real-IP $the_real_ip;

proxy_set_header X-Forwarded-For $the_real_ip;

proxy_set_header X-Forwarded-Host $best_http_host;

proxy_set_header X-Forwarded-Port $pass_port;

proxy_set_header X-Forwarded-Proto $pass_access_scheme;

proxy_set_header X-Original-URI $request_uri;

proxy_set_header X-Scheme $pass_access_scheme;

# Pass the original X-Forwarded-For

proxy_set_header X-Original-Forwarded-For $http_x_forwarded_for;

# mitigate HTTPoxy Vulnerability

# https://www.nginx.com/blog/mitigating-the-httpoxy-vulnerability-with-nginx/

proxy_set_header Proxy "";

# Custom headers to proxied server

proxy_connect_timeout 5s;

proxy_send_timeout 60s;

proxy_read_timeout 60s;

proxy_redirect off;

proxy_buffering off;

proxy_buffer_size "4k";

proxy_buffers 4 "4k";

proxy_request_buffering "on";

proxy_http_version 1.1;

proxy_cookie_domain off;

proxy_cookie_path off;

# In case of errors try the next upstream server before returning an error

proxy_next_upstream error timeout invalid_header http_502 http_503 http_504;

proxy_pass http://kube-public-spring-web;

}

}

## end server spring.maotai.com

# default server, used for NGINX healthcheck and access to nginx stats

server {

# Use the port 18080 (random value just to avoid known ports) as default port for nginx.

# Changing this value requires a change in:

# https://github.com/kubernetes/ingress-nginx/blob/master/controllers/nginx/pkg/cmd/controller/nginx.go

listen 18080 default_server reuseport backlog=511;

listen [::]:18080 default_server reuseport backlog=511;

set $proxy_upstream_name "-";

location /healthz {

access_log off;

return 200;

}

location /nginx_status {

set $proxy_upstream_name "internal";

vhost_traffic_status_display;

vhost_traffic_status_display_format html;

}

location / {

set $proxy_upstream_name "upstream-default-backend";

proxy_pass http://upstream-default-backend;

}

}

}

stream {

log_format log_stream [$time_local] $protocol $status $bytes_sent $bytes_received $session_time;

access_log /var/log/nginx/access.log log_stream;

error_log /var/log/nginx/error.log;

# TCP services

upstream tcp-3306-default-mysql-3306 {

server 10.2.30.2:3306;

}

server {

listen 3306;

listen [::]:3306;

proxy_timeout 600s;

proxy_pass tcp-3306-default-mysql-3306;

}

# UDP services

upstream udp-53-kube-system-kube-dns-53 {

server 10.2.54.9:53;

}

server {

listen 53 udp;

listen [::]:53 udp;

proxy_responses 1;

proxy_timeout 600s;

proxy_pass udp-53-kube-system-kube-dns-53;

}

}

nginx-ingress VTS page shows backends but no server zones

This turned out to be caused by a proxy configured in the connection settings of the local IE browser.
