Building and Using a Solr Cluster (SolrCloud)

1. What is SolrCloud

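SolrCloud ("Solr cloud") is the distributed search solution that Solr provides. Use SolrCloud when you need large-scale, fault-tolerant, distributed indexing and search.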

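A system with a small index does not need SolrCloud. You need it when the index is very large and search requests are highly concurrent. SolrCloud is a distributed search solution built on Solr and ZooKeeper; its core idea is to use ZooKeeper as the central store for the cluster's configuration.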

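It has several notable features:

1) Centralized configuration.

2) Automatic fault tolerance.

3) Near-real-time search.

4) Automatic load balancing at query time.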

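Note: ZooKeeper is usually used as a registry. SolrCloud uses it to manage the cluster: when a request comes in, ZooKeeper is consulted first to decide which Solr machine the request is dispatched to, that is, which server performs the search. ZooKeeper also manages the Solr configuration files centrally.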

2. So what exactly is ZooKeeper?

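As the name suggests, ZooKeeper is the keeper of the zoo: it looks after Hadoop (the elephant), Hive (the bee) and Pig (the pig), and the distributed clusters of Apache HBase and Apache Solr both rely on it. ZooKeeper is a distributed, open-source coordination service for distributed programs, originally a subproject of Hadoop.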

3. SolrCloud architecture

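To reduce the load on any single machine, SolrCloud spreads indexing and search across several servers. It does this by splitting the index into shards; each shard is served by several servers, and an indexing or search request is executed against the servers of the different shards.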

SolrCloud requires Solr to be deployed on top of ZooKeeper, a cluster-management service: because a SolrCloud consists of multiple servers, ZooKeeper coordinates and manages them.

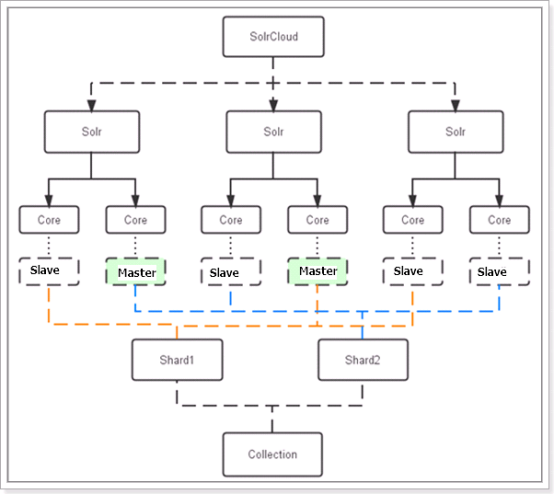

The figure below shows an example SolrCloud deployment:

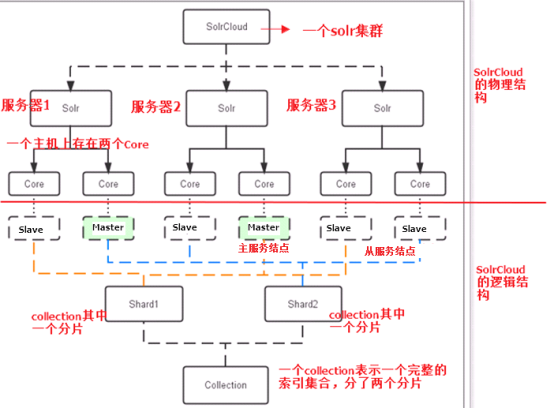

The figure above breaks down as follows:

1) Physical structure

Three Solr instances (each containing two cores) form one SolrCloud.

2) Logical structure

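The index collection has two shards (shard1 and shard2). Each shard consists of three cores: one leader and two replicas. The leader is elected through ZooKeeper, and updates pass through the leader so that the three cores of a shard hold the same index data, which provides high availability.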

A user's request is answered by querying both shard1 and shard2, which addresses high concurrency.

a. Collection

In a SolrCloud cluster, a collection is one complete index in the logical sense. It is usually divided into one or more shards, all of which use the same configuration.

For example, you could create one collection for product search.

collection=shard1+shard2+....+shardX

b. Core

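Each core is an independent unit in Solr that provides indexing and search. A shard consists of one or more cores, and because a collection consists of several shards, a collection generally consists of several cores.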

c. Master or Slave

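The master is the primary node in a master-slave setup (the primary server), and the slave is the secondary node (the secondary or standby server). Within one shard, the master and the slaves store the same data, for the sake of high availability.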

d. Shard

A logical partition of a collection. Each shard is split into one or more replicas, and an election determines which one is the leader.

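Note: a collection is one complete index, stored in shards. Here there are two shards, and their content differs. Each shard is stored on three nodes, one leader and two replicas, and those three hold identical content.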

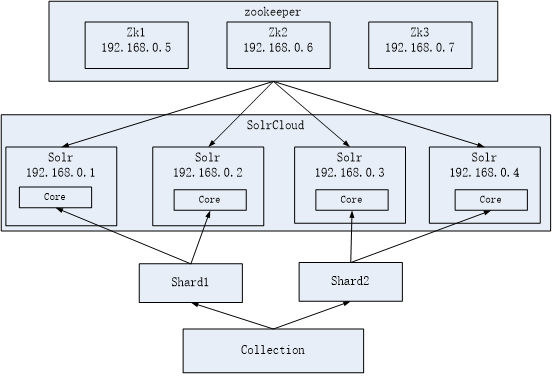

As shown in the figure below:

4. Setting up SolrCloud

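This tutorial installs everything on a single machine, so it builds a pseudo-cluster. For a real production environment, replace the pseudo-cluster IPs with the real ones; the steps are the same.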

The SolrCloud architecture diagram is as follows:

Environment preparation: install your own JDK first.

Note: I built a pseudo-cluster on a single virtual machine with 1 GB of memory.

5. Installing the ZooKeeper cluster

Step 1: Extract ZooKeeper with tar -zxvf zookeeper-3.4.6.tar.gz, copy zookeeper-3.4.6 to /usr/local/solr-cloud, and make three copies named zookeeper1, zookeeper2 and zookeeper3.

```
# First, extract ZooKeeper.
[root@localhost package]# tar -zxvf zookeeper-3.4.6.tar.gz -C /home/hadoop/soft/

# Then create a solr-cloud directory.
[root@localhost ~]# cd /usr/local/
[root@localhost local]# ls
bin etc games include lib libexec sbin share solr src
[root@localhost local]# mkdir solr-cloud
[root@localhost local]# ls
bin etc games include lib libexec sbin share solr solr-cloud src
[root@localhost local]#

# Make three copies, naming the directories zookeeper1, zookeeper2 and zookeeper3.
[root@localhost soft]# cp -r zookeeper-3.4.6/ /usr/local/solr-cloud/zookeeper1
[root@localhost soft]# cd /usr/local/solr-cloud/
[root@localhost solr-cloud]# ls
zookeeper1
[root@localhost solr-cloud]# cp -r zookeeper1/ zookeeper2
[root@localhost solr-cloud]# cp -r zookeeper1/ zookeeper3
```

Step 2: Go into the zookeeper1 folder and create a data directory. In data, create a file named myid containing "1" (echo 1 >> data/myid).

```
[root@localhost solr-cloud]# ls
zookeeper1 zookeeper2 zookeeper3
[root@localhost solr-cloud]# cd zookeeper1
[root@localhost zookeeper1]# ls
bin CHANGES.txt contrib docs ivy.xml LICENSE.txt README_packaging.txt recipes zookeeper-3.4.6.jar zookeeper-3.4.6.jar.md5
build.xml conf dist-maven ivysettings.xml lib NOTICE.txt README.txt src zookeeper-3.4.6.jar.asc zookeeper-3.4.6.jar.sha1
[root@localhost zookeeper1]# mkdir data
[root@localhost zookeeper1]# cd data/
[root@localhost data]# ls
[root@localhost data]# echo 1 >> myid
[root@localhost data]# cat myid
1
```

Step 3: Go into the conf folder and rename zoo_sample.cfg to zoo.cfg.

```
[root@localhost zookeeper1]# ls
bin CHANGES.txt contrib dist-maven ivysettings.xml lib NOTICE.txt README.txt src zookeeper-3.4.6.jar.asc zookeeper-3.4.6.jar.sha1
build.xml conf data docs ivy.xml LICENSE.txt README_packaging.txt recipes zookeeper-3.4.6.jar zookeeper-3.4.6.jar.md5
[root@localhost zookeeper1]# cd conf/
[root@localhost conf]# ls
configuration.xsl log4j.properties zoo_sample.cfg
[root@localhost conf]# mv zoo_sample.cfg zoo.cfg
[root@localhost conf]# ls
configuration.xsl log4j.properties zoo.cfg
[root@localhost conf]#
```

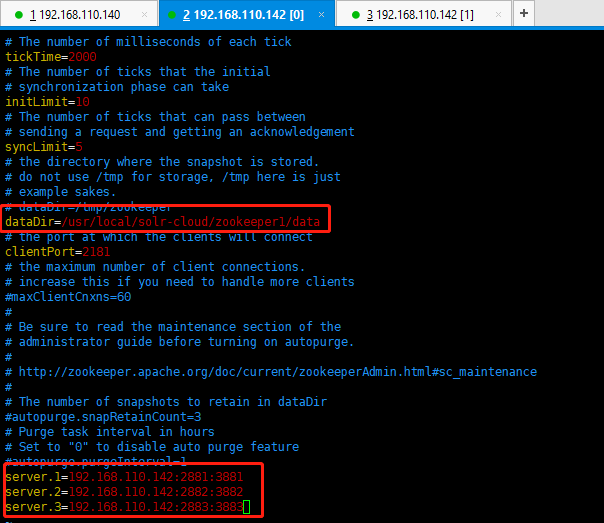

Step 4: Edit zoo.cfg.

Change: dataDir=/usr/local/solr-cloud/zookeeper1/data

Note: clientPort=2181 (2182 in zookeeper2, 2183 in zookeeper3)

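Append these three lines at the end (note: 2881 and 3881 are, respectively, the port the ZooKeeper servers use to talk to each other and the port used for leader election; 2181 is the port clients connect to, so don't mix them up):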

```
server.1=192.168.110.142:2881:3881
server.2=192.168.110.142:2882:3882
server.3=192.168.110.142:2883:3883
```

Step 5: Repeat steps 2 to 4 for zookeeper2 and zookeeper3.

The changes for zookeeper2 are:

a) myid contains 2

b) dataDir=/usr/local/solr-cloud/zookeeper2/data

c) clientPort=2182

The changes for zookeeper3 are:

a) myid contains 3

b) dataDir=/usr/local/solr-cloud/zookeeper3/data

c) clientPort=2183
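Steps 2 to 5 repeat the same edits three times. As a minimal sketch, assuming the layout used in this tutorial (/usr/local/solr-cloud/zookeeperN) and the host IP 192.168.110.142, the shell function below writes each instance's data/myid and a complete conf/zoo.cfg in one pass. BASE and ZK_IP are hypothetical override variables introduced here, and instead of editing the renamed zoo_sample.cfg it writes a fresh zoo.cfg that keeps the sample's timing defaults (tickTime=2000, initLimit=10, syncLimit=5).

```shell
# Sketch: create data/myid and conf/zoo.cfg for zookeeper1..3 in one pass.
# Assumptions: BASE and ZK_IP are hypothetical overrides; a freshly written
# zoo.cfg replaces the hand-edited copy of zoo_sample.cfg.
zk_setup() {
    base="${BASE:-/usr/local/solr-cloud}"
    ip="${ZK_IP:-192.168.110.142}"
    for i in 1 2 3; do
        dir="$base/zookeeper$i"
        mkdir -p "$dir/data" "$dir/conf"
        # myid must equal the N of this instance's server.N line
        echo "$i" > "$dir/data/myid"
        # clientPort becomes 2181, 2182, 2183; every instance lists all three servers
        cat > "$dir/conf/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=$dir/data
clientPort=218$i
server.1=$ip:2881:3881
server.2=$ip:2882:3882
server.3=$ip:2883:3883
EOF
    done
}
```

Source the file and run `zk_setup`. It writes myid with `>` rather than the `>>` used above, so running it again simply overwrites the file.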

```
[root@localhost conf]# cd ../../zookeeper2
[root@localhost zookeeper2]# ls
bin CHANGES.txt contrib docs ivy.xml LICENSE.txt README_packaging.txt recipes zookeeper-3.4.6.jar zookeeper-3.4.6.jar.md5
build.xml conf dist-maven ivysettings.xml lib NOTICE.txt README.txt src zookeeper-3.4.6.jar.asc zookeeper-3.4.6.jar.sha1
[root@localhost zookeeper2]# mkdir data
[root@localhost zookeeper2]# cd data/
[root@localhost data]# ls
[root@localhost data]# echo 2 >> myid
[root@localhost data]# ls
myid
[root@localhost data]# cat myid
2
[root@localhost data]# ls
myid
[root@localhost data]# cd ..
[root@localhost zookeeper2]# ls
bin CHANGES.txt contrib dist-maven ivysettings.xml lib NOTICE.txt README.txt src zookeeper-3.4.6.jar.asc zookeeper-3.4.6.jar.sha1
build.xml conf data docs ivy.xml LICENSE.txt README_packaging.txt recipes zookeeper-3.4.6.jar zookeeper-3.4.6.jar.md5
[root@localhost zookeeper2]# cd conf/
[root@localhost conf]# ls
configuration.xsl log4j.properties zoo_sample.cfg
[root@localhost conf]# mv zoo_sample.cfg zoo.cfg
[root@localhost conf]# ls
configuration.xsl log4j.properties zoo.cfg
[root@localhost conf]# vim zoo.cfg
[root@localhost conf]# cd ../..
[root@localhost solr-cloud]# ls
zookeeper1 zookeeper2 zookeeper3
[root@localhost solr-cloud]# cd zookeeper3
[root@localhost zookeeper3]# ls
bin CHANGES.txt contrib docs ivy.xml LICENSE.txt README_packaging.txt recipes zookeeper-3.4.6.jar zookeeper-3.4.6.jar.md5
build.xml conf dist-maven ivysettings.xml lib NOTICE.txt README.txt src zookeeper-3.4.6.jar.asc zookeeper-3.4.6.jar.sha1
[root@localhost zookeeper3]# mkdir data
[root@localhost zookeeper3]# ls
bin CHANGES.txt contrib dist-maven ivysettings.xml lib NOTICE.txt README.txt src zookeeper-3.4.6.jar.asc zookeeper-3.4.6.jar.sha1
build.xml conf data docs ivy.xml LICENSE.txt README_packaging.txt recipes zookeeper-3.4.6.jar zookeeper-3.4.6.jar.md5
[root@localhost zookeeper3]# cd data/
[root@localhost data]# ls
[root@localhost data]# echo 3 >> myid
[root@localhost data]# ls
myid
[root@localhost data]# cd ../..
[root@localhost solr-cloud]# ls
zookeeper1 zookeeper2 zookeeper3
[root@localhost solr-cloud]# cd zookeeper3
[root@localhost zookeeper3]# ls
bin CHANGES.txt contrib dist-maven ivysettings.xml lib NOTICE.txt README.txt src zookeeper-3.4.6.jar.asc zookeeper-3.4.6.jar.sha1
build.xml conf data docs ivy.xml LICENSE.txt README_packaging.txt recipes zookeeper-3.4.6.jar zookeeper-3.4.6.jar.md5
[root@localhost zookeeper3]# cd conf/
[root@localhost conf]# ls
configuration.xsl log4j.properties zoo_sample.cfg
[root@localhost conf]# mv zoo_sample.cfg zoo.cfg
[root@localhost conf]# ls
configuration.xsl log4j.properties zoo.cfg
[root@localhost conf]# vim zoo.cfg
[root@localhost conf]#
```

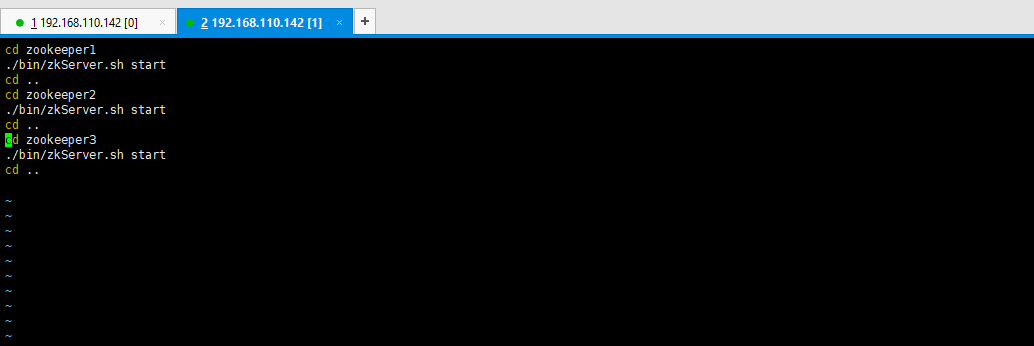

Step 6: Start the three ZooKeeper instances.

```
/usr/local/solr-cloud/zookeeper1/bin/zkServer.sh start
/usr/local/solr-cloud/zookeeper2/bin/zkServer.sh start
/usr/local/solr-cloud/zookeeper3/bin/zkServer.sh start
```

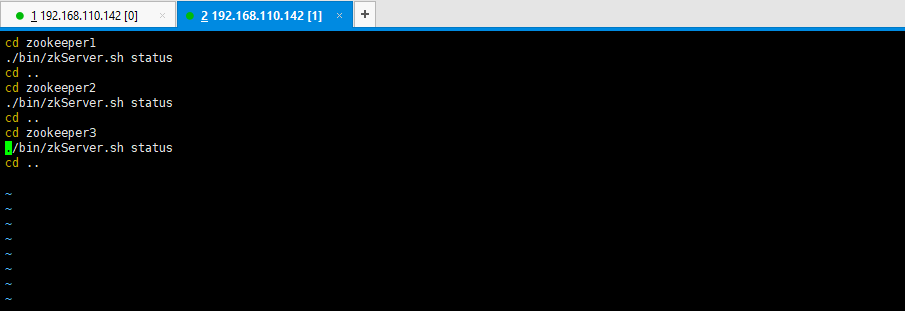

Check the cluster status:

```
/usr/local/solr-cloud/zookeeper1/bin/zkServer.sh status
/usr/local/solr-cloud/zookeeper2/bin/zkServer.sh status
/usr/local/solr-cloud/zookeeper3/bin/zkServer.sh status
```

```
[root@localhost ~]# /usr/local/solr-cloud/zookeeper1/bin/zkServer.sh start
JMX enabled by default
Using config: /usr/local/solr-cloud/zookeeper1/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[root@localhost ~]# /usr/local/solr-cloud/zookeeper2/bin/zkServer.sh start
JMX enabled by default
Using config: /usr/local/solr-cloud/zookeeper2/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[root@localhost ~]# /usr/local/solr-cloud/zookeeper3/bin/zkServer.sh start
JMX enabled by default
Using config: /usr/local/solr-cloud/zookeeper3/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[root@localhost ~]# /usr/local/solr-cloud/zookeeper1/bin/zkServer.sh status
JMX enabled by default
Using config: /usr/local/solr-cloud/zookeeper1/bin/../conf/zoo.cfg
Mode: follower
[root@localhost ~]# /usr/local/solr-cloud/zookeeper2/bin/zkServer.sh status
JMX enabled by default
Using config: /usr/local/solr-cloud/zookeeper2/bin/../conf/zoo.cfg
Mode: leader
[root@localhost ~]# /usr/local/solr-cloud/zookeeper3/bin/zkServer.sh status
JMX enabled by default
Using config: /usr/local/solr-cloud/zookeeper3/bin/../conf/zoo.cfg
Mode: follower
[root@localhost ~]#
```

Step 7: Open the ports ZooKeeper uses, or simply stop the firewall:

```
service iptables stop
```

You can write a simple startup script, as follows:

```
[root@localhost solr-cloud]# vim start-zookeeper.sh
[root@localhost solr-cloud]# chmod u+x start-zookeeper.sh
[root@localhost solr-cloud]# ll
total
-rwxr--r--. root root Sep : start-zookeeper.sh
drwxr-xr-x. root root Sep : zookeeper1
drwxr-xr-x. root root Sep : zookeeper2
drwxr-xr-x. root root Sep : zookeeper3
[root@localhost solr-cloud]#
```

The script for checking the status looks like this:
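The scripts themselves only appeared as screenshots. A minimal sketch of what start-zookeeper.sh, status-zookeeper.sh and stop-zookeeper.sh might contain, assuming the three instances live under /usr/local/solr-cloud (overridable through a hypothetical BASE variable), is a single function that passes the same action to each instance's zkServer.sh:

```shell
# Sketch of start-zookeeper.sh / status-zookeeper.sh / stop-zookeeper.sh as one function.
# Assumption: instances live in $BASE/zookeeper1..3 (BASE is a hypothetical override).
zk_ctl() {
    case "${1:-}" in
        start|status|stop) ;;
        *) echo "usage: zk_ctl {start|status|stop}" >&2; return 1 ;;
    esac
    base="${BASE:-/usr/local/solr-cloud}"
    for i in 1 2 3; do
        # zkServer.sh accepts start, status and stop as its first argument
        "$base/zookeeper$i/bin/zkServer.sh" "$1"
    done
}
```

With this in a sourced file, start-zookeeper.sh reduces to `zk_ctl start`, status-zookeeper.sh to `zk_ctl status` and stop-zookeeper.sh to `zk_ctl stop`.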

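6. Installing Tomcat (note: a SolrCloud deployment depends on ZooKeeper, so every ZooKeeper server must be started first)

Note: this reuses the standalone Solr built earlier. To save time, copy that standalone Solr and just change its configuration files.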

https://www.cnblogs.com/biehongli/p/11443347.html

Step 1: Extract apache-tomcat-7.0.47.tar.gz with tar -zxvf apache-tomcat-7.0.47.tar.gz.

```
[root@localhost solr-cloud]# tar -zxvf apache-tomcat-7.0.47.tar.gz
```

Step 2: Copy the extracted Tomcat into /usr/local/solr-cloud/ four times:

/usr/local/solr-cloud/tomcat01

/usr/local/solr-cloud/tomcat02

/usr/local/solr-cloud/tomcat03

/usr/local/solr-cloud/tomcat04

```
[root@localhost package]# cp -r apache-tomcat-7.0.47 /usr/local/solr-cloud/tomcat01
[root@localhost package]# cp -r apache-tomcat-7.0.47 /usr/local/solr-cloud/tomcat02
[root@localhost package]# cp -r apache-tomcat-7.0.47 /usr/local/solr-cloud/tomcat03
[root@localhost package]# cp -r apache-tomcat-7.0.47 /usr/local/solr-cloud/tomcat04
[root@localhost package]# cd /usr/local/solr-cloud/
[root@localhost solr-cloud]# ls
start-zookeeper.sh status-zookeeper.sh stop-zookeeper.sh tomcat01 tomcat02 tomcat03 tomcat04 zookeeper1 zookeeper2 zookeeper3
[root@localhost solr-cloud]#
```

Step 3: Edit each Tomcat's server.xml and change its ports so that the four Tomcats can run side by side without port conflicts.

```
[root@localhost solr-cloud]# vim tomcat01/conf/server.xml
[root@localhost solr-cloud]# vim tomcat02/conf/server.xml
[root@localhost solr-cloud]# vim tomcat03/conf/server.xml
[root@localhost solr-cloud]# vim tomcat04/conf/server.xml
```

For tomcat01, change 8005 to 8105, 8080 to 8180 and 8009 to 8189. For each further Tomcat, increase the second digit by one (tomcat02 uses 8205, 8280 and 8289, and so on).

```xml
<?xml version='1.0' encoding='utf-8'?>
<!--
  Licensed to the Apache Software Foundation (ASF) under one or more
  contributor license agreements.  See the NOTICE file distributed with
  this work for additional information regarding copyright ownership.
  The ASF licenses this file to You under the Apache License, Version 2.0
  (the "License"); you may not use this file except in compliance with
  the License.  You may obtain a copy of the License at

      http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License.
-->
<!-- Note:  A "Server" is not itself a "Container", so you may not
     define subcomponents such as "Valves" at this level.
     Documentation at /docs/config/server.html
 -->
<Server port="8105" shutdown="SHUTDOWN">
  <!-- Security listener. Documentation at /docs/config/listeners.html
  <Listener className="org.apache.catalina.security.SecurityListener" />
  -->
  <!--APR library loader. Documentation at /docs/apr.html -->
  <Listener className="org.apache.catalina.core.AprLifecycleListener" SSLEngine="on" />
  <!--Initialize Jasper prior to webapps are loaded. Documentation at /docs/jasper-howto.html -->
  <Listener className="org.apache.catalina.core.JasperListener" />
  <!-- Prevent memory leaks due to use of particular java/javax APIs-->
  <Listener className="org.apache.catalina.core.JreMemoryLeakPreventionListener" />
  <Listener className="org.apache.catalina.mbeans.GlobalResourcesLifecycleListener" />
  <Listener className="org.apache.catalina.core.ThreadLocalLeakPreventionListener" />

  <!-- Global JNDI resources
       Documentation at /docs/jndi-resources-howto.html
  -->
  <GlobalNamingResources>
    <!-- Editable user database that can also be used by
         UserDatabaseRealm to authenticate users
    -->
    <Resource name="UserDatabase" auth="Container"
              type="org.apache.catalina.UserDatabase"
              description="User database that can be updated and saved"
              factory="org.apache.catalina.users.MemoryUserDatabaseFactory"
              pathname="conf/tomcat-users.xml" />
  </GlobalNamingResources>

  <!-- A "Service" is a collection of one or more "Connectors" that share
       a single "Container" Note:  A "Service" is not itself a "Container",
       so you may not define subcomponents such as "Valves" at this level.
       Documentation at /docs/config/service.html
   -->
  <Service name="Catalina">

    <!--The connectors can use a shared executor, you can define one or more named thread pools-->
    <!--
    <Executor name="tomcatThreadPool" namePrefix="catalina-exec-"
        maxThreads="150" minSpareThreads="4"/>
    -->

    <!-- A "Connector" represents an endpoint by which requests are received
         and responses are returned. Documentation at :
         Java HTTP Connector: /docs/config/http.html (blocking & non-blocking)
         Java AJP  Connector: /docs/config/ajp.html
         APR (HTTP/AJP) Connector: /docs/apr.html
         Define a non-SSL HTTP/1.1 Connector on port 8080
    -->
    <Connector port="8180" protocol="HTTP/1.1"
               connectionTimeout="20000"
               redirectPort="8443" />
    <!-- A "Connector" using the shared thread pool-->
    <!--
    <Connector executor="tomcatThreadPool"
               port="8080" protocol="HTTP/1.1"
               connectionTimeout="20000"
               redirectPort="8443" />
    -->
    <!-- Define a SSL HTTP/1.1 Connector on port 8443
         This connector uses the JSSE configuration, when using APR, the
         connector should be using the OpenSSL style configuration
         described in the APR documentation -->
    <!--
    <Connector port="8443" protocol="HTTP/1.1" SSLEnabled="true"
               maxThreads="150" scheme="https" secure="true"
               clientAuth="false" sslProtocol="TLS" />
    -->

    <!-- Define an AJP 1.3 Connector on port 8009 -->
    <Connector port="8189" protocol="AJP/1.3" redirectPort="8443" />

    <!-- An Engine represents the entry point (within Catalina) that processes
         every request.  The Engine implementation for Tomcat stand alone
         analyzes the HTTP headers included with the request, and passes them
         on to the appropriate Host (virtual host).
         Documentation at /docs/config/engine.html -->

    <!-- You should set jvmRoute to support load-balancing via AJP ie :
    <Engine name="Catalina" defaultHost="localhost" jvmRoute="jvm1">
    -->
    <Engine name="Catalina" defaultHost="localhost">

      <!--For clustering, please take a look at documentation at:
          /docs/cluster-howto.html  (simple how to)
          /docs/config/cluster.html (reference documentation) -->
      <!--
      <Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"/>
      -->

      <!-- Use the LockOutRealm to prevent attempts to guess user passwords
           via a brute-force attack -->
      <Realm className="org.apache.catalina.realm.LockOutRealm">
        <!-- This Realm uses the UserDatabase configured in the global JNDI
             resources under the key "UserDatabase".  Any edits
             that are performed against this UserDatabase are immediately
             available for use by the Realm.  -->
        <Realm className="org.apache.catalina.realm.UserDatabaseRealm"
               resourceName="UserDatabase"/>
      </Realm>

      <Host name="localhost"  appBase="webapps"
            unpackWARs="true" autoDeploy="true">

        <!-- SingleSignOn valve, share authentication between web applications
             Documentation at: /docs/config/valve.html -->
        <!--
        <Valve className="org.apache.catalina.authenticator.SingleSignOn" />
        -->

        <!-- Access log processes all example.
             Documentation at: /docs/config/valve.html
             Note: The pattern used is equivalent to using pattern="common" -->
        <Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"
               prefix="localhost_access_log." suffix=".txt"
               pattern="%h %l %u %t &quot;%r&quot; %s %b" />

      </Host>
    </Engine>
  </Service>
</Server>
```
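Editing four server.xml files by hand is error-prone. A sketch of the same port scheme applied with sed, assuming every copy still contains Tomcat's stock values 8005, 8080 and 8009 (BASE is a hypothetical override variable):

```shell
# Sketch: apply step 3's port scheme to tomcat01..tomcat04.
# tomcat0N gets shutdown port 8N05, HTTP port 8N80 and AJP port 8N89,
# e.g. tomcat01 -> 8105/8180/8189 and tomcat02 -> 8205/8280/8289.
# Assumption: each conf/server.xml still holds the stock 8005/8080/8009.
tomcat_ports() {
    base="${BASE:-/usr/local/solr-cloud}"
    for n in 1 2 3 4; do
        xml="$base/tomcat0$n/conf/server.xml"
        # Match the lowercase port="..." attribute only, so redirectPort stays as it is
        sed -e "s/port=\"8005\"/port=\"8${n}05\"/" \
            -e "s/port=\"8080\"/port=\"8${n}80\"/" \
            -e "s/port=\"8009\"/port=\"8${n}89\"/" \
            "$xml" > "$xml.tmp" && mv "$xml.tmp" "$xml"
    done
}
```

Because sed matches case-sensitively, `redirectPort="8443"` is left untouched; the commented-out executor connector's `port="8080"` is rewritten too, which is harmless.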


7. Deploying Solr: copy the existing Solr web app into each of the four Tomcats.

```
[root@localhost solr-cloud]# cp -r ../solr/tomcat/webapps/solr-4.10.3 tomcat01/webapps/
[root@localhost solr-cloud]# cp -r ../solr/tomcat/webapps/solr-4.10.3 tomcat02/webapps/
[root@localhost solr-cloud]# cp -r ../solr/tomcat/webapps/solr-4.10.3 tomcat03/webapps/
[root@localhost solr-cloud]# cp -r ../solr/tomcat/webapps/solr-4.10.3 tomcat04/webapps/
```

Then make four copies of the standalone solrhome: solrhome01, solrhome02, solrhome03 and solrhome04.

```
[root@localhost solr-cloud]# cp -r ../solr/solrhome/ solrhome01
[root@localhost solr-cloud]# cp -r ../solr/solrhome/ solrhome02
[root@localhost solr-cloud]# cp -r ../solr/solrhome/ solrhome03
[root@localhost solr-cloud]# cp -r ../solr/solrhome/ solrhome04
[root@localhost solr-cloud]# ls
solrhome01 solrhome02 solrhome03 solrhome04 start-zookeeper.sh status-zookeeper.sh stop-zookeeper.sh tomcat01 tomcat02 tomcat03 tomcat04 zookeeper1 zookeeper2 zookeeper3
[root@localhost solr-cloud]#
```

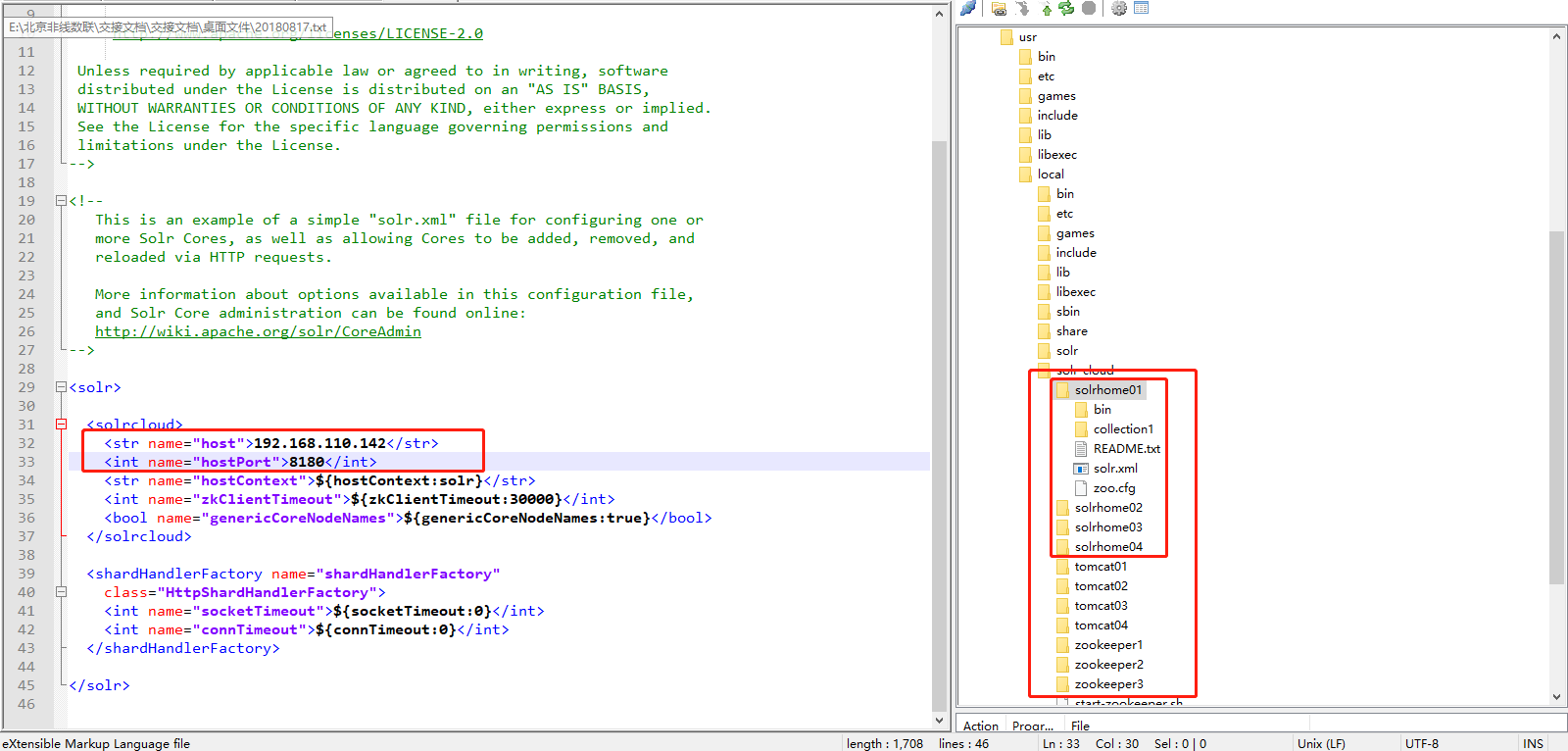

Next, edit the solr.xml configuration file in each of solrhome01, solrhome02, solrhome03 and solrhome04 so that each solrhome matches its Tomcat: tomcat01 with solrhome01, tomcat02 with solrhome02, tomcat03 with solrhome03 and tomcat04 with solrhome04.

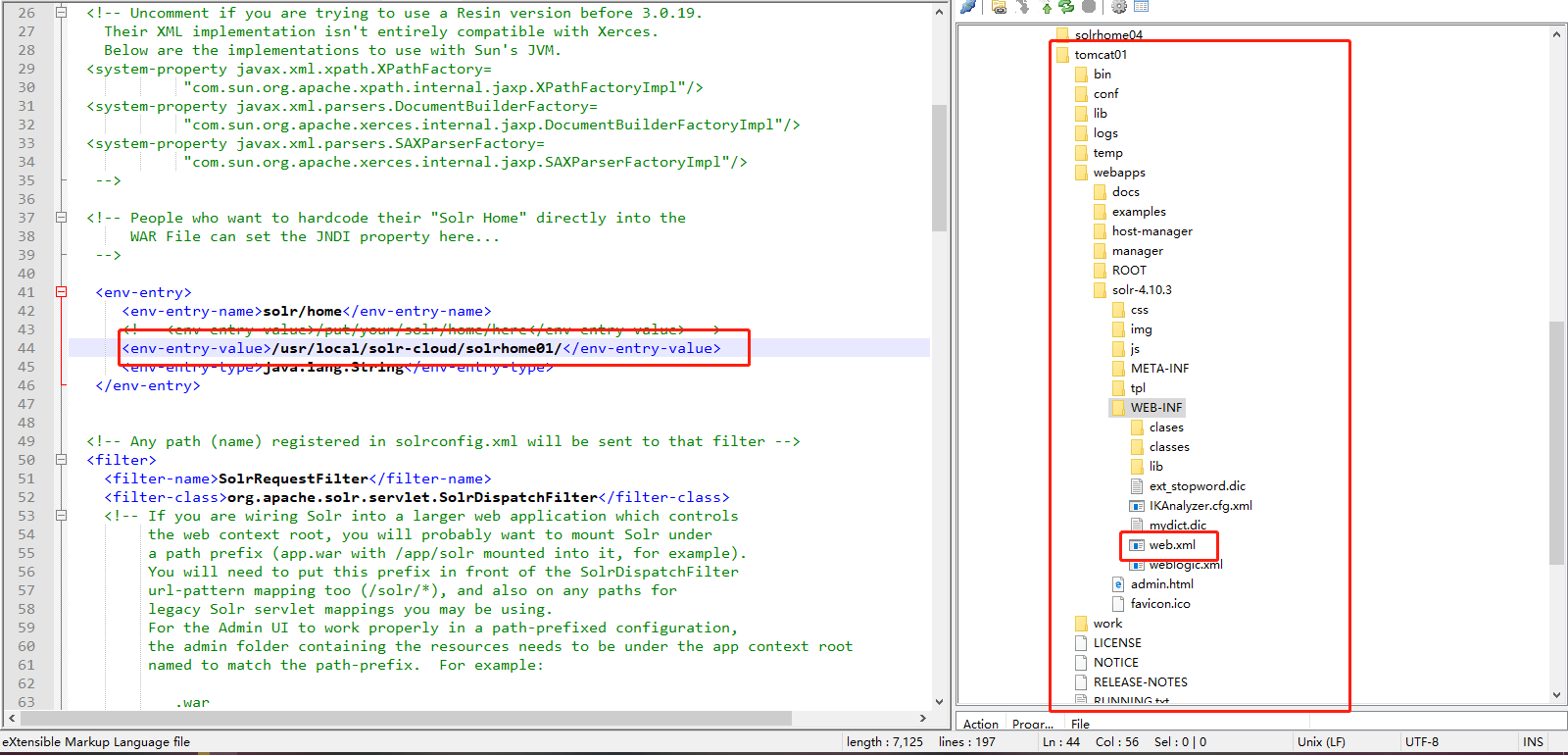
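The text does not say which values the screenshot changes. Assuming it is the usual Solr 4.x edit, pointing `host` and `hostPort` in the `<solrcloud>` section of solr.xml at the machine's IP and at the HTTP port of the matching Tomcat, and assuming the file still holds the stock placeholders `${host:}` and `${jetty.port:8983}`, the edit could be scripted like this (BASE and SOLR_IP are hypothetical override variables):

```shell
# Sketch: make solrhome0N/solr.xml advertise this machine's IP and tomcat0N's HTTP port.
# Assumptions: the <solrcloud> section still holds the stock ${host:} and
# ${jetty.port:8983} placeholders; BASE and SOLR_IP are hypothetical overrides.
solrxml_hosts() {
    base="${BASE:-/usr/local/solr-cloud}"
    ip="${SOLR_IP:-192.168.110.142}"
    for n in 1 2 3 4; do
        xml="$base/solrhome0$n/solr.xml"
        # \$ makes sed treat the dollar sign literally instead of as an anchor
        sed -e 's/\${host:}/'"$ip"'/' \
            -e 's/\${jetty\.port:8983}/'"8${n}80"'/' \
            "$xml" > "$xml.tmp" && mv "$xml.tmp" "$xml"
    done
}
```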

Then link each Solr web app to its solrhome:

```
[root@localhost solr-cloud]# vim tomcat01/webapps/solr-4.10.3/WEB-INF/web.xml
[root@localhost solr-cloud]# vim tomcat02/webapps/solr-4.10.3/WEB-INF/web.xml
[root@localhost solr-cloud]# vim tomcat03/webapps/solr-4.10.3/WEB-INF/web.xml
[root@localhost solr-cloud]# vim tomcat04/webapps/solr-4.10.3/WEB-INF/web.xml
```

As shown below:

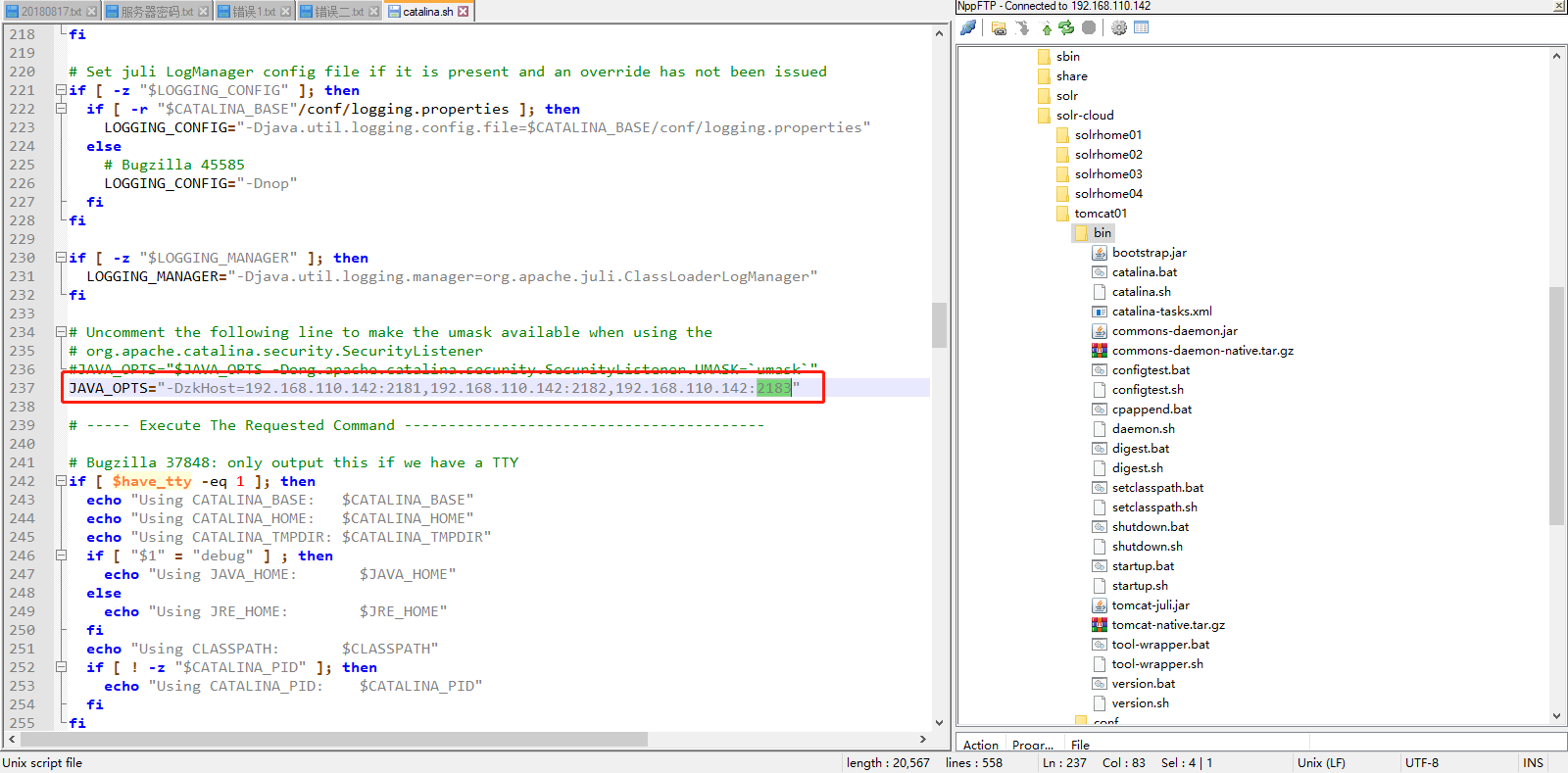

Next, connect Tomcat to ZooKeeper so that every Solr instance is associated with the ZooKeeper ensemble.

In each Tomcat, edit bin/catalina.sh and add a -DzkHost option naming the ZooKeeper servers: `JAVA_OPTS="-DzkHost=192.168.110.142:2181,192.168.110.142:2182,192.168.110.142:2183"`

Note: you can use vim's search to find where JAVA_OPTS is defined and add the line there.

```
[root@localhost solr-cloud]# vim tomcat01/bin/catalina.sh
[root@localhost solr-cloud]# vim tomcat02/bin/catalina.sh
[root@localhost solr-cloud]# vim tomcat03/bin/catalina.sh
[root@localhost solr-cloud]# vim tomcat04/bin/catalina.sh
```
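As an alternative to editing catalina.sh, Tomcat's catalina.sh sources bin/setenv.sh when that file exists, so the zkHost option can live in a separate file that survives Tomcat upgrades. This is a different technique from the one used above; a sketch, with BASE and ZK_HOST as hypothetical override variables:

```shell
# Sketch: put -DzkHost in bin/setenv.sh, which Tomcat's catalina.sh sources if present.
# Alternative to editing catalina.sh; BASE and ZK_HOST are hypothetical overrides.
tomcat_zkhost() {
    base="${BASE:-/usr/local/solr-cloud}"
    zk="${ZK_HOST:-192.168.110.142:2181,192.168.110.142:2182,192.168.110.142:2183}"
    for n in 1 2 3 4; do
        # $JAVA_OPTS stays literal in the file, so any existing options are kept
        printf 'JAVA_OPTS="$JAVA_OPTS -DzkHost=%s"\n' "$zk" > "$base/tomcat0$n/bin/setenv.sh"
    done
}
```

Unlike the `JAVA_OPTS="-DzkHost=..."` line above, which replaces any existing JAVA_OPTS, this appends to it. One of the two approaches is enough.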

At this point every Solr deployed under Tomcat has its own independent solrhome. In a cluster, however, there should be only one copy of the configuration files, so upload them to ZooKeeper and let ZooKeeper manage them: use the tool that ships with Solr to upload the conf directory under solrhome to ZooKeeper.

Note: because ZooKeeper manages Solr's configuration files centrally (mainly schema.xml and solrconfig.xml), every SolrCloud node uses the configuration stored in ZooKeeper. The ./zkcli.sh command is located in solr-4.10.3/example/scripts/cloud-scripts/.

```
[root@localhost solrhome01]# cd /home/hadoop/soft/
[root@localhost soft]# ls
apache-tomcat-7.0.47 jdk1.7.0_55 solr-4.10.3 zookeeper-3.4.6
[root@localhost soft]# cd solr-4.10.3/
[root@localhost solr-4.10.3]# ls
bin CHANGES.txt contrib dist docs example licenses LICENSE.txt LUCENE_CHANGES.txt NOTICE.txt README.txt SYSTEM_REQUIREMENTS.txt
[root@localhost solr-4.10.3]# cd example/
[root@localhost example]# ls
contexts etc example-DIH exampledocs example-schemaless lib logs multicore README.txt resources scripts solr solr-webapp start.jar webapps
[root@localhost example]# cd scripts/
[root@localhost scripts]# ls
cloud-scripts map-reduce
[root@localhost scripts]# cd cloud-scripts/
[root@localhost cloud-scripts]# ls
log4j.properties zkcli.bat zkcli.sh
[root@localhost cloud-scripts]# ./zkcli.sh -zkhost 192.168.110.142:2181,192.168.110.142:2182,192.168.110.142:2183 -cmd upconfig -confdir /usr/local/solr-cloud/solrhome01/collection1/conf -confname myconf
```
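Typing the three-address zkHost string is where typos tend to creep in. A small wrapper around the same upconfig call, assuming the paths from this tutorial (ZKCLI and ZK_IP are hypothetical override variables), builds it from the client ports 2181 to 2183:

```shell
# Sketch: the upconfig call above, with the zkHost list built from a single IP.
# Assumptions: paths as in this tutorial; ZKCLI and ZK_IP are hypothetical overrides.
solr_upconfig() {
    zkcli="${ZKCLI:-/home/hadoop/soft/solr-4.10.3/example/scripts/cloud-scripts/zkcli.sh}"
    ip="${ZK_IP:-192.168.110.142}"
    # $1 = conf directory to upload, $2 = config name in ZooKeeper (defaults as above)
    "$zkcli" -zkhost "$ip:2181,$ip:2182,$ip:2183" -cmd upconfig \
        -confdir "${1:-/usr/local/solr-cloud/solrhome01/collection1/conf}" \
        -confname "${2:-myconf}"
}
```

Calling `solr_upconfig` with no arguments reproduces the command above; `solr_upconfig /path/to/conf otherconf` uploads a different directory under another name.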

Then log in to a ZooKeeper server and list the configuration files to confirm that the upload succeeded. Once uploaded, all nodes share this single copy of the configuration.

```
[root@localhost cloud-scripts]# cd /usr/local/solr-cloud/
[root@localhost solr-cloud]# ls
solrhome01 solrhome02 solrhome03 solrhome04 start-zookeeper.sh status-zookeeper.sh stop-zookeeper.sh tomcat01 tomcat02 tomcat03 tomcat04 zookeeper1 zookeeper2 zookeeper3
[root@localhost solr-cloud]# cd zookeeper1/
[root@localhost zookeeper1]# ls
bin CHANGES.txt contrib dist-maven ivysettings.xml lib NOTICE.txt README.txt src zookeeper-3.4.6.jar.asc zookeeper-3.4.6.jar.sha1
build.xml conf data docs ivy.xml LICENSE.txt README_packaging.txt recipes zookeeper-3.4.6.jar zookeeper-3.4.6.jar.md5 zookeeper.out
[root@localhost zookeeper1]# cd bin/
[root@localhost bin]# ls
README.txt zkCleanup.sh zkCli.cmd zkCli.sh zkEnv.cmd zkEnv.sh zkServer.cmd zkServer.sh
[root@localhost bin]# ./zkCli.sh
Connecting to localhost:2181
-- ::, [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.6-, built on // : GMT
-- ::, [myid:] - INFO [main:Environment@100] - Client environment:host.name=localhost
-- ::, [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.7.0_55
-- ::, [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation
-- ::, [myid:] - INFO [main:Environment@100] - Client environment:java.home=/home/hadoop/soft/jdk1.7.0_55/jre
-- ::, [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/usr/local/solr-cloud/zookeeper1/bin/../build/classes:/usr/local/solr-cloud/zookeeper1/bin/../build/lib/*.jar:/usr/local/solr-cloud/zookeeper1/bin/../lib/slf4j-log4j12-1.6.1.jar:/usr/local/solr-cloud/zookeeper1/bin/../lib/slf4j-api-1.6.1.jar:/usr/local/solr-cloud/zookeeper1/bin/../lib/netty-3.7.0.Final.jar:/usr/local/solr-cloud/zookeeper1/bin/../lib/log4j-1.2.16.jar:/usr/local/solr-cloud/zookeeper1/bin/../lib/jline-0.9.94.jar:/usr/local/solr-cloud/zookeeper1/bin/../zookeeper-3.4.6.jar:/usr/local/solr-cloud/zookeeper1/bin/../src/java/lib/*.jar:/usr/local/solr-cloud/zookeeper1/bin/../conf:
2019-09-13 20:06:46,776 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/i386:/lib:/usr/lib
2019-09-13 20:06:46,777 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp
2019-09-13 20:06:46,782 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>
2019-09-13 20:06:46,783 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux
2019-09-13 20:06:46,798 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=i386
2019-09-13 20:06:46,798 [myid:] - INFO [main:Environment@100] - Client environment:os.version=2.6.32-358.el6.i686
2019-09-13 20:06:46,798 [myid:] - INFO [main:Environment@100] - Client environment:user.name=root
2019-09-13 20:06:46,798 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/root
2019-09-13 20:06:46,798 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/usr/local/solr-cloud/zookeeper1/bin
2019-09-13 20:06:46,803 [myid:] - INFO [main:ZooKeeper@438] - Initiating client connection, connectString=localhost:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@932892
Welcome to ZooKeeper!
2019-09-13 20:06:46,983 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@975] - Opening socket connection to server localhost/0:0:0:0:0:0:0:1:2181. Will not attempt to authenticate using SASL (unknown error)
JLine support is enabled
2019-09-13 20:06:47,071 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@852] - Socket connection established to localhost/0:0:0:0:0:0:0:1:2181, initiating session
2019-09-13 20:06:47,163 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1235] - Session establishment complete on server localhost/0:0:0:0:0:0:0:1:2181, sessionid = 0x16d2d6bfd280001, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null
[zk: localhost:2181(CONNECTED) 0] ls /
[configs, zookeeper]
[zk: localhost:2181(CONNECTED) 1] ls /configs
[myconf]
[zk: localhost:2181(CONNECTED) 2] ls /configs/myconf
[admin-extra.menu-top.html, currency.xml, protwords.txt, mapping-FoldToASCII.txt, _schema_analysis_synonyms_english.json, _rest_managed.json, solrconfig.xml, _schema_analysis_stopwords_english.json, stopwords.txt, lang, spellings.txt, mapping-ISOLatin1Accent.txt, admin-extra.html, xslt, scripts.conf, synonyms.txt, update-script.js, velocity, elevate.xml, admin-extra.menu-bottom.html, schema.xml, clustering]
```

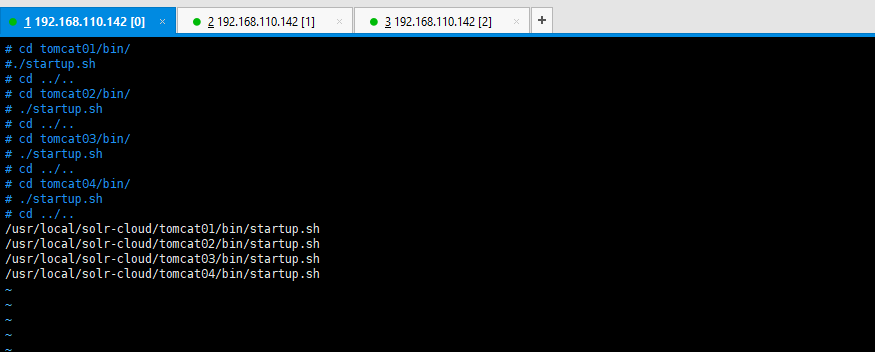

Then start all the Tomcats. Again, it is convenient to write a batch script that starts them.

```
[root@localhost solr-cloud]# vim start-tomcat.sh
[root@localhost solr-cloud]# ls
solrhome01 solrhome02 solrhome03 solrhome04 start-tomcat.sh start-zookeeper.sh status-zookeeper.sh stop-zookeeper.sh tomcat01 tomcat02 tomcat03 tomcat04 zookeeper1 zookeeper2 zookeeper3
[root@localhost solr-cloud]# chmod u+x start-tomcat.sh
[root@localhost solr-cloud]#
```

As shown below:
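The script was shown as a screenshot. A minimal sketch that starts or stops tomcat01 to tomcat04 through their own startup.sh and shutdown.sh scripts, assuming they live under /usr/local/solr-cloud (BASE is a hypothetical override):

```shell
# Sketch of start-tomcat.sh (plus a matching stop) for tomcat01..tomcat04.
# Assumption: BASE is a hypothetical override for /usr/local/solr-cloud.
tomcat_ctl() {
    case "${1:-}" in
        start) script=startup.sh ;;
        stop) script=shutdown.sh ;;
        *) echo "usage: tomcat_ctl {start|stop}" >&2; return 1 ;;
    esac
    base="${BASE:-/usr/local/solr-cloud}"
    for n in 1 2 3 4; do
        "$base/tomcat0$n/bin/$script"
    done
}
```

start-tomcat.sh then only needs `tomcat_ctl start`.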

Then run the script:

```
[root@localhost solr-cloud]# ./start-tomcat.sh
Using CATALINA_BASE: /usr/local/solr-cloud/tomcat01
Using CATALINA_HOME: /usr/local/solr-cloud/tomcat01
Using CATALINA_TMPDIR: /usr/local/solr-cloud/tomcat01/temp
Using JRE_HOME: /home/hadoop/soft/jdk1.7.0_55
Using CLASSPATH: /usr/local/solr-cloud/tomcat01/bin/bootstrap.jar:/usr/local/solr-cloud/tomcat01/bin/tomcat-juli.jar
Using CATALINA_BASE: /usr/local/solr-cloud/tomcat02
Using CATALINA_HOME: /usr/local/solr-cloud/tomcat02
Using CATALINA_TMPDIR: /usr/local/solr-cloud/tomcat02/temp
Using JRE_HOME: /home/hadoop/soft/jdk1.7.0_55
Using CLASSPATH: /usr/local/solr-cloud/tomcat02/bin/bootstrap.jar:/usr/local/solr-cloud/tomcat02/bin/tomcat-juli.jar
Using CATALINA_BASE: /usr/local/solr-cloud/tomcat03
Using CATALINA_HOME: /usr/local/solr-cloud/tomcat03
Using CATALINA_TMPDIR: /usr/local/solr-cloud/tomcat03/temp
Using JRE_HOME: /home/hadoop/soft/jdk1.7.0_55
Using CLASSPATH: /usr/local/solr-cloud/tomcat03/bin/bootstrap.jar:/usr/local/solr-cloud/tomcat03/bin/tomcat-juli.jar
Using CATALINA_BASE: /usr/local/solr-cloud/tomcat04
Using CATALINA_HOME: /usr/local/solr-cloud/tomcat04
Using CATALINA_TMPDIR: /usr/local/solr-cloud/tomcat04/temp
Using JRE_HOME: /home/hadoop/soft/jdk1.7.0_55
Using CLASSPATH: /usr/local/solr-cloud/tomcat04/bin/bootstrap.jar:/usr/local/solr-cloud/tomcat04/bin/tomcat-juli.jar
```

Then check what is running:

```
[root@localhost solr-cloud]# jps
Bootstrap
Bootstrap
Jps
QuorumPeerMain
QuorumPeerMain
QuorumPeerMain
Bootstrap
Bootstrap
[root@localhost solr-cloud]# ps -aux | grep tomcat
Warning: bad syntax, perhaps a bogus '-'? See /usr/share/doc/procps-3.2./FAQ
root 14.3 10.0 pts/ Sl : : /home/hadoop/soft/jdk1.7.0_55/bin/java -Djava.util.logging.config.file=/usr/local/solr-cloud/tomcat01/conf/logging.properties -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager -DzkHost=192.168.110.142:2181,192.168.110.142:2182,192.168.110.142:2183 -Djava.endorsed.dirs=/usr/local/solr-cloud/tomcat01/endorsed -classpath /usr/local/solr-cloud/tomcat01/bin/bootstrap.jar:/usr/local/solr-cloud/tomcat01/bin/tomcat-juli.jar -Dcatalina.base=/usr/local/solr-cloud/tomcat01 -Dcatalina.home=/usr/local/solr-cloud/tomcat01 -Djava.io.tmpdir=/usr/local/solr-cloud/tomcat01/temp org.apache.catalina.startup.Bootstrap start
root 14.3 10.2 pts/ Sl : : /home/hadoop/soft/jdk1.7.0_55/bin/java -Djava.util.logging.config.file=/usr/local/solr-cloud/tomcat02/conf/logging.properties -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager -DzkHost=192.168.110.142:2181,192.168.110.142:2182,192.168.110.142:2183 -Djava.endorsed.dirs=/usr/local/solr-cloud/tomcat02/endorsed -classpath /usr/local/solr-cloud/tomcat02/bin/bootstrap.jar:/usr/local/solr-cloud/tomcat02/bin/tomcat-juli.jar -Dcatalina.base=/usr/local/solr-cloud/tomcat02 -Dcatalina.home=/usr/local/solr-cloud/tomcat02 -Djava.io.tmpdir=/usr/local/solr-cloud/tomcat02/temp org.apache.catalina.startup.Bootstrap start
root 14.5 10.4 pts/ Sl : : /home/hadoop/soft/jdk1.7.0_55/bin/java -Djava.util.logging.config.file=/usr/local/solr-cloud/tomcat03/conf/logging.properties -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager -DzkHost=192.168.110.142:2181,192.168.110.142:2182,192.168.110.142:2183 -Djava.endorsed.dirs=/usr/local/solr-cloud/tomcat03/endorsed -classpath /usr/local/solr-cloud/tomcat03/bin/bootstrap.jar:/usr/local/solr-cloud/tomcat03/bin/tomcat-juli.jar -Dcatalina.base=/usr/local/solr-cloud/tomcat03 -Dcatalina.home=/usr/local/solr-cloud/tomcat03 -Djava.io.tmpdir=/usr/local/solr-cloud/tomcat03/temp org.apache.catalina.startup.Bootstrap start
root 14.8 10.6 pts/ Sl : : /home/hadoop/soft/jdk1.7.0_55/bin/java -Djava.util.logging.config.file=/usr/local/solr-cloud/tomcat04/conf/logging.properties -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager -DzkHost=192.168.110.142:2181,192.168.110.142:2182,192.168.110.142:2183 -Djava.endorsed.dirs=/usr/local/solr-cloud/tomcat04/endorsed -classpath /usr/local/solr-cloud/tomcat04/bin/bootstrap.jar:/usr/local/solr-cloud/tomcat04/bin/tomcat-juli.jar -Dcatalina.base=/usr/local/solr-cloud/tomcat04 -Dcatalina.home=/usr/local/solr-cloud/tomcat04 -Djava.io.tmpdir=/usr/local/solr-cloud/tomcat04/temp org.apache.catalina.startup.Bootstrap start
root 0.0 0.0 pts/ S+ : : grep tomcat
[root@localhost solr-cloud]#
```

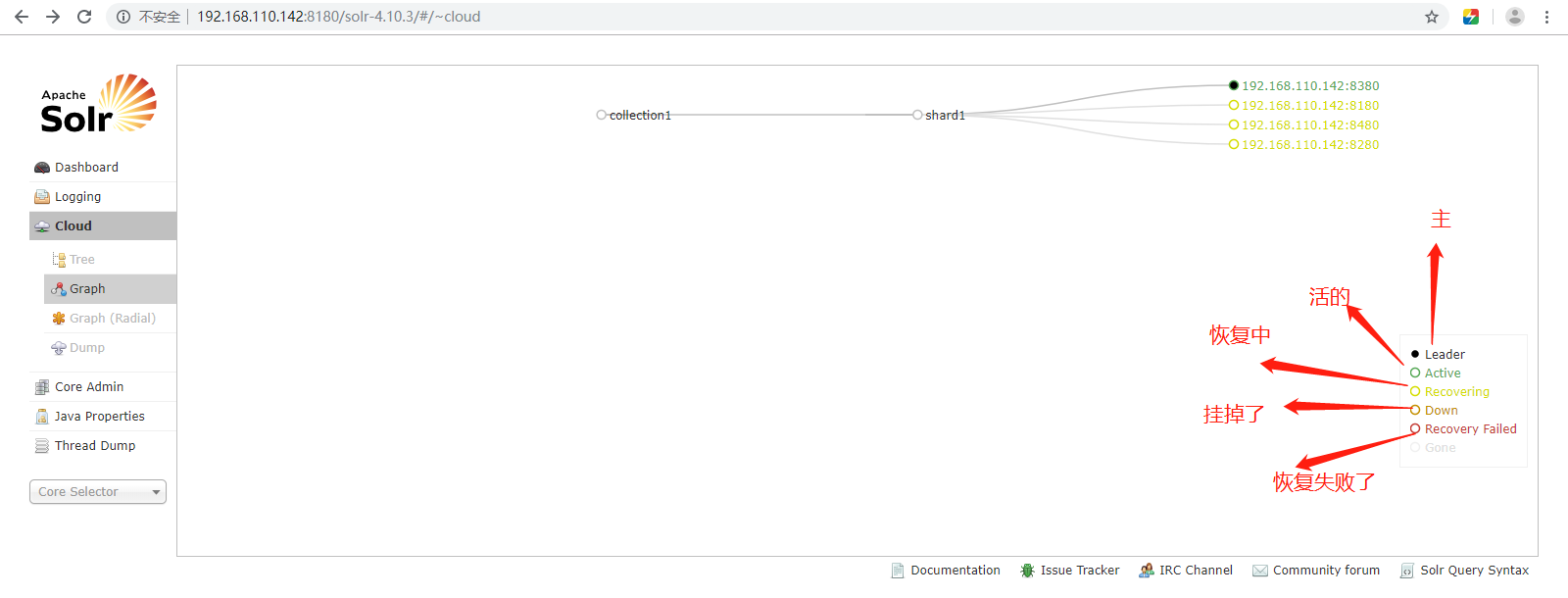

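After startup, open the admin page to see whether everything came up; you can also check the startup logs. Mine apparently had not started successfully, so I looked into the cause.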


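At this point I got a pile of baffling errors. I searched online for a long time without finding the cause; it simply would not start. The 1 GB of memory I had allocated was probably too small, so I raised it to 1.5 GB, deleted both the standalone and the cluster Solr installations, and rebuilt everything, and then it worked, as shown in the figure below. My advice: study this setup carefully and be meticulous while building it, because one small slip is enough to break it.

Memory is almost fully used even with 1.5 GB allocated, so the earlier 1 GB was clearly not enough.

The cloud graph looks like this:

Note: open any Solr node and a Cloud entry appears in the left-hand menu.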

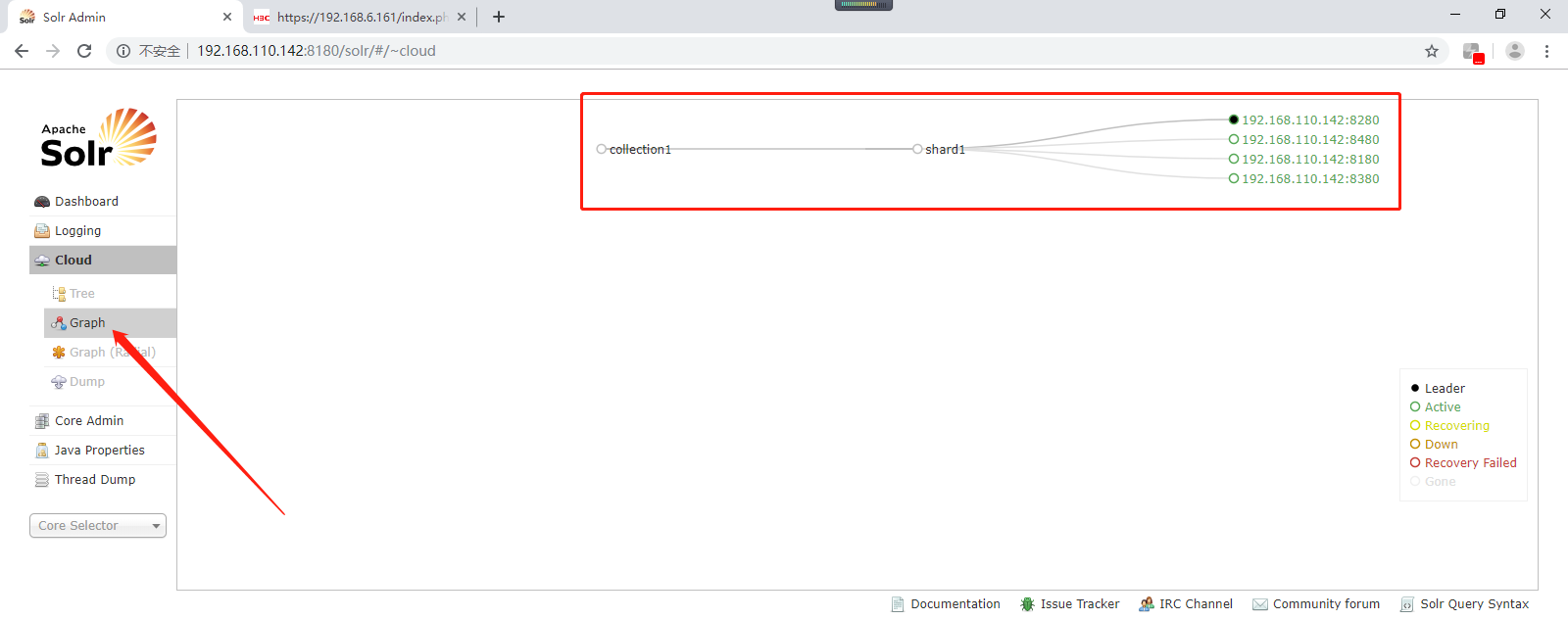

collection1 in the figure above has only one shard; you can configure a new collection as follows.

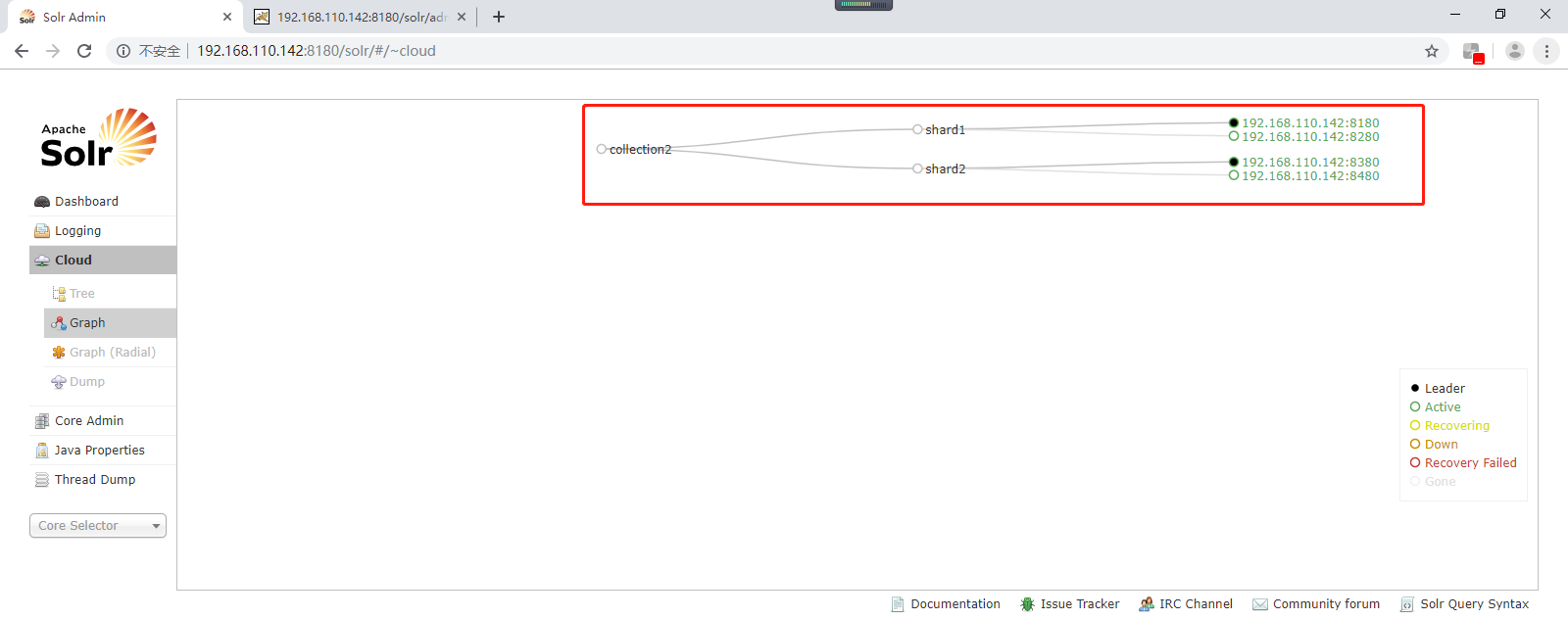

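The command to create a collection is shown below (note: with four Solr nodes in the cluster, this creates a new collection, collection2, split into two shards with two replicas each):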

http://192.168.110.142:8180/solr/admin/collections?action=CREATE&name=collection2&numShards=2&replicationFactor=2

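After refreshing, the page looks like this:

Once created, the collection is split into two shards, each with one leader and one replica (for example 192.168.110.142:8180 and 192.168.110.142:8280; the other pair follows the same pattern).

The command to delete a collection is: http://192.168.110.142:8180/solr/admin/collections?action=DELETE&name=collection1

You can delete the original collection1 or keep it, as you prefer.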
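Both Collections API calls above share one URL shape. A sketch that builds it, assuming the node 192.168.110.142:8180 used in this tutorial (SOLR_NODE is a hypothetical override variable):

```shell
# Sketch: build Collections API URLs such as the CREATE and DELETE calls above.
# Assumption: SOLR_NODE is a hypothetical override for the node used in this tutorial.
solr_collections_url() {
    node="${SOLR_NODE:-192.168.110.142:8180}"
    # $1 = action, $2 = collection name, $3 (optional) = extra parameters
    printf 'http://%s/solr/admin/collections?action=%s&name=%s%s\n' \
        "$node" "$1" "$2" "${3:+&$3}"
}
```

For example, `curl "$(solr_collections_url CREATE collection2 'numShards=2&replicationFactor=2')"`. Quote the URL when calling curl from a shell: an unquoted `&` sends the command to the background and cuts the URL short.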

Tip: when starting SolrCloud, first start all the ZooKeeper servers it depends on, then start each Solr server.
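The start order in the tip can be enforced in a script. A sketch, assuming the directory layout used here (BASE and TRIES are hypothetical override variables): it starts the three ZooKeeper instances, waits until each one's `zkServer.sh status` reports a `Mode:` line (leader or follower, as in step 6), and only then starts the four Tomcats.

```shell
# Sketch: enforce the start order, ZooKeeper first and Solr (Tomcat) second.
# Assumptions: layout as in this tutorial; BASE and TRIES are hypothetical overrides.
cloud_start() {
    base="${BASE:-/usr/local/solr-cloud}"
    tries="${TRIES:-30}"
    for i in 1 2 3; do
        "$base/zookeeper$i/bin/zkServer.sh" start
    done
    for i in 1 2 3; do
        t=0
        # "Mode: leader" or "Mode: follower" appears once the instance has joined the quorum
        until "$base/zookeeper$i/bin/zkServer.sh" status 2>&1 | grep -q 'Mode:'; do
            t=$((t + 1))
            if [ "$t" -ge "$tries" ]; then
                echo "zookeeper$i did not report a Mode after $tries tries" >&2
                return 1
            fi
            sleep 1
        done
    done
    for n in 1 2 3 4; do
        "$base/tomcat0$n/bin/startup.sh"
    done
}
```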
