Curiosity-Driven Learning through Next State Prediction

2019-10-19 20:43:17

This post is from: https://medium.com/data-from-the-trenches/curiosity-driven-learning-through-next-state-prediction-f7f4e2f592fa

In the last few years, we’ve seen a lot of breakthroughs in reinforcement learning (RL). From the first deep learning model to successfully learn a policy directly from pixel input using reinforcement learning in 2013, to the OpenAI Dexterity project in 2019, we are living in an exciting moment in RL research.

Today, we’ll learn about curiosity-driven learning methods, one of the most promising series of strategies in deep reinforcement learning. Thanks to this method, we were able to successfully train an agent that wins the first level of Super Mario Bros with only curiosity as a reward.

Remember that RL is based on the reward hypothesis, which is the idea that every goal can be described as the maximization of the expected cumulative reward. However, the current problem with extrinsic rewards (i.e., rewards given by the environment) is that the reward function is hand-coded by a human, which does not scale to real-world problems (such as designing a good reward function for autonomous vehicles).

The idea of curiosity-driven learning is to build a reward function that is intrinsic to the agent (i.e., generated by the agent itself). In this sense, the agent acts as a self-learner: it is the student, but also its own source of feedback.

Sound crazy? Yes, probably. But that’s the genius idea that was re-introduced in the 2017 paper Curiosity-Driven Exploration by Self-Supervised Prediction. The results were then improved in a second paper, Large-Scale Study of Curiosity-Driven Learning. Why re-introduced? Because curiosity has been a subject of research in RL since the 90s, with the amazing work of J. Schmidhuber.

The authors found that curiosity-driven agents perform as well as if they had extrinsic rewards, and that they generalize better to unexplored environments.

Two Major Problems in Modern RL

Modern RL suffers from two problems:

First, the sparse (or non-existent) rewards problem: most rewards are zero and therefore contain no information. Since rewards act as feedback for RL agents, an agent that receives none cannot update its knowledge of which actions are appropriate (or not).

[Figure: thanks to the reward, our agent knows that this action at that state was good.]

For instance, in the ViZDoom scenario “DoomMyWayHome,” your agent is only rewarded if it finds the vest. However, the vest is far away from the starting point, so most of your rewards will be zero.

[Figure: illustration by Felix Steger, to whom a big thanks.]

Therefore, if our agent does not receive useful feedback (dense rewards), it will take much longer to learn an optimal policy.

The second big problem is that the extrinsic reward function is handmade: in each environment, a human has to implement a reward function. But how can we scale that to big and complex environments?

So What Is Curiosity?

A solution to these problems is therefore to develop a reward function that is intrinsic to the agent, i.e., generated by the agent itself. This intrinsic reward mechanism is known as curiosity because it rewards the agent for exploring states that are novel or unfamiliar. To achieve that, our agent receives a high reward when it explores new trajectories.

This reward design is based on how humans play: some suppose we have an intrinsic desire to explore environments and discover new things! There are different ways to calculate this intrinsic reward; in this article, we’ll focus on curiosity through next-state prediction.

Curiosity Through Prediction-Based Surprise (or Next-State Prediction)

Curiosity is an intrinsic reward equal to our agent’s error in predicting the next state, given the current state and the action taken.
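More formally, let f denote a forward dynamics model that predicts the next state from the current state and action (the same letter the ICM section below uses for its Forward Model). Curiosity can then be sketched as that model’s prediction error; the squared Euclidean norm is our assumed choice of error measure here:

```latex
\hat{s}_{t+1} = f(s_t, a_t), \qquad
r^{i}_{t} = \big\lVert \hat{s}_{t+1} - s_{t+1} \big\rVert_2^2
```

Here r^i_t is the intrinsic (curiosity) reward at time t. The ICM computes the same kind of error, but in a learned feature space rather than on raw states.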

Why? Because the idea of curiosity is to encourage our agent to perform actions that reduce the uncertainty in the agent’s ability to predict the consequences of its own actions (uncertainty will be higher in areas where the agent has spent less time, or in areas with complex dynamics).

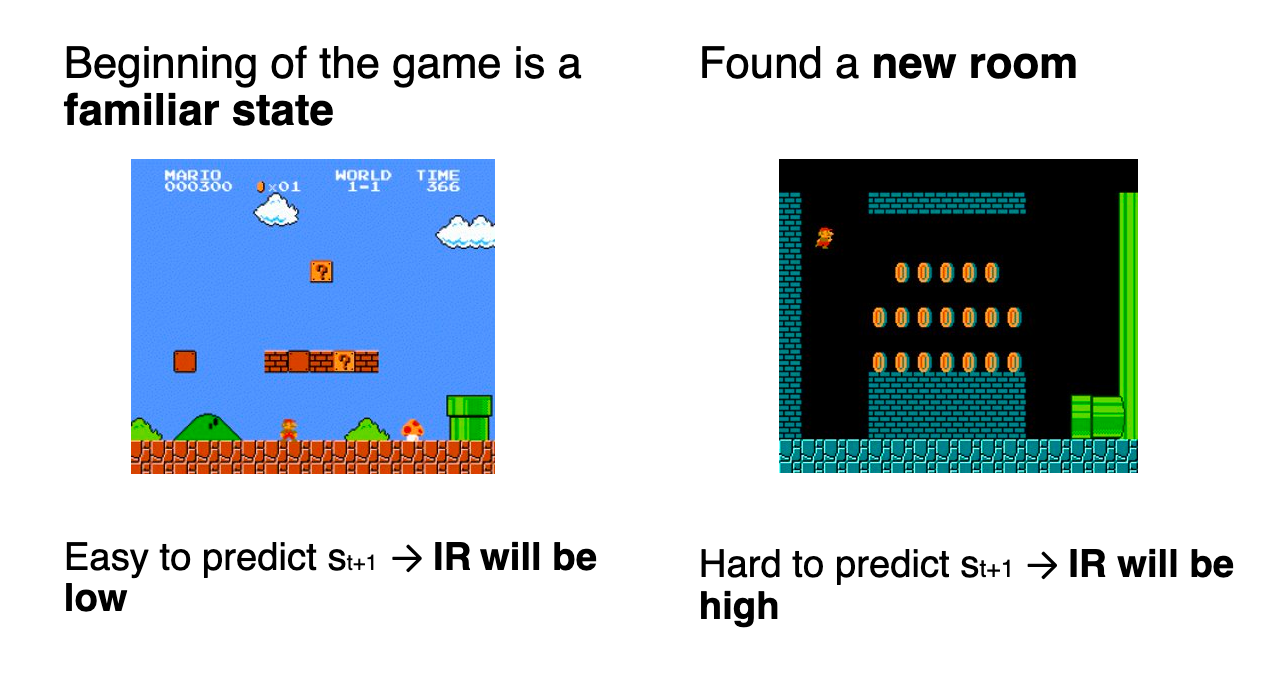

Let’s break it down further. Say you play Super Mario Bros:

- If you spend a lot of time at the beginning of the game (which is no longer new), the agent will be able to accurately predict what the next state will be, so the reward will be low.

- On the other hand, if you discover a new room, our agent will be very bad at predicting the next state, so the curiosity reward will be high and the agent will be pushed to explore this room.
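A toy illustration of this Mario intuition, under strong simplifying assumptions that are ours and not the papers’: states are small hashable tuples, dynamics are deterministic, and the “forward model” is a lookup table (the hypothetical `TabularForwardModel`) that predicts the average next state seen so far for each (state, action) pair, standing in for the neural network a real agent would use. The reward is the squared prediction error, so a transition is rewarding the first time and worthless once it is familiar:

```python
from collections import defaultdict


class TabularForwardModel:
    """Hypothetical toy forward model: predicts s(t+1) as the running mean of
    the next states previously observed after taking `action` in `state`."""

    def __init__(self, dim):
        self.dim = dim
        self.sums = defaultdict(lambda: [0.0] * dim)  # summed next states per (s, a)
        self.counts = defaultdict(int)                # visits per (s, a)

    def predict(self, state, action):
        key = (state, action)
        n = self.counts[key]
        if n == 0:
            return [0.0] * self.dim  # never seen: default guess of all zeros
        return [x / n for x in self.sums[key]]

    def update(self, state, action, next_state):
        key = (state, action)
        self.counts[key] += 1
        acc = self.sums[key]
        for i, x in enumerate(next_state):
            acc[i] += x


def curiosity(model, state, action, next_state):
    """Intrinsic reward = squared prediction error; then learn from the transition."""
    pred = model.predict(state, action)
    reward = sum((p - x) ** 2 for p, x in zip(pred, next_state))
    model.update(state, action, next_state)
    return reward


model = TabularForwardModel(dim=2)
start, move, after = (0.0, 0.0), "right", (1.0, 0.0)
print(curiosity(model, start, move, after))  # 1.0: first visit, surprising
print(curiosity(model, start, move, after))  # 0.0: already familiar
print(curiosity(model, (5.0, 3.0), "right", (6.0, 3.0)))  # 45.0: a "new room"
```

Because a table never generalizes, every unseen (state, action) pair starts from the same default guess; a neural forward model would instead partially generalize to similar states.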

Consequently, measuring this error requires building a model of the environment’s dynamics that predicts the next state given the current state and the action. The question we can ask here is: how can we calculate this error?

To calculate curiosity, we will use a module introduced in the first paper called the Intrinsic Curiosity Module.

The Need for a Good Feature Space

Before diving into the description of the module, we must ask ourselves: how can our agent predict the next state given our current state and our action?

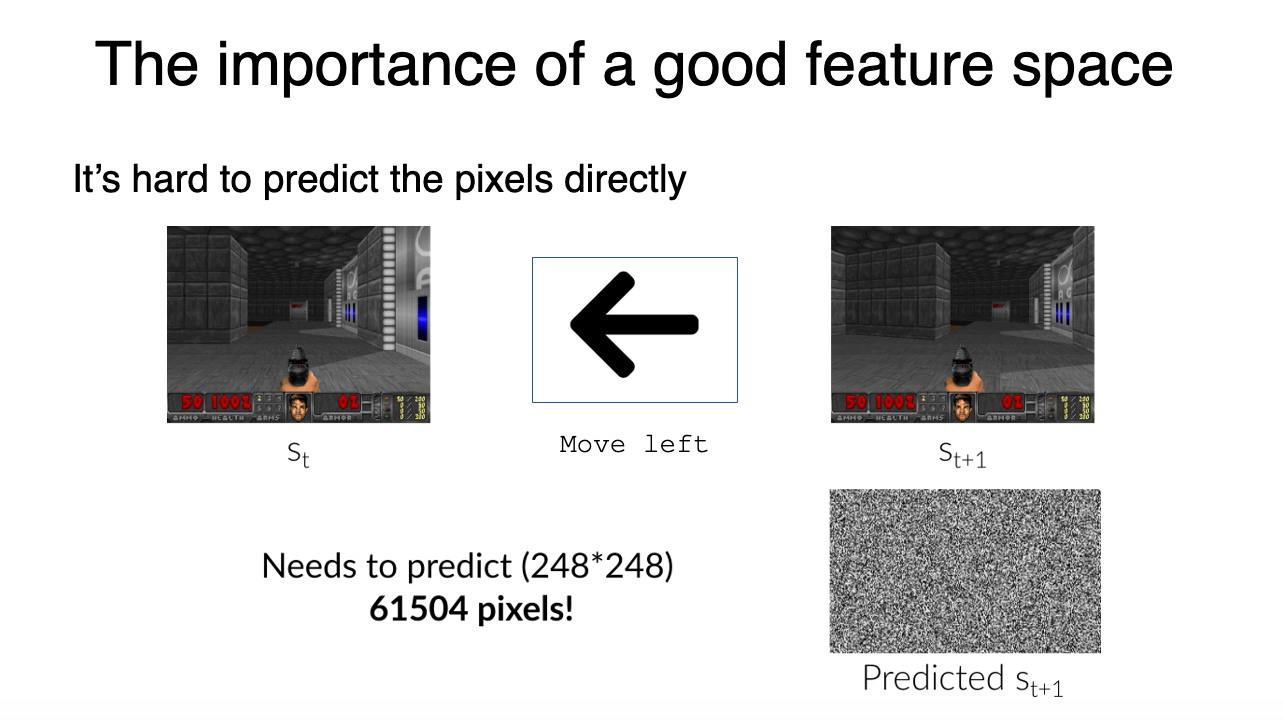

But we can’t predict s(t+1) by predicting the next frame as we usually do. Why?

First, because it’s hard to build a model that can predict a high-dimensional continuous state space, such as an image. Imagine you’re in Doom and you move left: you would need to predict 248 × 248 = 61,504 pixels!

This means that, to generate curiosity, we can’t predict the pixels directly. Instead, we need a better feature representation: we project the raw pixel space into a feature space that will hopefully keep only the relevant information that our agent can leverage.

The original paper defines three rules for a good feature space:

- Model things that can be controlled by the agent.

- Model things that can’t be controlled by the agent but can affect the agent.

- Don’t model things that are not controlled by the agent and have no effect on it.

The second reason we can’t predict s(t+1) by predicting the next frame as we usually do? It might just not be the right thing to do:

Imagine you need to study the movement of tree leaves in a breeze. First of all, it’s already hard enough to model the breeze, so predicting the pixel location of each leaf at each time step is even harder.

So instead of making predictions in the raw sensory space (pixels), we need to transform the raw sensory input (array of pixels) into a feature space with only relevant information.

Let’s take this example: your agent is a self-driving car. If we want to create a good feature representation, what do we need to model?

[Figure: the yellow boxes are the important elements.]

A good feature representation would include our car (controlled by our agent) and the other cars (we can’t control them, but they can affect the agent), but we don’t need to model the leaves (they don’t affect the agent, and we can’t control them). By keeping only this information, we obtain a feature representation with less noise.
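To make the leaves argument concrete, here is a minimal sketch of a hypothetical one-dimensional driving world (all names are ours, not from the papers). The forward model knows the car dynamics exactly, yet a prediction error computed on the raw observation never drops to zero, because the randomly blown leaves are unpredictable. A hand-made feature map `phi` that keeps only our car and the other car removes that noise. In the real ICM, Φ is learned through the inverse model rather than written by hand.

```python
import random


def step(obs, action, rng):
    """Hypothetical toy world: our car moves by `action`, the other car drives
    forward by 1 each step, and the leaves jump around at random (they neither
    affect the agent nor can be controlled by it)."""
    return {
        "ego": obs["ego"] + action,
        "other_car": obs["other_car"] + 1.0,
        "leaves": [rng.uniform(-1.0, 1.0) for _ in obs["leaves"]],
    }


def predict(obs, action):
    """Forward model that knows the car dynamics exactly. It cannot know where
    the leaves will be, so it guesses they stay put (any guess stays wrong on average)."""
    return {
        "ego": obs["ego"] + action,
        "other_car": obs["other_car"] + 1.0,
        "leaves": list(obs["leaves"]),
    }


def raw_vector(obs):
    # "Pixel-like" view: everything in the observation, leaves included.
    return [obs["ego"], obs["other_car"], *obs["leaves"]]


def phi(obs):
    # Hand-made feature map following the three rules: keep what the agent
    # controls (ego) and what affects it (other_car); drop the leaves.
    return [obs["ego"], obs["other_car"]]


def sq_error(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


rng = random.Random(0)
obs = {"ego": 0.0, "other_car": 5.0, "leaves": [0.0] * 20}
raw_errors, feature_errors = [], []
for _ in range(100):
    action = 1.0
    pred, obs = predict(obs, action), step(obs, action, rng)
    raw_errors.append(sq_error(raw_vector(pred), raw_vector(obs)))
    feature_errors.append(sq_error(phi(pred), phi(obs)))

print(sum(raw_errors) / len(raw_errors))          # stays well above zero
print(sum(feature_errors) / len(feature_errors))  # 0.0
```

An agent rewarded on the raw-space error would stay “curious” about the leaves forever; in feature space, the error only reflects what the agent controls or what affects it.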

The desired embedding space should:

- Be compact in terms of dimensionality (remove irrelevant parts of the observation space).

- Preserve sufficient information about the observation.

- Be stable, because non-stationary rewards (rewards that decrease over time, since curiosity decreases over time) make it difficult for reinforcement learning agents to learn.

In order to calculate the predicted next state and the real feature representation of the next state, we can use an Intrinsic Curiosity Module (ICM).

The ICM Module

The ICM Module is the system that helps us to generate curiosity. It’s composed of two neural networks; each of them has an important task.

The Inverse Model that Generates the Feature Representation of State and Next State

The Inverse Model (g) aims to predict the action â(t) that led from s(t) to s(t+1). In doing so, it learns an internal feature representation of the state and next state, denoted by Φ(s(t)) and Φ(s(t+1)).

You may ask, why do we need to predict the action if we want Φ(s(t)) and Φ(s(t+1))? Remember that we want our state representations to only consider elements that can affect our agent or that it can control.

Since this neural network is only used to predict the action, it has no incentive to represent, within its feature embedding space, the factors of variation in the environment that do not affect the agent itself.

Lastly, the inverse model is trained with the cross-entropy loss between our predicted action â(t) and the real action a(t).
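Assuming a discrete set of K actions (as in Super Mario Bros), the inverse model outputs a probability for each action, and the cross-entropy reduces to the negative log-probability of the action actually taken. In this sketch, θ_I denotes the inverse model’s parameters and p̂_k the probability that â(t) assigns to action k (our notation):

```latex
\hat{a}_t = g\big(\Phi(s_t), \Phi(s_{t+1}); \theta_I\big), \qquad
L_I = -\sum_{k=1}^{K} \mathbb{1}[a_t = k]\, \log \hat{p}_k = -\log \hat{p}_{a_t}
```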

The Forward Model that Predicts the Feature Representation of the Next State

The Forward Model (f) predicts the feature representation of the next state, hat_Φ(s(t+1)), given Φ(s(t)) and a(t).

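[Figure: the forward model takes Φ(s(t)) and a(t) as input and predicts the feature representation of s(t+1).]

The forward model is trained by minimizing the forward loss, which measures how far its prediction hat_Φ(s(t+1)) is from the real Φ(s(t+1)).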
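Following the formulation of the first paper as we understand it, with θ_F denoting the forward model’s parameters, the forward loss is half the squared L2 distance between the predicted and the real next-state features:

```latex
\hat{\Phi}(s_{t+1}) = f\big(\Phi(s_t), a_t; \theta_F\big), \qquad
L_F = \frac{1}{2} \big\lVert \hat{\Phi}(s_{t+1}) - \Phi(s_{t+1}) \big\rVert_2^2
```

The curiosity reward is this same quantity scaled by a factor η > 0, i.e. r^i_t = η L_F.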

Consequently, curiosity is a real number: the squared L2 norm of the difference between our predicted feature vector of the next state hat_Φ(s(t+1)) (predicted by the Forward Model) and the real feature vector of the next state Φ(s(t+1)) (produced by the encoder learned through the Inverse Model).

Finally, the overall optimization problem of this module is a weighted combination of the inverse loss and the forward loss.
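As we read the first paper, the module is trained jointly with the policy π (parameters θ_P). Here β ∈ [0, 1] trades off the inverse loss against the forward loss, λ > 0 weighs the policy’s reward objective against learning the curiosity signal, and r_t = r^i_t + r^e_t is the sum of the intrinsic and extrinsic rewards:

```latex
\min_{\theta_P,\, \theta_I,\, \theta_F}
\Big[ -\lambda\, \mathbb{E}_{\pi(s_t; \theta_P)}\Big[\textstyle\sum_t r_t\Big]
      + (1 - \beta)\, L_I + \beta\, L_F \Big]
```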

We then give the prediction error of the forward dynamics model to the agent as an intrinsic reward to encourage its curiosity.
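A minimal Python sketch of how the reward handed to the agent could be computed, assuming the feature vectors are plain lists of floats. It uses the squared-L2 form with a scaling factor `eta` and adds any extrinsic reward; the function names and the default `eta=1.0` are our own illustrative choices. In the curiosity-only Super Mario Bros setting, the extrinsic term is simply 0.

```python
def intrinsic_reward(phi_pred, phi_next, eta=1.0):
    """Curiosity reward r_i = (eta / 2) * ||phi_pred - phi_next||_2^2.

    phi_pred: hat_Phi(s(t+1)), the Forward Model's prediction.
    phi_next: Phi(s(t+1)), the encoded real next state.
    eta: scaling factor (the default of 1.0 is an arbitrary choice).
    """
    if len(phi_pred) != len(phi_next):
        raise ValueError("feature vectors must have the same length")
    squared_distance = sum((p - q) ** 2 for p, q in zip(phi_pred, phi_next))
    return 0.5 * eta * squared_distance


def total_reward(extrinsic, phi_pred, phi_next, eta=1.0):
    """Reward passed to the RL algorithm: extrinsic plus intrinsic."""
    return extrinsic + intrinsic_reward(phi_pred, phi_next, eta)


# Curiosity-only training (as in the Super Mario Bros run): extrinsic reward is 0.
print(total_reward(0.0, [1.0, 2.0], [1.0, 0.0]))  # 2.0
```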

That was a lot of information and mathematics!

To recap:

- Because of the hand-made extrinsic reward and sparse reward problems, we want to create a reward that is intrinsic to the agent.

- To do that, we created curiosity, which is the agent’s error in predicting the consequence of its action given its current state.

- Using curiosity will push our agent to favor transitions with high prediction error (which will be higher in areas where the agent has spent less time, or in areas with complex dynamics) and consequently better explore our environment.

- But because we can’t predict the next state by predicting the next frame (too complicated), we use a better feature representation that will keep only elements that can be controlled by our agent or affect our agent.

- To generate curiosity, we use the Intrinsic Curiosity Module, which is composed of two models: the Inverse Model, used to learn the feature representation of the state and next state, and the Forward Dynamics Model, used to generate the predicted feature representation of the next state.

- Curiosity is equal to the difference between hat_Φ(s(t+1)) (Forward Dynamics Model) and Φ(s(t+1)) (Inverse Dynamics Model).

ICM with Super Mario Bros

We wanted to see if our agent was able to play Super Mario Bros using only curiosity as reward. In order to do that, we used the official implementation of Deepak Pathak et al.

We trained our agent on 32 parallel environments for 15 hours on a small NVIDIA GeForce 940M GPU.

A video of our run shows that the agent was able to beat the first level of Super Mario Bros without extrinsic rewards. This shows that curiosity can be a good reward to train our agent.

That’s all for today! Now that you understand the theory, you should read the experimental results of the two papers: Curiosity-driven Exploration by Self-supervised Prediction and Large-Scale Study of Curiosity-Driven Learning.

Next time, we’ll learn about random network distillation and how it solves a major problem of ICM: the procrastinating agents.

data from the trenches

the nitty gritty of data science by the experts @ dataiku
