Andrew Ng Machine Learning 4: Neural Networks Learning

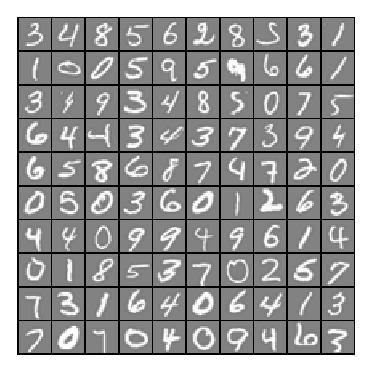

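Background: as in the previous lecture, the task is to recognize handwritten digits. We are given a dataset, ex4data1.mat, in which every example is a 20×20-pixel grayscale image, so each example has 400 features. Loading the data gives a 5000×400 matrix X (5000 examples) and a 5000×1 vector y holding the digit that each example represents. The goal is to fit a model that also predicts other handwritten digits well.

(Note: the digit 0 is labeled 10, in y as well, because Octave indexing starts at 1, so a matrix has no row 0.)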
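Because the labels run from 1 to 10, they can be used directly as row indices. A common way to turn them into the 0/1 vectors $y^{(i)}$ needed by the cost function is to index rows of an identity matrix. The snippet below is a minimal sketch of that trick (an assumption, not code from the original post), with made-up labels:

```octave
% Minimal sketch: recode labels 1..10 (10 stands for the digit 0) as one-hot rows.
% The example labels are made up for illustration.
num_labels = 10;
y = [10; 1; 5; 10];              % digits 0, 1, 5, 0

id = eye(num_labels);
Y = id(y, :);                    % row i has a single 1, in column y(i)

% Column 10 marks the digit 0. This only works because Octave/MATLAB
% indexing starts at 1: a label of 0 could not be used as a row index.
disp(Y);
```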

I. Neural Networks

Neural network script ex4.m:

```octave
%% Machine Learning Online Class - Exercise 4 Neural Network Learning

%  Instructions
%  ------------
%
%  This file contains code that helps you get started on the
%  neural network exercise. You will need to complete the following functions
%  in this exercise:
%
%     sigmoidGradient.m
%     randInitializeWeights.m
%     nnCostFunction.m
%
%  For this exercise, you will not need to change any code in this file,
%  or any other files other than those mentioned above.
%

%% Initialization
clear ; close all; clc

%% Setup the parameters you will use for this exercise
input_layer_size  = 400;  % 20x20 Input Images of Digits
hidden_layer_size = 25;   % 25 hidden units
num_labels = 10;          % 10 labels, from 1 to 10
                          % (note that we have mapped "0" to label 10)

%% =========== Part 1: Loading and Visualizing Data =============
%  We start the exercise by first loading and visualizing the dataset.
%  You will be working with a dataset that contains handwritten digits.
%

% Load Training Data
fprintf('Loading and Visualizing Data ...\n')

load('ex4data1.mat');
m = size(X, 1);

% Randomly select 100 data points to display
sel = randperm(size(X, 1));
sel = sel(1:100);

displayData(X(sel, :));

fprintf('Program paused. Press enter to continue.\n');
pause;

%% ================ Part 2: Loading Parameters ================
% In this part of the exercise, we load some pre-initialized
% neural network parameters.

fprintf('\nLoading Saved Neural Network Parameters ...\n')

% Load the weights into variables Theta1(25x401) and Theta2(10x26)
load('ex4weights.mat');

% Unroll parameters
nn_params = [Theta1(:) ; Theta2(:)];

%% ================ Part 3: Compute Cost (Feedforward) ================
%  For the neural network, you should start by implementing the
%  feedforward part of the neural network that returns the cost only. You
%  should complete the code in nnCostFunction.m to return cost. After
%  implementing the feedforward to compute the cost, you can verify that
%  your implementation is correct by verifying that you get the same cost
%  as us for the fixed debugging parameters.
%
%  We suggest implementing the feedforward cost *without* regularization
%  first so that it will be easier for you to debug. Later, in part 4, you
%  will get to implement the regularized cost.
%
fprintf('\nFeedforward Using Neural Network ...\n')

% Weight regularization parameter (we set this to 0 here).
lambda = 0;

J = nnCostFunction(nn_params, input_layer_size, hidden_layer_size, ...
                   num_labels, X, y, lambda);

fprintf(['Cost at parameters (loaded from ex4weights): %f '...
         '\n(this value should be about 0.287629)\n'], J);

fprintf('\nProgram paused. Press enter to continue.\n');
pause;

%% =============== Part 4: Implement Regularization ===============
%  Once your cost function implementation is correct, you should now
%  continue to implement the regularization with the cost.
%

fprintf('\nChecking Cost Function (w/ Regularization) ... \n')

% Weight regularization parameter (we set this to 1 here).
lambda = 1;

J = nnCostFunction(nn_params, input_layer_size, hidden_layer_size, ...
                   num_labels, X, y, lambda);

fprintf(['Cost at parameters (loaded from ex4weights): %f '...
         '\n(this value should be about 0.383770)\n'], J);

fprintf('Program paused. Press enter to continue.\n');
pause;

%% ================ Part 5: Sigmoid Gradient ================
%  Before you start implementing the neural network, you will first
%  implement the gradient for the sigmoid function. You should complete the
%  code in the sigmoidGradient.m file.
%

fprintf('\nEvaluating sigmoid gradient...\n')

g = sigmoidGradient([-1 -0.5 0 0.5 1]);
fprintf('Sigmoid gradient evaluated at [-1 -0.5 0 0.5 1]:\n  ');
fprintf('%f ', g);
fprintf('\n\n');

fprintf('Program paused. Press enter to continue.\n');
pause;

%% ================ Part 6: Initializing Parameters ================
%  In this part of the exercise, you will be starting to implement a two
%  layer neural network that classifies digits. You will start by
%  implementing a function to initialize the weights of the neural network
%  (randInitializeWeights.m)

fprintf('\nInitializing Neural Network Parameters ...\n')

initial_Theta1 = randInitializeWeights(input_layer_size, hidden_layer_size);
initial_Theta2 = randInitializeWeights(hidden_layer_size, num_labels);

% Unroll parameters
initial_nn_params = [initial_Theta1(:) ; initial_Theta2(:)];

%% =============== Part 7: Implement Backpropagation ===============
%  Once your cost matches up with ours, you should proceed to implement the
%  backpropagation algorithm for the neural network. You should add to the
%  code you've written in nnCostFunction.m to return the partial
%  derivatives of the parameters.
%
fprintf('\nChecking Backpropagation... \n');

%  Check gradients by running checkNNGradients
checkNNGradients;

fprintf('\nProgram paused. Press enter to continue.\n');
pause;

%% =============== Part 8: Implement Regularization ===============
%  Once your backpropagation implementation is correct, you should now
%  continue to implement the regularization with the cost and gradient.
%

fprintf('\nChecking Backpropagation (w/ Regularization) ... \n')

%  Check gradients by running checkNNGradients
lambda = 3;
checkNNGradients(lambda);

% Also output the costFunction debugging values
debug_J = nnCostFunction(nn_params, input_layer_size, ...
                         hidden_layer_size, num_labels, X, y, lambda);

fprintf(['\n\nCost at (fixed) debugging parameters (w/ lambda = %f): %f ' ...
         '\n(for lambda = 3, this value should be about 0.576051)\n\n'], lambda, debug_J);

fprintf('Program paused. Press enter to continue.\n');
pause;

%% =================== Part 9: Training NN ===================
%  You have now implemented all the code necessary to train a neural
%  network. To train your neural network, we will now use "fmincg", which
%  is a function which works similarly to "fminunc". Recall that these
%  advanced optimizers are able to train our cost functions efficiently as
%  long as we provide them with the gradient computations.
%
fprintf('\nTraining Neural Network... \n')

%  After you have completed the assignment, change the MaxIter to a larger
%  value to see how more training helps.
options = optimset('MaxIter', 50);

%  You should also try different values of lambda
lambda = 1;

% Create "short hand" for the cost function to be minimized
costFunction = @(p) nnCostFunction(p, ...
                                   input_layer_size, ...
                                   hidden_layer_size, ...
                                   num_labels, X, y, lambda);

% Now, costFunction is a function that takes in only one argument (the
% neural network parameters)
[nn_params, cost] = fmincg(costFunction, initial_nn_params, options);

% Obtain Theta1 and Theta2 back from nn_params
Theta1 = reshape(nn_params(1:hidden_layer_size * (input_layer_size + 1)), ...
                 hidden_layer_size, (input_layer_size + 1));

Theta2 = reshape(nn_params((1 + (hidden_layer_size * (input_layer_size + 1))):end), ...
                 num_labels, (hidden_layer_size + 1));

fprintf('Program paused. Press enter to continue.\n');
pause;

%% ================= Part 10: Visualize Weights =================
%  You can now "visualize" what the neural network is learning by
%  displaying the hidden units to see what features they are capturing in
%  the data.

fprintf('\nVisualizing Neural Network... \n')

displayData(Theta1(:, 2:end));

fprintf('\nProgram paused. Press enter to continue.\n');
pause;

%% ================= Part 11: Implement Predict =================
%  After training the neural network, we would like to use it to predict
%  the labels. You will now implement the "predict" function to use the
%  neural network to predict the labels of the training set. This lets
%  you compute the training set accuracy.

pred = predict(Theta1, Theta2, X);

fprintf('\nTraining Set Accuracy: %f\n', mean(double(pred == y)) * 100);
```

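The script relies on sigmoidGradient and randInitializeWeights, and it moves the weights between matrix form and one unrolled vector for fmincg. Below is a minimal sketch of the two helpers, written as anonymous functions so the snippet runs as one script (the exercise instead expects separate .m files), followed by the same unroll/reshape round trip that ex4.m performs. It uses $g'(z)=g(z)\,(1-g(z))$; the initialization range `epsilon_init = 0.12` is an assumed value, small enough to break symmetry without saturating the sigmoid.

```octave
% Minimal sketch of the helpers called by ex4.m (not the official solutions).
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Element-wise derivative of the sigmoid: g'(z) = g(z) .* (1 - g(z))
sigmoidGradient = @(z) sigmoid(z) .* (1 - sigmoid(z));

% Uniform weights in [-epsilon_init, epsilon_init]; the extra column holds
% the bias weights. epsilon_init = 0.12 is an assumed value.
epsilon_init = 0.12;
randInitializeWeights = @(L_in, L_out) rand(L_out, 1 + L_in) * 2 * epsilon_init - epsilon_init;

input_layer_size  = 400;
hidden_layer_size = 25;
num_labels        = 10;

g = sigmoidGradient([-1 -0.5 0 0.5 1]);
fprintf('%f ', g); fprintf('\n');   % prints 0.196612 0.235004 0.250000 0.235004 0.196612

initial_Theta1 = randInitializeWeights(input_layer_size, hidden_layer_size);  % 25 x 401
initial_Theta2 = randInitializeWeights(hidden_layer_size, num_labels);        % 10 x 26

% Unroll both matrices into a single column vector, as fmincg expects ...
nn_params = [initial_Theta1(:) ; initial_Theta2(:)];

% ... and recover them with the same reshape calls used in ex4.m
Theta1 = reshape(nn_params(1:hidden_layer_size * (input_layer_size + 1)), ...
                 hidden_layer_size, (input_layer_size + 1));
Theta2 = reshape(nn_params((1 + (hidden_layer_size * (input_layer_size + 1))):end), ...
                 num_labels, (hidden_layer_size + 1));
```

Because `(:)` reads a matrix column by column and `reshape` refills it in the same order, the round trip returns exactly the original matrices.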

1. Visualizing the data produces the figures below:

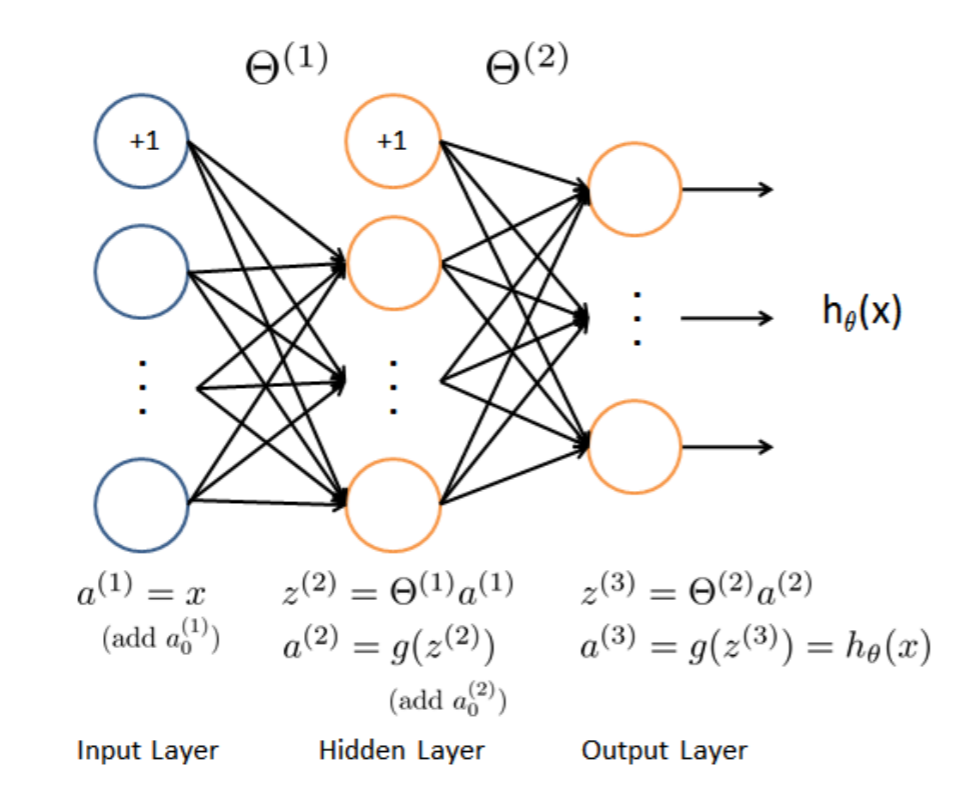

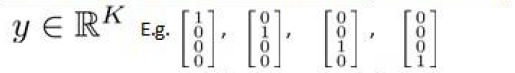

2. Feedforward and cost function

$J(\Theta)=-\frac{1}{m}\sum_{i=1}^{m}\sum_{k=1}^{K}\left[y^{(i)}_k\log\left((h_\Theta(x^{(i)}))_k\right)+(1-y^{(i)}_k)\log\left(1-(h_\Theta(x^{(i)}))_k\right)\right]$

$+\frac{\lambda}{2m}\sum_{l=1}^{L-1}\sum_{i=1}^{s_l}\sum_{j=1}^{s_{l+1}}\left(\Theta_{ji}^{(l)}\right)^{2}$

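Note: $(h_\Theta(x^{(i)}))_k=a^{(3)}_k$ is the activation of the $k$-th output unit.

When regularizing, this cost function ignores the bias terms, i.e. the $i=0$ column of each $\Theta^{(l)}$. The innermost loop over $j$ runs over the rows of $\Theta^{(l)}$ (one per unit in layer $l+1$, so $s_{l+1}$ of them), and the loop over $i$ runs over its columns (one per unit in layer $l$, so $s_l$ of them, bias excluded).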
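To connect the formula to code, here is a minimal vectorized sketch of the regularized cost for a 3-layer network. It is not the author's nnCostFunction.m: the toy sizes, the sin/cos-based data and weights, and the variable names are assumptions chosen so the snippet runs without ex4data1.mat.

```octave
% Minimal sketch: regularized cost J(Theta) for a 3-layer network, vectorized
% over all m examples. Sizes, data and weights are deterministic toy values.
sigmoid = @(z) 1 ./ (1 + exp(-z));

m = 5; input_layer_size = 3; hidden_layer_size = 4; num_labels = 3;
X = sin(reshape(1:m * input_layer_size, m, input_layer_size));
y = [1; 2; 3; 1; 2];
Theta1 = 0.5 * cos(reshape(1:hidden_layer_size * (input_layer_size + 1), ...
                           hidden_layer_size, input_layer_size + 1));
Theta2 = 0.5 * sin(reshape(1:num_labels * (hidden_layer_size + 1), ...
                           num_labels, hidden_layer_size + 1));
lambda = 1;

% Feedforward: prepend the bias unit (a column of ones) before each layer
a1 = [ones(m, 1) X];                        % m x (s1 + 1)
a2 = [ones(m, 1) sigmoid(a1 * Theta1')];    % m x (s2 + 1)
a3 = sigmoid(a2 * Theta2');                 % m x K; row i is h_Theta(x^(i))

% One-hot labels: Y(i, k) = y_k^(i)
id = eye(num_labels);
Y = id(y, :);

% Unregularized part: double sum over examples i and output units k
J_unreg = -(1 / m) * sum(sum(Y .* log(a3) + (1 - Y) .* log(1 - a3)));

% Regularization skips column 1 of each Theta (the bias weights, i = 0)
reg = (lambda / (2 * m)) * (sum(sum(Theta1(:, 2:end) .^ 2)) + ...
                            sum(sum(Theta2(:, 2:end) .^ 2)));

J = J_unreg + reg;
fprintf('J = %f (unregularized part %f)\n', J, J_unreg);
```

The `(:, 2:end)` slices are what "ignore the bias terms" means in practice: Octave's column 1 is the $i=0$ column of the formula.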
