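Offline Installation of Cloudera Manager 5 and CDH5 (Latest Version 5.9.3): Complete Tutorial (Part 7) Installation via the Web UI

I. Installation Process

1.1 Log in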

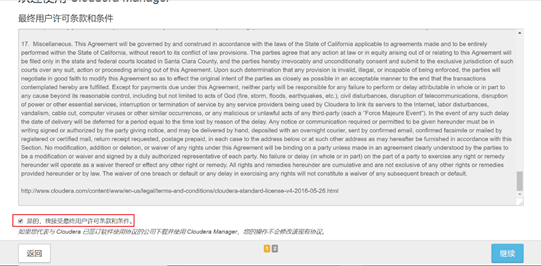

1.2 Accept the license agreement

1.3 Select the free edition

1.4 Proceed to the next step

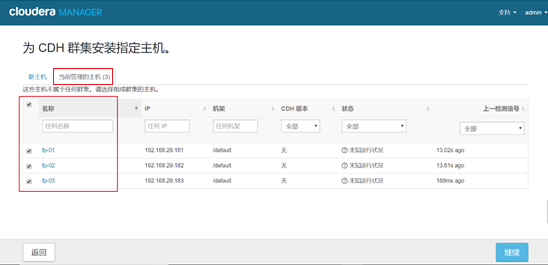

1.5 Select the currently managed hosts

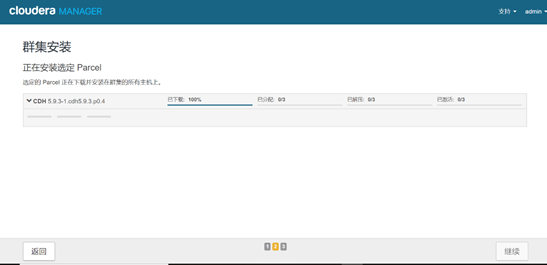

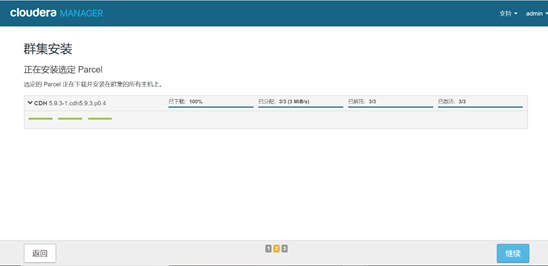

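1.6 Choose to install using Parcels, select the CDH version, and click Continue

1.7 Wait for the installation

This step takes a while, so be patient. The installation may take around 30 minutes, depending on the disk read/write speed and overall performance of the physical machines. If it is interrupted, repeat the preceding steps. The figure below shows the screen after a successful installation.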

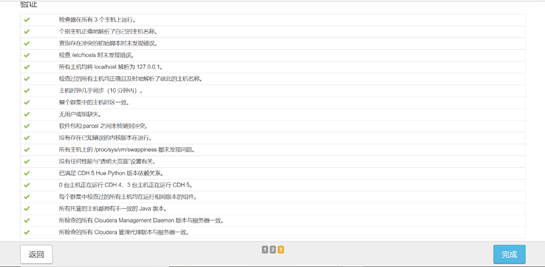

1.8 Cluster inspection

All checks pass.

1.9 Choose Custom Services and select the components to install

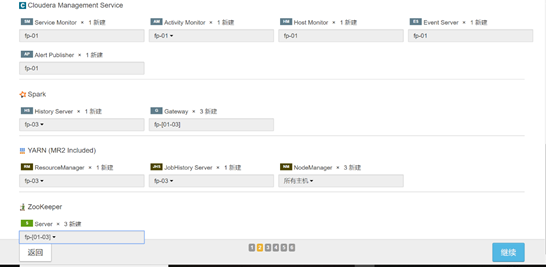

1.10 Assign roles

1.11 Database setup

Select the corresponding database and click Test Connection. Once the test passes, continue.

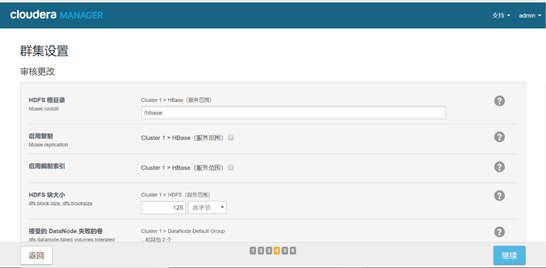

1.12 Cluster settings

The default settings are fine.

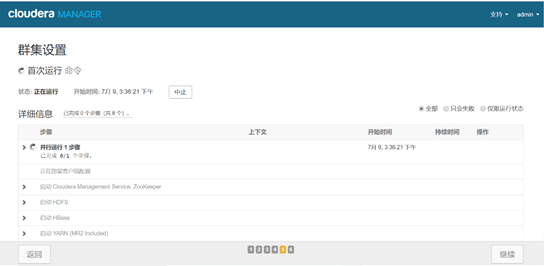

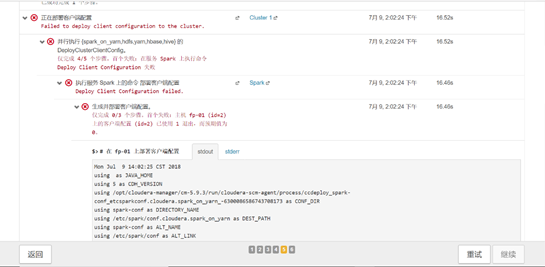

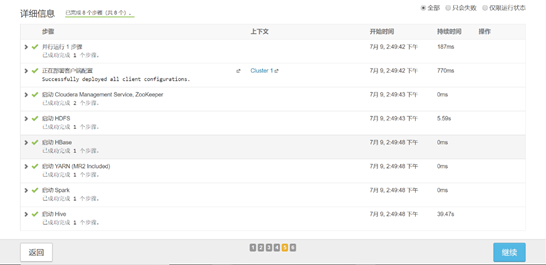

1.13 First-time installation of the components

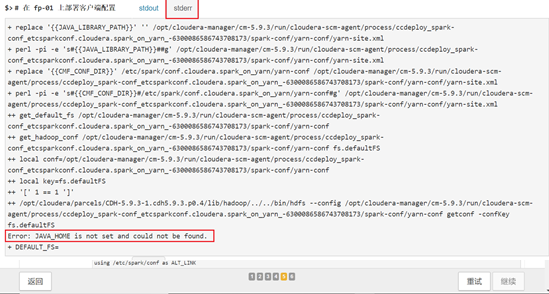

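1.14 Spark installation error

Check stderr for the error message; it reports that JAVA_HOME cannot be found.

Fix: this must be done on every node.

Manually add JAVA_HOME to the following file: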

[root@master soft]# cd /opt/cloudera-manager/cm-5.9./lib64/cmf/service/client/

[root@master client]# vi deploy-cc.sh

After saving:

[root@master client]# cat /etc/environment

Click Retry.

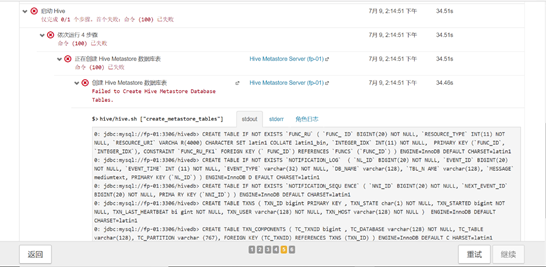

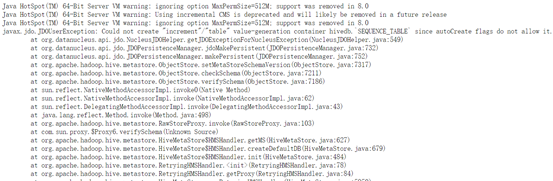
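The manual edit above can also be scripted, which helps when it has to be repeated on every node. The following is only a minimal sketch: `ensure_java_home` is a hypothetical helper, the JDK path in the usage note is an assumption, and inserting the line right after the first line of the script is a choice made here, not something the steps above prescribe.

```shell
# Sketch: make sure a script exports JAVA_HOME, without adding it twice.
# ensure_java_home is a hypothetical helper written for this example.
ensure_java_home() {
    local target_file="$1"   # script to patch, e.g. deploy-cc.sh
    local java_home="$2"     # JDK installation directory (your own path)
    local tmp_file

    # Do nothing if the file already exports JAVA_HOME.
    if grep -q '^export JAVA_HOME=' "$target_file"; then
        return 0
    fi

    # Keep a backup copy next to the original file.
    cp -p "$target_file" "$target_file.bak"

    # Insert the export right after the first line (normally the shebang).
    tmp_file="$(mktemp)"
    awk -v line="export JAVA_HOME=$java_home" \
        'NR == 1 { print; print line; next } { print }' \
        "$target_file" > "$tmp_file"

    # Write back with cat so the file keeps its permissions.
    cat "$tmp_file" > "$target_file"
    rm -f "$tmp_file"
}
```

A call would look like `ensure_java_home deploy-cc.sh /usr/java/jdk1.8.0` (the JDK directory is a placeholder), run from the directory that contains the script. Running it a second time leaves the file unchanged.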

1.15 Hive installation error

Check stderr for the error message; it shows that Hive initialization failed.

Troubleshooting steps:

(1) Copy the JDBC driver JAR

[root@master ~]# cp /root/soft/mysql-connector-java-5.1.-bin.jar /opt/cloudera/parcels/CDH-5.9.-.cdh5.9.3.p0./lib/hive/lib/

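Click Retry; the error persists.

Click to view the full log.

Click the link.

Search for hive.metastore.schema.verification in the search box, clear the checkbox, and save the change. Then return to the installation screen and click Retry.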

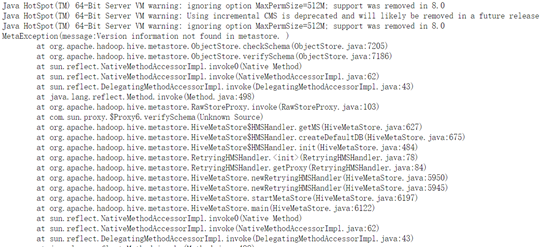

The error persists. View the full log:

Java HotSpot(TM) -Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Java HotSpot(TM) -Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

Java HotSpot(TM) -Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

javax.jdo.JDOUserException: Could not create "increment"/"table" value-generation container hivedb.`SEQUENCE_TABLE` since autoCreate flags do not allow it.

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:)

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.setMetaStoreSchemaVersion(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:)

at com.sun.proxy.$Proxy6.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.util.RunJar.run(RunJar.java:)

at org.apache.hadoop.util.RunJar.main(RunJar.java:)

NestedThrowablesStackTrace:

Could not create "increment"/"table" value-generation container hivedb.`SEQUENCE_TABLE` since autoCreate flags do not allow it.

org.datanucleus.exceptions.NucleusUserException: Could not create "increment"/"table" value-generation container hivedb.`SEQUENCE_TABLE` since autoCreate flags do not allow it.

at org.datanucleus.store.rdbms.valuegenerator.TableGenerator.createRepository(TableGenerator.java:)

at org.datanucleus.store.rdbms.valuegenerator.AbstractRDBMSGenerator.obtainGenerationBlock(AbstractRDBMSGenerator.java:)

at org.datanucleus.store.valuegenerator.AbstractGenerator.obtainGenerationBlock(AbstractGenerator.java:)

at org.datanucleus.store.valuegenerator.AbstractGenerator.next(AbstractGenerator.java:)

at org.datanucleus.store.rdbms.RDBMSStoreManager.getStrategyValueForGenerator(RDBMSStoreManager.java:)

at org.datanucleus.store.AbstractStoreManager.getStrategyValue(AbstractStoreManager.java:)

at org.datanucleus.ExecutionContextImpl.newObjectId(ExecutionContextImpl.java:)

at org.datanucleus.state.JDOStateManager.setIdentity(JDOStateManager.java:)

at org.datanucleus.state.JDOStateManager.initialiseForPersistentNew(JDOStateManager.java:)

at org.datanucleus.state.ObjectProviderFactoryImpl.newForPersistentNew(ObjectProviderFactoryImpl.java:)

at org.datanucleus.ExecutionContextImpl.newObjectProviderForPersistentNew(ExecutionContextImpl.java:)

at org.datanucleus.ExecutionContextImpl.persistObjectInternal(ExecutionContextImpl.java:)

at org.datanucleus.ExecutionContextImpl.persistObjectWork(ExecutionContextImpl.java:)

at org.datanucleus.ExecutionContextImpl.persistObject(ExecutionContextImpl.java:)

at org.datanucleus.ExecutionContextThreadedImpl.persistObject(ExecutionContextThreadedImpl.java:)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:)

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.setMetaStoreSchemaVersion(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:)

at com.sun.proxy.$Proxy6.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.util.RunJar.run(RunJar.java:)

at org.apache.hadoop.util.RunJar.main(RunJar.java:)

Exception in thread "main" javax.jdo.JDOUserException: Could not create "increment"/"table" value-generation container hivedb.`SEQUENCE_TABLE` since autoCreate flags do not allow it.

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:)

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.setMetaStoreSchemaVersion(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:)

at com.sun.proxy.$Proxy6.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.util.RunJar.run(RunJar.java:)

at org.apache.hadoop.util.RunJar.main(RunJar.java:)

NestedThrowablesStackTrace:

Could not create "increment"/"table" value-generation container hivedb.`SEQUENCE_TABLE` since autoCreate flags do not allow it.

org.datanucleus.exceptions.NucleusUserException: Could not create "increment"/"table" value-generation container hivedb.`SEQUENCE_TABLE` since autoCreate flags do not allow it.

at org.datanucleus.store.rdbms.valuegenerator.TableGenerator.createRepository(TableGenerator.java:)

at org.datanucleus.store.rdbms.valuegenerator.AbstractRDBMSGenerator.obtainGenerationBlock(AbstractRDBMSGenerator.java:)

at org.datanucleus.store.valuegenerator.AbstractGenerator.obtainGenerationBlock(AbstractGenerator.java:)

at org.datanucleus.store.valuegenerator.AbstractGenerator.next(AbstractGenerator.java:)

at org.datanucleus.store.rdbms.RDBMSStoreManager.getStrategyValueForGenerator(RDBMSStoreManager.java:)

at org.datanucleus.store.AbstractStoreManager.getStrategyValue(AbstractStoreManager.java:)

at org.datanucleus.ExecutionContextImpl.newObjectId(ExecutionContextImpl.java:)

at org.datanucleus.state.JDOStateManager.setIdentity(JDOStateManager.java:)

at org.datanucleus.state.JDOStateManager.initialiseForPersistentNew(JDOStateManager.java:)

at org.datanucleus.state.ObjectProviderFactoryImpl.newForPersistentNew(ObjectProviderFactoryImpl.java:)

at org.datanucleus.ExecutionContextImpl.newObjectProviderForPersistentNew(ExecutionContextImpl.java:)

at org.datanucleus.ExecutionContextImpl.persistObjectInternal(ExecutionContextImpl.java:)

at org.datanucleus.ExecutionContextImpl.persistObjectWork(ExecutionContextImpl.java:)

at org.datanucleus.ExecutionContextImpl.persistObject(ExecutionContextImpl.java:)

at org.datanucleus.ExecutionContextThreadedImpl.persistObject(ExecutionContextThreadedImpl.java:)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:)

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.setMetaStoreSchemaVersion(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:)

at com.sun.proxy.$Proxy6.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.util.RunJar.run(RunJar.java:)

at org.apache.hadoop.util.RunJar.main(RunJar.java:)

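Cause of the error:

The MySQL binlog_format parameter is set incorrectly. It was originally set to STATEMENT and needs to be changed to MIXED. To change it, add binlog_format=MIXED to /usr/my.cnf.

Then restart MySQL and click Retry again; all steps now pass. Click Continue.

1.16 Finish the installation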
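The my.cnf change described above could be scripted roughly as follows. This is a sketch under assumptions: `set_binlog_format_mixed` is a hypothetical helper, and it assumes the setting belongs in the `[mysqld]` section of the file, which the text above does not state explicitly.

```shell
# Sketch: set binlog_format=MIXED in the [mysqld] section of a my.cnf-style file.
# set_binlog_format_mixed is a hypothetical helper written for this example.
set_binlog_format_mixed() {
    local cnf_file="$1"
    local tmp_file
    tmp_file="$(mktemp)"

    awk '
        # Drop any existing binlog_format line so the setting is not duplicated.
        /^[ \t]*binlog[-_]format[ \t]*=/ { next }
        # Copy every other line through unchanged.
        { print }
        # Add the new value directly under the [mysqld] section header.
        /^\[mysqld\][ \t]*$/ { print "binlog_format=MIXED"; found = 1 }
        # If the file has no [mysqld] section, append one at the end.
        END { if (!found) { print "[mysqld]"; print "binlog_format=MIXED" } }
    ' "$cnf_file" > "$tmp_file"

    # Write back with cat so the file keeps its owner and permissions.
    cat "$tmp_file" > "$cnf_file"
    rm -f "$tmp_file"
}
```

For the setup in this tutorial the call would be `set_binlog_format_mixed /usr/my.cnf`, ideally after backing the file up. After MySQL has been restarted, `SHOW VARIABLES LIKE 'binlog_format';` in a MySQL session should report MIXED.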

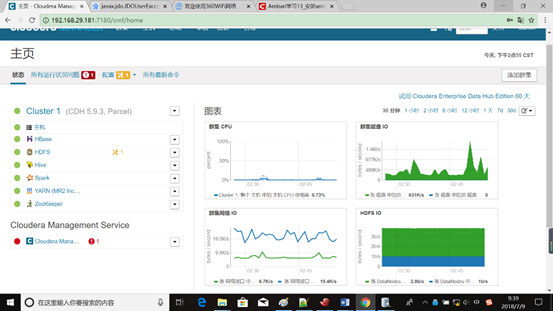

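II. Debugging

2.1 Installation complete

2.2 HDFS configuration warning

Click the yellow wrench icon; the warning concerns the NameNode Java heap size.

Change the value to 4 GiB and click Save; set every field in the box to 4 GiB.

Restart the stale services.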

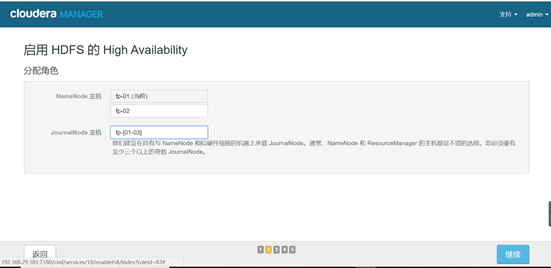
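The interface expresses the heap size in gibibytes (GiB, shown as 吉字节). If the same value is needed in bytes, for example in a script, the conversion is simple arithmetic. The helper name `gib_to_bytes` is made up for this sketch, and whether a given tool expects bytes is an assumption to verify.

```shell
# Sketch: convert a size in GiB to bytes (1 GiB = 1024 * 1024 * 1024 bytes).
# gib_to_bytes is a hypothetical helper written for this example.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 4   # prints 4294967296
```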

2.3 Enable HDFS high availability
