Using Python 2.7 with the ceph-cluster S3 object interface: upload, download, list, and delete, plus ownCloud on Docker for UI management

python version: python2.7

需要安装得轮子:

boto

filechunkio command:

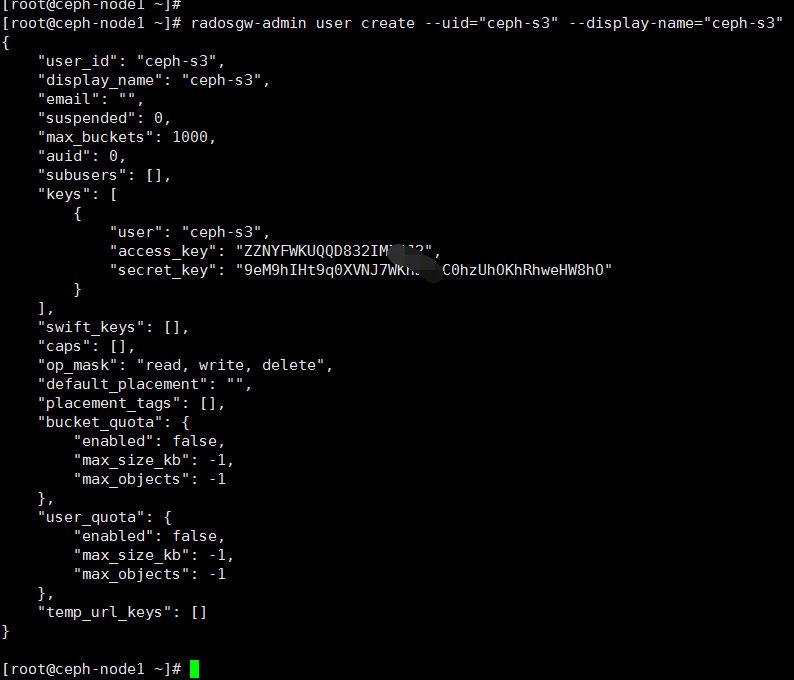

yum install python-pip&& pip install boto filechunkio ceph集群user(ceph-s3) 和 用户access_key,secret_key

代码:

# -*- coding: utf-8 -*-
# yum install python-boto
import boto
import boto.s3.connection
# pip install filechunkio
from filechunkio import FileChunkIO
import math
import threading
import os
import Queue
import sys

class Chunk(object):
    num = 0
    offset = 0
    length = 0

    def __init__(self, n, o, l):
        self.num = n        # 1-based part number
        self.offset = o     # byte offset of the chunk within the file
        self.length = l     # chunk size in bytes

# Helper class that emulates a switch/case statement
class switch(object):
    def __init__(self, value):
        self.value = value
        self.fall = False

    def __iter__(self):
        """Return the match method once, then stop"""
        yield self.match
        raise StopIteration

    def match(self, *args):
        """Indicate whether or not to enter a case suite"""
        if self.fall or not args:
            return True
        elif self.value in args:  # changed for v1.5, see below
            self.fall = True
            return True
        else:
            return False


class CONNECTION(object):
    # chrunksize should be at least 8 MB; smaller chunks caused errors during upload
    def __init__(self, access_key, secret_key, ip, port, is_secure=False, chrunksize=8 << 20):
        self.conn = boto.connect_s3(
            aws_access_key_id=access_key,
            aws_secret_access_key=secret_key,
            host=ip, port=port,
            is_secure=is_secure,
            calling_format=boto.s3.connection.OrdinaryCallingFormat()
        )
        self.chrunksize = chrunksize
        self.port = port

    # List the files in every bucket

    def list_all(self):
        all_buckets = self.conn.get_all_buckets()
        for bucket in all_buckets:
            print u'PG container name: %s' % (bucket.name)
            for key in bucket.list():
                print ' ' * 5, "%-20s%-20s%-20s%-40s%-20s" % (key.mode, key.owner.id, key.size, key.last_modified.split('.')[0], key.name)

    # List all buckets

    def get_show_buckets(self):
        for bucket in self.conn.get_all_buckets():
            print "Ceph-back-Name: {name}\tCreateTime: {created}".format(
                name=bucket.name,
                created=bucket.creation_date,
            )

    # List the files in a single bucket
    def list_single(self, bucket_name):
        try:
            single_bucket = self.conn.get_bucket(bucket_name)
        except Exception as e:
            print 'bucket %s does not exist' % bucket_name
            return
        print u'Container name: %s' % (single_bucket.name)
        for key in single_bucket.list():
            print ' ' * 5, "%-20s%-20s%-20s%-40s%-20s" % (key.mode, key.owner.id, key.size, key.last_modified.split('.')[0], key.name)

    # Download a regular small file (size <= 8 MB)

    def dowload_file(self, filepath, key_name, bucket_name):
        all_bucket_name_list = [i.name for i in self.conn.get_all_buckets()]
        if bucket_name not in all_bucket_name_list:
            print 'Bucket %s does not exist, please try again' % (bucket_name)
            return
        else:
            bucket = self.conn.get_bucket(bucket_name)

        all_key_name_list = [i.name for i in bucket.get_all_keys()]
        if key_name not in all_key_name_list:
            print 'File %s does not exist, please try again' % (key_name)
            return
        else:
            key = bucket.get_key(key_name)

        # The target directory must already exist
        if not os.path.exists(os.path.dirname(filepath)):
            print 'Directory for %s does not exist, please create it and try again' % (filepath)
            return

        # Ask before overwriting an existing local file
        if os.path.exists(filepath):
            while True:
                d_tag = raw_input('File %s already exists, overwrite it (Y/N)?' % (key_name)).strip()
                if d_tag not in ['Y', 'N'] or len(d_tag) == 0:
                    continue
                elif d_tag == 'Y':
                    os.remove(filepath)
                    break
                elif d_tag == 'N':
                    return
        os.mknod(filepath)
        try:
            key.get_contents_to_filename(filepath)
        except Exception as e:
            # Report the failure instead of silently ignoring it
            print 'Download of %s failed: %s' % (key_name, e)

    # Upload a regular small file (size <= 8 MB)

    def upload_file(self, filepath, key_name, bucket_name):
        try:
            bucket = self.conn.get_bucket(bucket_name)
        except Exception as e:
            print 'bucket %s does not exist' % bucket_name
            tag = raw_input('Do you want to create the bucket %s: (Y/N)?' % bucket_name).strip()
            while tag not in ['Y', 'N']:
                tag = raw_input('Please input (Y/N)').strip()
            if tag == 'N':
                return
            elif tag == 'Y':
                self.conn.create_bucket(bucket_name)
                bucket = self.conn.get_bucket(bucket_name)
        all_key_name_list = [i.name for i in bucket.get_all_keys()]
        # Ask before overwriting an existing object
        if key_name in all_key_name_list:
            while True:
                f_tag = raw_input(u'File already exists, overwrite it (Y/N)?: ').strip()
                if f_tag not in ['Y', 'N'] or len(f_tag) == 0:
                    continue
                elif f_tag == 'Y':
                    break
                elif f_tag == 'N':
                    return
        key = bucket.new_key(key_name)
        if not os.path.exists(filepath):
            print 'File %s does not exist, please check the path of the file to upload and try again' % (filepath)
            return
        try:
            # Read the whole file into memory; acceptable only for small files
            with open(filepath, 'rb') as f:
                data = f.read()
            key.set_contents_from_string(data)
        except Exception as e:
            # Report the failure instead of silently ignoring it
            print 'Upload of %s failed: %s' % (filepath, e)

    # Delete a file from a bucket

    def delete_file(self, key_name, bucket_name):
        all_bucket_name_list = [i.name for i in self.conn.get_all_buckets()]
        if bucket_name not in all_bucket_name_list:
            print 'Bucket %s does not exist, please try again' % (bucket_name)
            return
        else:
            bucket = self.conn.get_bucket(bucket_name)

        all_key_name_list = [i.name for i in bucket.get_all_keys()]
        if key_name not in all_key_name_list:
            print 'File %s does not exist, please try again' % (key_name)
            return
        else:
            key = bucket.get_key(key_name)

        try:
            bucket.delete_key(key.name)
        except Exception as e:
            # Report the failure instead of silently ignoring it
            print 'Deleting %s failed: %s' % (key_name, e)

    # Delete a bucket

    def delete_bucket(self, bucket_name):
        all_bucket_name_list = [i.name for i in self.conn.get_all_buckets()]
        if bucket_name not in all_bucket_name_list:
            print 'Bucket %s does not exist, please try again' % (bucket_name)
            return
        else:
            bucket = self.conn.get_bucket(bucket_name)

        try:
            self.conn.delete_bucket(bucket.name)
        except Exception as e:
            # Report the failure instead of silently ignoring it
            print 'Deleting bucket %s failed: %s' % (bucket_name, e)

    # Build the queue of chunks

    def init_queue(self, filesize, chunksize):  # 8<<20 : 8*2**20
        # Number of chunks, rounding up so the last partial chunk is included
        chunkcnt = int(math.ceil(filesize * 1.0 / chunksize))
        q = Queue.Queue(maxsize=chunkcnt)
        for i in range(0, chunkcnt):
            offset = chunksize * i
            length = min(chunksize, filesize - offset)
            c = Chunk(i + 1, offset, length)
            q.put(c)
        return q

    # Upload the object in parts

    def upload_trunk(self, filepath, mp, q, id):
        while not q.empty():
            chunk = q.get()
            fp = FileChunkIO(filepath, 'r', offset=chunk.offset, bytes=chunk.length)
            mp.upload_part_from_file(fp, part_num=chunk.num)
            fp.close()
            q.task_done()

    # Get the file size -> create the S3 multipart upload object -> build the initial queue (split the file and generate the chunk objects)

    def upload_file_multipart(self, filepath, key_name, bucket_name, threadcnt=8):
        filesize = os.stat(filepath).st_size
        try:
            bucket = self.conn.get_bucket(bucket_name)
        except Exception as e:
            print 'bucket %s does not exist' % bucket_name
            tag = raw_input('Do you want to create the bucket %s: (Y/N)?' % bucket_name).strip()
            while tag not in ['Y', 'N']:
                tag = raw_input('Please input (Y/N)').strip()
            if tag == 'N':
                return
            elif tag == 'Y':
                self.conn.create_bucket(bucket_name)
                bucket = self.conn.get_bucket(bucket_name)
        all_key_name_list = [i.name for i in bucket.get_all_keys()]
        # Ask before overwriting an existing object
        if key_name in all_key_name_list:
            while True:
                f_tag = raw_input(u'File already exists, overwrite it (Y/N)?: ').strip()
                if f_tag not in ['Y', 'N'] or len(f_tag) == 0:
                    continue
                elif f_tag == 'Y':
                    break
                elif f_tag == 'N':
                    return

        mp = bucket.initiate_multipart_upload(key_name)
        q = self.init_queue(filesize, self.chrunksize)
        # Worker threads pull chunks from the queue until it is empty
        for i in range(0, threadcnt):
            t = threading.Thread(target=self.upload_trunk, args=(filepath, mp, q, i))
            t.setDaemon(True)
            t.start()
        q.join()
        mp.complete_upload()

    # Download a file in chunks

    def download_chrunk(self, filepath, key_name, bucket_name, q, id):
        while not q.empty():
            chrunk = q.get()
            offset = chrunk.offset
            length = chrunk.length
            bucket = self.conn.get_bucket(bucket_name)
            # HTTP Range is inclusive at both ends, so the last byte is offset + length - 1
            resp = bucket.connection.make_request('GET', bucket_name, key_name, headers={'Range': "bytes=%d-%d" % (offset, offset + length - 1)})
            data = resp.read(length)
            # Write the chunk at its own offset in the pre-created local file
            fp = FileChunkIO(filepath, 'r+', offset=chrunk.offset, bytes=chrunk.length)
            fp.write(data)
            fp.close()
            q.task_done()

    # Download a file larger than 8 MB

    def download_file_multipart(self, filepath, key_name, bucket_name, threadcnt=8):
        all_bucket_name_list = [i.name for i in self.conn.get_all_buckets()]
        if bucket_name not in all_bucket_name_list:
            print 'Bucket %s does not exist, please try again' % (bucket_name)
            return
        else:
            bucket = self.conn.get_bucket(bucket_name)

        all_key_name_list = [i.name for i in bucket.get_all_keys()]
        if key_name not in all_key_name_list:
            print 'File %s does not exist, please try again' % (key_name)
            return
        else:
            key = bucket.get_key(key_name)

        # The target directory must already exist
        if not os.path.exists(os.path.dirname(filepath)):
            print 'Directory for %s does not exist, please create it and try again' % (filepath)
            return

        # Ask before overwriting an existing local file
        if os.path.exists(filepath):
            while True:
                d_tag = raw_input('File %s already exists, overwrite it (Y/N)?' % (key_name)).strip()
                if d_tag not in ['Y', 'N'] or len(d_tag) == 0:
                    continue
                elif d_tag == 'Y':
                    os.remove(filepath)
                    break
                elif d_tag == 'N':
                    return
        os.mknod(filepath)
        filesize = key.size
        q = self.init_queue(filesize, self.chrunksize)
        # Worker threads pull chunks from the queue until it is empty
        for i in range(0, threadcnt):
            t = threading.Thread(target=self.download_chrunk, args=(filepath, key_name, bucket_name, q, i))
            t.setDaemon(True)
            t.start()
        q.join()

    # Generate a download URL

    def generate_object_download_urls(self, key_name, bucket_name, valid_time=0):
        all_bucket_name_list = [i.name for i in self.conn.get_all_buckets()]
        if bucket_name not in all_bucket_name_list:
            print 'Bucket %s does not exist, please try again' % (bucket_name)
            return
        else:
            bucket = self.conn.get_bucket(bucket_name)

        all_key_name_list = [i.name for i in bucket.get_all_keys()]
        if key_name not in all_key_name_list:
            print 'File %s does not exist, please try again' % (key_name)
            return
        else:
            key = bucket.get_key(key_name)

        try:
            # Make the object publicly readable so the unsigned URL works
            key.set_canned_acl('public-read')
            download_url = key.generate_url(valid_time, query_auth=False, force_http=True)
            # Insert the gateway port after the host when it is not the default 80
            if self.port != 80:
                x1 = download_url.split('/')[0:3]
                x2 = download_url.split('/')[3:]
                s1 = u'/'.join(x1)
                s2 = u'/'.join(x2)
                s3 = ':%s/' % (str(self.port))
                download_url = s1 + s3 + s2
            print download_url
        except Exception as e:
            # Report the failure instead of silently ignoring it
            print 'Generating the URL for %s failed: %s' % (key_name, e)


# Object that dispatches the operations

class ceph_object(object):

def __init__(self,conn):

self.conn=conn def operation_cephCluster(self,filepath,command):

back = os.path.dirname(filepath).strip('/')

path = os.path.basename(filepath) for case in switch(command):

if case('delfile'):

print "正在删除:"+back+"__BACK文件夹中___FileName:"+path

self.conn.delete_file(path,back)

self.conn.list_single(back)

break

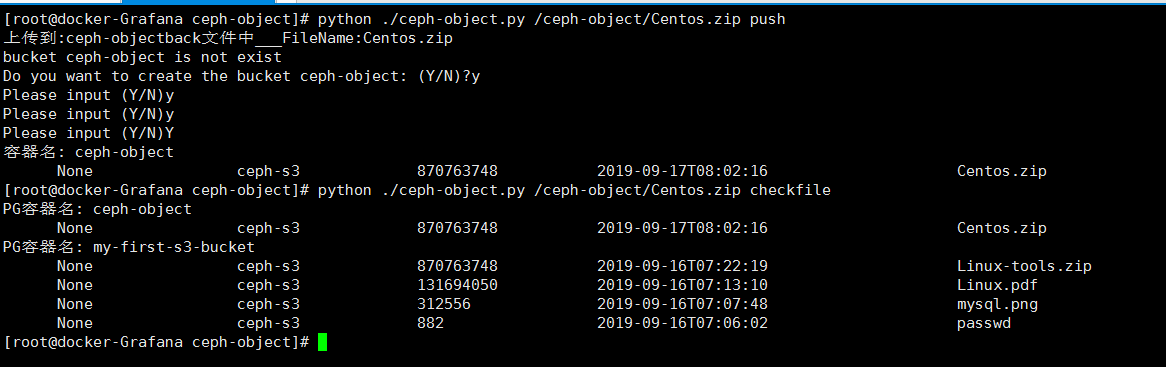

if case('push'): #未实现超过8MB文件上传判断

print "上传到:"+back+"__BACK文件夹中___FileName:"+path

self.conn.create_bucket(back)

self.conn.upload_file_multipart(filepath,path,back)

self.conn.list_single(back)

break

if case('pull'):#未实现超过8MB文件上传判断

print "下载:"+back+"back文件中___FileName:"++path

conn.download_file_multipart(filepath,path,back)

os.system('ls -al')

break

if case('delback'):

print "删除:"+back+"-back文件夹"

self.conn.delete_bucket(back)

self.conn.list_all()

break

if case('check'):

print "ceph-cluster所有back:"

self.conn.get_show_buckets()

break

if case('checkfile'):

self.conn.list_all()

break

if case('creaetback'):

self.conn.create_bucket(back)

self.conn.get_show_buckets()

break

if case(): # default, could also just omit condition or 'if True'

print "something else! input null"

# No need to break here, it'll stop anyway if __name__ == '__main__':

access_key = 'ZZNYFWKUQQD832IMIGJ2'

secret_key = '9eM9hIHt9q0XVNJ7WKhBPlC0hzUhOKhRhweHW8hO'

conn=CONNECTION(access_key,secret_key,'192.168.100.23',7480)

ceph_object(conn).operation_cephCluster(sys.argv[1], sys.argv[2]) #Linux 操作

# ceph_object(conn).operation_cephCluster('/my-first-s31-bucket/Linux.pdf','check')
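The chunk arithmetic used by `init_queue`, together with the byte range a download worker would request for each chunk, can be tried out without a cluster. The following is only a minimal sketch: `plan_chunks` and `range_header` are hypothetical helper names that do not appear in the script above, and the code is written so that it should run on both Python 2.7 and Python 3.

```python
import math


def plan_chunks(filesize, chunksize=8 << 20):
    """Return (part_number, offset, length) tuples that cover filesize bytes.

    Hypothetical helper mirroring the arithmetic of init_queue, but returning
    a plain list instead of filling a Queue.
    """
    count = int(math.ceil(filesize * 1.0 / chunksize))
    plan = []
    for i in range(count):
        offset = chunksize * i
        length = min(chunksize, filesize - offset)  # the last chunk may be shorter
        plan.append((i + 1, offset, length))
    return plan


def range_header(offset, length):
    """Build an HTTP Range value; both ends are inclusive,
    so the last byte requested is offset + length - 1."""
    return "bytes=%d-%d" % (offset, offset + length - 1)


# A 20 MiB file with the default 8 MiB chunk size is split into three parts.
for num, offset, length in plan_chunks(20 << 20):
    print("%d %s" % (num, range_header(offset, length)))
```

Because the ranges are inclusive, adjacent chunks do not overlap, and the final range ends exactly at the last byte of the file.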

And a couple more screenshots:

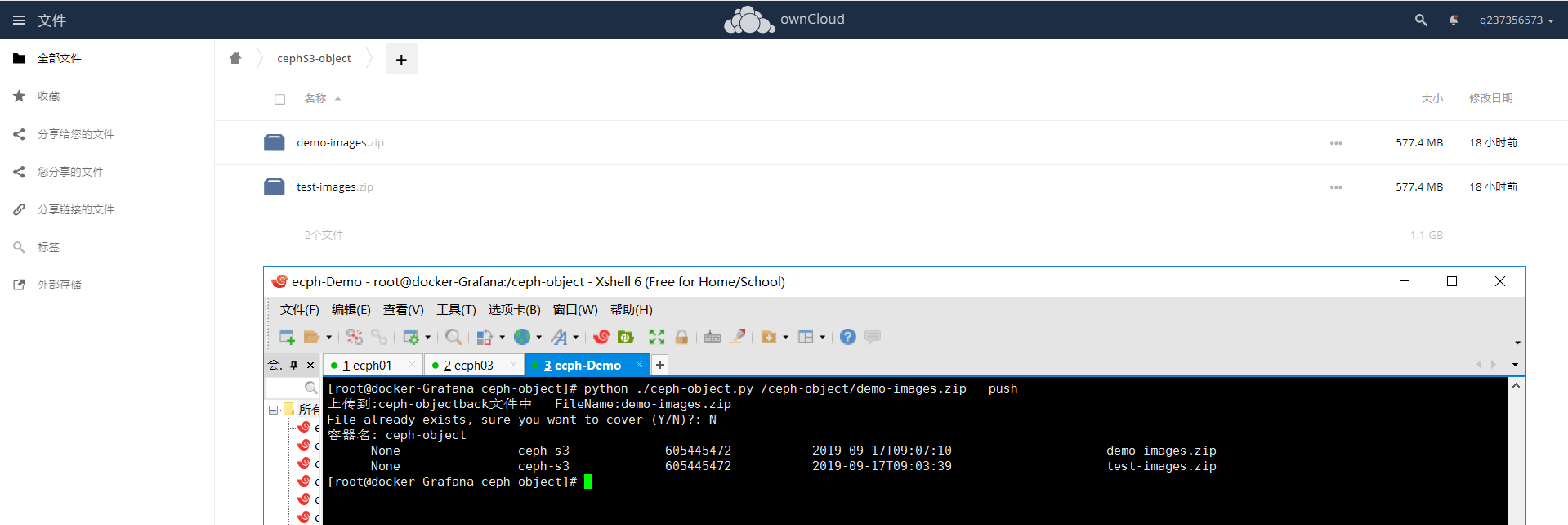

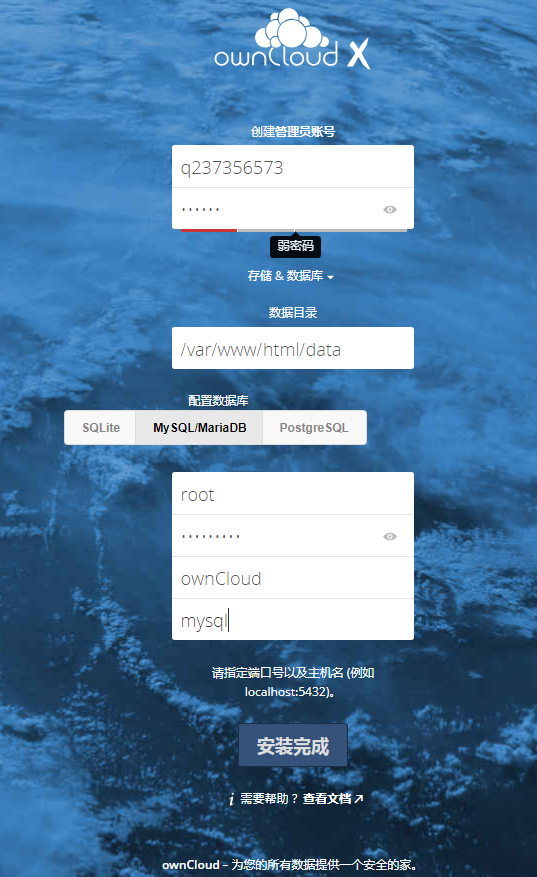
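The script takes a single path argument and derives both the bucket (the "back") and the object key from it, in the same way `operation_cephCluster` does with `os.path.dirname` and `os.path.basename`. The sketch below isolates that step; `split_bucket_and_key` is a hypothetical name used only for illustration.

```python
import os


def split_bucket_and_key(filepath):
    """Split '/bucket/file' into (bucket, key).

    Hypothetical helper: the directory part, with slashes stripped from both
    ends, becomes the bucket name and the file name becomes the object key.
    """
    bucket = os.path.dirname(filepath).strip('/')
    key = os.path.basename(filepath)
    return bucket, key


bucket, key = split_bucket_and_key('/my-first-s31-bucket/Linux.pdf')
print("bucket=%s key=%s" % (bucket, key))
```

One consequence of this design is that the same path is also used as the local file path for `push` and `pull`, so the local directory name has to match the bucket name. A deeper path such as `/a/b/file` would produce `a/b` as the bucket name, which is not a valid bucket name, so the script effectively expects exactly one directory level.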

Of course, you can also use docker and docker-compose to set up ownCloud and manage the ceph-cluster through a web UI.

The docker-compose file is as follows:

version: ''
services:
  owncloud:
    image: owncloud
    restart: always
    links:
      - mysql:mysql
    volumes:
      - "./owncloud-data/owncloud:/var/www/html/data"
    ports:
      - 80:80
  mysql:
    image: migs/mysql-5.7
    restart: always
    volumes:
      - "./mysql-data:/var/lib/mysql"
    ports:
      - 3306:3306
    environment:
      MYSQL_ROOT_PASSWORD: ""
      MYSQL_DATABASE: ownCloud

The configuration is as follows:

Final result
