YOLO-V3实战(darknet)

一. 准备工作

1)实验环境:

darknet 是由 C 和 CUDA 开发的,不需要配置其他深度学习的框架(如,tensorflow、caffe 等),支持 CPU 和 GPU 运算,而且安装过程非常简单。本文使用的 CUDA 的版本如下所示:

CUDA:9.0

CUDNN:7.0

2)下载 github 源码:

git clone https://github.com/pjreddie/darknet.git

3)配置darknet编译环境:

|

Ⅰ. 编译一直在darknet文件夹下; Ⅱ. 每次修改 Makefile 文件后需重新 make 一下才能生效; Ⅲ. 默认的 Makefile 是使用 CPU; |

修改Makefile编译环境配置文件:

GPU=1 # 是否打开GPU

CUDNN=1 # 是否打开cudnn

OPENCV=0 # 是否打开opencv

OPENMP=0

DEBUG=1 # 是否进行debug ARCH= -gencode arch=compute_61,code=compute_61 # 根据GPU计算能力选择对应数值(GTX 1080Ti:61、Tesla K80:37)官网查看:https://developer.nvidia.com/cuda-gpus

...

NVCC=/usr/local/cuda-9.0/bin/nvcc #修改nvcc路径

...

ifeq ($(GPU), 1) #修改cuda路径--黄色部分,若不需要更改删除黄色部分即可

COMMON+= -DGPU -I/usr/local/cuda-9.0/include

CFLAGS+= -DGPU

LDFLAGS+= -L/usr/local/cuda-9.0/lib64 -lcuda -lcudart -lcublas -lcurand

endif

ifeq ($(CUDNN), 1) #修改cudnn路径--黄色部分,若不需要更改删除黄色部分即可

COMMON+= -DCUDNN -I/usr/local/cuda-9.0/include

CFLAGS+= -DCUDNN

LDFLAGS+= -L/usr/local/cuda-9.0/lib64 -lcudnn

endif

重新编译

make clean

make

执行./darknet就可以执行编译

4) 实验环境测试

下载预训练模型权重yolov3.weights

下载地址:https://pjreddie.com/media/files/yolov3.weights

基于yolov3.weights模型权重的测试

测试单张图片(下面两个指令相同)

./darknet detector test cfg/coco.data cfg/yolov3.cfg yolov3.weights data/dog.jpg

./darknet detect cfg/yolov3.cfg yolov3.weights data/dog.jpg

测试多张图片

./darknet detect cfg/yolov3.cfg yolov3.weights

~根据提示输入图片路径

输出:保存./darknet目录下的predict.png

5) ./darknet编译格式

./darknet detector test <data_cfg> <models_cfg> <weights> <test_file> [-thresh] [-out]

./darknet detector train <data_cfg> <models_cfg> <weights> [-thresh] [-gpu] [-gpus] [-clear]

./darknet detector valid <data_cfg> <models_cfg> <weights> [-out] [-thresh]

./darknet detector recall <data_cfg> <models_cfg> <weights> [-thresh] '<>'必选项,’[ ]‘可选项

data_cfg:数据配置文件,eg:cfg/voc.data

models_cfg:模型配置文件,eg:cfg/yolov3-voc.cfg

weights:权重配置文件,eg:weights/yolov3.weights

test_file:测试文件,eg:*/*/*/test.txt

-thresh:显示被检测物体中confidence大于等于 [-thresh] 的bounding-box,默认0.005

-out:输出文件名称,默认路径为results文件夹下,eg:-out "" //输出class_num个文件,文件名为class_name.txt;若不选择此选项,则默认输出文件名为comp4_det_test_"class_name".txt

-i/-gpu:指定单个gpu,默认为0,eg:-gpu 2

-gpus:指定多个gpu,默认为0,eg:-gpus 0,1,2

二. 实验步骤

|

基于darknet在VOC格式的数据集上训练yolov3 |

1)下载 Pascal VOC 2007 和 2012 的数据集

wget https://pjreddie.com/media/files/VOCtrainval_11-May-2012.tar

wget https://pjreddie.com/media/files/VOCtrainval_06-Nov-2007.tar

wget https://pjreddie.com/media/files/VOCtest_06-Nov-2007.tar

tar xf VOCtrainval_11-May-2012.tar

tar xf VOCtrainval_06-Nov-2007.tar

tar xf VOCtest_06-Nov-2007.tar

注:若非voc格式的数据集需先生成voc格式的数据集,数据集的目录严格按照voc数据集的目录结构

2)生成 VOC 数据集的标签

darknet 需要一个“.txt”格式的标签文件,每行表示一张图像的信息,包括(x, y, w, h)格式为:<object-class> <x> <y> <width> <height>

darknet 官网提供了一个针对 VOC 数据集,处理标签的脚本,darknet/scripts文件夹下的voc_label.py文件,若无执行如下命令下载文件:

wget https://pjreddie.com/media/files/voc_label.py # 获取脚本

修改 voc_label.py(4处)

①sets=[('', 'train'), ('', 'val'), ('', 'test')] #替换为自己的数据集

②classes = ["head", "eye", "nose"] #修改为自己的类别

③in_file = open('VOCdevkit/VOC%s/Annotations/%s.xml'%(year, image_id)) #将数据集放于当前目录下

④os.system("cat 2007_train.txt 2007_val.txt > train.txt") #修改为自己的数据集用作训练

修改完成后执行:

python voc_label.py # 执行脚本,获得所需的“.txt”文件

生成的xml文件在 VOCdevkit/VOC%s/labels 目录下

标签处理完成后,在voc_label.py所在目录下生成如下几个文件:

2007_text.txt、2007_train.txt、2007_val.txt、2012_text.txt、2012_train.txt、train.txt、train_all.txt

3)修改配置文件

Ⅰ. 数据配置文件——cfg/voc.data

classes= 20 # 类别总数

train = /home/xieqi/project/train.txt # 训练数据所在的位置

valid = /home/xieqi/project/2007_test.txt # 测试数据所在的位置

names = data/voc.names # 修改见voc.names

backup = backup # 输出的权重信息保存的文件夹

eval =

results =

Ⅱ. 模型配置文件——cfg/yolov3-voc.cfg

batch= # 一批训练样本的样本数量,每batch个样本更新一次参数

subdivisions= # 它会让你的每一个batch不是一下子都丢到网络里。而是分成subdivision对应数字的份数,一份一份的跑完后,在一起打包算作完成一次iteration

width= # 只可以设置成32的倍数

height= # 只可以设置成32的倍数 channels= # 若为灰度图,则chennels=1,另外还需修改/scr/data.c文件中的load_data_detection函数;若为RGB则 channels=3 ,无需修改/scr/data.c文件 momentum=0.9 # 最优化方法的动量参数,这个值影响着梯度下降到最优值得速度

decay=0.0005 # 权重衰减正则项,防止过拟合

angle= # 通过旋转角度来生成更多训练样本

saturation = 1.5 # 通过调整饱和度来生成更多训练样本

exposure = 1.5 # 通过调整曝光量来生成更多训练样本

hue=. # 通过调整色调来生成更多训练样本 learning_rate=0.001 # 学习率, 刚开始训练时, 以 0.01 ~ 0.001 为宜, 一定轮数过后,逐渐减缓。

burn_in= # 在迭代次数小于burn_in时,其学习率的更新有一种方式,大于burn_in时,才采用policy的更新方式

max_batches = # 训练步数

policy=steps # 学习率调整的策略

steps=, # 开始衰减的步数

scales=.,. # 在第40000和第45000次迭代时,学习率衰减10倍

...

[convolutional]——YOLO层前一层卷积层

...

filters= # 每一个[yolo]层前的最后一个卷积层中的 filters=num(yolo层个数)*(classes+)

... [yolo]

mask = ,,

anchors = ,, ,, ,, ,, ,, ,, ,, ,, , #如果想修改默认anchors数值,使用k-means即可;

classes= # 修改为自己的类别数

num= # 每个grid cell预测几个box,和anchors的数量一致。调大num后训练时Obj趋近0的话可以尝试调大object_scale

jitter=. # 利用数据抖动产生更多数据, jitter是crop的参数, jitter=.,就是在0~.3中进行crop

ignore_thresh = . # 决定是否需要计算IOU误差的参数,大于thresh,IOU误差不会夹在cost function中

truth_thresh =

random= # 如果为1,每次迭代图片大小随机从320到608,步长为32,如果为0,每次训练大小与输入大小一致

...

Ⅲ. 标签配置文件——data/voc.names

head #自己需要探测的类别,一行一个

eye

nose

Ⅳ. 修改数据格式——scr/data.c

data load_data_detection(int n, char **paths, int m, int w, int h, int boxes, int classes, float jitter, float hue, float saturation, float exposure)

{

char **random_paths = get_random_paths(paths, n, m);

int i;

data d = {};

d.shallow = ; d.X.rows = n;

d.X.vals = calloc(d.X.rows, sizeof(float*));

d.X.cols = h*w; //灰阶图

//d.X.cols = h*w*3; //RGB图 ...

4)下载预训练的参数(卷积权重)

“darknet53.conv.74”是使用 Imagenet 数据集进行预训练:

wget https://pjreddie.com/media/files/darknet53.conv.74 # 下载预训练的网络模型参数

获取其他模型类似 darknet53.conv.74 的预训练权重

Ⅰ. 下载官方预训练模型的权重

https://pjreddie.com/darknet/yolo/ //COCO数据集训练的

https://pjreddie.com/darknet/imagenet/ //Imagenet数据集训练的

eg: wget https://pjreddie.com/media/files/yolov3-tiny.weights

Ⅱ. 转换为类似darknet.conv.74的预训练权重

eg: ./darknet partial cfg/yolov3-tiny yolov3-tiny.weights yolov-tiny.conv.

5)训练模型:

Ⅰ. 单GPU训练: ./darknet detector train <data_cfg> <train_cfg> <weights> -gpu <gpu_id>

./darknet detector train cfg/voc.data cfg/yolov3-voc.cfg darknet53.conv.74 -i 1

Ⅱ. 多GPU训练: ./darknet detector train <data_cfg> <model_cfg> <weights> -gpus <gpu_list>

./darknet detector train cfg/voc.data cfg/yolov3-voc.cfg darknet53.conv. -gpus ,,,

Ⅲ. 从checkpoint继续训练

./darknet detector train cfg/voc.data cfg/yolov3-voc.cfg backup/yolov3-voc.backup -gpus 0,1,2,3

Ⅳ. CPU训练:

./darknet detector train <data_cfg> <model_cfg> <weights> -nogpu

Ⅴ. 生成loss-iter曲线

在执行训练命令的时候加一下管道,tee一下log:

./darknet detector train cfg/voc.data cfg/yolov3-voc.cfg | tee result/log/training.log

将下面的python代码保存为drawcurve.py。并执行

python drawcurve.py training.log 0

~drawcurve.py

import argparse

import sys

import matplotlib.pyplot as plt

def main(argv):

parser = argparse.ArgumentParser()

parser.add_argument("log_file", help = "path to log file" )

parser.add_argument( "option", help = "0 -> loss vs iter" )

args = parser.parse_args()

f = open(args.log_file)

lines = [line.rstrip("\n") for line in f.readlines()]

# skip the first 3 lines

lines = lines[3:]

numbers = {'','','','','','','','','',''}

iters = []

loss = []

for line in lines:

if line[0] in numbers:

args = line.split(" ")

if len(args) >3:

iters.append(int(args[0][:-1]))

loss.append(float(args[2]))

plt.plot(iters,loss)

plt.xlabel('iters')

plt.ylabel('loss')

plt.grid()

plt.show()

if __name__ == "__main__":

main(sys.argv)

6)训练过程:

训练的log格式如下:

Loaded: 4.533954 seconds

Region Avg IOU: 0.262313, Class: 1.000000, Obj: 0.542580, No Obj: 0.514735, Avg Recall: 0.162162, count:

Region Avg IOU: 0.175988, Class: 1.000000, Obj: 0.499655, No Obj: 0.517558, Avg Recall: 0.070423, count:

Region Avg IOU: 0.200012, Class: 1.000000, Obj: 0.483404, No Obj: 0.514622, Avg Recall: 0.075758, count:

Region Avg IOU: 0.279284, Class: 1.000000, Obj: 0.447059, No Obj: 0.515849, Avg Recall: 0.134615, count:

: 629.763611, 629.763611 avg, 0.001000 rate, 6.098687 seconds, images

Loaded: 2.957771 seconds

Region Avg IOU: 0.145857, Class: 1.000000, Obj: 0.051285, No Obj: 0.031538, Avg Recall: 0.069767, count:

Region Avg IOU: 0.257284, Class: 1.000000, Obj: 0.048616, No Obj: 0.027511, Avg Recall: 0.078947, count:

Region Avg IOU: 0.174994, Class: 1.000000, Obj: 0.030197, No Obj: 0.029943, Avg Recall: 0.088889, count:

Region Avg IOU: 0.196278, Class: 1.000000, Obj: 0.076030, No Obj: 0.030472, Avg Recall: 0.087719, count:

: 84.804230, 575.267700 avg, 0.001000 rate, 5.959159 seconds, images

iter 总损失 平均损失 学习率 花费时间 参与训练的图片总数

| Region | cfg文件中yolo-layer的索引 |

| Avg IOU | 当前迭代中,预测的box与标注的box的平均交并比,越大越好,期望数值为1 |

| Class | 标注物体的分类准确率,越大越好,期望数值为1 |

| obj | 越大越好,期望数值为1 |

| No obj | 越小越好,但不为零 |

| .5R | 以IOU=0.5为阈值时候的recall; recall = 检出的正样本/实际的正样本 |

| .75R | 以IOU=0.75为阈值时候的recall |

| count | 正样本数目 |

Region Avg IOU: 0.798032, Class: 0.559781, Obj: 0.515851, No Obj: 0.006533, .5R: 1.000000, .75R: 1.000000, count:

Region Avg IOU: 0.725307, Class: 0.830518, Obj: 0.506567, No Obj: 0.000680, .5R: 1.000000, .75R: 0.750000, count:

Region Avg IOU: 0.579333, Class: 0.322556, Obj: 0.020537, No Obj: 0.000070, .5R: 1.000000, .75R: 0.000000, count:

以上输出显示了所有训练图片的一个批次(batch),批次大小的划分根据我们在 .cfg 文件中设置的subdivisions参数。

在我使用的 .cfg 文件中 batch = 64 ,subdivision = 16,所以在训练输出中,训练迭代包含了16组,每组又包含了4张图片,跟设定的batch和subdivision的值一致。

但是此处有16*3条信息,每组包含三条信息,分别是:Region 82、Region 94、Region 106。

三个尺度上预测不同大小的框:

82卷积层 为最大的预测尺度,使用较大的mask,但是可以预测出较小的物体;

94卷积层 为中间的预测尺度,使用中等的mask;

106卷积层为最小的预测尺度,使用较小的mask,可以预测出较大的物体

每个batch都会有这样一个输出:

: 1.350835, 1.386559 avg, 0.001000 rate, 3.323842 seconds, images

batch 总损失 平均损失 学习率 花费时间 参与训练的图片总数 = 2706 * 64

三. 模型评价

1)更改配置文件:

Ⅰ. 模型参数(cfg/yoloc3.cfg):

batch=1

subdivisions=1

| 注:测评模型时batch、subdivisions必须为1 |

Ⅱ. 更改为批处理(example/detector.c):

①. 在detector.c中增加头文件:

#include <unistd.h> /* Many POSIX functions (but not all, by a large margin) */

#include <fcntl.h> /* open(), creat() - and fcntl() */

②. 在前面添加GetFilename(char *fullname)函数

#include <unistd.h>

#include <fcntl.h> #include "darknet.h"

#include <sys/stat.h>

#include <stdio.h>

#include <time.h>

#include <sys/types.h> static int coco_ids[] = {,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

36,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

67,,,,,,,,,,,,,,,,,,,};

//产生的文件名与原文件名相同(去除路径和格式)

char *GetFilename(char *fullname)

{

int from,to,i;

char *newstr,*temp;

if(fullname!=NULL){

//if not find dot

if((temp=strchr(fullname,'.'))==NULL){

newstr = fullname;

}

else

{

from = strlen(fullname) - strlen(temp);

to = (temp-fullname);

//the first dot's index

for (i=from; i<=to; i--){

if (fullname[i]=='.') break;//find the last dot

}

newstr = (char*)malloc(i+);

strncpy(newstr,fullname,i);

*(newstr+i)=;

}

}

static char name[] = {""};

char *q = strrchr(newstr,'/') + ;

strncpy(name,q,);

return name;

}

③. 用下面代码替换detector.c文件的void test_detector函数(注意有3处要改成自己的路径)

void test_detector(char *datacfg, char *cfgfile, char *weightfile, char *filename, float thresh, float hier_thresh, char *outfile, int fullscreen)

{

list *options = read_data_cfg(datacfg);

char *name_list = option_find_str(options, "names", "data/names.list");

char **names = get_labels(name_list); image **alphabet = load_alphabet();

network *net = load_network(cfgfile, weightfile, 0);

set_batch_network(net, 1);

srand(2222222);

double time;

char buff[256];

char *input = buff;

float nms=.45;

int i=0;

while(1){

if(filename){

strncpy(input, filename, 256);

image im = load_image_color(input,0,0);

image sized = letterbox_image(im, net->w, net->h);

//image sized = resize_image(im, net->w, net->h);

//image sized2 = resize_max(im, net->w);

//image sized = crop_image(sized2, -((net->w - sized2.w)/2), -((net->h - sized2.h)/2), net->w, net->h);

//resize_network(net, sized.w, sized.h);

layer l = net->layers[net->n-1]; float *X = sized.data;

time=what_time_is_it_now();

network_predict(net, X);

printf("%s: Predicted in %f seconds.\n", input, what_time_is_it_now()-time);

int nboxes = 0;

detection *dets = get_network_boxes(net, im.w, im.h, thresh, hier_thresh, 0, 1, &nboxes);

//printf("%d\n", nboxes);

//if (nms) do_nms_obj(boxes, probs, l.w*l.h*l.n, l.classes, nms);

if (nms) do_nms_sort(dets, nboxes, l.classes, nms);

draw_detections(im, dets, nboxes, thresh, names, alphabet, l.classes);

free_detections(dets, nboxes);

if(outfile)

{

save_image(im, outfile);

}

else{

save_image(im, "predictions");

#ifdef OPENCV

cvNamedWindow("predictions", CV_WINDOW_NORMAL);

if(fullscreen){

cvSetWindowProperty("predictions", CV_WND_PROP_FULLSCREEN, CV_WINDOW_FULLSCREEN);

}

show_image(im, "predictions");

cvWaitKey(0);

cvDestroyAllWindows();

#endif

}

free_image(im);

free_image(sized);

if (filename) break;

}

else {

printf("Enter Image Path: ");

fflush(stdout);

input = fgets(input, 256, stdin);

if(!input) return;

strtok(input, "\n"); list *plist = get_paths(input);

char **paths = (char **)list_to_array(plist);

printf("Start Testing!\n");

int m = plist->size;

if(access("/home/xieqi/darknet/data/out_img",0)==-1) //修改成自己的路径

{

if (mkdir("/home/xieqi/darknet/data/out_img",0777)) //修改成自己的路径

{

printf("creat file bag failed!!!");

}

}

for(i = 0; i < m; ++i){

char *path = paths[i];

image im = load_image_color(path,0,0);

image sized = letterbox_image(im, net->w, net->h);

//image sized = resize_image(im, net->w, net->h);

//image sized2 = resize_max(im, net->w);

//image sized = crop_image(sized2, -((net->w - sized2.w)/2), -((net->h - sized2.h)/2), net->w, net->h);

//resize_network(net, sized.w, sized.h);

layer l = net->layers[net->n-1]; float *X = sized.data;

time=what_time_is_it_now();

network_predict(net, X);

printf("Try Very Hard:");

printf("%s: Predicted in %f seconds.\n", path, what_time_is_it_now()-time);

int nboxes = 0;

detection *dets = get_network_boxes(net, im.w, im.h, thresh, hier_thresh, 0, 1, &nboxes);

//printf("%d\n", nboxes);

//if (nms) do_nms_obj(boxes, probs, l.w*l.h*l.n, l.classes, nms);

if (nms) do_nms_sort(dets, nboxes, l.classes, nms);

draw_detections(im, dets, nboxes, thresh, names, alphabet, l.classes);

free_detections(dets, nboxes);

if(outfile){

save_image(im, outfile);

}

else{

char b[2048];

sprintf(b,"/home/xieqi/darknet/data/out_img/%s",GetFilename(path)); //修改成自己的路径 save_image(im, b);

printf("save %s successfully!\n",GetFilename(path));

#ifdef OPENCV

cvNamedWindow("predictions", CV_WINDOW_NORMAL);

if(fullscreen){

cvSetWindowProperty("predictions", CV_WND_PROP_FULLSCREEN, CV_WINDOW_FULLSCREEN);

}

show_image(im, "predictions");

cvWaitKey(0);

cvDestroyAllWindows();

#endif

} free_image(im);

free_image(sized);

if (filename) break;

}

}

}

}

④. validate_detector_recall函数定义和调用改为:

void validate_detector_recall(char *datacfg, char *cfgfile, char *weightfile)

validate_detector_recall(datacfg, cfg, weights);

⑤.validate_detector_recall内的plist和paths的如下初始化代码:

list *plist = get_paths("data/voc.2007.test");

char **paths = (char **)list_to_array(plist);

修改为:

list *options = read_data_cfg(datacfg);

char *valid_images = option_find_str(options, "valid", "data/train.list");

list *plist = get_paths(valid_images);

char **paths = (char **)list_to_array(plist);

Ⅲ. 在darknet下重新make

make clean

make

2). 测试单张图片

Ⅰ. ./darknet detector test <data_cfg> <models_cfg> <weights> <image_path> # 本次测试无opencv支持

Ⅱ. <models_cfg>文件中batch和subdivisions两项必须为1;

Ⅲ. 测试时还可以用-thresh和-hier选项指定对应参数;

Ⅳ. 结果都保存在./data/out_img 文件夹下

./darknet detector test cfg/voc.data cfg/yolov3-voc.cfg backup/yolov3-voc_final.weights Eminem.jpg # 测试单张图片

3). 生成预测结果

Ⅰ. 批量测试——输出文本检测结果

./darknet detector valid cfg/voc.data cfg/yolov3-voc.cfg backup/yolov3-voc_final.weights -i 1 -out ""

① ./darknet detector valid <data_cfg> <models_cfg> <weights>;

② 结果生成在<data_cfg>的指定的目录下以<out_file>开头的若干文件中,若<data_cfg>没有指定results,那么默认为<darknet_root>/results;

③ <models_cfg>文件中batch和subdivisions两项必须为1;

④ 若-out 未指定字符串,则在results文件夹下生成comp4_det_test_[类名].txt文件并保存测试结果;

⑤ 本次实验在results文件夹下生成 [类名].txt 文件;

Ⅱ. 批量测试——输出图片检测结果

./darknet detector test cfg/voc.data cfg/yolov3-voc.cfg backup/yolov3-voc_final.weights -i 2 #enter Enter Image Path: data/voc/2007_test.txt

① ./darknet detector test <data_cfg> <models_cfg> <weights>;

② <models_cfg>文件中batch和subdivisions两项必须为1;

③ 本次实验结果,在<darknet_root>/data/out_img文件夹下(detector.c/test_detector函数更改的路径),文件名以原图片名前6位命名(detector.c/GetFilename函数定义的位数);

4). 计算recall(执行这个命令需要修改detector.c文件,修改信息请参考“detector.c修改”)

Ⅰ. ./darknet detector recall <data_cfg> <test_cfg> <weights>;

Ⅱ. <test_cfg>文件中batch和subdivisions两项必须为1;

Ⅲ. 输出在stderr里,重定向时请注意;

Ⅳ. RPs/Img、IOU、Recall都是到当前测试图片的均值;

Ⅴ. detector.c中对目录处理有错误,可以参照validate_detector对validate_detector_recall最开始几行的处理进行修改;

./darknet detector valid cfg/voc.data cfg/yolov3-voc.cfg backup/yolov3-voc_20000.weights

最后得到的log如下:

306 746 783 RPs/Img: 21.45 IOU: 75.59% Recall:95.27%

307 748 785 RPs/Img: 21.43 IOU: 75.62% Recall:95.29%

308 750 787 RPs/Img: 21.42 IOU: 75.59% Recall:95.30%

309 752 789 RPs/Img: 21.43 IOU: 75.62% Recall:95.31%

310 754 791 RPs/Img: 21.44 IOU: 75.63% Recall:95.32%

No. Correct total

输出的具体格式为:

| Number | 处理的第几张图 |

| Correct |

正确的识别出了多少bbox。这个值算出来的步骤是这样的,丢进网络一张图片,网络会预测出很多bbox,每个bbox都有其置信概率,概率大于threshold的bbox与实际的bbox,也就是labels中txt的内容计算IOU, 找出IOU最大的bbox,如果这个最大值大于预设的IOU的threshold,那么correct加一 |

| Total | 实际有多少个bbox |

| Rps/img | 平均每个图片会预测出来多少个bbox |

| IOU | 预测出的bbox和实际标注的bbox的交集 除以 他们的并集。显然,这个数值越大,说明预测的结果越好 |

| Recall | 召回率, 检测出物体的个数 除以 标注的所有物体个数。通过代码我们也能看出来就是Correct除以Total的值 |

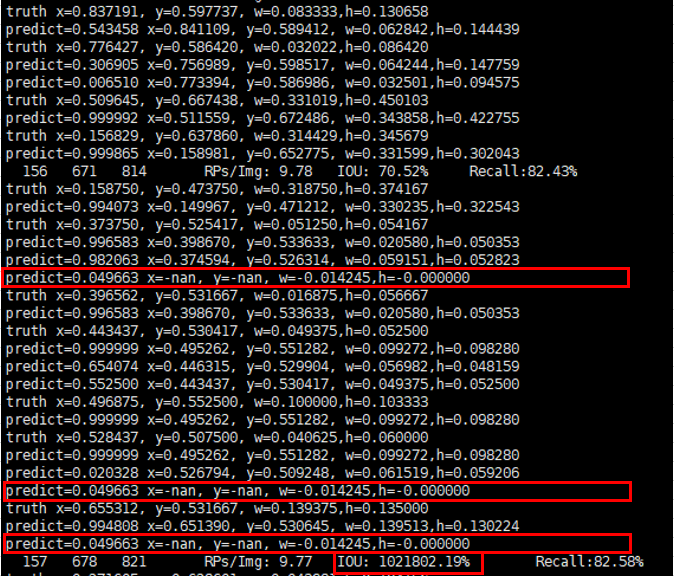

error:

本次实验出现 IOU:inf% —— 交并比 数值爆炸

打印bbox详细信息(修改detector.c/validate_detector_recall)

for (j = ; j < num_labels; ++j) {

++total;

box t = {truth[j].x, truth[j].y, truth[j].w, truth[j].h};

printf("truth x=%f, y=%f, w=%f,h=%f\n",truth[j].x,truth[j].y,truth[j].w,truth[j].h);//添加代码

float best_iou = ;

for(k = ; k < l.w*l.h*l.n; ++k){

float iou = box_iou(dets[k].bbox, t);

if(dets[k].objectness > thresh && iou > best_iou){

printf("predict=%f x=%f, y=%f, w=%f,h=%f\n",dets[k].objectness,dets[k].bbox.x,dets[k].bbox.y,dets[k].bbox.w,dets[k].bbox.h);//添加代码

best_iou = iou;

}

}

由显示结果可以看出,预测框的中心坐标x和y出现-nan

解决方法:修改函数

void validate_detector_recall(char *datacfg, char *cfgfile, char *weightfile)

{

...

//layer l = net->layers[net->n-1]; //注释掉

...

for(k = ; k < l.w*l.h*l.n; ++k){ //改为for(k = 0; k < nboxes; ++k){

...

5). 计算AP、mAP

先使用 3)-Ⅰ计算出验证集结果,再使用py-faster-rcnn下的voc_eval.py计算AP、mAP

Ⅰ. 下载voc_eval.py到 darknet 的根目录

voc_eval.py下载地址:https://github.com/rbgirshick/py-faster-rcnn/blob/master/lib/datasets/voc_eval.py

根据标签修改voc_eval.py的内容

.xml文件结构中

①若无pose

22 #obj_struct['pose'] = obj.find('pose').text # 注释掉

②若无truncated

23 #obj_struct['truncated'] = int(obj.find('truncated').text) # 注释掉

③若无difficult

24 #obj_struct['difficult'] = int(obj.find('difficult').text) # 注释掉

137 #if use_diff: # 注释掉

138 #difficult = np.array([False for x in R]).astype(np.bool) # 注释掉

139 #else: # 注释掉

140 #difficult = np.array([x['difficult'] for x in R]).astype(np.bool) # 注释掉

142 npos = npos +len(R) # 更改npos = npos + sum(~difficult)

145 #'difficult': difficult, # 注释掉

197 #if not R['difficult'][jmax]: # 注释掉

Ⅱ. 计算单类AP

①. 在darknet根目录下新建computer_mAP.py

#!/home/xieqi/anaconda2/envs/py2.7/bin python2.7

# -*- coding:utf-8 -*- from voc_eval import voc_eval print(voc_eval('/home/xieqi/darknet/results/{}.txt', '/home/xieqi/project/traffic_object_detection/voc_data/Annotations/{}.xml',

'/home/xieqi/project/traffic_object_detection/voc_data/ImageSets/Main/test.txt', 'vehicle', '/home/xieqi/project/traffic_object_detection/result/')) # "第一个参数为detector valid 按类别分类后的txt路径"

# "第二个参数为验证集对应的xml标签路径"

# "第三个为验证集txt文本路径,内容必须是无路径无后缀的图片名"

# "第四个为待验证的类别名"

# "第五个为pkl文件保存的路径"

②. 用python2执行computer_mAP.py

① 重复执行,检测其他类别需要删除生成的annots.pkl文件或改变computer_mAP.py中pkl文件保存的路径

② 输出两个array(),分别为rec和prec,最后一个数字为单类AP。

Ⅲ. 计算总mAP

在darknet根目录下新建computer_all_mAP.py

#!/home/xieqi/anaconda2/envs/py2.7/bin python2.7

# -*- coding:utf-8 -*- from voc_eval import voc_eval import os current_path = os.getcwd()

results_path = current_path+"/results"

sub_files = os.listdir(results_path) mAP = []

for i in range(len(sub_files)):

class_name = sub_files[i].split(".txt")[0]

rec, prec, ap = voc_eval('/home/xieqi/darknet/results/{}.txt',

'/home/xieqi/project/traffic_object_detection/voc_data/Annotations/{}.xml',

'/home/xieqi/project/traffic_object_detection/voc_data/ImageSets/Main/test.txt',

class_name, '/home/xieqi/project/traffic_object_detection/result/mAP')

print("{} :\t {} ".format(class_name, ap))

mAP.append(ap) mAP = tuple(mAP) print("***************************")

print("mAP :\t {}".format( float( sum(mAP)/len(mAP)) )) # results文件夹只能有'类名.txt'文件

# test.txt文件包含所有results中txt文件的图片名(保证是共同的验证集)

# 保证最后的输出路径下无pkl文件。

# 上述代码中的ap就是针对输入单一类别计算出的AP。

四、文件解析

1). ~detector.c

#include <unistd.h>

#include <fcntl.h> #include "darknet.h"

#include <sys/stat.h>

#include <stdio.h>

#include <time.h>

#include <sys/types.h> static int coco_ids[] = {,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,

,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,}; char *GetFilename(char *fullname)

{

int from,to,i;

char *newstr,*temp;

if(fullname!=NULL){

//if not find dot

if((temp=strchr(fullname,'.'))==NULL){

newstr = fullname;

}

else

{

from = strlen(fullname) - strlen(temp);

to = (temp-fullname);

//the first dot's index

for (i=from; i<=to; i--){

if (fullname[i]=='.') break;//find the last dot

}

newstr = (char*)malloc(i+);

strncpy(newstr,fullname,i);

*(newstr+i)=;

}

}

static char name[] = {""};

char *q = strrchr(newstr,'/') + ;

strncpy(name,q,);

return name;

} void train_detector(char *datacfg, char *cfgfile, char *weightfile, int *gpus, int ngpus, int clear)

{

list *options = read_data_cfg(datacfg); //解析data文件,用自定义链表options存储训练集基本信息,函数位于option_list.c

char *train_images = option_find_str(options, "train", "data/train.list"); //从options中找训练集

char *backup_directory = option_find_str(options, "backup", "/backup/"); //从options中找backup路径 srand(time()); //初始化随机种子数

char *base = basecfg(cfgfile); //此函数位于utils.c,返回cfg文件不带后缀的名字

printf("%s\n", base);

float avg_loss = -;

network **nets = calloc(ngpus, sizeof(network)); srand(time());

int seed = rand();

int i;

for(i = ; i < ngpus; ++i){

srand(seed);

#ifdef GPU

cuda_set_device(gpus[i]);

#endif

nets[i] = load_network(cfgfile, weightfile, clear);

nets[i]->learning_rate *= ngpus;

}

srand(time());

network *net = nets[]; int imgs = net->batch * net->subdivisions * ngpus;

printf("Learning Rate: %g, Momentum: %g, Decay: %g\n", net->learning_rate, net->momentum, net->decay);

data train, buffer; layer l = net->layers[net->n - ]; int classes = l.classes;

float jitter = l.jitter; list *plist = get_paths(train_images);

//int N = plist->size;

char **paths = (char **)list_to_array(plist); load_args args = get_base_args(net);

args.coords = l.coords;

args.paths = paths;

args.n = imgs;

args.m = plist->size;

args.classes = classes;

args.jitter = jitter;

args.num_boxes = l.max_boxes;

args.d = &buffer;

args.type = DETECTION_DATA;

//args.type = INSTANCE_DATA;

args.threads = ; pthread_t load_thread = load_data(args);

double time;

int count = ;

//while(i*imgs < N*120){

while(get_current_batch(net) < net->max_batches){

if(l.random && count++% == ){

printf("Resizing\n");

int dim = (rand() % + ) * ;

if (get_current_batch(net)+ > net->max_batches) dim = ;

//int dim = (rand() % 4 + 16) * 32;

printf("%d\n", dim);

args.w = dim;

args.h = dim; pthread_join(load_thread, );

train = buffer;

free_data(train);

load_thread = load_data(args); #pragma omp parallel for

for(i = ; i < ngpus; ++i){

resize_network(nets[i], dim, dim);

}

net = nets[];

}

time=what_time_is_it_now();

pthread_join(load_thread, );

train = buffer;

load_thread = load_data(args); /*

int k;

for(k = 0; k < l.max_boxes; ++k){

box b = float_to_box(train.y.vals[10] + 1 + k*5);

if(!b.x) break;

printf("loaded: %f %f %f %f\n", b.x, b.y, b.w, b.h);

}

*/

/*

int zz;

for(zz = 0; zz < train.X.cols; ++zz){

image im = float_to_image(net->w, net->h, 3, train.X.vals[zz]);

int k;

for(k = 0; k < l.max_boxes; ++k){

box b = float_to_box(train.y.vals[zz] + k*5, 1);

printf("%f %f %f %f\n", b.x, b.y, b.w, b.h);

draw_bbox(im, b, 1, 1,0,0);

}

show_image(im, "truth11");

cvWaitKey(0);

save_image(im, "truth11");

}

*/ printf("Loaded: %lf seconds\n", what_time_is_it_now()-time); time=what_time_is_it_now();

float loss = ;

#ifdef GPU

if(ngpus == ){

loss = train_network(net, train);

} else {

loss = train_networks(nets, ngpus, train, );

}

#else

loss = train_network(net, train);

#endif

if (avg_loss < ) avg_loss = loss;

avg_loss = avg_loss*. + loss*.; i = get_current_batch(net);

printf("%ld: %f, %f avg, %f rate, %lf seconds, %d images\n", get_current_batch(net), loss, avg_loss, get_current_rate(net), what_time_is_it_now()-time, i*imgs);

if(i%==){

#ifdef GPU

if(ngpus != ) sync_nets(nets, ngpus, );

#endif

char buff[];

sprintf(buff, "%s/%s.backup", backup_directory, base);

save_weights(net, buff);

}

if(i%== || (i < && i% == )){

#ifdef GPU

if(ngpus != ) sync_nets(nets, ngpus, );

#endif

char buff[];

sprintf(buff, "%s/%s_%d.weights", backup_directory, base, i);

save_weights(net, buff);

}

free_data(train);

}

#ifdef GPU

if(ngpus != ) sync_nets(nets, ngpus, );

#endif

char buff[];

sprintf(buff, "%s/%s_final.weights", backup_directory, base);

save_weights(net, buff);

} static int get_coco_image_id(char *filename)

{

char *p = strrchr(filename, '/');

char *c = strrchr(filename, '_');

if(c) p = c;

return atoi(p+);

} static void print_cocos(FILE *fp, char *image_path, detection *dets, int num_boxes, int classes, int w, int h)

{

int i, j;

int image_id = get_coco_image_id(image_path);

for(i = ; i < num_boxes; ++i){

float xmin = dets[i].bbox.x - dets[i].bbox.w/.;

float xmax = dets[i].bbox.x + dets[i].bbox.w/.;

float ymin = dets[i].bbox.y - dets[i].bbox.h/.;

float ymax = dets[i].bbox.y + dets[i].bbox.h/.; if (xmin < ) xmin = ;

if (ymin < ) ymin = ;

if (xmax > w) xmax = w;

if (ymax > h) ymax = h; float bx = xmin;

float by = ymin;

float bw = xmax - xmin;

float bh = ymax - ymin; for(j = ; j < classes; ++j){

if (dets[i].prob[j]) fprintf(fp, "{\"image_id\":%d, \"category_id\":%d, \"bbox\":[%f, %f, %f, %f], \"score\":%f},\n", image_id, coco_ids[j], bx, by, bw, bh, dets[i].prob[j]);

}

}

} void print_detector_detections(FILE **fps, char *id, detection *dets, int total, int classes, int w, int h)

{

int i, j;

for(i = ; i < total; ++i){

float xmin = dets[i].bbox.x - dets[i].bbox.w/. + ;

float xmax = dets[i].bbox.x + dets[i].bbox.w/. + ;

float ymin = dets[i].bbox.y - dets[i].bbox.h/. + ;

float ymax = dets[i].bbox.y + dets[i].bbox.h/. + ; if (xmin < ) xmin = ;

if (ymin < ) ymin = ;

if (xmax > w) xmax = w;

if (ymax > h) ymax = h; for(j = ; j < classes; ++j){

if (dets[i].prob[j]) fprintf(fps[j], "%s %f %f %f %f %f\n", id, dets[i].prob[j],

xmin, ymin, xmax, ymax);

}

}

} void print_imagenet_detections(FILE *fp, int id, detection *dets, int total, int classes, int w, int h)

{

int i, j;

for(i = ; i < total; ++i){

float xmin = dets[i].bbox.x - dets[i].bbox.w/.;

float xmax = dets[i].bbox.x + dets[i].bbox.w/.;

float ymin = dets[i].bbox.y - dets[i].bbox.h/.;

float ymax = dets[i].bbox.y + dets[i].bbox.h/.; if (xmin < ) xmin = ;

if (ymin < ) ymin = ;

if (xmax > w) xmax = w;

if (ymax > h) ymax = h; for(j = ; j < classes; ++j){

int class = j;

if (dets[i].prob[class]) fprintf(fp, "%d %d %f %f %f %f %f\n", id, j+, dets[i].prob[class],

xmin, ymin, xmax, ymax);

}

}

} void validate_detector_flip(char *datacfg, char *cfgfile, char *weightfile, char *outfile)

{

int j;

list *options = read_data_cfg(datacfg);

char *valid_images = option_find_str(options, "valid", "data/train.list");

char *name_list = option_find_str(options, "names", "data/names.list");

char *prefix = option_find_str(options, "results", "results");

char **names = get_labels(name_list);

char *mapf = option_find_str(options, "map", );

int *map = ;

if (mapf) map = read_map(mapf); network *net = load_network(cfgfile, weightfile, );

set_batch_network(net, );

fprintf(stderr, "Learning Rate: %g, Momentum: %g, Decay: %g\n", net->learning_rate, net->momentum, net->decay);

srand(time()); list *plist = get_paths(valid_images);

char **paths = (char **)list_to_array(plist); layer l = net->layers[net->n-];

int classes = l.classes; char buff[];

char *type = option_find_str(options, "eval", "voc");

FILE *fp = ;

FILE **fps = ;

int coco = ;

int imagenet = ;

if(==strcmp(type, "coco")){

if(!outfile) outfile = "coco_results";

snprintf(buff, , "%s/%s.json", prefix, outfile);

fp = fopen(buff, "w");

fprintf(fp, "[\n");

coco = ;

} else if(==strcmp(type, "imagenet")){

if(!outfile) outfile = "imagenet-detection";

snprintf(buff, , "%s/%s.txt", prefix, outfile);

fp = fopen(buff, "w");

imagenet = ;

classes = ;

} else {

if(!outfile) outfile = "comp4_det_test_";

fps = calloc(classes, sizeof(FILE *));

for(j = ; j < classes; ++j){

snprintf(buff, , "%s/%s%s.txt", prefix, outfile, names[j]);

fps[j] = fopen(buff, "w");

}

} int m = plist->size;

int i=;

int t; float thresh = .;

float nms = .; int nthreads = ;

image *val = calloc(nthreads, sizeof(image));

image *val_resized = calloc(nthreads, sizeof(image));

image *buf = calloc(nthreads, sizeof(image));

image *buf_resized = calloc(nthreads, sizeof(image));

pthread_t *thr = calloc(nthreads, sizeof(pthread_t)); image input = make_image(net->w, net->h, net->c*); load_args args = {};

args.w = net->w;

args.h = net->h;

//args.type = IMAGE_DATA;

args.type = LETTERBOX_DATA; for(t = ; t < nthreads; ++t){

args.path = paths[i+t];

args.im = &buf[t];

args.resized = &buf_resized[t];

thr[t] = load_data_in_thread(args);

}

double start = what_time_is_it_now();

for(i = nthreads; i < m+nthreads; i += nthreads){

fprintf(stderr, "%d\n", i);

for(t = ; t < nthreads && i+t-nthreads < m; ++t){

pthread_join(thr[t], );

val[t] = buf[t];

val_resized[t] = buf_resized[t];

}

for(t = ; t < nthreads && i+t < m; ++t){

args.path = paths[i+t];

args.im = &buf[t];

args.resized = &buf_resized[t];

thr[t] = load_data_in_thread(args);

}

for(t = ; t < nthreads && i+t-nthreads < m; ++t){

char *path = paths[i+t-nthreads];

char *id = basecfg(path);

copy_cpu(net->w*net->h*net->c, val_resized[t].data, , input.data, );

flip_image(val_resized[t]);

copy_cpu(net->w*net->h*net->c, val_resized[t].data, , input.data + net->w*net->h*net->c, ); network_predict(net, input.data);

int w = val[t].w;

int h = val[t].h;

int num = ;

detection *dets = get_network_boxes(net, w, h, thresh, ., map, , &num);

if (nms) do_nms_sort(dets, num, classes, nms);

if (coco){

print_cocos(fp, path, dets, num, classes, w, h);

} else if (imagenet){

print_imagenet_detections(fp, i+t-nthreads+, dets, num, classes, w, h);

} else {

print_detector_detections(fps, id, dets, num, classes, w, h);

}

free_detections(dets, num);

free(id);

free_image(val[t]);

free_image(val_resized[t]);

}

}

for(j = ; j < classes; ++j){

if(fps) fclose(fps[j]);

}

if(coco){

fseek(fp, -, SEEK_CUR);

fprintf(fp, "\n]\n");

fclose(fp);

}

fprintf(stderr, "Total Detection Time: %f Seconds\n", what_time_is_it_now() - start);

} void validate_detector(char *datacfg, char *cfgfile, char *weightfile, char *outfile)

{

int j;

list *options = read_data_cfg(datacfg);

char *valid_images = option_find_str(options, "valid", "/home/xieqi/project/traffic_object_detection/voc_data/test.txt");

char *name_list = option_find_str(options, "names", "data/53w.names");

char *prefix = option_find_str(options, "results", "results");

char **names = get_labels(name_list);

char *mapf = option_find_str(options, "map", );

int *map = ;

if (mapf) map = read_map(mapf); network *net = load_network(cfgfile, weightfile, );

set_batch_network(net, );

fprintf(stderr, "Learning Rate: %g, Momentum: %g, Decay: %g\n", net->learning_rate, net->momentum, net->decay);

srand(time()); list *plist = get_paths(valid_images);

char **paths = (char **)list_to_array(plist); layer l = net->layers[net->n-];

int classes = l.classes; char buff[];

char *type = option_find_str(options, "eval", "voc");

FILE *fp = ;

FILE **fps = ;

int coco = ;

int imagenet = ;

if(==strcmp(type, "coco")){

if(!outfile) outfile = "coco_results";

snprintf(buff, , "%s/%s.json", prefix, outfile);

fp = fopen(buff, "w");

fprintf(fp, "[\n");

coco = ;

} else if(==strcmp(type, "imagenet")){

if(!outfile) outfile = "imagenet-detection";

snprintf(buff, , "%s/%s.txt", prefix, outfile);

fp = fopen(buff, "w");

imagenet = ;

classes = ;

} else {

if(!outfile) outfile = "comp4_det_test_";

fps = calloc(classes, sizeof(FILE *));

for(j = ; j < classes; ++j){

snprintf(buff, , "%s/%s%s.txt", prefix, outfile, names[j]);

fps[j] = fopen(buff, "w");

}

} int m = plist->size;

int i=;

int t; float thresh = .;

float nms = .; int nthreads = ;

image *val = calloc(nthreads, sizeof(image));

image *val_resized = calloc(nthreads, sizeof(image));

image *buf = calloc(nthreads, sizeof(image));

image *buf_resized = calloc(nthreads, sizeof(image));

pthread_t *thr = calloc(nthreads, sizeof(pthread_t)); load_args args = {};

args.w = net->w;

args.h = net->h;

//args.type = IMAGE_DATA;

args.type = LETTERBOX_DATA; for(t = ; t < nthreads; ++t){

args.path = paths[i+t];

args.im = &buf[t];

args.resized = &buf_resized[t];

thr[t] = load_data_in_thread(args);

}

double start = what_time_is_it_now();

for(i = nthreads; i < m+nthreads; i += nthreads){

fprintf(stderr, "%d\n", i);

for(t = ; t < nthreads && i+t-nthreads < m; ++t){

pthread_join(thr[t], );

val[t] = buf[t];

val_resized[t] = buf_resized[t];

}

for(t = ; t < nthreads && i+t < m; ++t){

args.path = paths[i+t];

args.im = &buf[t];

args.resized = &buf_resized[t];

thr[t] = load_data_in_thread(args);

}

for(t = ; t < nthreads && i+t-nthreads < m; ++t){

char *path = paths[i+t-nthreads];

char *id = basecfg(path);

float *X = val_resized[t].data;

network_predict(net, X);

int w = val[t].w;

int h = val[t].h;

int nboxes = ;

detection *dets = get_network_boxes(net, w, h, thresh, ., map, , &nboxes);

if (nms) do_nms_sort(dets, nboxes, classes, nms);

if (coco){

print_cocos(fp, path, dets, nboxes, classes, w, h);

} else if (imagenet){

print_imagenet_detections(fp, i+t-nthreads+, dets, nboxes, classes, w, h);

} else {

print_detector_detections(fps, id, dets, nboxes, classes, w, h);

}

free_detections(dets, nboxes);

free(id);

free_image(val[t]);

free_image(val_resized[t]);

}

}

for(j = ; j < classes; ++j){

if(fps) fclose(fps[j]);

}

if(coco){

fseek(fp, -, SEEK_CUR);

fprintf(fp, "\n]\n");

fclose(fp);

}

fprintf(stderr, "Total Detection Time: %f Seconds\n", what_time_is_it_now() - start);

} void validate_detector_recall(char *datacfg, char *cfgfile, char *weightfile)

{

network *net = load_network(cfgfile, weightfile, );

set_batch_network(net, );

fprintf(stderr, "Learning Rate: %g, Momentum: %g, Decay: %g\n", net->learning_rate, net->momentum, net->decay);

srand(time()); list *options = read_data_cfg(datacfg);

char *valid_images = option_find_str(options, "valid", "/home/xieqi/project/traffic_object_detection/voc_data/test.txt");

list *plist = get_paths(valid_images);

char **paths = (char **)list_to_array(plist); //layer l = net->layers[net->n-1]; int j, k; int m = plist->size; //测试的图片总数

int i=; float thresh = .;

float iou_thresh = .;

float nms = .; int total = ; //实际有多少个bbox

int correct = ; //正确识别出了多少个bbox

int proposals = ; //测试集预测的bbox总数

float avg_iou = ; //printf("l.w*l.h*l.n = %d\n",l.w*l.h*l.n);

for(i = ; i < m; ++i){

char *path = paths[i];

image orig = load_image_color(path, , );

image sized = resize_image(orig, net->w, net->h);

char *id = basecfg(path);

network_predict(net, sized.data);

int nboxes = ;

detection *dets = get_network_boxes(net, sized.w, sized.h, thresh, ., , , &nboxes);

if (nms) do_nms_obj(dets, nboxes, , nms); char labelpath[];

find_replace(path, "images", "labels", labelpath);

find_replace(labelpath, "JPEGImages", "labels", labelpath);

find_replace(labelpath, ".jpg", ".txt", labelpath);

find_replace(labelpath, ".JPEG", ".txt", labelpath); int num_labels = ; //测试集实际的标注框数量

box_label *truth = read_boxes(labelpath, &num_labels);

for(k = ; k < nboxes; ++k){

if(dets[k].objectness > thresh){

++proposals;

}

}

for (j = ; j < num_labels; ++j) {

++total;

box t = {truth[j].x, truth[j].y, truth[j].w, truth[j].h};

//printf("truth x=%f, y=%f, w=%f,h=%f\n",truth[j].x,truth[j].y,truth[j].w,truth[j].h);

float best_iou = ;

//对每一个标注的框

for(k = ; k < nboxes; ++k){

//for(k = 0; k < l.w*l.h*l.n; ++k){

float iou = box_iou(dets[k].bbox, t);

//printf("predict=%f iou=%f x=%f, y=%f, w=%f,h=%f\n",dets[k].objectness,iou,dets[k].bbox.x,dets[k].bbox.y,dets[k].bbox.w,dets[k].bbox.h);

if(dets[k].objectness > thresh && iou > best_iou){

best_iou = iou;

}

}

//printf("best_iou=%f\n",best_iou);

avg_iou += best_iou;

if(best_iou > iou_thresh){

++correct;

}

}

fprintf(stderr, "%5d %5d %5d\tRPs/Img: %.2f\tIOU: %.2f%%\tRecall:%.2f%%\n", i, correct, total, (float)proposals/(i+), avg_iou*/total, .*correct/total);

free(id);

free_image(orig);

free_image(sized);

}

} void test_detector(char *datacfg, char *cfgfile, char *weightfile, char *filename, float thresh, float hier_thresh, char *outfile, int fullscreen)

{

list *options = read_data_cfg(datacfg); //options存储分类的标签等基本训练信息

char *name_list = option_find_str(options, "names", "data/names.list"); //抽取标签名称

char **names = get_labels(name_list); image **alphabet = load_alphabet(); //加载位于data/labels下的字符图片,用于显示矩形框名称

network *net = load_network(cfgfile, weightfile, ); //用netweork.h中自定义的network结构体存储模型文件,函数位于parser.c

set_batch_network(net, );

srand();

double start_time;

double end_time;

double img_time;

double sum_time=0.0;

char buff[];

char *input = buff;

float nms=.;

int i=;

while(){

//读取结构对应的权重文件

if(filename){

strncpy(input, filename, );

image im = load_image_color(input,,);

image sized = letterbox_image(im, net->w, net->h); //输入图片大小经过resize至输入大小

//image sized = resize_image(im, net->w, net->h);

//image sized2 = resize_max(im, net->w);

//image sized = crop_image(sized2, -((net->w - sized2.w)/2), -((net->h - sized2.h)/2), net->w, net->h);

//resize_network(net, sized.w, sized.h);

layer l = net->layers[net->n-]; float *X = sized.data; //X指向图片的data元素,即图片像素

start_time=what_time_is_it_now();

network_predict(net, X); //network_predict函数负责预测当前图片的数据X

end_time=what_time_is_it_now();

img_time= end_time - start_time; printf("%s: Predicted in %f seconds.\n", input, img_time);

int nboxes = ;

detection *dets = get_network_boxes(net, im.w, im.h, thresh, hier_thresh, , , &nboxes);

//printf("%d\n", nboxes);

//if (nms) do_nms_obj(boxes, probs, l.w*l.h*l.n, l.classes, nms);

if (nms) do_nms_sort(dets, nboxes, l.classes, nms);

draw_detections(im, dets, nboxes, thresh, names, alphabet, l.classes);

free_detections(dets, nboxes);

if(outfile)

{

save_image(im, outfile);

}

else{

//save_image(im, "predictions");

char image[];

sprintf(image,"./data/predict/%s",GetFilename(filename));

save_image(im,image);

printf("predict %s successfully!\n",GetFilename(filename));

#ifdef OPENCV

cvNamedWindow("predictions", CV_WINDOW_NORMAL);

if(fullscreen){

cvSetWindowProperty("predictions", CV_WND_PROP_FULLSCREEN, CV_WINDOW_FULLSCREEN);

}

show_image(im, "predictions");

cvWaitKey();

cvDestroyAllWindows();

#endif

}

free_image(im);

free_image(sized);

if (filename) break;

}

else {

printf("Enter Image Path: ");

fflush(stdout);

input = fgets(input, , stdin);

if(!input) return;

strtok(input, "\n"); list *plist = get_paths(input);

char **paths = (char **)list_to_array(plist);

printf("Start Testing!\n");

int m = plist->size;

if(access("/home/xieqi/darknet/data/out_img",)==-)//修改成自己的路径

{

if (mkdir("/home/xieqi/darknet/data/out_img",))//修改成自己的路径

{

printf("creat file bag failed!!!");

}

}

for(i = ; i < m; ++i){

char *path = paths[i];

image im = load_image_color(path,,);

image sized = letterbox_image(im, net->w, net->h); //输入图片大小经过resize至输入大小

//image sized = resize_image(im, net->w, net->h);

//image sized2 = resize_max(im, net->w);

//image sized = crop_image(sized2, -((net->w - sized2.w)/2), -((net->h - sized2.h)/2), net->w, net->h);

//resize_network(net, sized.w, sized.h);

layer l = net->layers[net->n-]; float *X = sized.data; //X指向图片的data元素,即图片像素

start_time = what_time_is_it_now();

network_predict(net, X); //network_predict函数负责预测当前图片的数据X

end_time = what_time_is_it_now();

img_time = end_time - start_time;

sum_time = sum_time+img_time;

printf("Try Very Hard:");

printf("%s: Predicted in %f seconds.\n", path, img_time);

int nboxes = ;

detection *dets = get_network_boxes(net, im.w, im.h, thresh, hier_thresh, , , &nboxes);

//printf("%d\n", nboxes);

//if (nms) do_nms_obj(boxes, probs, l.w*l.h*l.n, l.classes, nms);

if (nms) do_nms_sort(dets, nboxes, l.classes, nms);

draw_detections(im, dets, nboxes, thresh, names, alphabet, l.classes);

free_detections(dets, nboxes);

if(outfile){

save_image(im, outfile);

}

else{

char b[];

sprintf(b,"/home/xieqi/darknet/data/out_img/%s",GetFilename(path));//修改成自己的路径 save_image(im, b);

printf("save %s successfully!\n",GetFilename(path));

#ifdef OPENCV

cvNamedWindow("predictions", CV_WINDOW_NORMAL);

if(fullscreen){

cvSetWindowProperty("predictions", CV_WND_PROP_FULLSCREEN, CV_WINDOW_FULLSCREEN);

}

show_image(im, "predictions");

cvWaitKey();

cvDestroyAllWindows();

#endif

}

free_image(im);

free_image(sized);

if (filename) break;

}

printf("fps: %.2f totall image %d\n",(float)m/sum_time,m);

}

}

} /*

void test_detector(char *datacfg, char *cfgfile, char *weightfile, char *filename, float thresh, float hier_thresh, char *outfile, int fullscreen)

{

list *options = read_data_cfg(datacfg);

char *name_list = option_find_str(options, "names", "data/names.list");

char **names = get_labels(name_list); image **alphabet = load_alphabet();

network *net = load_network(cfgfile, weightfile, 0);

set_batch_network(net, 1);

srand(2222222);

double time;

char buff[256];

char *input = buff;

float nms=.45;

while(1){

if(filename){

strncpy(input, filename, 256);

} else {

printf("Enter Image Path: ");

fflush(stdout);

input = fgets(input, 256, stdin);

if(!input) return;

strtok(input, "\n");

}

image im = load_image_color(input,0,0);

image sized = letterbox_image(im, net->w, net->h);

//image sized = resize_image(im, net->w, net->h);

//image sized2 = resize_max(im, net->w);

//image sized = crop_image(sized2, -((net->w - sized2.w)/2), -((net->h - sized2.h)/2), net->w, net->h);

//resize_network(net, sized.w, sized.h);

layer l = net->layers[net->n-1]; float *X = sized.data;

time=what_time_is_it_now();

network_predict(net, X);

printf("%s: Predicted in %f seconds.\n", input, what_time_is_it_now()-time);

int nboxes = 0;

detection *dets = get_network_boxes(net, im.w, im.h, thresh, hier_thresh, 0, 1, &nboxes);

//printf("%d\n", nboxes);

//if (nms) do_nms_obj(boxes, probs, l.w*l.h*l.n, l.classes, nms);

if (nms) do_nms_sort(dets, nboxes, l.classes, nms);

draw_detections(im, dets, nboxes, thresh, names, alphabet, l.classes);

free_detections(dets, nboxes);

if(outfile){

save_image(im, outfile);

}

else{

save_image(im, "predictions");

#ifdef OPENCV

make_window("predictions", 512, 512, 0);

show_image(im, "predictions", 0);

#endif

} free_image(im);

free_image(sized);

if (filename) break;

}

} void censor_detector(char *datacfg, char *cfgfile, char *weightfile, int cam_index, const char *filename, int class, float thresh, int skip)

{

#ifdef OPENCV

char *base = basecfg(cfgfile);

network *net = load_network(cfgfile, weightfile, 0);

set_batch_network(net, 1); srand(2222222);

CvCapture * cap; int w = 1280;

int h = 720; if(filename){

cap = cvCaptureFromFile(filename);

}else{

cap = cvCaptureFromCAM(cam_index);

} if(w){

cvSetCaptureProperty(cap, CV_CAP_PROP_FRAME_WIDTH, w);

}

if(h){

cvSetCaptureProperty(cap, CV_CAP_PROP_FRAME_HEIGHT, h);

} if(!cap) error("Couldn't connect to webcam.\n");

cvNamedWindow(base, CV_WINDOW_NORMAL);

cvResizeWindow(base, 512, 512);

float fps = 0;

int i;

float nms = .45; while(1){

image in = get_image_from_stream(cap);

//image in_s = resize_image(in, net->w, net->h);

image in_s = letterbox_image(in, net->w, net->h);

layer l = net->layers[net->n-1]; float *X = in_s.data;

network_predict(net, X);

int nboxes = 0;

detection *dets = get_network_boxes(net, in.w, in.h, thresh, 0, 0, 0, &nboxes);

//if (nms) do_nms_obj(boxes, probs, l.w*l.h*l.n, l.classes, nms);

if (nms) do_nms_sort(dets, nboxes, l.classes, nms); for(i = 0; i < nboxes; ++i){

if(dets[i].prob[class] > thresh){

box b = dets[i].bbox;

int left = b.x-b.w/2.;

int top = b.y-b.h/2.;

censor_image(in, left, top, b.w, b.h);

}

}

show_image(in, base);

cvWaitKey(10);

free_detections(dets, nboxes); free_image(in_s);

free_image(in); float curr = 0;

fps = .9*fps + .1*curr;

for(i = 0; i < skip; ++i){

image in = get_image_from_stream(cap);

free_image(in);

}

}

#endif

} void extract_detector(char *datacfg, char *cfgfile, char *weightfile, int cam_index, const char *filename, int class, float thresh, int skip)

{

#ifdef OPENCV

char *base = basecfg(cfgfile);

network *net = load_network(cfgfile, weightfile, 0);

set_batch_network(net, 1); srand(2222222);

CvCapture * cap; int w = 1280;

int h = 720; if(filename){

cap = cvCaptureFromFile(filename);

}else{

cap = cvCaptureFromCAM(cam_index);

} if(w){

cvSetCaptureProperty(cap, CV_CAP_PROP_FRAME_WIDTH, w);

}

if(h){

cvSetCaptureProperty(cap, CV_CAP_PROP_FRAME_HEIGHT, h);

} if(!cap) error("Couldn't connect to webcam.\n");

cvNamedWindow(base, CV_WINDOW_NORMAL);

cvResizeWindow(base, 512, 512);

float fps = 0;

int i;

int count = 0;

float nms = .45; while(1){

image in = get_image_from_stream(cap);

//image in_s = resize_image(in, net->w, net->h);

image in_s = letterbox_image(in, net->w, net->h);

layer l = net->layers[net->n-1]; show_image(in, base); int nboxes = 0;

float *X = in_s.data;

network_predict(net, X);

detection *dets = get_network_boxes(net, in.w, in.h, thresh, 0, 0, 1, &nboxes);

//if (nms) do_nms_obj(boxes, probs, l.w*l.h*l.n, l.classes, nms);

if (nms) do_nms_sort(dets, nboxes, l.classes, nms); for(i = 0; i < nboxes; ++i){

if(dets[i].prob[class] > thresh){

box b = dets[i].bbox;

int size = b.w*in.w > b.h*in.h ? b.w*in.w : b.h*in.h;

int dx = b.x*in.w-size/2.;

int dy = b.y*in.h-size/2.;

image bim = crop_image(in, dx, dy, size, size);

char buff[2048];

sprintf(buff, "results/extract/%07d", count);

++count;

save_image(bim, buff);

free_image(bim);

}

}

free_detections(dets, nboxes); free_image(in_s);

free_image(in); float curr = 0;

fps = .9*fps + .1*curr;

for(i = 0; i < skip; ++i){

image in = get_image_from_stream(cap);

free_image(in);

}

}

#endif

}

*/ /*

void network_detect(network *net, image im, float thresh, float hier_thresh, float nms, detection *dets)

{

network_predict_image(net, im);

layer l = net->layers[net->n-1];

int nboxes = num_boxes(net);

fill_network_boxes(net, im.w, im.h, thresh, hier_thresh, 0, 0, dets);

if (nms) do_nms_sort(dets, nboxes, l.classes, nms);

}

*/ // ./darknet [xxx]中如果命令如果第二个xxx参数是detector,则调用这个

void run_detector(int argc, char **argv)

{

char *prefix = find_char_arg(argc, argv, "-prefix", ); //寻找是否有参数prefix, 默认参数0,argv为二维数组,存储了参数字符串

float thresh = find_float_arg(argc, argv, "-thresh", .); //寻找是否有参数thresh,thresh为输出的阈值,默认参数0.24

float hier_thresh = find_float_arg(argc, argv, "-hier", .); //寻找是否有参数hier_thresh,默认0.5

int cam_index = find_int_arg(argc, argv, "-c", ); //寻找是否有参数c,默认0

int frame_skip = find_int_arg(argc, argv, "-s", ); //寻找是否有参数s,默认0

int avg = find_int_arg(argc, argv, "-avg", ); //如果输入参数小于4个,输出正确语法如何使用

//printf 等价于 fprintf(stdout, ...),这里stderr和stdout默认输出设备都是屏幕,但是stderr一般指标准出错输入设备

if(argc < ){

fprintf(stderr, "usage: %s %s [train/test/valid] [cfg] [weights (optional)]\n", argv[], argv[]);

return;

}

char *gpu_list = find_char_arg(argc, argv, "-gpus", ); //寻找是否有参数gpus,默认0

char *outfile = find_char_arg(argc, argv, "-out", ); //检查是否指定GPU运算,默认0

int *gpus = ;

int gpu = ;

int ngpus = ;

if(gpu_list){

printf("%s\n", gpu_list);

int len = strlen(gpu_list);

ngpus = ;

int i;

for(i = ; i < len; ++i){

if (gpu_list[i] == ',') ++ngpus;

}

gpus = calloc(ngpus, sizeof(int));

for(i = ; i < ngpus; ++i){

gpus[i] = atoi(gpu_list);

gpu_list = strchr(gpu_list, ',')+;

}

} else {

gpu = gpu_index;

gpus = &gpu;

ngpus = ;

} int clear = find_arg(argc, argv, "-clear"); //检查clear参数

int fullscreen = find_arg(argc, argv, "-fullscreen");

int width = find_int_arg(argc, argv, "-w", );

int height = find_int_arg(argc, argv, "-h", );

int fps = find_int_arg(argc, argv, "-fps", );

//int class = find_int_arg(argc, argv, "-class", 0); char *datacfg = argv[]; //存data文件路径

char *cfg = argv[]; //存cfg文件路径

char *weights = (argc > ) ? argv[] : ; //存weight文件路径

char *filename = (argc > ) ? argv[]: ; //存待检测文件路径 //根据第三个参数的内容,调用不同的函数,并传入之前解析的参数

if(==strcmp(argv[], "test")) test_detector(datacfg, cfg, weights, filename, thresh, hier_thresh, outfile, fullscreen);

else if(==strcmp(argv[], "train")) train_detector(datacfg, cfg, weights, gpus, ngpus, clear);

else if(==strcmp(argv[], "valid")) validate_detector(datacfg, cfg, weights, outfile);

else if(==strcmp(argv[], "valid2")) validate_detector_flip(datacfg, cfg, weights, outfile);

else if(==strcmp(argv[], "recall")) validate_detector_recall(datacfg, cfg, weights);

else if(==strcmp(argv[], "demo")) {

list *options = read_data_cfg(datacfg);

int classes = option_find_int(options, "classes", );

char *name_list = option_find_str(options, "names", "data/names.list");

char **names = get_labels(name_list);

demo(cfg, weights, thresh, cam_index, filename, names, classes, frame_skip, prefix, avg, hier_thresh, width, height, fps, fullscreen);

}

//else if(0==strcmp(argv[2], "extract")) extract_detector(datacfg, cfg, weights, cam_index, filename, class, thresh, frame_skip);

//else if(0==strcmp(argv[2], "censor")) censor_detector(datacfg, cfg, weights, cam_index, filename, class, thresh, frame_skip);

}

"""

Note:If during training you see nan values for avg (loss) field - then training goes wrong,

but if nan is in some other lines - then training goes well.

When should I stop training:

When you see that average loss 0.xxxxxx avg no longer decreases at many iterations then you should stop training.

Once training is stopped, you should take some of last .weights-files from darknet\build\darknet\x64\backup and choose the best of them.

Overfitting - is case when you can detect objects on images from training-dataset,

but can't detect objects on any others images. You should get weights from Early Stopping Point.

IoU (intersect of union) - average instersect of union of objects and detections for a certain threshold = 0.24

How to improve object detection:

Before training:

set flag random=1 in your .cfg-file - it will increase precision by training Yolo for different resolutions.

increase network resolution in your .cfg-file (height=608, width=608 or any value multiple of 32) - it will increase precision.

recalculate anchors for your dataset for width and height from cfg-file:

darknet.exe detector calc_anchors data/obj.data -num_of_clusters 9 -width 416 -height 416 then set the same 9 anchors in each of 3 [yolo]-layers in your cfg-file

设置锚点

desirable that your training dataset include images with objects at diffrent:

scales, rotations, lightings, from different sides, on different backgrounds

样本特点尽量多样化,亮度,旋转,背景,目标位置,尺寸

desirable that your training dataset include images with non-labeled objects that you do not want to detect - negative samples without bounded box (empty .txt files)

可以添加没有标注框的图片和其空的txt文件,作为negative数据

for training with a large number of objects in each image, add the parameter max=200 or higher value in the last layer [region] in your cfg-file

to speedup training (with decreasing detection accuracy) do Fine-Tuning instead of Transfer-Learning,

set param stopbackward=1 in one of the penultimate convolutional layers before the 1-st [yolo]-layer,

for example here: https://github.com/AlexeyAB/darknet/blob/0039fd26786ab5f71d5af725fc18b3f521e7acfd/cfg/yolov3.cfg#L598

可以在第一个[yolo]层之前的倒数第二个[convolutional]层末尾添加 stopbackward=1,以此提升训练速度

After training - for detection:

Increase network-resolution by set in your .cfg-file (height=608 and width=608) or (height=832 and width=832)

or (any value multiple of 32) - this increases the precision and makes it possible to detect small objects,

you do not need to train the network again, just use .weights-file already trained for 416x416 resolution.

即使在用416*416训练完之后,也可以在cfg文件中设置较大的width和height,增加网络对图像的分辨率,从而更可能检测出图像中的小目标,而不需要重新训练

if error Out of memory occurs then in .cfg-file you should increase subdivisions=16, 32 or 64

"""

YOLO-V3实战(darknet)的更多相关文章

- YOLO v3

yolo为you only look once. 是一个全卷积神经网络(FCN),它有75层卷积层,包含跳跃式传递和降采样,没有池化层,当stide=2时用做降采样. yolo的输出是一个特征映射(f ...

- YOLO系列:YOLO v3解析

本文好多内容转载自 https://blog.csdn.net/leviopku/article/details/82660381 yolo_v3 提供替换backbone.要想性能牛叉,backbo ...

- 深度学习笔记(十三)YOLO V3 (Tensorflow)

[代码剖析] 推荐阅读! SSD 学习笔记 之前看了一遍 YOLO V3 的论文,写的挺有意思的,尴尬的是,我这鱼的记忆,看完就忘了 于是只能借助于代码,再看一遍细节了. 源码目录总览 tens ...

- YOLO v3算法介绍

图片来自https://towardsdatascience.com/yolo-v3-object-detection-with-keras-461d2cfccef6 数据前处理 输入的图片维数:(4 ...

- 一文看懂YOLO v3

论文地址:https://pjreddie.com/media/files/papers/YOLOv3.pdf论文:YOLOv3: An Incremental Improvement YOLO系列的 ...

- YOLO V3 原理

基本思想V1: 将输入图像分成S*S个格子,每隔格子负责预测中心在此格子中的物体. 每个格子预测B个bounding box及其置信度(confidence score),以及C个类别概率. bbox ...

- Pytorch从0开始实现YOLO V3指南 part5——设计输入和输出的流程

本节翻译自:https://blog.paperspace.com/how-to-implement-a-yolo-v3-object-detector-from-scratch-in-pytorch ...

- Yolo V3整体思路流程详解!

结合开源项目tensorflow-yolov3(https://link.zhihu.com/?target=https%3A//github.com/YunYang1994/tensorflow-y ...

- Pytorch从0开始实现YOLO V3指南 part1——理解YOLO的工作

本教程翻译自https://blog.paperspace.com/how-to-implement-a-yolo-object-detector-in-pytorch/ 视频展示:https://w ...

- TensorFlow + Keras 实战 YOLO v3 目标检测图文并茂教程

运行步骤 1.从 YOLO 官网下载 YOLOv3 权重 wget https://pjreddie.com/media/files/yolov3.weights 下载过程如图: 2.转换 Darkn ...

随机推荐

- Java实现 LeetCode 78 子集

78. 子集 给定一组不含重复元素的整数数组 nums,返回该数组所有可能的子集(幂集). 说明:解集不能包含重复的子集. 示例: 输入: nums = [1,2,3] 输出: [ [3], [1], ...

- Python——day3

看到右边的时钟了吗? 我想世界最公平的一件事就是每个人的每一小时.每一天.每一年都是相同的,每个人的时间都是一样的. 一直保持温热感是一件很了不起的事,加油,屏幕前的你和我. 明天,还在等你 回顾d ...

- (五)SQLMap工具检测SQL注入漏洞、获取数据库中的数据

目录结构 一.判断被测url的参数是否存在注入点 二.获取数据库系统的所有数据库名称(暴库) 三.获取Web应用当前所连接的数据库 四.获取Web应用当前所操作的DBMS用户 五.列出数据库中的所有用 ...

- Java基础(十一)

一.连接到服务器 telnet是一种用于网络编程的非常强大的测试工具,你可以在命令shell中输入telnet来启动它. 二.实现服务器 服务器循环体: 1.通过输入数据流从客户端接收一个命令. 2. ...

- iOS-Code Data的快速体验

Code Data Core Data 是iOS SDK 里的一个很强大的框架,允许程序员以面向对象的方式储存和管理数据.使用Core Data框架,程序员可以很轻松有效地通过面向对象的接口管理数据 ...

- OAuth + Security - 6 - 自定义授权模式

我们知道OAuth2的官方提供了四种令牌的获取,简化模式,授权码模式,密码模式,客户端模式.其中密码模式中仅仅支持我们通过用户名和密码的方式获取令牌,那么我们如何去实现一个我们自己的令牌获取的模式呢? ...

- javascript 面向对象学习(一)——构造函数

最近在学习设计模式,找了很多资料也没有看懂,看到怀疑智商,怀疑人生,思来想去还是把锅甩到基础不够扎实上.虽然原型继承.闭包.构造函数也都有学习过,但理解得不够透彻,影响到后续提高.这次重新开始学习,一 ...

- ubuntu qwt6.1.0安装

1.ubuntu-12.04 qt-5.1.1 2.sudo apt-get install libgl1-mesa-dev libglu1-mesa-dev 3.qmake 4.make 5.sud ...

- jmeter录制app测试脚本

1.jmeter 下载地址 https://jmeter.apache.org 2.选择下载包 3.下载完成后解压即可使用(也可以配置环境变量,但我一般不配置,可以使用) 4.打开jmeter 创建线 ...

- 兄弟打印机MFC代码示范

m_strModel.LoadString(IDS_MODEL_STRING); //IDS_MODEL_STRING,字符串控件的ID,资源视图-String Table里面设置 m_strSour ...