【tensorflow2.0】处理文本数据

一,准备数据

imdb数据集的目标是根据电影评论的文本内容预测评论的情感标签。

训练集有20000条电影评论文本,测试集有5000条电影评论文本,其中正面评论和负面评论都各占一半。

文本数据预处理较为繁琐,包括中文切词(本示例不涉及),构建词典,编码转换,序列填充,构建数据管道等等。

在tensorflow中完成文本数据预处理的常用方案有两种,第一种是利用tf.keras.preprocessing中的Tokenizer词典构建工具和tf.keras.utils.Sequence构建文本数据生成器管道。

第二种是使用tf.data.Dataset搭配.keras.layers.experimental.preprocessing.TextVectorization预处理层。

第一种方法较为复杂,其使用范例可以参考以下文章。

https://zhuanlan.zhihu.com/p/67697840

第二种方法为TensorFlow原生方式,相对也更加简单一些。

我们此处介绍第二种方法。

首先看一下train.csv中的部分内容是什么:

"0 It really boggles my mind when someone comes across a movie like this and claims it to be one of the worst slasher films out there. This is by far not one of the worst out there" still not a good movie but not the worst nonetheless. Go see something like Death Nurse or Blood Lake and then come back to me and tell me if you think the Night Brings Charlie is the worst. The film has decent camera work and editing which is way more than I can say for many more extremely obscure slasher films.<br /><br />The film doesn't deliver on the on-screen deaths there's one death where you see his pruning saw rip into a neck but all other deaths are hardly interesting. But the lack of on-screen graphic violence doesn't mean this isn't a slasher film just a bad one.<br /><br />The film was obviously intended not to be taken too seriously. The film came in at the end of the second slasher cycle so it certainly was a reflection on traditional slasher elements done in a tongue in cheek way. For example after a kill Charlie goes to the town's 'welcome' sign and marks the population down one less. This is something that can only get a laugh.<br /><br />If you're into slasher films definitely give this film a watch. It is slightly different than your usual slasher film with possibility of two killers but not by much. The comedy of the movie is pretty much telling the audience to relax and not take the movie so god darn serious. You may forget the movie you may remember it. I'll remember it because I love the name.

"0 Mary Pickford becomes the chieftain of a Scottish clan after the death of her father" and then has a romance. As fellow commenter Snow Leopard said the film is rather episodic to begin. Some of it is amusing such as Pickford whipping her clansmen to church while some of it is just there. All in all the story is weak especially the recycled contrived romance plot-line and its climax. The transfer is so dark it's difficult to appreciate the scenery but even accounting for that this doesn't appear to be director Maurice Tourneur's best work. Pickford and Tourneur collaborated once more in the somewhat more accessible 'The Poor Little Rich Girl ' typecasting Pickford as a child character.

"0 Well" at least my theater group did lol. So of course I remember watching Grease since I was a little girl while it was never my favorite musical or story it does still hold a little special place in my heart since it's still a lot of fun to watch. I heard horrible things about Grease 2 and that's why I decided to never watch it but my boyfriend said that it really wasn't all that bad and my friend agreed so I decided to give it a shot but I called them up and just laughed. First off the plot is totally stolen from the first one and it wasn't really clever not to mention they just used the same characters but with different names and actors. Tell me how did the Pink Ladies and T-Birds continue years on after the former gangs left? Not to mention the creator face motor cycle enemy gee what a striking resemblance to the guys in the first film as well as these T-Birds were just stupid and ridiculous.<br /><br />Another year at Rydell and the music and dancing hasn't stopped. But when a new student who is Sandy's cousin comes into the scene he is love struck by a pink lady Stephanie. But she must stick to the code where only Pink Ladies must stick with the T-Birds so the new student decides to train as a T-Bird to win her heart. So he dresses up as a rebel motor cycle bandit who can ride well and defeat the evil bikers from easily kicking the T-Bird's butts. But will he tell Stephanie who he really is or will she find out on her own? Well find out for yourself.<br /><br />Grease 2 is like a silly TV show of some sort that didn't work. The gang didn't click as well as the first Grease did not to mention Frenchy coming back was a bit silly and unbelievable because I thought that she graduated from Rydell but apparently she didn't. The songs were not really that catchy; I'm glad that Michelle was able to bounce back so fast but that's probably because she was the only one with talent in this silly little sequel I wouldn't really recommend this film other than if you are curious but I warned you this is just a pathetic attempt at more money from the famous musical.<br /><br />2/10

"1 I must give How She Move a near-perfect rating because the content is truly great. As a previous reviewer commented" I have no idea how this film has found itself in IMDBs bottom 100 list! That's absolutely ridiculous! Other films--particular those that share the dance theme--can't hold a candle to this one in terms of its combination of top-notch believable acting and amazing dance routines.<br /><br />From start to finish the underlying story (this is not just about winning a competition) is very easy to delve into and surprisingly realistic. None of the main characters in this are 2-dimensional by any means and by the end of the film it's very easy to feel emotionally invested in them. (And even if you're not the crying type you might get a little weepy-eyed before the credits roll.) <br /><br />I definitely recommend this film to dance-lovers and even more so to those who can appreciate a poignant and well-acted storyline. How She Move isn't perfect of course (what film is?) but it's definitely a cut above movies that use pretty faces to hide a half-baked plot and/or characters who lack substance. The actors and settings in this film make for a very realistic ride that is equally enthralling thanks to the amazing talent of the dancers!

"0 I must say" when I read the storyline on the back of the case It sounded really interesting but when I started to watch the movie seemed boring at first and even more at the end. Some scenes are way too long and the story has not been worked out properly.

"0 i am 13 and i hated this film its the worst film on earth i totally wasted my time watching it and was disappointed with it cause on the cover and on the back the film it looks pretty good" but i was wrong its bad. but when i saw delta she was totally different and a bad actress and i really didn't know how old the 2 girls was trying to be i was so confused. the film was in some parts confusing and i didn't enjoy it at all but i watched all the film just to see if it was going to get better but it didn't it was boring dull and did i say BORING.and i don't think many other people liked it as well as me.boring boring boring

"0 The acting may be okay" the more u watch this movie the more u wish you weren't this movie is so horrible that if I could get a hold of every copy I would burn them all and not look back this movie is terrible!!

"0 I've seen some bad things in my time. A half dead cow trying to get out of waist high mud; a head on collision between two cars; a thousand plates smashing on a kitchen floor; human beings living like animals.<br /><br />But never in my life have I seen anything as bad as The Cat in the Hat.<br /><br />This film is worse than 911" worse than Hitler worse than Vllad the Impaler worse than people who put kittens in microwaves.<br /><br />It is the most disturbing film of all time easy.<br /><br />I used to think it was a joke some elaborate joke and that Mike Myers was maybe a high cocaine sniffing drug addled betting junkie who lost a bet or something.<br /><br />I shudder

每一个单元格里面都是一句话。

然后是构造训练集和测试集:

import numpy as np

import pandas as pd

from matplotlib import pyplot as plt

import tensorflow as tf

from tensorflow.keras import models,layers,preprocessing,optimizers,losses,metrics

from tensorflow.keras.layers.experimental.preprocessing import TextVectorization

import re,string train_data_path = "./data/imdb/train.csv"

test_data_path = "./data/imdb/test.csv" MAX_WORDS = 10000 # 仅考虑最高频的10000个词

MAX_LEN = 200 # 每个样本保留200个词的长度

BATCH_SIZE = 20 # 构建管道

def split_line(line):

arr = tf.strings.split(line,"\t")

label = tf.expand_dims(tf.cast(tf.strings.to_number(arr[0]),tf.int32),axis = 0)

text = tf.expand_dims(arr[1],axis = 0)

return (text,label) ds_train_raw = tf.data.TextLineDataset(filenames = [train_data_path]) \

.map(split_line,num_parallel_calls = tf.data.experimental.AUTOTUNE) \

.shuffle(buffer_size = 1000).batch(BATCH_SIZE) \

.prefetch(tf.data.experimental.AUTOTUNE) ds_test_raw = tf.data.TextLineDataset(filenames = [test_data_path]) \

.map(split_line,num_parallel_calls = tf.data.experimental.AUTOTUNE) \

.batch(BATCH_SIZE) \

.prefetch(tf.data.experimental.AUTOTUNE) # 构建词典

def clean_text(text):

lowercase = tf.strings.lower(text)

stripped_html = tf.strings.regex_replace(lowercase, '<br />', ' ')

cleaned_punctuation = tf.strings.regex_replace(stripped_html,

'[%s]' % re.escape(string.punctuation),'')

return cleaned_punctuation vectorize_layer = TextVectorization(

standardize=clean_text,

split = 'whitespace',

max_tokens=MAX_WORDS-1, #有一个留给占位符

output_mode='int',

output_sequence_length=MAX_LEN) ds_text = ds_train_raw.map(lambda text,label: text)

vectorize_layer.adapt(ds_text)

print(vectorize_layer.get_vocabulary()[0:100]) # 单词编码

ds_train = ds_train_raw.map(lambda text,label:(vectorize_layer(text),label)) \

.prefetch(tf.data.experimental.AUTOTUNE)

ds_test = ds_test_raw.map(lambda text,label:(vectorize_layer(text),label)) \

.prefetch(tf.data.experimental.AUTOTUNE)

[b'the', b'and', b'a', b'of', b'to', b'is', b'in', b'it', b'i', b'this', b'that', b'was', b'as', b'for', b'with', b'movie', b'but', b'film', b'on', b'not', b'you', b'his', b'are', b'have', b'be', b'he', b'one', b'its', b'at', b'all', b'by', b'an', b'they', b'from', b'who', b'so', b'like', b'her', b'just', b'or', b'about', b'has', b'if', b'out', b'some', b'there', b'what', b'good', b'more', b'when', b'very', b'she', b'even', b'my', b'no', b'would', b'up', b'time', b'only', b'which', b'story', b'really', b'their', b'were', b'had', b'see', b'can', b'me', b'than', b'we', b'much', b'well', b'get', b'been', b'will', b'into', b'people', b'also', b'other', b'do', b'bad', b'because', b'great', b'first', b'how', b'him', b'most', b'dont', b'made', b'then', b'them', b'films', b'movies', b'way', b'make', b'could', b'too', b'any', b'after', b'characters']

二,定义模型

使用Keras接口有以下3种方式构建模型:使用Sequential按层顺序构建模型,使用函数式API构建任意结构模型,继承Model基类构建自定义模型。

此处选择使用继承Model基类构建自定义模型。

# 演示自定义模型范例,实际上应该优先使用Sequential或者函数式API tf.keras.backend.clear_session() class CnnModel(models.Model):

def __init__(self):

super(CnnModel, self).__init__() def build(self,input_shape):

self.embedding = layers.Embedding(MAX_WORDS,7,input_length=MAX_LEN)

self.conv_1 = layers.Conv1D(16, kernel_size= 5,name = "conv_1",activation = "relu")

self.pool = layers.MaxPool1D()

self.conv_2 = layers.Conv1D(128, kernel_size=2,name = "conv_2",activation = "relu")

self.flatten = layers.Flatten()

self.dense = layers.Dense(1,activation = "sigmoid")

super(CnnModel,self).build(input_shape) def call(self, x):

x = self.embedding(x)

x = self.conv_1(x)

x = self.pool(x)

x = self.conv_2(x)

x = self.pool(x)

x = self.flatten(x)

x = self.dense(x)

return(x) model = CnnModel()

model.build(input_shape =(None,MAX_LEN))

model.summary()

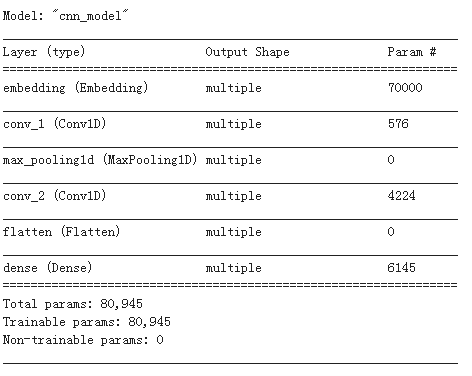

模型结构:

三,训练模型

训练模型通常有3种方法,内置fit方法,内置train_on_batch方法,以及自定义训练循环。此处我们通过自定义训练循环训练模型。

# 打印时间分割线

@tf.function

def printbar():

ts = tf.timestamp()

today_ts = ts%(24*60*60) hour = tf.cast(today_ts//3600+8,tf.int32)%tf.constant(24)

minite = tf.cast((today_ts%3600)//60,tf.int32)

second = tf.cast(tf.floor(today_ts%60),tf.int32) def timeformat(m):

if tf.strings.length(tf.strings.format("{}",m))==1:

return(tf.strings.format("0{}",m))

else:

return(tf.strings.format("{}",m)) timestring = tf.strings.join([timeformat(hour),timeformat(minite),

timeformat(second)],separator = ":")

tf.print("=========="*8,end = "")

tf.print(timestring)

optimizer = optimizers.Nadam()

loss_func = losses.BinaryCrossentropy() train_loss = metrics.Mean(name='train_loss')

train_metric = metrics.BinaryAccuracy(name='train_accuracy') valid_loss = metrics.Mean(name='valid_loss')

valid_metric = metrics.BinaryAccuracy(name='valid_accuracy') @tf.function

def train_step(model, features, labels):

with tf.GradientTape() as tape:

predictions = model(features,training = True)

loss = loss_func(labels, predictions)

gradients = tape.gradient(loss, model.trainable_variables)

optimizer.apply_gradients(zip(gradients, model.trainable_variables)) train_loss.update_state(loss)

train_metric.update_state(labels, predictions) @tf.function

def valid_step(model, features, labels):

predictions = model(features,training = False)

batch_loss = loss_func(labels, predictions)

valid_loss.update_state(batch_loss)

valid_metric.update_state(labels, predictions) def train_model(model,ds_train,ds_valid,epochs):

for epoch in tf.range(1,epochs+1): for features, labels in ds_train:

train_step(model,features,labels) for features, labels in ds_valid:

valid_step(model,features,labels) #此处logs模板需要根据metric具体情况修改

logs = 'Epoch={},Loss:{},Accuracy:{},Valid Loss:{},Valid Accuracy:{}' if epoch%1==0:

printbar()

tf.print(tf.strings.format(logs,

(epoch,train_loss.result(),train_metric.result(),valid_loss.result(),valid_metric.result())))

tf.print("") train_loss.reset_states()

valid_loss.reset_states()

train_metric.reset_states()

valid_metric.reset_states() train_model(model,ds_train,ds_test,epochs = 6)

训练结果:

================================================================================14:45:06

Epoch=1,Loss:0.474225521,Accuracy:0.7376,Valid Loss:0.336961836,Valid Accuracy:0.8526 ================================================================================14:45:12

Epoch=2,Loss:0.245222151,Accuracy:0.9035,Valid Loss:0.326947063,Valid Accuracy:0.8666 ================================================================================14:45:17

Epoch=3,Loss:0.165854618,Accuracy:0.93795,Valid Loss:0.365531504,Valid Accuracy:0.867 ================================================================================14:45:23

Epoch=4,Loss:0.104812928,Accuracy:0.96395,Valid Loss:0.448238105,Valid Accuracy:0.861 ================================================================================14:45:29

Epoch=5,Loss:0.0595887862,Accuracy:0.98125,Valid Loss:0.602612,Valid Accuracy:0.8624 ================================================================================14:45:35

Epoch=6,Loss:0.0318539739,Accuracy:0.9905,Valid Loss:0.762770712,Valid Accuracy:0.8598

四,评估模型

通过自定义训练循环训练的模型没有经过编译,无法直接使用model.evaluate(ds_valid)方法

def evaluate_model(model,ds_valid):

for features, labels in ds_valid:

valid_step(model,features,labels)

logs = 'Valid Loss:{},Valid Accuracy:{}'

tf.print(tf.strings.format(logs,(valid_loss.result(),valid_metric.result()))) valid_loss.reset_states()

train_metric.reset_states()

valid_metric.reset_states() evaluate_model(model,ds_test)

评估结果:

Valid Loss:0.762770712,Valid Accuracy:0.8598

五,使用模型

可以使用以下方法:

- model.predict(ds_test)

- model(x_test)

- model.call(x_test)

- model.predict_on_batch(x_test)

推荐优先使用model.predict(ds_test)方法,既可以对Dataset,也可以对Tensor使用。

model.predict(ds_test)for x_test,_ in ds_test.take(1):

print(model(x_test))

#以下方法等价:

#print(model.call(x_test))

#print(model.predict_on_batch(x_test))

评估结果:

tf.Tensor(

[[9.9007505e-01]

[9.9999797e-01]

[9.9836570e-01]

[2.6509229e-06]

[4.7592866e-01]

[3.7760619e-05]

[8.0391978e-08]

[1.6816575e-05]

[9.9996006e-01]

[9.9695146e-01]

[1.0000000e+00]

[9.9962234e-01]

[1.9009445e-08]

[9.7622436e-01]

[4.4549329e-06]

[2.8802201e-01]

[1.0730105e-04]

[3.8324962e-03]

[2.2874507e-03]

[9.9966860e-01]], shape=(20, 1), dtype=float32)

六,保存模型

推荐使用TensorFlow原生方式保存模型。

model.save('./data/tf_model_savedmodel', save_format="tf")

print('export saved model.')

model_loaded = tf.keras.models.load_model('./data/tf_model_savedmodel')

model_loaded.predict(ds_test)

结果:

WARNING:tensorflow:From /usr/local/lib/python3.6/dist-packages/tensorflow/python/ops/resource_variable_ops.py:1817: calling BaseResourceVariable.__init__ (from tensorflow.python.ops.resource_variable_ops) with constraint is deprecated and will be removed in a future version.

Instructions for updating:

If using Keras pass *_constraint arguments to layers.

INFO:tensorflow:Assets written to: ./data/tf_model_savedmodel/assets

export saved model.

WARNING:tensorflow:No training configuration found in save file, so the model was *not* compiled. Compile it manually.

array([[0.99007505],

[0.999998 ],

[0.9983657 ],

...,

[0.9962114 ],

[0.94716024],

[1. ]], dtype=float32)

参考:

开源电子书地址:https://lyhue1991.github.io/eat_tensorflow2_in_30_days/

GitHub 项目地址:https://github.com/lyhue1991/eat_tensorflow2_in_30_days

【tensorflow2.0】处理文本数据的更多相关文章

- 【tensorflow2.0】处理图片数据-cifar2分类

1.准备数据 cifar2数据集为cifar10数据集的子集,只包括前两种类别airplane和automobile. 训练集有airplane和automobile图片各5000张,测试集有airp ...

- 【tensorflow2.0】处理时间序列数据

国内的新冠肺炎疫情从发现至今已经持续3个多月了,这场起源于吃野味的灾难给大家的生活造成了诸多方面的影响. 有的同学是收入上的,有的同学是感情上的,有的同学是心理上的,还有的同学是体重上的. 那么国内的 ...

- Google工程师亲授 Tensorflow2.0-入门到进阶

第1章 Tensorfow简介与环境搭建 本门课程的入门章节,简要介绍了tensorflow是什么,详细介绍了Tensorflow历史版本变迁以及tensorflow的架构和强大特性.并在Tensor ...

- JAVASE02-Unit08: 文本数据IO操作 、 异常处理

Unit08: 文本数据IO操作 . 异常处理 * java.io.ObjectOutputStream * 对象输出流,作用是进行对象序列化 package day08; import java.i ...

- JAVASE02-Unit07: 基本IO操作 、 文本数据IO操作

基本IO操作 . 文本数据IO操作 java标准IO(input/output)操作 package day07; import java.io.FileOutputStream; import ja ...

- 10、NFC技术:读写NFC标签中的文本数据

代码实现过程如下: 读写NFC标签的纯文本数据.java import java.nio.charset.Charset; import java.util.Locale; import androi ...

- NFC(12)使用Android Beam技术传输文本数据及它是什么

Android Beam技术是什么 Android Beam的基本理念就是两部(只能是1对1,不可像蓝牙那样1对多)NFC设备靠近时(一般是背靠背),通过触摸一部NFC设备的屏幕,将数据推向另外一部N ...

- C#实现大数据量TXT文本数据快速高效去重

原文 C#实现大数据量TXT文本数据快速高效去重 对几千万的TXT文本数据进行去重处理,查找其中重复的数据,并移除.尝试了各种方法,下属方法是目前尝试到最快的方法.以下代码将重复和不重复数据进行分文件 ...

- 如何使用 scikit-learn 为机器学习准备文本数据

欢迎大家前往云+社区,获取更多腾讯海量技术实践干货哦~ 文本数据需要特殊处理,然后才能开始将其用于预测建模. 我们需要解析文本,以删除被称为标记化的单词.然后,这些词还需要被编码为整型或浮点型,以用作 ...

随机推荐

- Hibernage错误:Could not open Hibernate Session for transaction

今天客户发来的错误,是SSH框架做的项目,是用户在登陆时候出现的错误,但刷新之后就没问题. 提示错误:Could not open Hibernate Session for transaction. ...

- JS如何进行对象的深克隆(深拷贝)?

JS中,一般的赋值传递的都是对象/数组的引用,并没有真正的深拷贝一个对象,如何进行对象的深拷贝呢? var a = {name : 'miay'}; var b = a; b.name = 'Jone ...

- localStorage,sessionStorage的方法重写

本文是针对于localStorage,sessionStorage对于object,string,number,bollean类型的存取方法 我们知道,在布尔类型的值localStorage保存到本地 ...

- seo搜索优化技巧01-seo外链怎么发?

在seo搜索优化中,seo外链的作用并没有早期的作用大了.可是高质量的外链对关键词的排名还是很重要的.星辉信息科技对seo外链怎么发以及seo外链建设中的注意点进行阐述. SEO外链如何做 SEO高质 ...

- WEB渗透 - XSS

听说这个时间点是人类这种生物很重要的一个节点 cross-site scripting 跨站脚本漏洞 类型 存储型(持久) 反射型(非持久) DOM型 利用 先检测,看我们输入的内容是否有返回以及有无 ...

- vquery 一些应用

// JavaScript Document function myAddEvent(obj,sEv,fn){ if(obj.attachEvent){ obj.attachEvent('on'+sE ...

- [红日安全]Web安全Day12 – 会话安全实战攻防

本文由红日安全成员: ruanruan 编写,如有不当,还望斧正. 大家好,我们是红日安全-Web安全攻防小组.此项目是关于Web安全的系列文章分享,还包含一个HTB靶场供大家练习,我们给这个项目起了 ...

- Java并发编程学习前期知识下篇

Java并发编程学习前期知识下篇 通过上一篇<Java并发编程学习前期知识上篇>我们知道了在Java并发中的可见性是什么?volatile的定义以及JMM的定义.我们先来看看几个大厂真实的 ...

- 正则匹配电话号码demo

public static String doFilterTelnum(String sParam) { String result = sParam; if (sParam.length() < ...

- Vysor Pro1.9.3破解,连接 USB 数据线在电脑上远程控制 Android 手机平板/同步显示画面

Vysor PRO 破解方法 1.下载Vysor Pro, Vysor Pro下载地址 ,chrome版需要挂梯子. 下载后,能连接,但是清晰度太低,能使用的功能也很少,下面我们就开始来破解它. ...